How are Foundation Models Transforming AI Adoption?

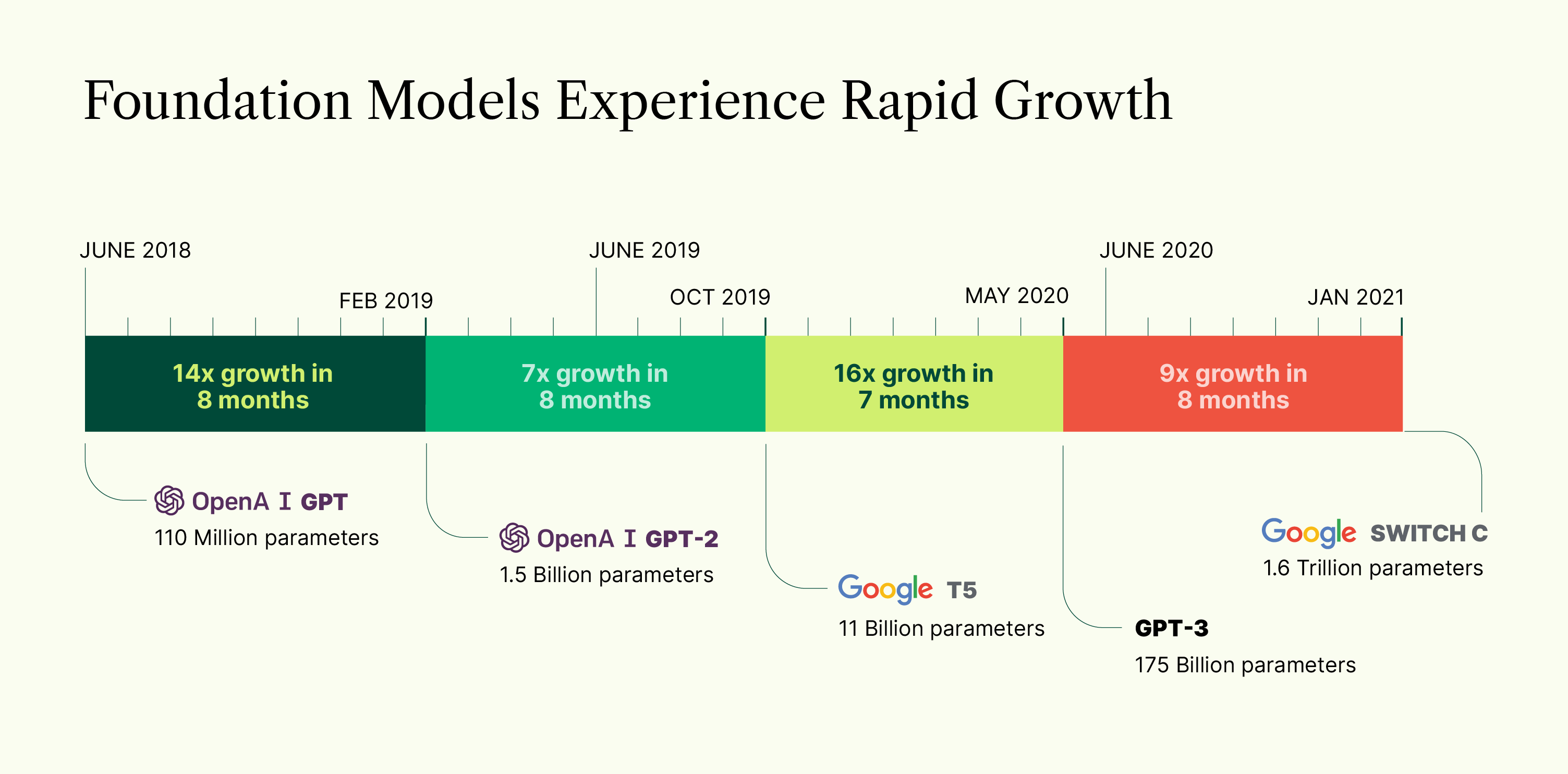

In recent years, artificial intelligence (AI) has grown quickly, in large part due to the creation of foundation models. Foundation models are large, pre-trained models that can perform a wide range of tasks, including computer vision and natural language processing.

By making it simpler and more efficient to develop new applications and solutions, these models are revolutionizing the field of artificial intelligence.

In this blog, we will examine how foundation models are reshaping the field of artificial intelligence and the possible effects they may have on society in the years to come.

What are Foundation Models?

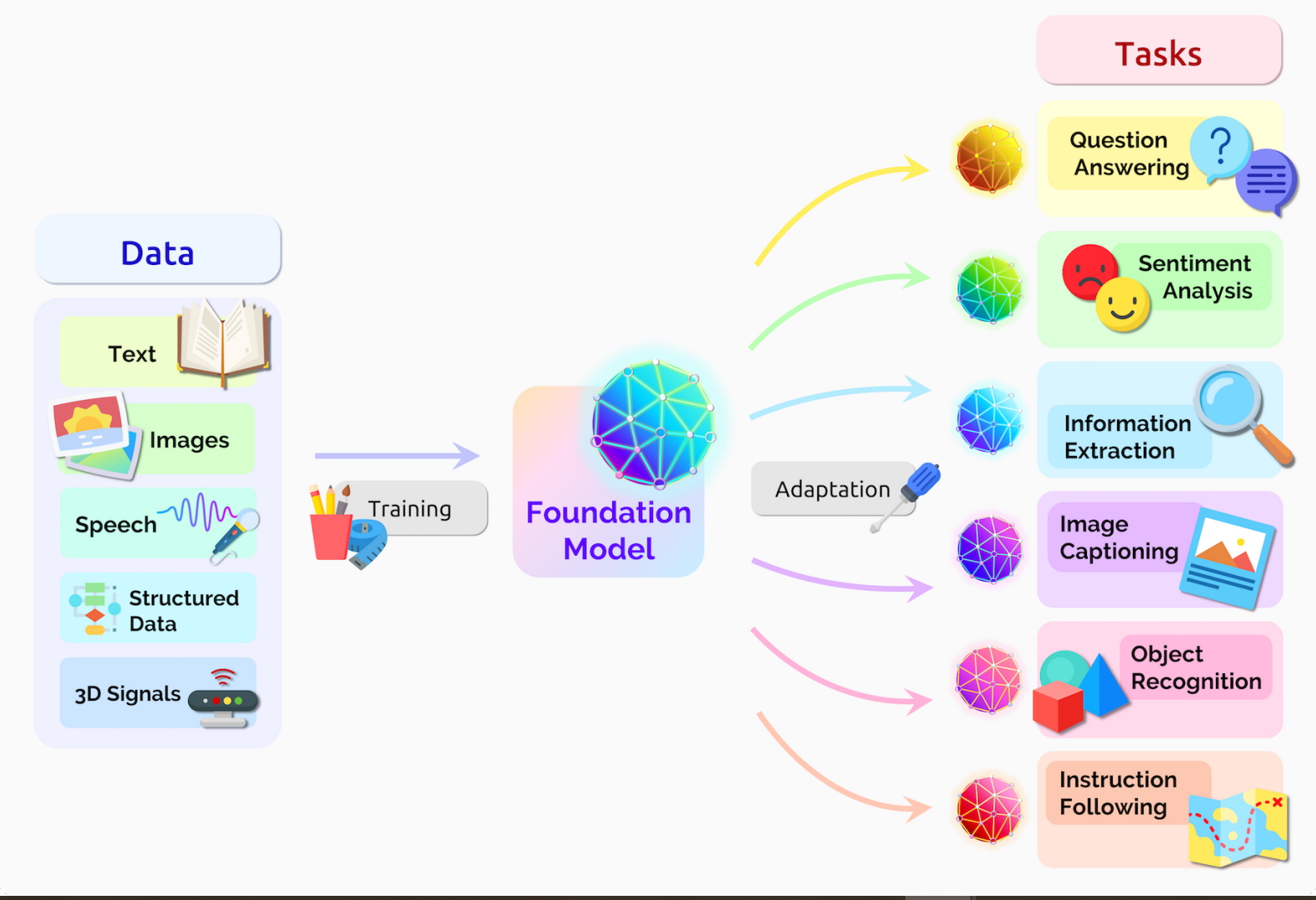

Foundation models are large machine learning models that have been pre-trained using deep learning methods on enormous volumes of data. They are designed to be general-purpose and can carry out a wide range of tasks in various areas, including natural language processing, computer vision, speech recognition, and more.

Instead of developing a separate model for each task, foundation models aim to provide a single, general-purpose model that can be adapted to specific purposes. This approach can improve accuracy and performance while saving time and money during model development.

Some examples of foundation models include OpenAI's GPT-3, Google's BERT, and Facebook's RoBERTa. These models have been pre-trained on massive amounts of data and can be fine-tuned for various tasks such as language translation, sentiment analysis, text classification, and more.

How have Foundation Models impacted the AI industry?

Foundation models have had a significant impact on the AI industry in a number of ways. Here are some of the key impacts:

1. Improved Accuracy

In various applications, including natural language processing, computer vision, speech recognition, and others, foundation models have produced state-of-the-art results. Through pre-training on vast amounts of data, these models have learned to recognize intricate patterns and relationships, which improves accuracy on downstream tasks.

2. Reduced Development Time

With pre-trained foundation models, developers can fine-tune a model for a specific task more quickly than they could build one from scratch. This has reduced development time and accelerated the release of new AI applications.

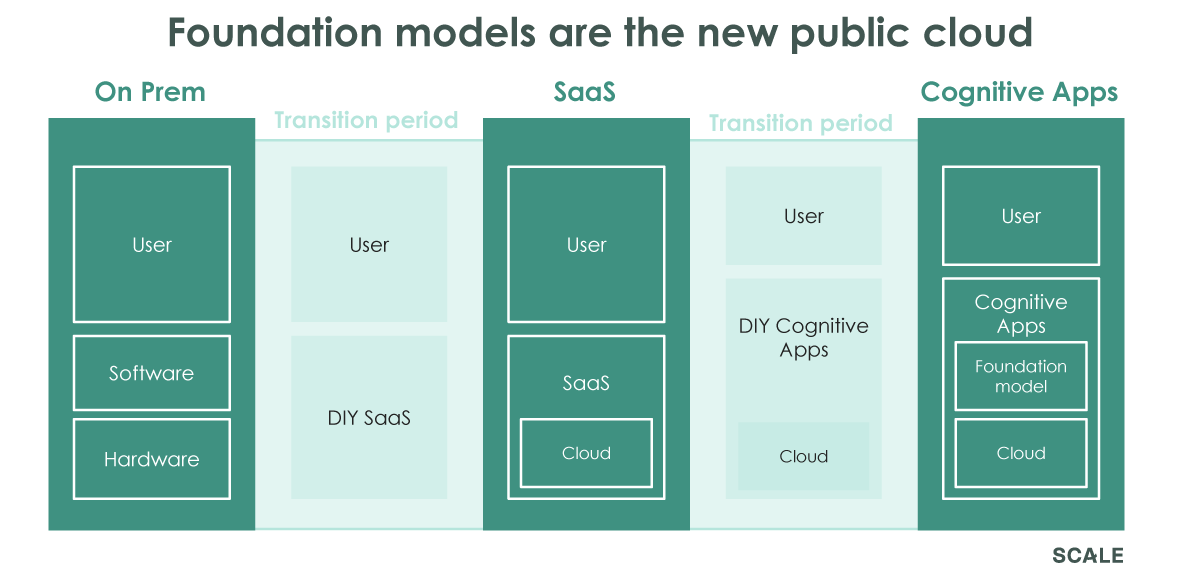

3. Democratization of AI

Foundation models have made building and deploying AI applications simpler for non-experts. Because pre-trained models are readily available, developers can create applications with relatively little machine learning or data science expertise.

4. Lower Cost

The cost of creating AI applications has also decreased because of foundation models. Startups can save money by leveraging pre-trained models instead of having to pay for the infrastructure needed to train their own models.

5. Access to Cutting-Edge Technology

Some of the largest technology firms in the world, like Google, Facebook, and OpenAI, create foundation models. Startups can use these models to access cutting-edge technology they might not otherwise have.

6. Innovation

Foundation models have enabled new and innovative applications of AI, such as language translation, chatbots, image and speech recognition, and more. By providing a solid foundation on which to build, these models have opened up new possibilities for what can be achieved with AI.

7. Business Opportunities

Foundation models have expanded the business potential of AI. Businesses can develop and deploy AI applications more quickly and for less money, allowing them to launch new products and services sooner.

Foundation models have fundamentally changed the AI industry by making the development of new AI applications and solutions faster, simpler, and more widely available. They have also enabled new AI advances and created new business opportunities.

How have Foundation Models Impacted the Model Training Process?

Foundation models have significantly impacted the model training process in AI. Here are some of the ways in which foundation models have impacted model training:

1. Pre-Training

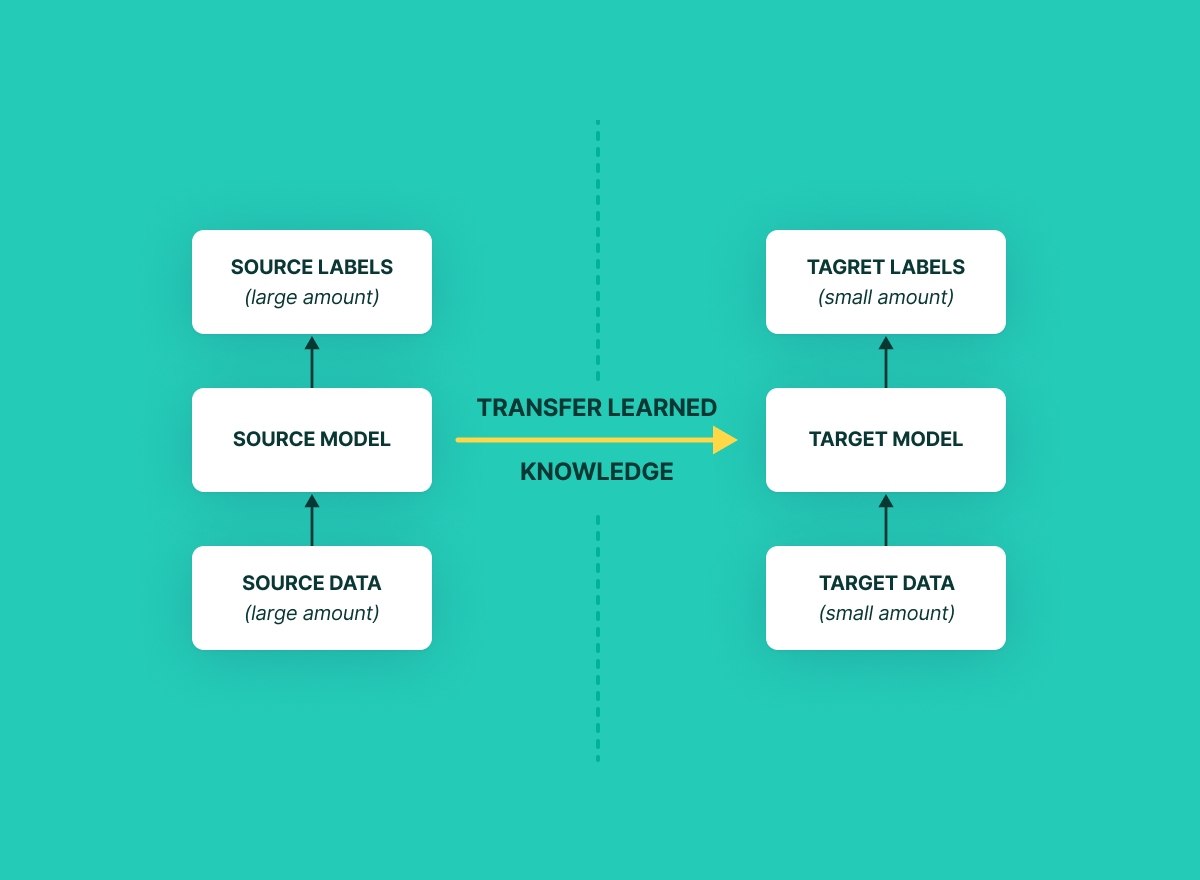

Because foundation models have been pre-trained on vast volumes of data, they have already learned the fundamental patterns and correlations in that data. Pre-training reduces the amount of task-specific training required and allows quicker, more efficient fine-tuning for specific tasks.

2. Standardization

Foundation models have helped to standardize the model training process. With widely used pre-trained models available, developers can use a common starting point for their models. This has brought more consistency to model development and evaluation, making it easier to compare and benchmark different models.

3. Increased Efficiency

With the help of pre-trained foundation models, developers can get state-of-the-art results with far less training time and processing power than training from scratch. As a result, model training has become more efficient, and new AI applications can now be developed and deployed more quickly.

How do Foundation Models Help with Training Data?

Foundation models, of which pre-trained language models are the best-known example, can help with training data in several ways:

1. Transfer Learning

Foundation models have made transfer learning a widespread approach to model training. Instead of beginning from scratch, transfer learning means fine-tuning a previously trained model for a particular task. This approach has decreased the amount of data, computing power, and time needed to build a model.
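To make the idea concrete, here is a minimal sketch in plain Python. It is a toy, not a real foundation model: the "pre-trained" weights, the small dataset, and the hyperparameters are all hypothetical values chosen for illustration. It compares fine-tuning from existing weights with training the same model from scratch under an identical training budget.

```python
# Toy illustration of transfer learning: fine-tune from "pre-trained" weights
# versus training the same model from scratch with the same number of steps.
# The task, the weights, and the hyperparameters are hypothetical.

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps=20, lr=0.05):
    """Run plain gradient descent on the MSE, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Small task-specific dataset drawn from y = 2x + 1.
task_data = [(x, 2 * x + 1) for x in (-2, -1, 0, 1, 2)]

# Assumed: weights learned earlier on a related task (stand-in for pre-training).
pretrained = (1.8, 0.9)
# Baseline: no prior knowledge at all.
scratch = (0.0, 0.0)

fine_tuned_loss = mse(*train(*pretrained, task_data), task_data)
scratch_loss = mse(*train(*scratch, task_data), task_data)

print(f"fine-tuned from pre-trained weights: {fine_tuned_loss:.6f}")
print(f"trained from scratch:                {scratch_loss:.6f}")
```

With the same data and the same number of steps, the run that starts from the pre-trained weights ends with the lower error, which is the saving in data, compute, and time that transfer learning aims for.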

2. Feature Extraction

Foundation models can be applied to text data to extract useful features, which can then be fed into downstream models for classification, regression, or other purposes. This can reduce the time and effort spent on feature engineering and may improve task performance.
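The sketch below shows the general shape of this workflow in plain Python. The `PRETRAINED_VECTORS` table is a hypothetical stand-in for a frozen foundation model (in practice the features would come from a real pre-trained encoder), and the nearest-centroid classifier is just one simple choice of downstream model.

```python
# Toy feature extraction: a frozen "encoder" turns text into a vector, and a
# small downstream classifier is trained on those vectors only.

# Assumed stand-in for a frozen foundation model: tiny "pre-trained" word vectors.
PRETRAINED_VECTORS = {
    "great": (0.9, 0.1), "love": (0.8, 0.2), "excellent": (0.95, 0.05),
    "terrible": (0.1, 0.9), "hate": (0.2, 0.8), "awful": (0.05, 0.95),
}

def embed(text):
    """Mean-pool the vectors of known words; unknown words are ignored."""
    vecs = [PRETRAINED_VECTORS[t] for t in text.lower().split()
            if t in PRETRAINED_VECTORS]
    if not vecs:
        return (0.0, 0.0)
    return tuple(sum(component) / len(vecs) for component in zip(*vecs))

def fit_centroids(examples):
    """Downstream model: average the feature vectors of each label."""
    sums, counts = {}, {}
    for text, label in examples:
        vec = embed(text)
        prev = sums.get(label, (0.0,) * len(vec))
        sums[label] = tuple(p + v for p, v in zip(prev, vec))
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(s / counts[label] for s in sums[label])
            for label in sums}

def predict(text, centroids):
    """Assign the label whose centroid is closest to the text's features."""
    vec = embed(text)
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2
                              for a, b in zip(vec, centroids[label])),
    )

labeled = [
    ("great product", "pos"), ("love it", "pos"),
    ("terrible service", "neg"), ("hate this", "neg"),
]
centroids = fit_centroids(labeled)

print(predict("excellent value", centroids))
print(predict("awful experience", centroids))
```

Note that the encoder is never updated here. Only the small downstream model is fitted, which is why this approach needs little labeled data and little compute.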

3. Data Augmentation

Foundation models can generate new training data by producing variations of existing data. For instance, a language model can produce new sentences that are similar to existing sentences in both structure and meaning, which can then be added to the training data. This can improve the generalization and robustness of the model trained on that data.
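A rough sketch of such an augmentation loop is shown below. The `toy_paraphrase` function and the `SYNONYMS` table are hypothetical stand-ins: in a real pipeline the paraphrases would come from a generative language model, and they would usually be reviewed or filtered before being added to the training set.

```python
# Toy data augmentation: add label-preserving paraphrases to a dataset.

# Assumed synonym table, used only to imitate a model's paraphrases.
SYNONYMS = {"quick": ["fast", "rapid"], "happy": ["glad", "pleased"]}

def toy_paraphrase(sentence, variant):
    """Stand-in for a generative model: swap known words for a synonym."""
    out = []
    for word in sentence.split():
        options = SYNONYMS.get(word)
        out.append(options[variant % len(options)] if options else word)
    return " ".join(out)

def augment(dataset, paraphrase, n_variants=2):
    """Return the original (text, label) pairs plus new paraphrased pairs."""
    augmented = list(dataset)
    seen = {text for text, _ in dataset}
    for text, label in dataset:
        for i in range(n_variants):
            new_text = paraphrase(text, i)
            # Skip paraphrases that duplicate an existing example.
            if new_text not in seen:
                seen.add(new_text)
                augmented.append((new_text, label))
    return augmented

dataset = [
    ("the quick reply made me happy", "positive"),
    ("the reply was late", "negative"),
]
augmented = augment(dataset, toy_paraphrase)

for text, label in augmented:
    print(label, "|", text)
```

Each generated sentence keeps the label of the sentence it was derived from, and duplicates are dropped so the augmented set only grows when the paraphrase is actually new.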

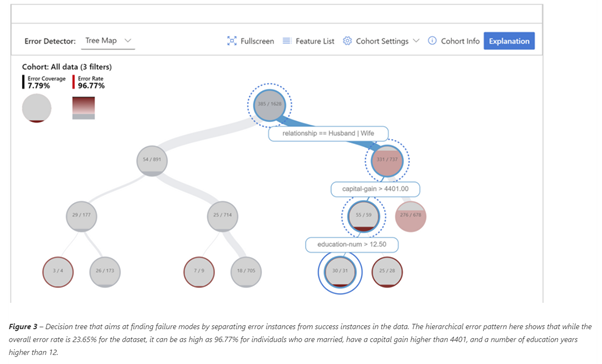

4. Error Analysis

Foundation models can be used to examine the errors that downstream models make on particular tasks. Comparing a model's outputs with its input data can reveal which types of errors occur and suggest potential fixes. As a result, both the performance of the downstream model and the quality of the training data can be improved.

Foundation models have fundamentally changed the model training process in AI, making model building faster, more efficient, and more standardized. By providing a strong base on which to build, these models have opened up new avenues for what AI can accomplish.

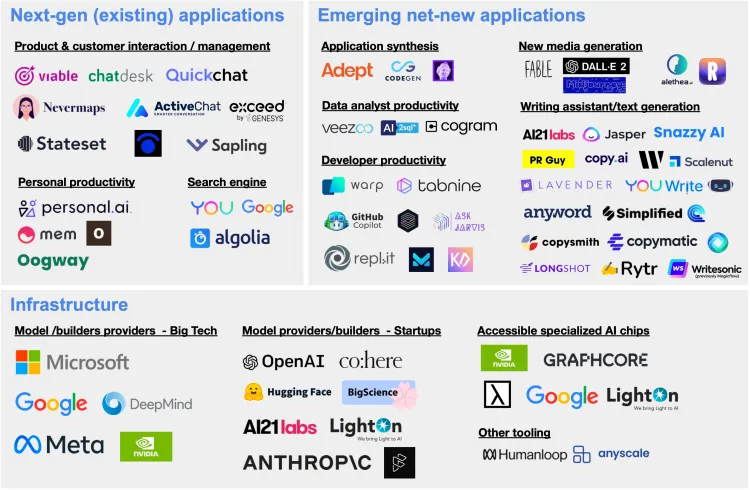

Popular Companies Using Foundation Models

Many companies are using foundation models in their AI applications. Here are some examples:

Google

Google has developed several foundation models, including BERT (Bidirectional Encoder Representations from Transformers), which has been widely used for natural language processing tasks. Google also uses foundation models in its search engine, voice recognition, and other AI applications.

Facebook

Facebook (now Meta) has created a number of foundation models, including XLM-R (XLM-RoBERTa, a cross-lingual language model) and SAM (Segment Anything Model). Facebook's language translation tools and other AI applications have made use of these models.

OpenAI

OpenAI has created a number of foundation models, such as the GPT (Generative Pre-trained Transformer) family of language models and DALL-E, a neural network that creates images from text descriptions. These models have been employed in numerous AI applications, including natural language processing and image generation.

Microsoft

Microsoft has developed its own foundation models, including Turing-NLG, and has licensed GPT-3 from OpenAI. These models have been used in Microsoft's AI applications, such as its language translation tools and virtual assistants.

Amazon

Amazon has developed its own foundation models, including the Titan family and AlexaTM 20B. T5 (Text-to-Text Transfer Transformer) and BERT, by contrast, originated at Google. Amazon's AI products, including its speech recognition software and recommendation engines, have made use of foundation models.

In short, a large number of businesses use foundation models in a variety of AI applications. These models have made it possible to build AI systems that are more accurate, efficient, and standardized, which has expanded the range of what can be done with AI.

Conclusion

In conclusion, foundation models have had a significant influence on the field of artificial intelligence. By offering a pre-trained starting point for model development, they have made the creation of AI applications quicker and more efficient. They have also improved accuracy and standardized the model creation process.

Foundation models have benefited both large and small businesses, creating new business opportunities and improving products and services. As foundation models continue to improve, the future of AI will depend on them more and more, fostering new ideas and advancements in the field.

FAQs

1. What do foundation models mean in the deployment of AI?

The term "foundation models" refers to large pre-trained models that serve as the foundation for many AI applications. Language-focused foundation models, for example, are trained on massive volumes of data so that they can understand and produce human-like text.

2. How do foundation models alter the adoption of AI?

Foundation models have transformed the way AI is adopted by giving developers a strong starting point. They provide a wide variety of capabilities, including text generation, translation, and other natural language processing tasks, which removes the need to train a model from scratch.

3. What are some examples of foundation models?

Examples of foundation models include GPT-3 and GPT-4 from OpenAI. These models have billions of parameters and can generate meaningful text, respond to queries, translate across languages, and even write code.

4. What benefits come from employing foundation models?

Foundation models make it easier to implement AI by providing pre-made solutions for numerous problems. They lessen the amount of money, effort, and computing power needed to develop AI applications. They also provide developers access to cutting-edge language processing capabilities.

5. What are the challenges associated with foundation models?

Some of the challenges include the possibility of bias in the training data, the enormous computational resources required to train and fine-tune models, and the ethical questions surrounding the use of AI.

6. How are foundation models used in real-world applications?

A variety of applications, such as virtual assistants, chatbots for customer support, language translation services, content creation, sentiment analysis, and more, leverage foundation models. They supply the core AI abilities that drive these applications.
