AutoGPT: Architecture, Performance, & Application

AutoGPT is an autonomous agent built on top of GPT language models. These models are based on the Transformer architecture and are designed to generate human-like text for natural language tasks such as language modeling, understanding, and generation.

The GPT models behind AutoGPT are pre-trained on large amounts of text data using unsupervised learning. Their training process involves two stages:

- Pre-training on a large corpus of text using a language modeling objective

- Fine-tuning the model on a specific downstream task using supervised learning.
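The language modeling objective in the first stage comes down to predicting each token from the tokens before it, and penalizing the model when it assigns low probability to the true next token (cross-entropy). The sketch below assumes the model has already produced a list of scores (logits) over the vocabulary at each position; the function names and toy inputs are illustrative and are not taken from any GPT codebase.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 from position t.

    logits_per_position[t] holds the model's scores over the vocabulary after
    reading token_ids[0..t]; the training target is token_ids[t + 1].
    """
    losses = []
    for t in range(len(token_ids) - 1):
        probs = softmax(logits_per_position[t])
        target = token_ids[t + 1]
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Toy example: a three-token vocabulary and uniform scores at every position.
uniform = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(next_token_loss(uniform, [0, 1, 2]))  # ≈ 1.0986 (ln 3)
```

With uniform scores the loss equals ln 3; it falls toward zero as the model puts more probability on the correct next token, which is what pre-training pushes it to do.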

GPT models come in various sizes, ranging from roughly a hundred million parameters to 175 billion parameters.

GPT-3 has 175 billion parameters, while the parameter count of GPT-4 has not been publicly disclosed. The increase in model size has led to significant improvements in performance on various natural language tasks.


How Does AutoGPT Work?

AutoGPT is a newly introduced natural language processing tool that requires three inputs: AI Name, AI Role, and up to 5 goals.

In the example below, its role is to conduct market research on tech products.

For instance, researching headphones involves running web searches and producing a list of the top five headphones with their pros, cons, and prices.

AutoGPT uses GPT-4 or GPT-3.5 as its brain to make decisions, and it can draw on its history of previous steps to improve its results.

It can also retain context and make informed decisions by integrating with vector databases, a memory storage solution.

Additionally, it has internet access, which enables it to fetch the information it needs, and it can read, write, and summarize files.

You must provide three inputs to AutoGPT.

- AI Title / Name

- AI Function / Role

- Up to 5 objectives

AI Name: ResearchGPT

AI Role: An AI designed to conduct market research on tech products.

Next, we define four goals we aim to achieve:

- Goal 1: Conduct market research on various headphones on the market today.

- Goal 2: Obtain the best five headphones and outline their advantages and disadvantages.

- Goal 3: Include each one's pricing and save the analysis.

- Goal 4: When you're finished, stop.
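The three inputs above can be modeled as a small configuration object. This is a hypothetical sketch: the class, its field names, and the prompt format are illustrative assumptions rather than AutoGPT's actual code; it only enforces what the text states (a name and role are required, and at most five goals are allowed).

```python
from dataclasses import dataclass, field

MAX_GOALS = 5  # AutoGPT accepts up to five goals

@dataclass
class AgentConfig:
    """Hypothetical container for the three inputs AutoGPT asks for."""
    ai_name: str
    ai_role: str
    ai_goals: list[str] = field(default_factory=list)

    def __post_init__(self):
        # Name and role are mandatory inputs.
        if not self.ai_name or not self.ai_role:
            raise ValueError("AI name and AI role are required")
        # Enforce the five-goal limit.
        if len(self.ai_goals) > MAX_GOALS:
            raise ValueError(f"at most {MAX_GOALS} goals are allowed")

    def to_prompt(self) -> str:
        """Render the inputs as an illustrative system prompt (format is assumed)."""
        lines = [f"You are {self.ai_name}, {self.ai_role}", "GOALS:"]
        lines += [f"{i}. {goal}" for i, goal in enumerate(self.ai_goals, start=1)]
        return "\n".join(lines)

config = AgentConfig(
    ai_name="ResearchGPT",
    ai_role="an AI designed to conduct market research on tech products",
    ai_goals=[
        "Conduct market research on various headphones on the market today.",
        "Obtain the best five headphones and outline their advantages and disadvantages.",
        "Include each one's pricing and save the analysis.",
        "When you're finished, stop.",
    ],
)
print(config.to_prompt())
```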

AutoGPT will now do everything necessary to achieve all four goals: it researches headphones online and lists the best five headphones along with their pros, cons, and prices.

AutoGPT has four main components:

Brain

Auto-GPT's brain is the GPT-4 language model, which it uses to make decisions. If you don't have access to GPT-4, it can also run on GPT-3.5.

Long-term and short-term memory management

Much as people learn from their mistakes, Auto-GPT can evaluate its work, draw on its previous steps, and use its history to produce more accurate results.

Combining Auto-GPT with vector databases, a memory storage system, enables it to maintain context and make better-informed decisions.
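The idea behind vector-database memory is to store each past observation as a vector and, when a new question comes up, retrieve the stored items whose vectors are most similar. The sketch below is a toy stand-in under stated assumptions: `embed` is a hypothetical bag-of-words function replacing a real embedding model, and `VectorMemory` is an in-process list rather than an actual vector database. Ranking by cosine similarity is the part that carries over.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Hypothetical toy embedding: word counts (a real system would call an embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

class VectorMemory:
    """Stores past observations and returns the ones most relevant to a query."""

    def __init__(self):
        self._items = []  # list of (original text, vector) pairs

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = VectorMemory()
memory.add("headphone A: strong noise cancelling")
memory.add("headphone B: long battery life")
memory.add("the weather today is sunny")
print(memory.search("headphone with long battery life", k=1))
```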

Internet access

Unlike ChatGPT, Auto-GPT can use the internet to conduct online searches and get information for you.

File storage and summarization

It can read and write files, which means it can access and extract data from files and then summarize them.
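Taken together, the four components form a loop: the brain picks a command, the command runs (a web search or a file write), and the result is stored in memory for the next decision. The sketch below is a simplified, hypothetical illustration of that loop, not AutoGPT's implementation: `stub_brain` replaces GPT-4/GPT-3.5 with fixed rules, the search is faked, files live in a dictionary, and the command names are assumptions chosen for readability.

```python
from typing import Callable

def stub_brain(goal: str, history: list[str]) -> dict:
    """Hypothetical stand-in for the GPT model: chooses the next command from the history."""
    if not history:
        return {"command": "web_search", "arg": goal}
    if len(history) == 1:
        return {"command": "write_file", "arg": "summary of " + history[-1]}
    return {"command": "finish", "arg": ""}

def run_agent(goal: str, brain: Callable[[str, list[str]], dict], max_steps: int = 10):
    history: list[str] = []      # short-term memory: results of earlier steps
    files: dict[str, str] = {}   # stand-in for the agent's workspace on disk

    def web_search(query: str) -> str:
        # A real agent would call a search API here; this returns a placeholder.
        return f"search results for {query!r}"

    def write_file(content: str) -> str:
        files["analysis.txt"] = content
        return "wrote analysis.txt"

    commands = {"web_search": web_search, "write_file": write_file}

    for _ in range(max_steps):   # cap the number of steps so the loop always ends
        decision = brain(goal, history)
        if decision["command"] == "finish":
            break
        result = commands[decision["command"]](decision["arg"])
        history.append(result)   # feed the result back into the next decision
    return history, files

history, files = run_agent("top headphones", stub_brain)
print(history)
print(files)
```

In a fuller version, the `history` list would be backed by a vector memory such as the one sketched earlier, so that only the most relevant past results are sent back to the model.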

Performance and Applications

AutoGPT's performance stems largely from the size of the underlying GPT models and the massive amount of text data they were trained on.

Those models have been benchmarked against other state-of-the-art models on natural language tasks and show great potential for various real-world applications.

Below are some of the domains where AutoGPT can be applied:

Market Research

One of the primary applications of AutoGPT is market research.

It can research different products and services and gather data on customer needs and preferences.

For example, if a company wants to launch a new product, it can use AutoGPT to conduct market research and gather data on customer needs and preferences. AutoGPT can also evaluate competitors' products and identify gaps in the market.

Customer Service

AutoGPT can be used in customer service to answer customers' queries, provide troubleshooting assistance, and resolve issues.

By integrating with customer service platforms, AutoGPT can help handle a large volume of customer queries quickly.

This can significantly reduce the workload of customer service agents and improve the overall customer experience.

Content Creation

AutoGPT can generate high-quality content for blogs, social media, and other marketing channels.

By analyzing the content preferences of target audiences, AutoGPT can generate engaging and relevant content that resonates with them.

This can help businesses and organizations build a strong online presence and attract customers.

Personalization

AutoGPT can personalize content and marketing messages based on customer preferences and behavior.

AutoGPT can generate personalized recommendations and offers tailored to each customer's needs and interests by analyzing customer data.

This can help businesses build stronger customer relationships and increase customer loyalty.

Fraud Detection

AutoGPT can help detect fraudulent activities such as credit card fraud, identity theft, and money laundering.

By analyzing large volumes of data, AutoGPT can identify patterns and anomalies that indicate fraudulent activities.

This can help businesses and organizations prevent financial losses and protect their customers' personal and financial information.

Architecture Details

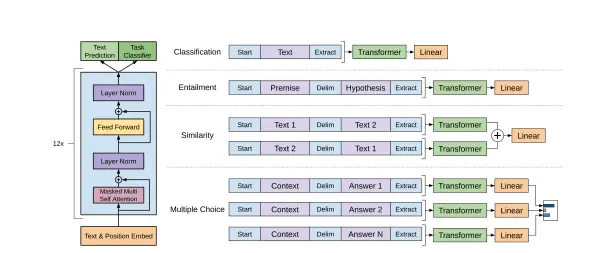

The architecture of AutoGPT is based on the GPT family of language models, which are built using the transformer architecture.

The transformer architecture was introduced by Vaswani et al. in 2017 and has proven highly effective in a wide range of NLP tasks.

The GPT models that AutoGPT relies on use a specific variant of the transformer architecture called the decoder-only transformer.

The decoder-only transformer consists of several layers of self-attention and feedforward neural networks. Each token can attend only to itself and the tokens before it, which is what lets the model generate text one token at a time.

Figure: Architecture layout for GPT-4

The input to the model is a sequence of tokens embedded into a high-dimensional vector space.

The model then processes the sequence of embeddings through a series of self-attention layers, which allow the model to capture the relationships between the tokens in the sequence.

For each token, the self-attention mechanism computes a weighted sum of the embeddings in the sequence, where each weight reflects how relevant that embedding is to the current token.
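The weighted sum described above can be sketched as causal scaled dot-product attention. To keep the example short, it assumes the token vectors serve directly as queries, keys, and values; real GPT models first apply learned projection matrices and use multiple attention heads, which are omitted here.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(x):
    """Single-head causal self-attention without learned projections.

    x is a list of n token vectors of dimension d. Output i is a weighted sum of
    x[0..i]; later tokens are masked out, as in a decoder-only transformer.
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for i, query in enumerate(x):
        # Scaled dot-product scores against the current and earlier tokens only.
        scores = [sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted sum of the visible token vectors, one dimension at a time.
        out = [sum(w * x[j][c] for j, w in enumerate(weights)) for c in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

outs, weights = causal_self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(weights)
```

Because of the causal mask, the first token can only attend to itself, so its output equals its input; each later token spreads its attention over a growing prefix.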

After the self-attention layers, the model passes the sequence of embeddings through a feedforward neural network, which applies non-linear transformations to each embedding independently.

The output of the feedforward network is then added back to the embedding that entered it, forming a residual connection (a similar connection wraps the self-attention layer). This helps to mitigate the vanishing gradient problem and allows the model to learn deeper representations.
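A minimal sketch of the feedforward sublayer and its residual connection follows. It applies the same two-layer network to each token independently and adds the input back. It assumes a ReLU activation for simplicity (GPT models use GELU) and omits layer normalization; weight shapes are `w1[d][h]` and `w2[h][d]` for model width `d` and hidden width `h`.

```python
def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN for one token vector: Linear -> ReLU -> Linear."""
    hidden = [max(0.0, sum(xi * w1[i][j] for i, xi in enumerate(x)) + b1[j]) for j in range(len(b1))]
    return [sum(h * w2[j][k] for j, h in enumerate(hidden)) + b2[k] for k in range(len(b2))]

def ffn_sublayer_with_residual(tokens, w1, b1, w2, b2):
    """Apply the FFN to each token independently, then add the input back (residual)."""
    out = []
    for x in tokens:
        f = feed_forward(x, w1, b1, w2, b2)
        out.append([xi + fi for xi, fi in zip(x, f)])
    return out

# With all-zero weights the FFN contributes nothing, and the residual path
# passes the input through unchanged.
zero_out = ffn_sublayer_with_residual(
    [[1.0, -2.0]],
    w1=[[0.0] * 3 for _ in range(2)], b1=[0.0] * 3,
    w2=[[0.0] * 2 for _ in range(3)], b2=[0.0] * 2,
)
print(zero_out)  # [[1.0, -2.0]]
```

The zero-weight case shows why residual connections help training: even when a sublayer contributes little, the signal (and its gradient) still flows straight through the identity path.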

AutoGPT also includes several additional features that improve its performance on natural language generation tasks.

One of these features is positional encoding, which provides the model with information about the position of each token in the sequence. This helps the model to learn the order of the tokens in the sequence, which is important for tasks such as language translation.
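One common form of positional encoding is the fixed sinusoidal scheme from Vaswani et al., sketched below: even dimensions use a sine and odd dimensions a cosine, at wavelengths that grow with the dimension index. Note that GPT models typically learn their positional embeddings during training instead, so this illustrates the general idea rather than how any specific GPT model encodes position.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(pos / 10000^(2i/d_model))."""
    pe = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Dimensions 2i and 2i+1 share the same frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = sinusoidal_positional_encoding(num_positions=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
```

Each row is added to the corresponding token embedding before the first layer, so tokens with identical content but different positions enter the model with different vectors.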

Another key feature of AutoGPT is its use of a large-scale language model pre-training approach. The model is pre-trained on a massive corpus of text data using a self-supervised learning approach.

This allows the model to learn the underlying patterns and structures of natural language, which can then be fine-tuned for specific NLP tasks.

Conclusion

The above blog introduces AutoGPT, an autonomous agent built on GPT language models, which are based on the transformer architecture and designed for natural language tasks such as language modeling, understanding, and generation.

These models are pre-trained on large amounts of text data using unsupervised learning and fine-tuned on a specific downstream task using supervised learning. GPT models come in various sizes, up to 175 billion parameters for GPT-3.

The blog further discusses the performance of these models on natural language tasks, where they have been benchmarked against state-of-the-art models. The architecture of AutoGPT is based on the GPT family of language models, which are built using the transformer architecture.

The article also discusses potential applications of AutoGPT, such as market research, customer service, content creation, personalization, and fraud detection.
