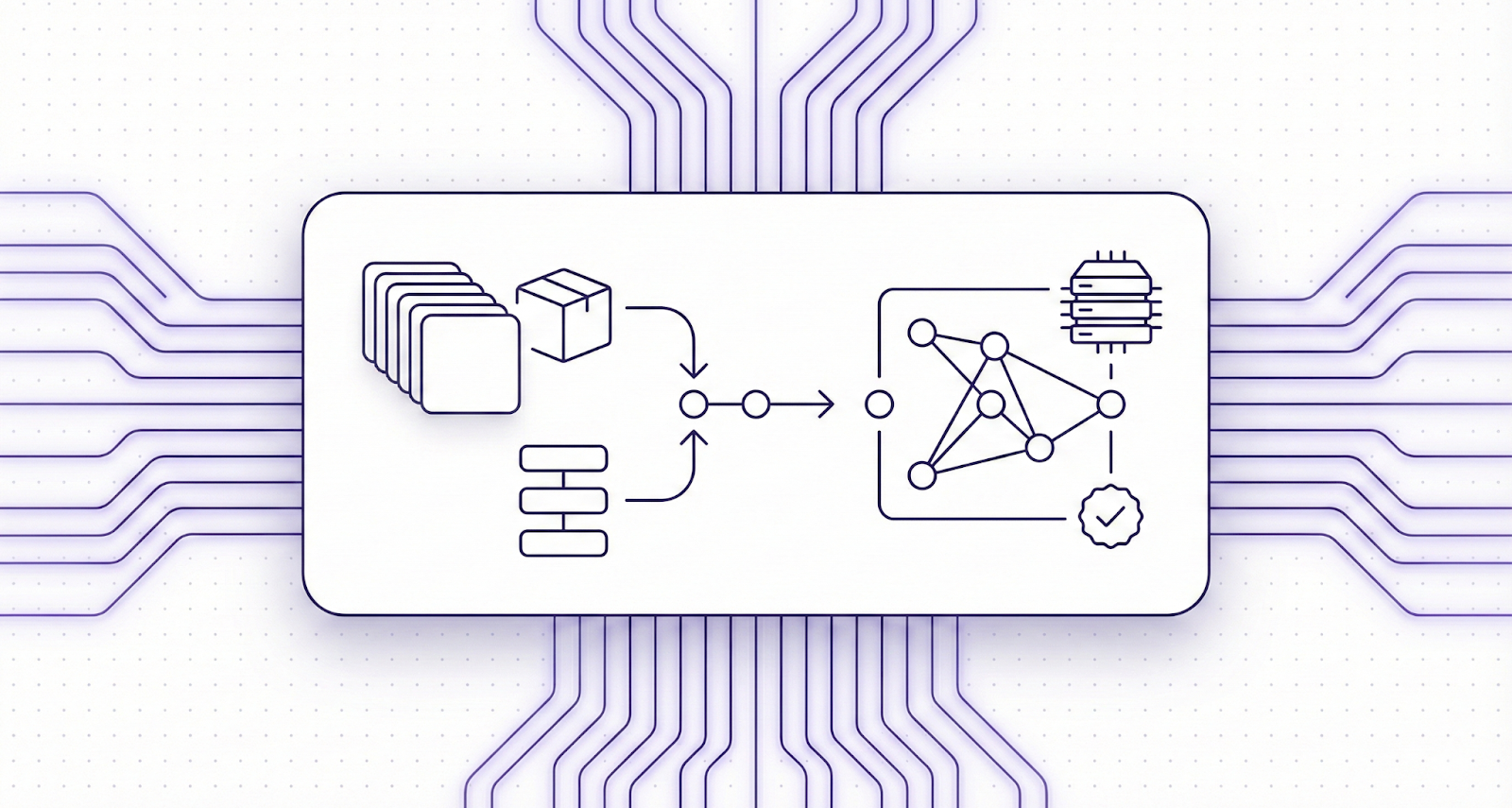

Collect, annotate, and ship training-ready egocentric and multimodal robotics datasets with verified quality.


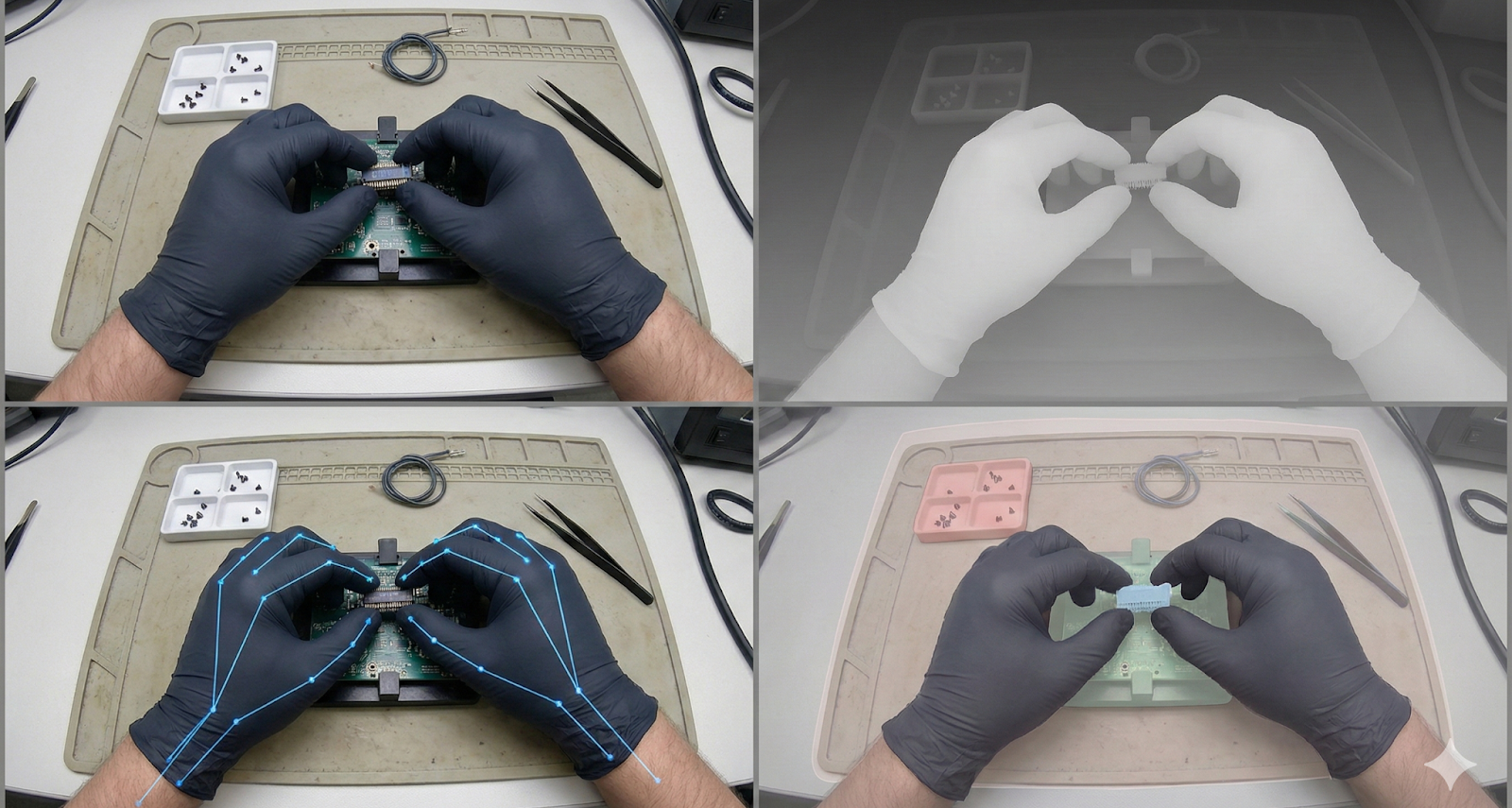

First-person (head-mounted or wearable POV) video capture of humans performing real tasks, recorded to support:

First-person views let embodied AI systems and robots learn directly from human behavior, narrowing the gap between simulation and real-world perception.

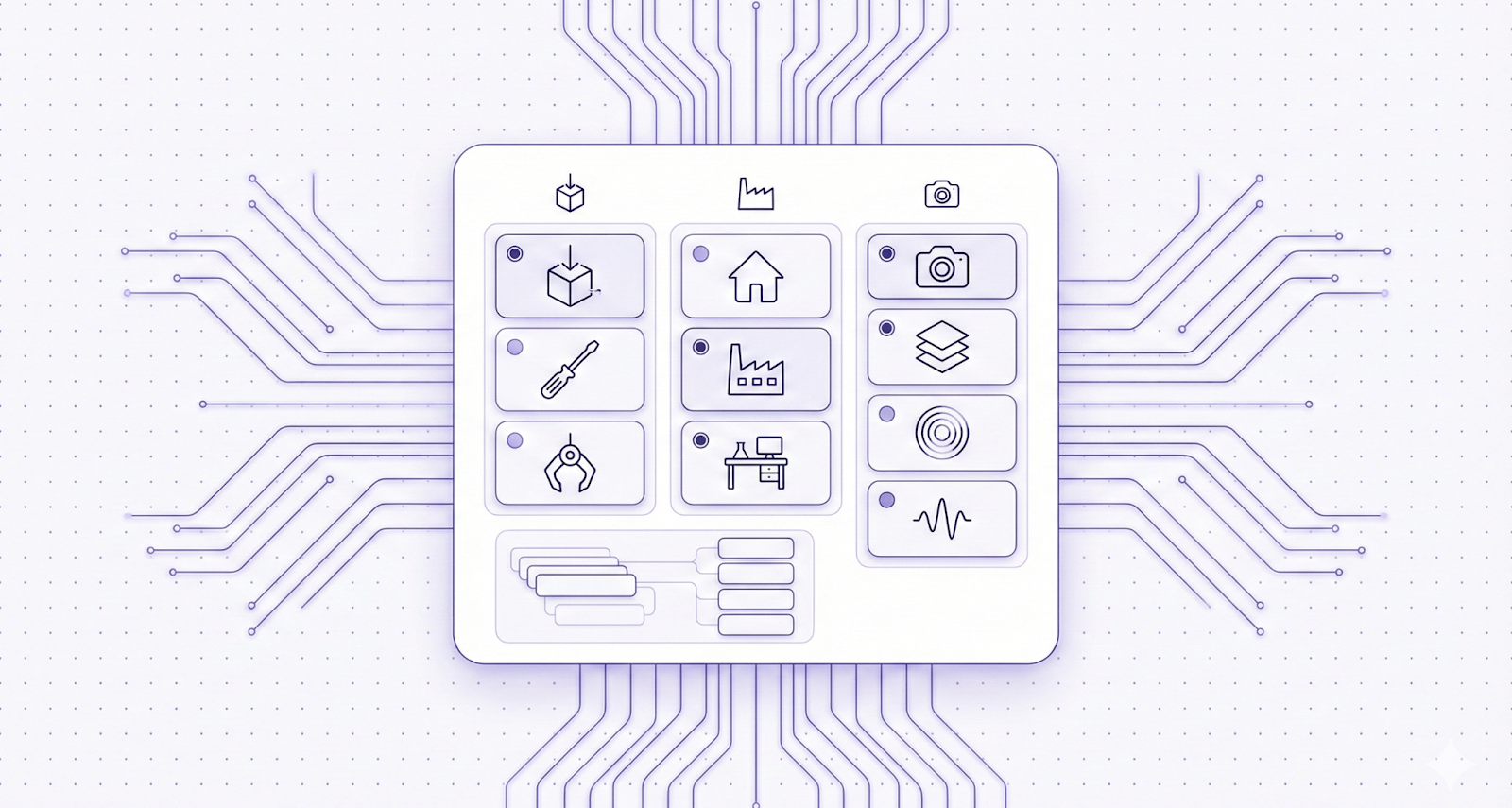

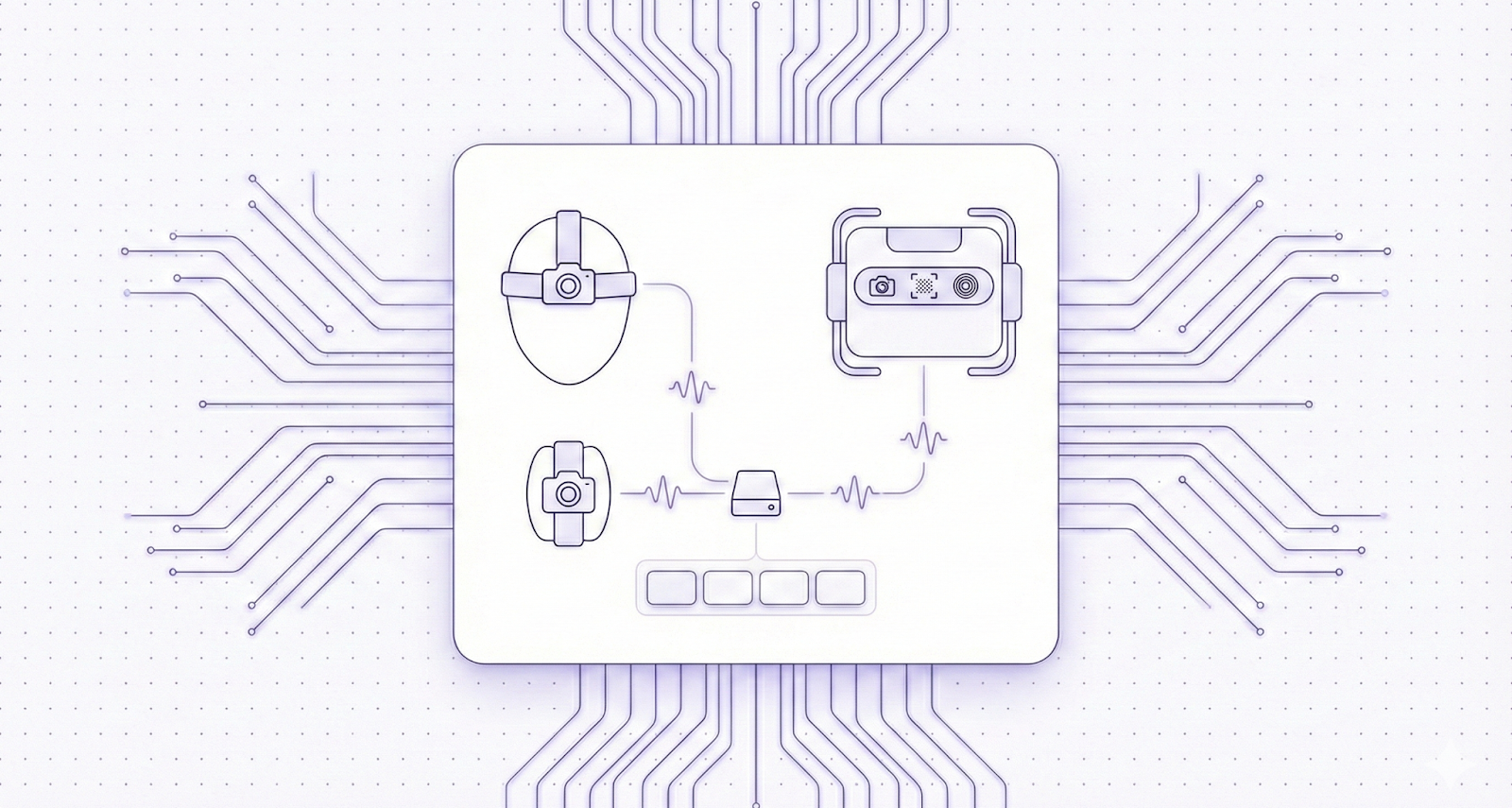

We support rich multimodal sensor capture, including:

This multimodal data helps your models learn what to do, how to do it, and when to do it in real settings.

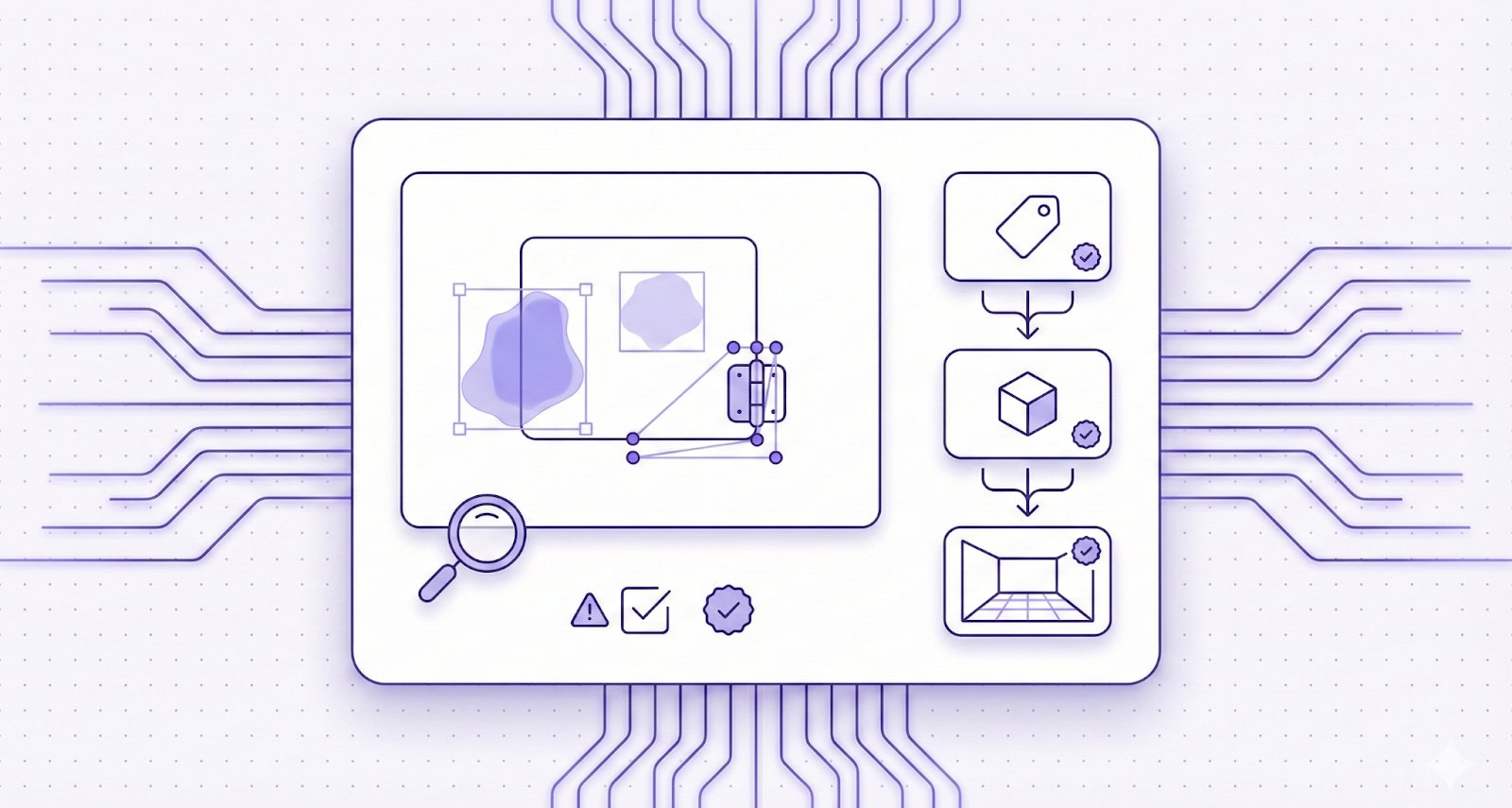

Structured annotations tailored for robotics and embodied AI:

Every dataset can be delivered in formats ready for training robotics models (e.g., JSON, COCO, custom schemas).
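As an illustration of what a COCO-style delivery could look like, here is a minimal sketch in Python. The top-level keys (`images`, `annotations`, `categories`) and the `[x, y, width, height]` bounding-box layout follow the public COCO object-detection convention. The helper name `make_coco_record`, the file name, and the labels are hypothetical and do not describe an actual export schema.

```python
import json


def make_coco_record(frame_id, file_name, width, height, boxes, categories):
    """Build a COCO-style dict for one egocentric video frame.

    boxes: list of (category_name, x, y, w, h) tuples in pixels.
    categories: list of category names; ids are assigned starting at 1.
    """
    name_to_id = {name: i + 1 for i, name in enumerate(categories)}
    annotations = []
    for ann_id, (name, x, y, w, h) in enumerate(boxes, start=1):
        annotations.append({
            "id": ann_id,
            "image_id": frame_id,
            "category_id": name_to_id[name],
            "bbox": [x, y, w, h],  # COCO boxes are [x, y, width, height]
            "area": w * h,
            "iscrowd": 0,
        })
    return {
        "images": [{"id": frame_id, "file_name": file_name,
                    "width": width, "height": height}],
        "annotations": annotations,
        "categories": [{"id": i, "name": n} for n, i in name_to_id.items()],
    }


# Hypothetical frame with two labeled objects.
record = make_coco_record(
    frame_id=1,
    file_name="frame_000001.jpg",
    width=1920,
    height=1080,
    boxes=[("cup", 100, 200, 50, 80), ("hand", 300, 400, 120, 90)],
    categories=["hand", "cup"],
)
print(json.dumps(record, indent=2))
```

A custom schema would typically extend records like this with task-specific fields, such as action labels or sensor references.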

We handle large-scale data with expert annotation workflows, combining automation with domain experts to ensure label precision and consistency.

✔ Ready-to-Use for Vision & Learning Workflows

Data is exported in ML-ready formats compatible with robotics learning frameworks and training pipelines.

We tailor recordings, sensors, environments, and annotation schemas to your robotics goals, from household robots to industrial automation.

Your data is securely processed with enterprise-grade privacy and cloud integration options.

Tell us which tasks, environments, and sensors you need data for.

Wearable or robot-mounted gear for egocentric capture; optionally synchronized multi-sensor streams.
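One common way to synchronize streams recorded at different rates is nearest-timestamp matching. The sketch below illustrates the idea in Python, assuming both streams carry timestamps in seconds on a shared clock and are sorted in ascending order. The function name `align_nearest`, the `max_gap` tolerance, and the sample values are illustrative assumptions, not a description of a specific capture rig.

```python
from bisect import bisect_left


def align_nearest(frame_ts, sensor_ts, max_gap):
    """Match each video frame to the nearest sensor sample.

    frame_ts, sensor_ts: ascending timestamps in seconds (shared clock assumed).
    max_gap: largest allowed time difference; wider gaps yield None.
    Returns one sensor index (or None) per frame.
    """
    matches = []
    for t in frame_ts:
        i = bisect_left(sensor_ts, t)
        # Only the samples just before and just after t can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(sensor_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(sensor_ts[j] - t))
        matches.append(best if abs(sensor_ts[best] - t) <= max_gap else None)
    return matches


# Hypothetical ~30 fps camera frames and 100 Hz IMU samples.
frames = [0.000, 0.033, 0.067, 0.500]
imu = [0.000, 0.010, 0.020, 0.030, 0.040, 0.050, 0.060, 0.070]
pairs = align_nearest(frames, imu, max_gap=0.02)
print(pairs)
```

Frames with no sensor sample inside the tolerance are returned as `None`, so they can be dropped or flagged instead of being silently paired with stale data.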

Our expert team labels actions, objects, and environmental context with hierarchical accuracy checks.

Receive annotated datasets formatted for direct ingestion into your AI training pipelines.

Train robots to perform human tasks with human-style intuition and situational awareness.

Link visual perception, instruction semantics, and task execution with structured human demonstrations.

Improve adaptability of assistive robots by learning from natural human behavior.