Top 10 New computer vision datasets published in CVPR 2022-Part 2

1. Multi-Dimensional, Nuanced and Subjective – Measuring the Perception of Facial Expressions

Author: De'Aira Bryant, Siqi Deng, Nashlie Sephus, Wei Xia, Pietro Perona

Humans are able to distinguish several expressions on a face, each with a different intensity. We suggest an approach for gathering and modelling human annotators' multidimensional modulated expression annotations. Our data show that different observers may perceive some phrases rather differently, so our model is built to include both ambiguity and intensity. Six primary expression dimensions are enough, according to empirical research on the number of dimensions required to accurately capture the sense of facial expression. For 1,000 photos selected from the well-known ExpW in-the-wild collection, they have used the approach to get multidimensional modulated expression annotations. They have used these annotations to test four publicly available algorithms for automatic face expression prediction as proof of concept for our enhanced measuring method.

The dataset:

A fresh set of multi-dimensional modulated annotations are offered for 1000 photos of faces in the MDNS (multi-dimensional, nuanced, subjective) annotation dataset.

You can download this dataset from here

2. Point Cloud Pre-Training With Natural 3D Structures

Author: Ryosuke Yamada, Hirokatsu Kataoka, Naoya Chiba, Yukiyasu Domae, Tetsuya OGATA

The creation of 3D point cloud datasets is a labour-intensive process. Consequently, it is challenging to create a dataset of large-scale 3D point clouds. They have suggested a newly created point cloud fractal database (PC-FractalDB), a distinct family of formula-driven supervised learning driven by fractal geometry found in natural 3D structures, to address this problem. Their research is founded on the notion that by learning fractal geometry, we might learn representations from much more real-world 3D structures than traditional 3D datasets. We demonstrate how the PC-Fractal DB makes it easier to address a number of recent dataset-related issues with 3D scene interpretation, including the collecting of 3D models and labour-intensive annotation.

The experimental section demonstrates how we improved upon the existing top scores obtained using the Point Contrast, contrastive scene contexts (CSC), and Random Rooms to attain performance rates of up to 61.9% and 59.0% for the ScanNetV2 and SUN RGB-D datasets, respectively. Additionally, the pre-trained PC-FractalDB model performs very well while training with fewer data. For instance, the PC-FractalDB pre-trained VoteNet performs at 38.3% in 10% of training data on ScanNetV2, which is +14.8% more accurate than CSC. Notably, we discovered that the suggested strategy yields the best results for 3D object detection pre-training in a little amount of point cloud data.

The dataset:

14,197,122 images have been labelled in the WordNet hierarchy as part of the ImageNet dataset. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a benchmark in picture classification and object recognition, has been using the dataset since 2010. A group of hand-annotated training photos is included in the dataset that was made available to the public. Additionally, a set of test photos is made available without manual annotations. There are two types of ILSVRC annotations: (1) object-level annotations that include a tight bounding box and a class label around an instance of an object in the image, such as "there is a screwdriver centred at position (20,25) with a width of 50 pixels and height of 30 pixels," and (2) image-level annotations that include a binary label for the existence or absence of an object class in the image, such as "there are cars in this image" but "there is no tig Because the images in the ImageNet project are not its property, just thumbnails and URLs are given.

- The total number of non-empty WordNet synsets: 21841

- The total number of images: 14197122

- Number of images with bounding box annotations: 1,034,908

- Number of synsets with SIFT features: 1000

- Number of images with SIFT features: 1.2 million

The ModelNet40 collection includes point clouds of created objects. ModelNet40 is the most used benchmark for point cloud analysis because of its many categories, neat shapes, well-constructed dataset, etc. The original ModelNet40 comprises 12,311 CAD-generated meshes in 40 categories (including automobiles, plants, and lamps), of which 9,843 are used for training and the remaining 2,468 are saved for testing.

ScanNet is an indoor RGB-D dataset with 2D and 3D data at the instance level. Instead of being a set of points or objects, it is a collection of labelled voxels. ScanNet v2, the most recent version of ScanNet, has so far amassed 1513 annotated scans with a rough surface coverage of 90%. This dataset is divided into 20 classes of annotated 3D voxelized objects for the semantic segmentation challenge.

10335 actual RGB-D photos of room scenarios are included in the SUN RGBD dataset. There is a depth and segmentation map that corresponds to each RGB image. There are as many as 700 identified object categories. The number of photos in the training and testing sets is 5285 and 5050, respectively.

You can download this dataset from here

Related Papers for your reference

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

by Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

Fully Convolutional Networks for Semantic Segmentation Published on CVPR 2015 by Evan Shelhamer, Jonathan Long, Trevor Darrell

Implicit-PDF: Non-Parametric Representation of Probability Distributions on the Rotation Manifold by Kieran Murphy, Carlos Esteves, Varun Jampani, Srikumar Ramalingam, Ameesh Makadia

ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation by Adam Paszke, Abhishek Chaurasia, Sangpil Kim, Eugenio Culurciello

3. Scene Representation Transformer: Geometry-Free Novel View Synthesis Through Set-Latent Scene Representations

Author: Mehdi S. M. Sajjadi, Henning Meyer, Etienne Pot, Urs Bergmann, Klaus Greff, Noha Radwan, Suhani Vora, Mario Lucic, Daniel Duckworth, Alexey Dosovitskiy, Jakob Uszkoreit, Thomas Funkhouser, Andrea Tagliasacchi

Inferring a 3D scene representation from a small number of photos that can be utilised to render fresh views at interactive speeds is a classic computer vision challenge. Previous research has primarily concentrated on recreating pre-defined 3D representations, such as textured meshes, or implicit representations, such as radiance fields, and frequently calls for input photographs with exact camera poses and lengthy processing durations for each fresh scenario. In this paper, we introduce the Scene Representation Transformer (SRT), a technique that, in a single feed-forward pass, analyses posed or unposed RGB photographs of a new region, infers a "set-latent scene representation," and synthesises unique views.

They have demonstrated that, on synthetic datasets, including a brand-new dataset developed for the research, this technique surpasses current baselines in terms of PSNR and speed. Using Street View images, they have further shown that SRT scales provide interactive visualisation and semantic segmentation of actual outside environments.

The dataset:

A technique called Neural Radiance Fields (NeRF) uses a small number of input views to optimise a persistent volumetric scene function in order to create innovative perspectives of complex situations. The dataset is divided into three parts, the first two of which are synthetic renderings of items named Diffuse Synthetic 360 and Realistic Synthetic 360, and the third of which is a collection of actual photographs of intricate settings. Four straightforwardly shaped Lambertian objects make up Diffuse Synthetic 360.

Each item is displayed at 512x512 pixels using upper hemisphere viewpoint samples. Eight items with intricate geometry and realistic non-Lambertian materials make up Realistic Synthetic 360. The two on the left are rendered from viewpoints measured on the complete sphere, while the remaining six are rendered from viewpoints sampled on the top hemisphere, all at an 800x800 pixel resolution. The actual photographs of complicated scenes are made up of eight forward-looking photos taken with a cellphone at a resolution of 1008 x 756 pixels.

You can download this dataset from here

Related Papers for your reference

Zero-Shot Text-Guided Object Generation with Dream Fields·published by Ajay Jain, Ben Mildenhall, Jonathan T. Barron, Pieter Abbeel, Ben Poole

Light Field Neural Rendering published by Mohammed Suhail, Carlos Esteves, Leonid Sigal, Ameesh Makadia

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding Published by Thomas Müller, Alex Evans, Christoph Schied, Alexander Keller

https://storage.googleapis.com/labellerr-cdn/top10-computer%20vision%20datasets%20/behave.webp

4. BEHAVE Dataset and Method for Tracking Human Object Interaction

Author: Bharat Lal Bhatnagar, Xianghui Xie, Ilya A. Petrov, Cristian Sminchisescu, Christian Theobalt, Gerard Pons-Moll ·

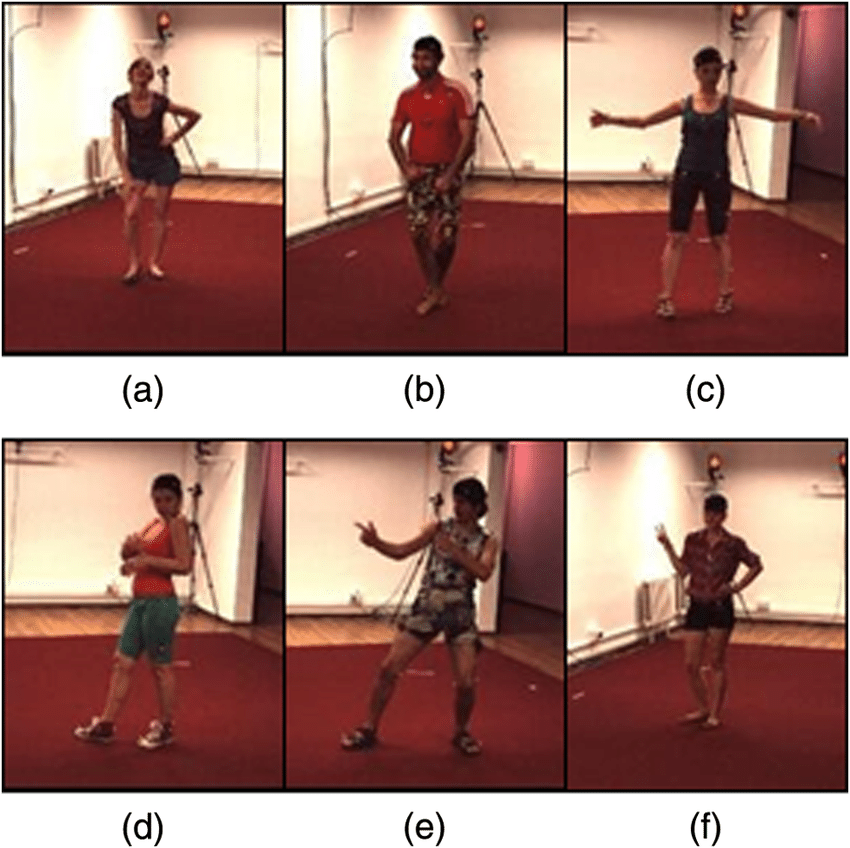

Many applications, including gaming, virtual and mixed reality, human behaviour analysis, and human-robot collaboration, all depend on models of interactions between people and things in natural settings. This difficult operation situation calls for generalisation to a huge variety of things, settings, and human behaviours. Unfortunately, there isn't a dataset like that. Additionally, as this data must be collected in a variety of natural settings, 4D scanners and marker-based capture techniques are out. In their BEHAVE dataset, which includes multi-view RGBD frames, associated 3D SMPL and object fits, as well as the contacts between them, they have demonstrated the first complete body human-object interaction dataset. They have taken approximately 15k pictures at 5 different locations with 8 subjects interacting with 20 common things in a variety of ways.

Using this data, they were able to develop a model that can collaboratively monitor people and objects in open spaces using a simple, portable multi-camera system. Their fundamental discovery is to forecast correspondences between the human and the object to a probabilistic body model in order to obtain human-object connections during encounters. Their method can capture and track not only people and objects but then also their 3D interactions, which are represented as surface touches.

The dataset:

Introduced in the paper: BEHAVE is a dataset of full-body human-object interactions that include multi-view RGBD frames, 3D SMPL and object fits, as well as the annotated contacts between them. The dataset consists of about 15k frames shot at 5 different places with 8 subjects interacting with 20 similar objects in a variety of ways.

Used in the paper: A dataset called GRAB shows full-body interactions and grasps of 3D objects. It includes precise hand and face movements as well as physical touch with the objects. It has 4 different motion purposes and 5 participants, 5 of them are women. Binary contact mappings between both the body and objects are also included in the GRAB dataset.

You can download this dataset from here

Related Papers for your reference

BEHAVE Dataset and Method for Tracking Human Object Interactions

Published by Bharat Lal Bhatnagar, Xianghui Xie, Ilya A. Petrov, Cristian Sminchisescu, Christian Theobalt, Gerard Pons-Moll

CHORE: Contact, Human and Object REconstruction from a single RGB image

Published by Xianghui Xie, Bharat Lal Bhatnagar, Gerard Pons-Moll

GRAB: A Dataset of Whole-Body Human Grasping of Objects

Published on ECCV 2020 by Omid Taheri, Nima Ghorbani, Michael J. Black, Dimitrios Tzionas

DexYCB: A Benchmark for Capturing Hand Grasping of Objects

Published on CVPR 2021 by Yu-Wei Chao, Wei Yang, Yu Xiang, Pavlo Molchanov, Ankur Handa, Jonathan Tremblay, Yashraj S. Narang, Karl Van Wyk, Umar Iqbal, Stan Birchfield, Jan Kautz, Dieter Fox

5. Capturing and Inferring Dense Full-Body Human-Scene Contact

Author: Chun-Hao P. Huang, Hongwei Yi, Markus Höschle, Matvey Safroshkin, Tsvetelina Alexiadis, Senya Polikovsky, Daniel Scharstein, Michael J. Black ·

Understanding how people interact with their surroundings begins with inferring human-scene contact (HSC). While there has been substantial advancement in the detection of 2D human-object interaction (HOI) and the reconstruction of 3D human pose and shape (HPS), it is still difficult to infer 3D human-scene contact from a single image. Existing HSC detection techniques frequently limit the body and scene to a limited number of primitives, only take into account a few predefined contact kinds, and even ignore visual evidence. From both a data and computational vantage point, they have solved the aforementioned restrictions to anticipate personal interactions from a single photograph. A brand-new dataset is known as RICH for "Real sceneries, Interaction, Contact and Humans" has been recorded.

They have developed a network that can forecast dense body-scene interactions from a single RGB image using RICH. Our major finding is that regions in touch are always obscured, necessitating the network's ability to search the entire image for proof. They have proposed a new Body-Scene contact TRansfOrmer after learning such non-local interactions via a transformer (BSTRO). There aren't many approaches that examine 3D contact; the ones that do tend to be solely foot-focused, foot contact is detected as a post-processing step, or contact is inferred from body pose without viewing the scene. As far as we are aware, BSTRO is the first technique to accurately estimate 3D body-scene interaction from a single image. We show that BSTRO performs noticeably better than the existing technology.

The dataset:

In addition to multiview, 4K indoor/outdoor video sequences, 3D body scans, ground-truth human models collected utilising markerless motion capture, and high-resolution 3D scene scans, RICH also includes ground-truth 3D human body models.

One of the biggest motion capture datasets, the Human3.6M dataset, contains 3.6 million human poses and related photographs that were recorded by a high-speed motion capture device. To gather video data at 50 Hz, there are 4 high-resolution progressive scan cameras. The dataset includes 11 professional actors' actions in 17 scenarios, such as talking on the phone, smoking, taking pictures, and discussing things. It also includes precise 3D joint locations and high-definition movies.

The 3D Poses in the Wild dataset is the first dataset containing precise 3D poses available for analysis in the wild. Even though there are alternative databases of the outdoors, they are all limited to a tiny recording capacity. The first to integrate video footage captured by a moving phone camera is 3DPW.

The dataset consists of

- 60 video clips.

- annotations for 2D poses.

- 3D poses were produced using the method described in the paper.

- Each sequence's frame features a different camera pose.

- 3D body scans and 3D models of individuals (re-poseable and re-shapeable). Each sequence includes the models that go with it.

- 18 3D models wearing various outfits.

You can download this dataset from here

Related Papers for your reference

View-Invariant, Occlusion-Robust Probabilistic Embedding for Human Pose

Published on 23 Oct 2020 by Ting Liu, Jennifer J. Sun, Long Zhao, Jiaping Zhao, Liangzhe Yuan, Yuxiao Wang, Liang-Chieh Chen, Florian Schroff, Hartwig Adam

XNect: Real-time Multi-Person 3D Motion Capture with a Single RGB Camera

Published on 1 Jul 2019 by Dushyant Mehta, Oleksandr Sotnychenko, Franziska Mueller, Weipeng Xu, Mohamed Elgharib, Pascal Fua, Hans-Peter Seidel, Helge Rhodin, Gerard Pons-Moll, Christian Theobalt ·

Capturing and Inferring Dense Full-Body Human-Scene Contact Published on CVPR 2022 by Chun-Hao P. Huang, Hongwei Yi, Markus Höschle, Matvey Safroshkin, Tsvetelina Alexiadis, Senya Polikovsky, Daniel Scharstein, Michael J. Black ·

Unified Simulation, Perception, and Generation of Human Behavior Published on 28 Apr 2022 by Ye Yuan

Unsupervised Learning for Physical Interaction through Video Prediction Published on NeurIPS 2016 by Chelsea Finn, Ian Goodfellow, Sergey Levine

MetaPose: Fast 3D Pose from Multiple Views without 3D Supervision published on CVPR 2022 by Ben Usman, Andrea Tagliasacchi, Kate Saenko, Avneesh Sud

Book our demo with one of our product specialist

Book a Demo