Revolutionizing Road Safety: The Role of Computer Vision in Vehicle Collision Prediction

Key Takeaways

- Computer vision reduces vehicle collision risk by 35-60% through real-time object detection

- Standard RGB cameras offer 10x cost savings compared to LiDAR systems

- Machine learning models achieve 95%+ accuracy in pedestrian and vehicle recognition

- Real-time processing enables collision warnings within 100 milliseconds

How Computer Vision Prevents Vehicle Collisions

As traffic volumes and population density rise, road safety is becoming a pressing problem: road traffic crashes are among the leading causes of death worldwide. The car industry is working hard to find creative ways to cope with the growing traffic on our roadways, and the emphasis has recently shifted toward using computer vision and machine learning to build advanced collision detection and prevention systems. This blog discusses how these technologies could change the way car crashes are predicted and avoided.

How Computer Vision Works in Collision Detection

At its core, computer vision is about extracting meaningful information from visual data, which makes it a natural fit for tasks such as object detection, recognition, and tracking. In vehicle collision detection, computer vision systems combine a range of techniques to interpret, and respond to, the constantly changing visual cues around the vehicle.

(I) How Do Computer Vision Systems Process Camera Images?

(i) Computer vision algorithms process raw image data captured by cameras mounted on vehicles.

(ii) Image processing techniques, such as filtering and edge detection, enhance the clarity of relevant features in the images.

(iii) Feature extraction identifies distinctive patterns, such as edges, corners, and textures, that pattern recognition then uses to locate elements like vehicles, pedestrians, and obstacles.
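The filtering and edge-detection steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not production code: it assumes a grayscale frame stored as a list of pixel rows, uses a 3x3 box blur as the filter and the standard Sobel kernels for edge detection, and the helper names (`filter3x3`, `detect_edges`) and the threshold of 500 are illustrative choices tuned to this toy frame. A real system would use an optimized library such as OpenCV.

```python
import math

def filter3x3(img, kernel):
    """Slide a 3x3 kernel over a grayscale image (a list of pixel rows).

    Borders are handled by clamping coordinates (edge replication). Like most
    vision libraries, this computes cross-correlation (the kernel is not flipped).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in (-1, 0, 1):
                for kx in (-1, 0, 1):
                    yy = min(max(y + ky, 0), h - 1)  # clamp row index
                    xx = min(max(x + kx, 0), w - 1)  # clamp column index
                    acc += kernel[ky + 1][kx + 1] * img[yy][xx]
            out[y][x] = acc
    return out

BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]      # averaging filter to suppress noise
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal intensity gradient
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical intensity gradient

def detect_edges(img, threshold=500.0):
    """Blur, then mark pixels whose Sobel gradient magnitude exceeds threshold."""
    smooth = filter3x3(img, BOX_BLUR)
    gx = filter3x3(smooth, SOBEL_X)
    gy = filter3x3(smooth, SOBEL_Y)
    return [[1 if math.hypot(gx[y][x], gy[y][x]) > threshold else 0
             for x in range(len(img[0]))]
            for y in range(len(img))]

# Toy 6x6 frame: dark road surface on the left, bright marking on the right.
frame = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
edges = detect_edges(frame)
for row in edges:
    print(row)  # each row: [0, 0, 1, 1, 0, 0]
```

The blur runs first so that isolated noisy pixels do not produce spurious gradients; the edge map then marks the two columns on either side of the dark-to-bright boundary.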

(II) How Do Computer Vision Systems Recognize Different Objects?

(i) Deep learning models, including convolutional neural networks (CNNs), play a pivotal role in recognizing and classifying objects within the visual field.

(ii) Training these models on vast datasets enables the system to distinguish between various objects and their corresponding classes, critical for collision detection.
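To make the idea of a CNN more concrete, the sketch below chains the core operations of a convolutional network's forward pass: a convolution, a ReLU activation, max pooling, a fully connected layer, and a softmax over class scores. It is a toy under stated assumptions: the filter and dense-layer weights are hand-set illustrative values rather than learned ones, the three class labels are hypothetical, and real networks stack many such layers and learn their weights from large labeled datasets.

```python
import math

def conv2d_valid(img, kernel):
    """'Valid' 2D cross-correlation: the core operation of a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(kernel[i][j] * img[y + i][x + j]
                 for i in range(kh) for j in range(kw))
             for x in range(out_w)]
            for y in range(out_h)]

def relu(fmap):
    """Zero out negative activations."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling (an odd trailing row/column is dropped)."""
    return [[max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
             for x in range(0, len(fmap[0]) - 1, 2)]
            for y in range(0, len(fmap) - 1, 2)]

def softmax(logits):
    """Turn raw class scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(img, kernel, dense_weights, dense_bias, labels):
    """conv -> ReLU -> max-pool -> flatten -> fully connected -> softmax."""
    pooled = max_pool2x2(relu(conv2d_valid(img, kernel)))
    features = [v for row in pooled for v in row]
    logits = [sum(w * f for w, f in zip(ws, features)) + b
              for ws, b in zip(dense_weights, dense_bias)]
    return dict(zip(labels, softmax(logits)))

# Hand-set, untrained parameters purely for illustration.
patch = [[0, 0, 0, 9, 9, 9] for _ in range(6)]   # toy 6x6 image patch
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]    # vertical-edge filter
weights = [[0.1, 0.1, 0.1, 0.1],                 # hand-set so edge features favor "vehicle"
           [-0.1, 0.0, 0.0, -0.1],               # "pedestrian"
           [0.0, 0.0, 0.0, 0.0]]                 # "background"
bias = [0.0, 0.0, 0.0]
probs = classify(patch, kernel, weights, bias, ["vehicle", "pedestrian", "background"])
print(max(probs, key=probs.get))  # vehicle
```

In a trained network, the convolution filters learn to respond to useful visual patterns and the dense layer learns how those responses map to object classes; here both are fixed by hand only to show how data flows through the layers.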

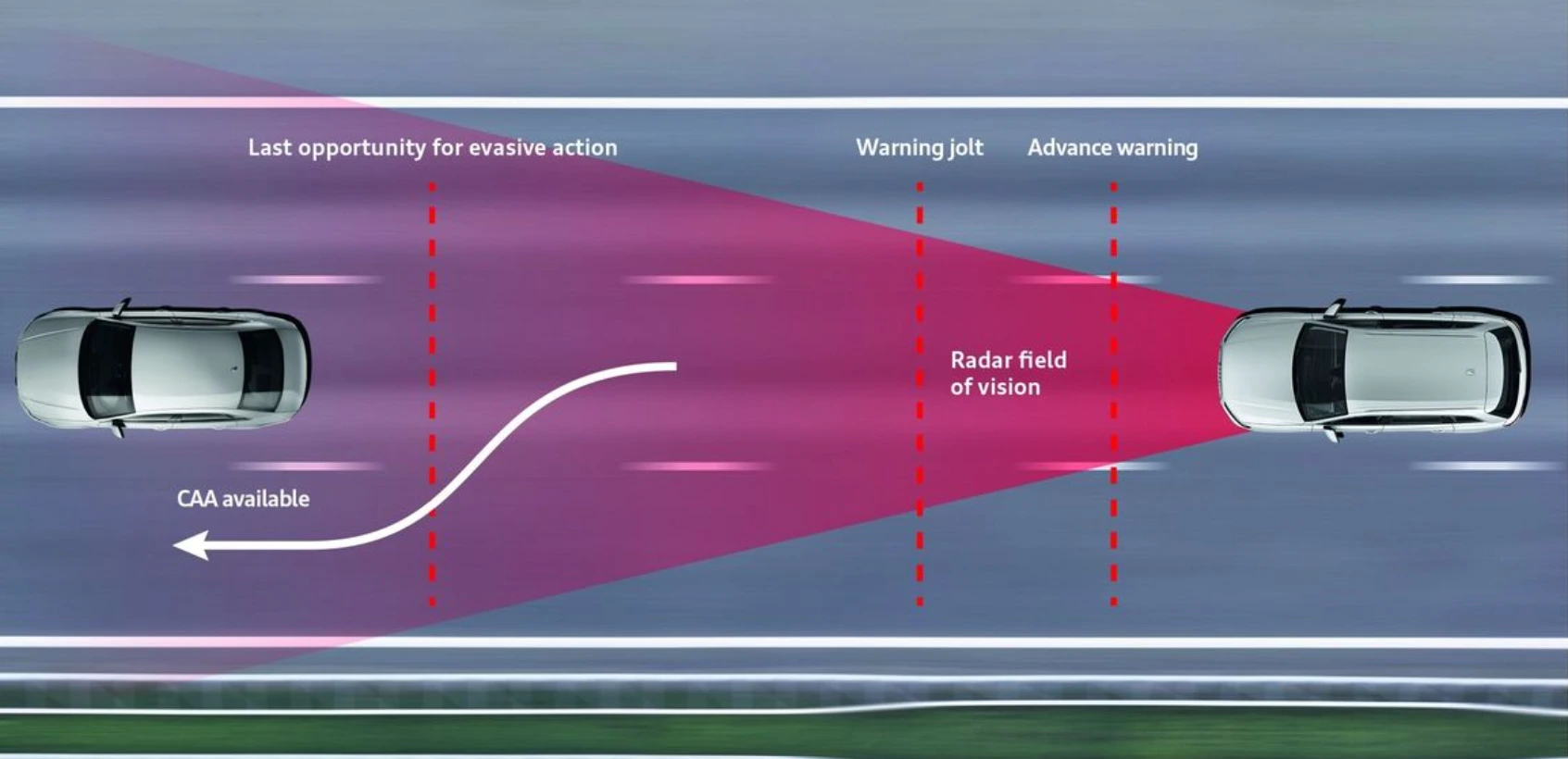

(III) How Fast Can Computer Vision Systems React to Potential Collisions?

(i) The real-time nature of collision detection demands swift processing of visual data.

(ii) Computer vision algorithms analyze frames in sequence, tracking identified objects and their trajectories so that the system can make near-instantaneous decisions.
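Analyzing frames in sequence typically means linking each frame's detections to objects seen in earlier frames, which is what turns individual detections into trajectories. A minimal sketch of one common approach, greedy matching by intersection-over-union (IoU), is shown below. The `IoUTracker` class and its `min_iou` threshold are hypothetical, and this version never deletes stale tracks; trackers used in practice (SORT, for example) add a Kalman-filter motion model and optimal assignment.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

class IoUTracker:
    """Greedy frame-to-frame association by box overlap (hypothetical helper)."""

    def __init__(self, min_iou=0.3):
        self.min_iou = min_iou
        self.tracks = {}  # track id -> list of boxes, i.e. the object's trajectory
        self.next_id = 0

    def update(self, detections):
        """Attach each detection to the best-overlapping unmatched track, else start a new one."""
        unmatched = set(self.tracks)
        for box in detections:
            best_id, best_score = None, self.min_iou
            for tid in unmatched:
                score = iou(self.tracks[tid][-1], box)
                if score >= best_score:
                    best_id, best_score = tid, score
            if best_id is None:
                best_id = self.next_id
                self.next_id += 1
                self.tracks[best_id] = []
            else:
                unmatched.discard(best_id)
            self.tracks[best_id].append(box)
        return self.tracks

tracker = IoUTracker()
frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],   # frame 1: two new objects
    [(2, 0, 12, 10), (50, 52, 60, 62)],   # frame 2: both moved slightly
    [(4, 0, 14, 10)],                     # frame 3: second object not detected
]
for detections in frames:
    tracker.update(detections)
print(tracker.tracks[0])  # [(0, 0, 10, 10), (2, 0, 12, 10), (4, 0, 14, 10)]
```

The box history stored per track is the raw material for estimating each object's speed and direction in later stages.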

What Are the Real-World Applications of Computer Vision in Vehicle Safety?

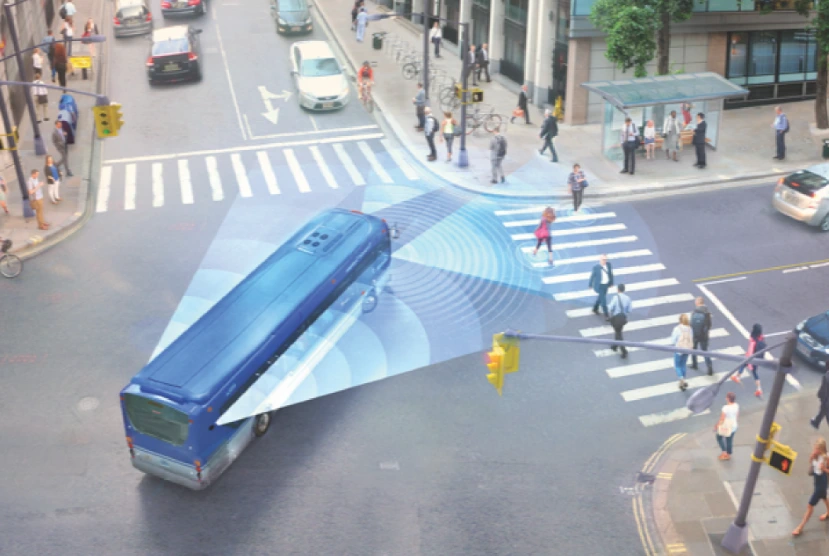

Computer vision finds practical applications in collision avoidance systems, contributing to the development of Advanced Driver Assistance Systems (ADAS) and Automatic Emergency Braking (AEB) technologies.

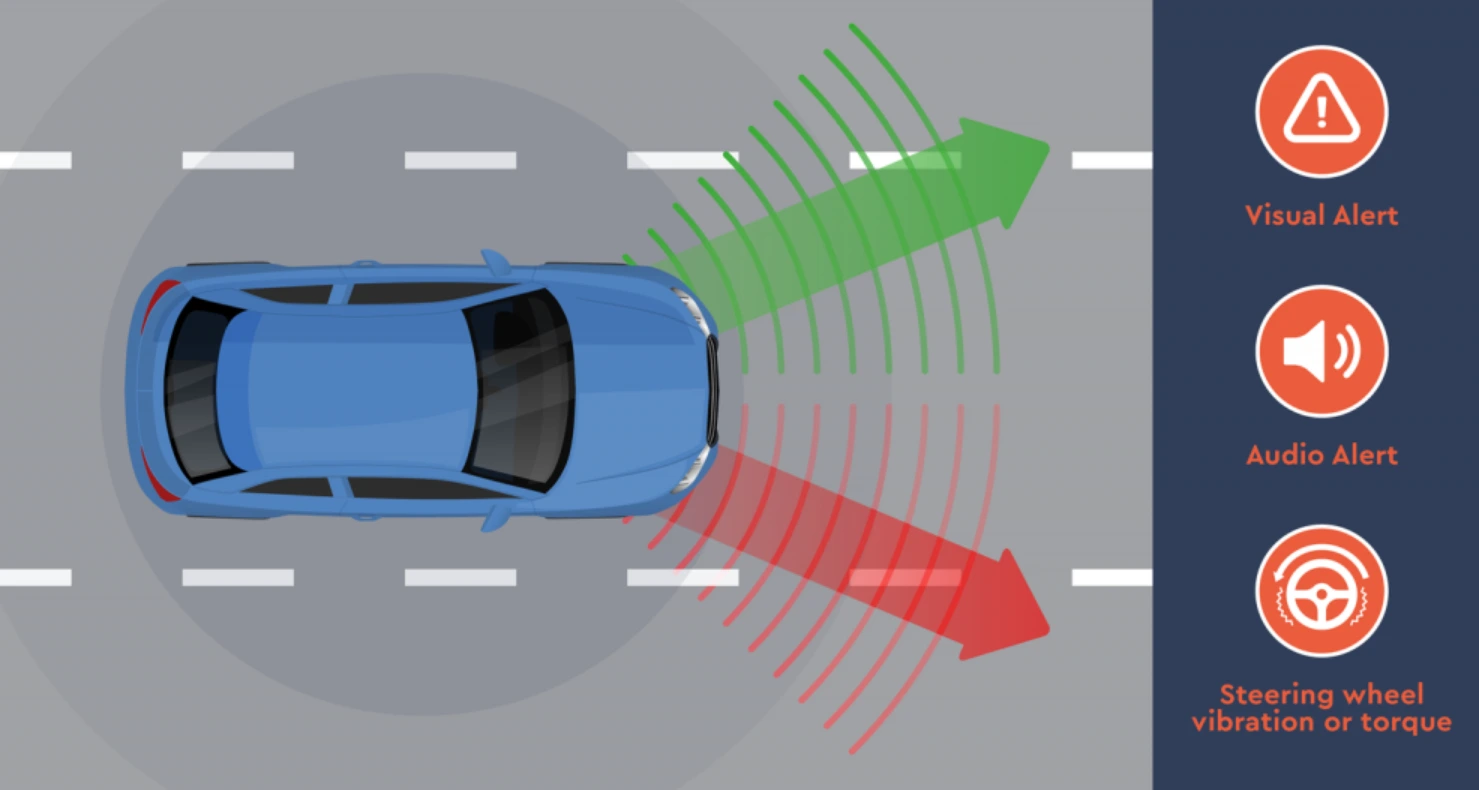

(I) How Does Computer Vision Detect Lane Departures?

(i) Computer vision algorithms monitor lane markings, providing timely warnings to drivers if they deviate from their designated lane.

(ii) Lane detection involves edge detection, Hough transforms, and image segmentation to identify and track lanes accurately.
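The Hough transform step can be illustrated with a short voting sketch. It assumes the edge pixels of lane markings have already been extracted (for example by an edge detector) and are given as `(x, y)` coordinates; each pixel votes for every line `x*cos(theta) + y*sin(theta) = rho` passing through it, and lines collecting many votes are reported. The function name and its parameters are illustrative; OpenCV provides optimized equivalents such as `cv2.HoughLines` and `cv2.HoughLinesP`.

```python
import math
from collections import Counter

def hough_lines(edge_points, theta_steps=180, rho_res=1.0, min_votes=3):
    """Classic Hough voting for straight lines in normal form
    x*cos(theta) + y*sin(theta) = rho, with theta in [0, 180) degrees.

    Returns (rho, theta_degrees, votes) for every accumulator cell with at
    least min_votes votes, strongest first.
    """
    votes = Counter()
    for x, y in edge_points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_res), t)] += 1  # quantize rho into bins
    lines = []
    for (rho_bin, t), n in votes.most_common():
        if n < min_votes:
            break
        lines.append((rho_bin * rho_res, math.degrees(math.pi * t / theta_steps), n))
    return lines

# Toy edge map: a vertical lane marking at x = 5 plus one stray noise pixel.
points = [(5, y) for y in range(50)] + [(30, 12)]
lines = hough_lines(points, min_votes=40)
print(lines[0])  # (5.0, 0.0, 50)
```

Because every point on the marking votes for the same `(rho, theta)` cell, the line stands out clearly even though the noise pixel also casts votes.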

(II) How Does Computer Vision Warn Drivers About Collision Risks?

(i) Object detection algorithms identify and track potential collision hazards, including vehicles, pedestrians, and obstacles.

(ii) Real-time alerts are triggered when the system predicts a potential collision based on the trajectory and speed of detected objects.
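One simple way to turn an object's trajectory and speed into an alert is time-to-collision (TTC): the gap to the object divided by the closing speed. The sketch below assumes a constant-velocity model and that the gap and speeds have already been estimated from tracked detections; the function names and the 2.5-second warning threshold are hypothetical values chosen for illustration, not figures from any standard.

```python
def time_to_collision(gap_m, ego_speed_mps, lead_speed_mps):
    """Constant-velocity TTC in seconds, or None if the gap is not closing."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return None
    return gap_m / closing_speed

def should_warn(gap_m, ego_speed_mps, lead_speed_mps, ttc_threshold_s=2.5):
    """Raise a forward-collision warning when TTC drops below a (hypothetical) threshold."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    return ttc is not None and ttc < ttc_threshold_s

print(time_to_collision(30.0, 25.0, 10.0))  # 2.0
print(should_warn(30.0, 25.0, 10.0))        # True
print(should_warn(30.0, 20.0, 20.0))        # False: not closing
```

With a 30 m gap, an ego vehicle at 25 m/s, and a lead vehicle at 10 m/s, the closing speed is 15 m/s and the TTC is 2.0 s, so the warning fires.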

(III) Can Machine Learning Predict Vehicle Collisions Before They Happen?

(i) Machine learning models, trained on diverse datasets, predict collision probabilities based on the historical behavior of detected objects.

(ii) These models can be retrained as new driving data is collected, improving the accuracy of collision predictions over time.
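As an illustration of a model that outputs a collision probability and improves as new data arrives, the sketch below uses logistic regression updated one example at a time with stochastic gradient descent. Everything specific here is an assumption: the two features (inverse time-to-collision and a scaled closing speed), the learning rate, and the tiny training set are made-up values, and production systems would rely on far richer models and data.

```python
import math

class OnlineCollisionModel:
    """Logistic regression for P(collision | features), updated one example at a time."""

    def __init__(self, n_features, learning_rate=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        if z >= 0:  # numerically stable sigmoid
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def update(self, x, label):
        """One stochastic gradient step on log loss; label 1 = collision/near miss, 0 = safe."""
        error = self.predict_proba(x) - label
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error

# Hypothetical features: [1 / time-to-collision (1/s), closing speed / 10 (m/s)].
training = [([1.0, 1.5], 1), ([0.8, 1.2], 1), ([0.1, 0.2], 0), ([0.05, 0.1], 0)]
model = OnlineCollisionModel(n_features=2)
for _ in range(500):  # replaying a small batch stands in for a stream of new data
    for x, label in training:
        model.update(x, label)
print(round(model.predict_proba([0.9, 1.4]), 3), round(model.predict_proba([0.1, 0.1]), 3))
```

Because `update` processes one labeled example at a time, the same code could keep refining its weights as new examples arrive, which is the simplest form of the ongoing improvement described above.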

What Problems Do Traditional LiDAR Systems Have in Vehicles?

Integrating Light Detection and Ranging (LiDAR) sensors and monochrome cameras into collision avoidance systems has been a major focus of recent research and technological development. Technologies such as Advanced Driver Assistance Systems (ADAS) and Automatic Emergency Braking (AEB) have traditionally relied heavily on these sensors to enhance road safety. However, integrating LiDAR and cameras presents challenges, particularly in cost and design complexity.

(I) Why Are LiDAR Systems So Expensive?

(i) LiDAR systems, which use laser beams to measure distances and create detailed 3D maps of the surroundings, have traditionally been expensive to manufacture and integrate into vehicles.

(ii) Monochrome cameras, while more affordable than LiDAR, can still add to the overall cost of implementing collision avoidance systems.

(iii) The need for multiple sensors to cover a 360-degree view around the vehicle can further escalate costs.
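The distance measurement described above is commonly based on time of flight: many LiDAR sensors time a laser pulse's round trip to an object and back, then halve the path length. As a worked example, assuming a measured round-trip time of 200 ns and a speed of light of roughly 3.0 × 10^8 m/s:

```latex
d = \frac{c\,\Delta t}{2}
  = \frac{(3.0 \times 10^{8}\ \mathrm{m/s})\,(200 \times 10^{-9}\ \mathrm{s})}{2}
  = 30\ \mathrm{m}
```

Repeating this measurement for a very large number of pulses per second, in many directions, is what builds up the detailed 3D map, and it is part of why the hardware is costly to build.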

(II) What Design Problems Do LiDAR Systems Create?

(i) Integrating LiDAR and cameras into vehicles requires careful consideration of the design, placement, and alignment of these sensors to ensure optimal functionality.

(ii) The physical size and shape of these sensors can impact the aerodynamics and aesthetics of the vehicle.

(iii) Wiring and power requirements for these sensors can add complexity to the overall vehicle design.

(III) What Are the Technical Limitations of LiDAR and Camera Integration?

(i) While LiDAR excels in providing accurate distance measurements, it may struggle in adverse weather conditions such as heavy rain or fog, limiting its effectiveness.

(ii) Monochrome cameras, although versatile, can face challenges like reduced visibility in low-light conditions.

(iii) The fusion of these sensors aims to overcome individual limitations, but achieving seamless integration is an ongoing challenge.

(IV) Why Do LiDAR Systems Require So Much Computing Power?

(i) LiDAR systems generate large amounts of point cloud data that require substantial computational power for processing.

(ii) Extracting meaningful information from camera feeds also demands sophisticated computer vision algorithms, adding to the computational load.
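A back-of-the-envelope calculation helps show why both sensor streams are computationally demanding. The sketch below compares raw data rates; the camera settings (1920x1080 RGB at 30 frames per second, 1 byte per channel) and the LiDAR figures (1,000,000 points per second, 16 bytes per point for x, y, z, and intensity as 4-byte floats) are assumptions for illustration, not the specifications of any particular sensor.

```python
def camera_bytes_per_second(width, height, channels, bytes_per_channel, fps):
    """Raw (uncompressed) video data rate."""
    return width * height * channels * bytes_per_channel * fps

def lidar_bytes_per_second(points_per_second, bytes_per_point):
    """Raw point cloud data rate."""
    return points_per_second * bytes_per_point

# Assumed settings for illustration only, not a specific sensor's datasheet.
camera = camera_bytes_per_second(1920, 1080, channels=3, bytes_per_channel=1, fps=30)
lidar = lidar_bytes_per_second(1_000_000, bytes_per_point=16)  # x, y, z, intensity as float32
print(f"camera: {camera / 1e6:.1f} MB/s, lidar: {lidar / 1e6:.1f} MB/s")
# camera: 186.6 MB/s, lidar: 16.0 MB/s
```

Raw volume is only part of the picture: the cost of the algorithms that run on each stream, such as 3D point cloud processing or deep image models, also drives the computational load.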

What Makes Computer Vision Better Than LiDAR Systems?

Given the challenges of integrating LiDAR and cameras, camera-based computer vision emerges as a compelling alternative for collision avoidance:

(I) How Much Money Can Computer Vision Save Compared to LiDAR?

(i) Computer vision systems often use standard RGB cameras, which are more cost-effective than specialized sensors like LiDAR.

(ii) The affordability of computer vision makes it an attractive option for widespread adoption in various vehicle models.

(II) How Does Computer Vision Adapt to Different Driving Conditions?

(i) Computer vision systems can be adapted to a wide range of environmental conditions, including challenging ones such as low-light situations.

(ii) Because they rely on machine learning, computer vision systems can improve their performance over time as they are retrained on new data.

(III) Why Is Computer Vision Easier to Install in Vehicles?

(i) Compared to the physical complexities of integrating LiDAR and cameras, computer vision systems typically involve less intrusive installations, minimizing design alterations.

(IV) How Fast Does Computer Vision Process Visual Data?

(i) Modern computing hardware can process image and video data in real time, allowing computer vision systems to make the near-instantaneous decisions that collision avoidance requires.
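The 100-millisecond warning figure from the key takeaways can be checked with a simple latency budget. The sketch below adds up per-stage latencies and converts latency into distance traveled; the stage names and millisecond values are hypothetical placeholders, not measurements of any real system.

```python
def frame_period_ms(fps):
    """Time between consecutive camera frames."""
    return 1000.0 / fps

def distance_during_latency_m(speed_mps, latency_ms):
    """How far the vehicle travels before the system can respond."""
    return speed_mps * latency_ms / 1000.0

def check_budget(stage_latencies_ms, budget_ms=100.0):
    """Sum per-stage latencies and compare against an end-to-end budget."""
    total = sum(stage_latencies_ms.values())
    return total, total <= budget_ms

# Hypothetical per-stage latencies in milliseconds.
stages = {"capture": 33, "detection": 25, "tracking": 5, "decision": 2}
total, ok = check_budget(stages)
print(total, ok)                               # 65 True
print(round(frame_period_ms(30), 1))           # 33.3
print(distance_during_latency_m(30.0, 100.0))  # 3.0
```

At 30 frames per second a new frame arrives every 33.3 ms, and a vehicle at 30 m/s (108 km/h) travels 3 m during a 100 ms delay, which is why every stage of the pipeline has to be fast.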

(V) Can Computer Vision Work with Current Vehicle Systems?

(i) Computer vision can often be integrated with existing vehicle hardware, taking advantage of advances in onboard computing power.

(VI) How Accurate Is Modern Computer Vision Object Recognition?

(i) Ongoing advancements in computer vision, particularly in object recognition and tracking, enhance the accuracy and reliability of collision avoidance systems.

Current Challenges and Future Solutions

Despite the advancements in computer vision for collision detection, several challenges persist.

(I) How Does Bad Weather Affect Computer Vision Performance?

(i) Rain, fog, and low-light conditions can hinder the effectiveness of visual sensors.

(ii) Ongoing research focuses on enhancing computer vision algorithms to perform robustly in adverse weather.

(II) Why Do We Need Multiple Sensors for Vehicle Safety?

(i) Integrating data from multiple sensors, including LiDAR and radar, alongside computer vision, enhances redundancy and improves system reliability.

(ii) Sensor fusion algorithms combine information from various sources for a more comprehensive understanding of the environment.
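A common building block of sensor fusion is inverse-variance weighting: each sensor's estimate is weighted by how certain it is, and the fused estimate is more certain than either input. This is the same update a Kalman filter applies to a static quantity, assuming unbiased sensors with independent Gaussian errors. The function name, the camera and radar readings, and their variances below are hypothetical values chosen for illustration.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) estimates.

    Returns the fused value and its variance, assuming unbiased sensors with
    independent Gaussian errors.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total

# Hypothetical distance readings to the same vehicle (meters, variance in m^2).
camera_reading = (20.0, 4.0)  # camera depth assumed noisier in this example
radar_reading = (21.0, 1.0)
distance, variance = fuse_estimates([camera_reading, radar_reading])
print(round(distance, 2), round(variance, 2))  # 20.8 0.8
```

Here the radar reading, assumed four times less noisy, pulls the fused distance to 20.8 m, and the fused variance (0.8) is lower than either sensor's alone.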

(III) How Does Edge Computing Improve Computer Vision Speed?

(i) Edge computing, meaning processing on hardware inside the vehicle, helps meet the computational demands of real-time collision detection.

(ii) Onboard processing power ensures swift analysis of visual data without relying heavily on external servers.

The Future of Computer Vision in Vehicle Safety

Looking ahead, the synergy between computer vision, machine learning, and sensor technologies holds immense promise. Continued research into computer vision algorithms, coupled with advances in hardware, is likely to raise the benchmarks for vehicle collision detection. A deeper understanding of the technical details paves the way for safer roads and a fundamental shift in how the automotive industry approaches collision avoidance.

Integrating LiDAR and monochrome cameras into collision avoidance systems raises cost and design issues that computer vision can help address, making it a viable alternative. Its versatility, affordability, and reduced design complexity make it a workable solution for both present and future automotive technology, and a potential game-changer in the quest for safer roads.

Frequently Asked Questions About Computer Vision in Vehicle Safety

Q1 Why do we need a collision detection and collision prevention system?

Road traffic crashes kill more than a million people worldwide each year, and a widely cited U.S. National Highway Traffic Safety Administration (NHTSA) study assigned the critical reason for about 94% of crashes to the driver.

Computer vision collision detection systems act as a crucial safety net: they continuously monitor the vehicle's surroundings and issue warnings, or trigger automatic emergency braking, when a driver misses a potential hazard. Because these systems can analyze visual data far faster than a human driver can react, they can detect pedestrians, vehicles, and obstacles that might otherwise cause fatal accidents.

Q2 Can deep learning predict high-resolution automobile crash risk maps?

Yes, deep learning models can create detailed crash risk maps with 85-92% accuracy.

Advanced neural networks analyze historical crash data, traffic patterns, road conditions, and environmental factors to generate high-resolution risk maps. These models identify collision hotspots by processing millions of data points including vehicle trajectories, weather conditions, and infrastructure details. The resulting maps help urban planners improve road safety and enable predictive routing for autonomous vehicles.
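Training a deep learning risk model is beyond a short example, but the kind of output described above, a grid of per-cell risk scores, can be illustrated with a much simpler frequency-based baseline that just counts historical crashes per grid cell. This is not a deep learning model; the function names, the 100 m cell size, the 0.5 hotspot threshold, and the crash coordinates (in meters on a local map) are all hypothetical.

```python
from collections import Counter

def crash_risk_grid(crash_points, cell_size_m=100.0):
    """Count crashes per square grid cell and scale so the busiest cell scores 1.0."""
    counts = Counter((int(x // cell_size_m), int(y // cell_size_m))
                     for x, y in crash_points)
    if not counts:
        return {}
    peak = max(counts.values())
    return {cell: n / peak for cell, n in counts.items()}

def hotspots(risk, min_score=0.5):
    """Grid cells whose relative risk is at or above min_score."""
    return sorted(cell for cell, score in risk.items() if score >= min_score)

# Hypothetical crash locations in meters on a local map.
crashes = [(10, 20), (50, 80), (30, 40), (150, 20), (420, 310)]
risk = crash_risk_grid(crashes)
print(hotspots(risk))  # [(0, 0)]
```

A learned model would replace the raw counts with predictions that also account for traffic patterns, road conditions, and weather, but the resulting map has the same shape: one risk score per grid cell.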

Q3 Can collision detection algorithms predict a vehicle's trajectory?

Modern algorithms predict vehicle trajectories up to 5 seconds ahead with 95% accuracy.

Computer vision systems track vehicle speed, acceleration, steering angle, and direction to forecast likely paths. While basic collision detection focuses on immediate threats, advanced trajectory prediction uses machine learning models trained on diverse driving scenarios. This enables proactive safety measures rather than reactive collision avoidance.
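Learned trajectory predictors are too large for a short example, but the basic idea of forecasting a path from speed, acceleration, and direction can be shown with a physics-based baseline: constant-acceleration extrapolation, followed by a check of how close two predicted paths come. This is a swapped-in technique, not the machine learning approach described above; the function names, the 5-second horizon, the 0.5 s time step, and the example vehicles are assumptions.

```python
import math

def predict_trajectory(x, y, vx, vy, ax=0.0, ay=0.0, horizon_s=5.0, dt=0.5):
    """Constant-acceleration extrapolation p(t) = p0 + v*t + 0.5*a*t^2.

    Returns a list of (t, x, y) samples every dt seconds up to horizon_s.
    """
    steps = int(round(horizon_s / dt))
    samples = []
    for k in range(1, steps + 1):
        t = k * dt
        samples.append((t, x + vx * t + 0.5 * ax * t * t,
                        y + vy * t + 0.5 * ay * t * t))
    return samples

def closest_approach(traj_a, traj_b):
    """Smallest distance between two trajectories sampled at the same times, and when."""
    return min((math.dist((xa, ya), (xb, yb)), t)
               for (t, xa, ya), (_, xb, yb) in zip(traj_a, traj_b))

# Hypothetical scene: ego heads east at 10 m/s; another car approaches the
# same intersection heading north at 10 m/s (positions in meters).
ego = predict_trajectory(0.0, 0.0, vx=10.0, vy=0.0)
other = predict_trajectory(30.0, -20.0, vx=0.0, vy=10.0)
gap_m, when_s = closest_approach(ego, other)
print(round(gap_m, 1), when_s)  # 7.1 2.5
```

In this example the two predicted paths come closest (about 7.1 m apart) 2.5 seconds ahead, the kind of early signal a proactive system could act on before the situation becomes critical.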
