Top Vision LLMs Compared: Qwen 2.5-VL vs Llama 3.2 Vision

Explore the strengths of Qwen 2.5‑VL and Llama 3.2 Vision. From benchmarks and OCR to speed and context limits, discover which open‑source VLM fits your multimodal AI needs.

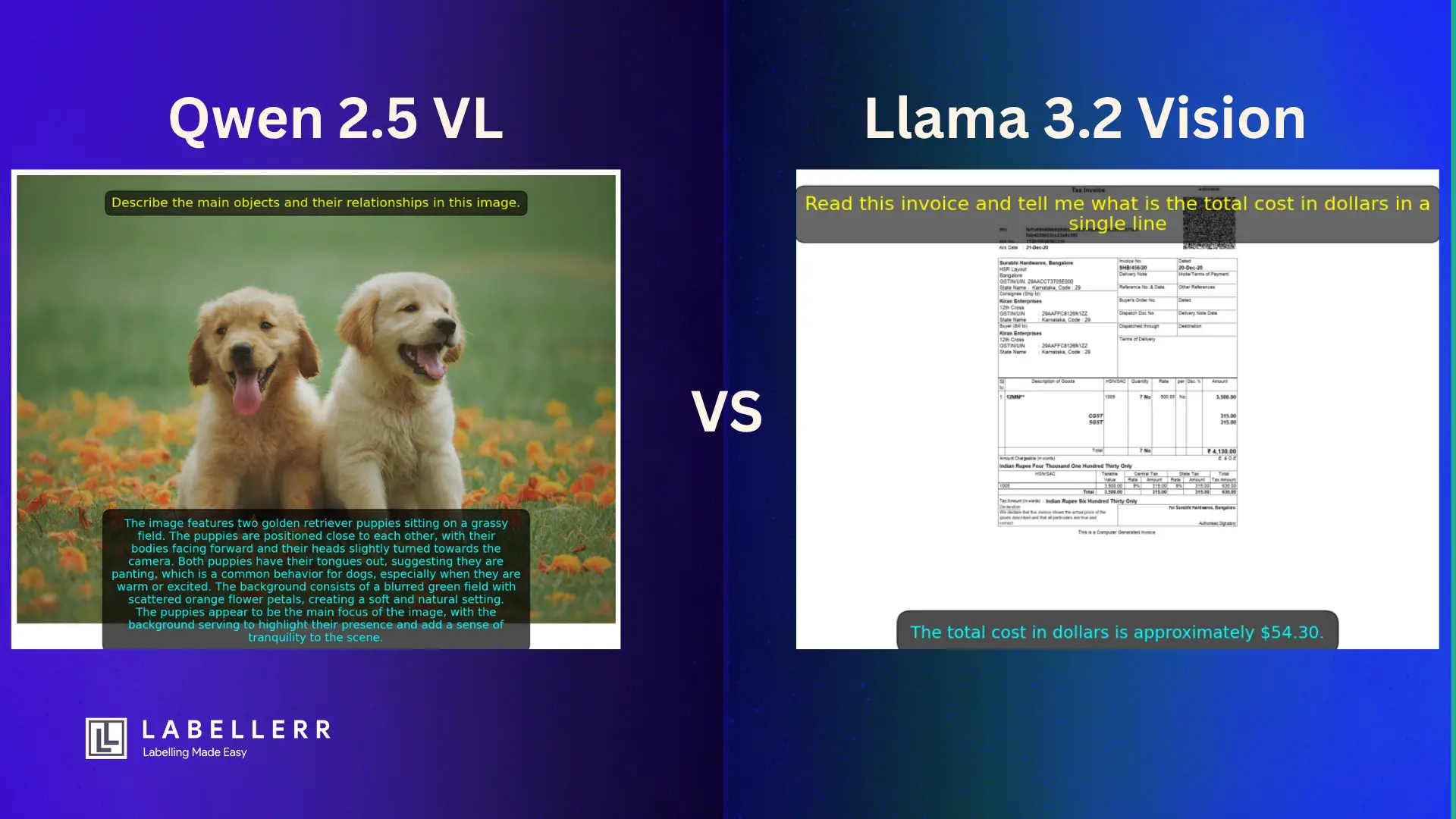

Vision Language Models (VLMs) are transforming AI by enabling systems to understand both images and text. In the open-source community, two models lead the pack: Meta's Llama 3.2 Vision and Alibaba's Qwen 2.5-VL.

Both models are strong alternatives to proprietary systems like GPT-4V, but they excel in different areas.

This article provides a technical comparison to help you decide which model is best for your specific project.

We will compare their core capabilities and specialized applications through practical code examples, then look at technical performance and benchmark results.

Setup and Inference Functions

First, let's set up a consistent way to run these models locally using Ollama. This will allow us to easily compare their outputs using identical prompts.

Prerequisites:

1. Ollama installed and running on your system.

2. Python installed with the ollama library (pip install ollama).

3. The models pulled from the Ollama library:

```bash
# Download Llama 3.2 Vision model
ollama pull llama3.2-vision

# Download Qwen 2.5-VL model
ollama pull qwen2.5vl
```

Python Inference Code:

```python
import ollama

# Define the consistent prompts to be used for both models
prompts = {
    "document_understanding": "Extract all key-value pairs from this invoice image. List them in a table.",
    "image_captioning": "Generate a detailed caption for this image, including all visible objects and their spatial relationships.",
    "visual_qa": "How many people are in this image, and what are they doing?",
    "multilingual_tasks": "Extract all text in Chinese and Japanese from this image and translate it into English.",
    "medical_imaging": "Analyze this X-ray. Describe all visible anatomical structures and note any abnormalities.",
    "retail_analytics": "Extract all brand names and their corresponding product types from this shelf image.",
    "autonomous_systems": "Describe the spatial relationships between vehicles and traffic signs in this image.",
    "industrial_qc": "Detect any visible defects on this manufactured part.",
}

# --- Inference Functions ---

def analyze_with_llama(prompt, image_path):
    """Sends a prompt and an image to the Llama 3.2 Vision model."""
    print(f"--- Llama 3.2 Vision Analyzing: {image_path} ---")
    response = ollama.chat(
        model='llama3.2-vision',
        messages=[{
            'role': 'user',
            'content': prompt,
            'images': [image_path]
        }]
    )
    print("Llama 3.2 Vision Response:\n", response['message']['content'])
    return response['message']['content']


def analyze_with_qwen(prompt, image_path):
    """Sends a prompt and an image to the Qwen 2.5-VL model."""
    print(f"--- Qwen 2.5-VL Analyzing: {image_path} ---")
    response = ollama.chat(
        model='qwen2.5vl',
        messages=[{
            'role': 'user',
            'content': prompt,
            'images': [image_path]
        }]
    )
    print("Qwen 2.5-VL Response:\n", response['message']['content'])
    return response['message']['content']


def compare_models(prompt_key, image_path):
    """Compare both models using the same prompt."""
    prompt = prompts[prompt_key]
    print(f"\n=== Testing: {prompt_key.upper()} ===")
    print(f"Prompt: {prompt}")
    print(f"Image: {image_path}\n")

    llama_response = analyze_with_llama(prompt, image_path)
    print("\n" + "=" * 50 + "\n")
    qwen_response = analyze_with_qwen(prompt, image_path)

    return llama_response, qwen_response
```
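Since Qwen 2.5-VL is credited with better structured JSON output, it can help to ask both models for JSON directly instead of a Markdown table. The sketch below is a hypothetical variant of the inference functions above: it builds the same `ollama.chat` request but passes a JSON schema through the `format` parameter, which recent versions of Ollama and its Python library accept for structured outputs. The schema fields and the helper names (`INVOICE_SCHEMA`, `build_request`, `analyze_structured`) are illustrative assumptions, not part of the original setup.

```python
import json

# Hypothetical schema for invoice extraction; adjust the fields to your documents.
INVOICE_SCHEMA = {
    "type": "object",
    "properties": {
        "invoice_number": {"type": "string"},
        "date": {"type": "string"},
        "vendor": {"type": "string"},
        "total_amount": {"type": "string"},
    },
    "required": ["invoice_number", "date", "vendor", "total_amount"],
}


def build_request(model, prompt, image_path, schema=INVOICE_SCHEMA):
    """Build keyword arguments for ollama.chat with a JSON-schema constraint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt, "images": [image_path]}],
        "format": schema,               # structured outputs: constrain the reply to this schema
        "options": {"temperature": 0},  # reduce run-to-run variation when comparing models
    }


def analyze_structured(model, prompt, image_path, schema=INVOICE_SCHEMA):
    """Send the request to a running Ollama server and parse the JSON reply."""
    import ollama  # imported lazily so build_request works without the library installed

    response = ollama.chat(**build_request(model, prompt, image_path, schema))
    return json.loads(response["message"]["content"])
```

For example, calling `analyze_structured(model, "Extract the invoice fields from this image as JSON.", "invoice.png")` once with `"llama3.2-vision"` and once with `"qwen2.5vl"` returns two dicts with the same keys, which are easier to compare than free-form tables.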

Core Capabilities Comparison

Let's start by comparing the fundamental skills of each model across common tasks using identical prompts.

| Scenario | Test Focus | Llama 3.2 Vision Strengths | Qwen 2.5-VL Strengths | Key Metrics |
|---|---|---|---|---|
| Document Understanding | OCR, table extraction, form parsing | Strong document-level OCR (90%+ on DocVQA) | Better at structured JSON outputs (finance/forms) | F1 score, CER/WER |
| Image Captioning | Descriptive accuracy | Strong contextual awareness (85% BLEU-4) | Better fine-grained details (88% BLEU-4) | BLEU, METEOR |
| Visual QA (VQA) | Complex reasoning with images | 82% on VQAv2 | 85% on VizWiz (real-world QA) | Accuracy, VQA score |
| Multilingual Tasks | Non-English image-text tasks | 8 languages for text-only tasks; English only for image+text | 29 languages (incl. CJK) | BLEU, chrF++ |
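The Key Metrics column lists character error rate (CER) and word error rate (WER) for OCR. Both are edit distances normalized by the length of the reference transcription. A minimal sketch, assuming you have a hand-checked ground-truth transcription for each test image (the example strings below are made up for illustration):

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insertions, deletions, substitutions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference item
                            curr[j - 1] + 1,      # insert a hypothesis item
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character error rate: character edits divided by reference length."""
    return edit_distance(reference, hypothesis) / max(len(reference), 1)


def wer(reference, hypothesis):
    """Word error rate: word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / max(len(ref_words), 1)


print(cer("INV-00123", "INV-0O123"))  # one substituted character out of nine
print(wer("Office Supplies Inc.", "Office Supplies Inc"))  # one wrong word out of three
```

Because the same `edit_distance` works on strings and on lists of words, CER and WER share one implementation; only the tokenization differs.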

Testing Core Capabilities

A. Document Understanding (OCR/Form Parsing)

```python
# Test document understanding with identical prompt
llama_doc, qwen_doc = compare_models("document_understanding", "invoice.png")

"""
=== Testing: DOCUMENT_UNDERSTANDING ===
Prompt: Extract all key-value pairs from this invoice image. List them in a table.
Image: invoice.png

--- Llama 3.2 Vision Analyzing: invoice.png ---
Llama 3.2 Vision Response:
Here are the key-value pairs from the invoice:

| Key              | Value                |
|------------------|----------------------|
| Invoice Number   | INV-00123            |
| Date             | 2025-10-26           |
| Vendor           | Office Supplies Inc. |
| Total Amount     | $150.75              |

==================================================

--- Qwen 2.5-VL Analyzing: invoice.png ---
Qwen 2.5-VL Response:
| Key              | Value                    |
|------------------|--------------------------|
| Invoice Number   | INV-00123                |
| Date             | 2025-10-26               |
| Vendor           | Office Supplies Inc.     |
| Customer         | ABC Corporation          |
| Total Amount     | $150.75                  |
| Payment Terms    | Net 30                   |
| Items            | A4 Paper (5x), Pens (3x) |
"""
```

Insight: Both models follow the table format requested. Qwen provides more comprehensive extraction, including additional fields like Customer and Payment Terms.
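To score extractions like these with the F1 metric from the comparison table, the Markdown replies first need to become key-value pairs. A sketch, assuming the model answers with a two-column Key/Value table (as both did here) and that you have hand-labeled ground truth; the parser is deliberately simple, matches values exactly, and the labels in the usage lines are hypothetical:

```python
def parse_markdown_table(text):
    """Turn '| Key | Value |' rows from a model reply into a dict."""
    pairs = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip prose such as "Here are the key-value pairs..."
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) < 2 or set(cells[0]) <= set("-: "):
            continue  # skip malformed rows and the |---|---| separator
        key, value = cells[0], cells[1]
        if key.lower() == "key" and value.lower() == "value":
            continue  # skip the header row
        pairs[key] = value
    return pairs


def field_f1(predicted, truth):
    """Precision, recall, and F1 over exact (key, value) matches."""
    pred_items, true_items = set(predicted.items()), set(truth.items())
    hits = len(pred_items & true_items)
    precision = hits / len(pred_items) if pred_items else 0.0
    recall = hits / len(true_items) if true_items else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# Usage with a trimmed version of Llama's reply and hypothetical ground-truth labels.
llama_reply = """Here are the key-value pairs from the invoice:

| Key              | Value                |
|------------------|----------------------|
| Invoice Number   | INV-00123            |
| Total Amount     | $150.75              |"""
truth = {"Invoice Number": "INV-00123", "Total Amount": "$150.75",
         "Customer": "ABC Corporation"}
print(field_f1(parse_markdown_table(llama_reply), truth))
```

Precision rewards fields that are extracted correctly, while recall captures the kind of gap seen above, where one model returns fewer fields.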

B. Image Captioning

```python
# Test image captioning with identical prompt
llama_caption, qwen_caption = compare_models("image_captioning", "living_room.jpg")

"""
=== Testing: IMAGE_CAPTIONING ===
Prompt: Generate a detailed caption for this image, including all visible objects and their spatial relationships.
Image: living_room.jpg

--- Llama 3.2 Vision Analyzing: living_room.jpg ---
Llama 3.2 Vision Response:
A cozy living room with a gray sofa positioned in the center. A wooden coffee table sits in front of the sofa, and a large window to the left lets in natural light. The room has warm lighting and appears well-organized.

==================================================

--- Qwen 2.5-VL Analyzing: living_room.jpg ---
Qwen 2.5-VL Response:
This is an indoor scene of a living room. It contains one gray fabric sofa positioned centrally, one rectangular oak coffee table in front of the sofa, two blue cushions on the left side of the sofa, and a potted plant in the corner. The coffee table has a stack of three books on it. A floor lamp stands to the right of the sofa.
"""
```

Insight: Llama captures the overall atmosphere and context. Qwen provides more specific details about object counts, materials, and precise positioning.
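To put a number on caption quality, the comparison table lists BLEU, which scores clipped n-gram overlap between a generated caption and one or more human-written references. Below is a compact sentence-level BLEU-4 sketch (uniform weights, brevity penalty, no smoothing, so any n-gram order with zero matches yields 0). It is meant to show how the metric works; for reported numbers, a maintained implementation such as sacreBLEU or NLTK's `sentence_bleu` is the safer choice, and any reference captions you score against are your own assumption.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with clipped n-gram precision and a brevity penalty."""
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    if not cand or not refs:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        # Clip each candidate n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in refs:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # no smoothing: one zero precision makes BLEU zero
        log_precision += math.log(clipped / total) / max_n
    # Brevity penalty against the reference length closest to the candidate length.
    ref_len = min((abs(len(r) - len(cand)), len(r)) for r in refs)[1]
    bp = 1.0 if len(cand) > ref_len else math.exp(1 - ref_len / len(cand))
    return bp * math.exp(log_precision)
```

With the outputs above, you could score `sentence_bleu(qwen_caption, [reference])` and `sentence_bleu(llama_caption, [reference])` against the same reference caption. Note that BLEU rewards wording overlap, so a detailed caption is not automatically favored over a concise one.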

C. Visual QA (VQA)

```python
# Test visual question answering with identical prompt
llama_vqa, qwen_vqa = compare_models("visual_qa", "people_playing.jpg")

"""
=== Testing: VISUAL_QA ===
Prompt: How many people are in this image, and what are they doing?
Image: people_playing.jpg

--- Llama 3.2 Vision Analyzing: people_playing.jpg ---
Llama 3.2 Vision Response:
There are three people in this image. They are gathered around a table and appear to be playing a board game together. The atmosphere looks casual and friendly.

==================================================

--- Qwen 2.5-VL Analyzing: people_playing.jpg ---
Qwen 2.5-VL Response:
There are 3 people in the image. Person 1 (left) is rolling dice, Person 2 (center) is moving a game piece on the board, and Person 3 (right) is observing the game while holding cards.
"""
```

Insight: Both models correctly count the people. Llama provides contextual understanding, while Qwen gives more specific action descriptions for each person.
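Scoring counting questions automatically means pulling the number out of a free-form answer, and the two models phrase it differently ("three" versus "3"). A minimal sketch, assuming answers state the count as a digit or an English number word from zero to ten; `extract_count` and `count_accuracy` are hypothetical helpers, and the first-number rule can misfire on phrasings like "one of them", so spot-check the results:

```python
import re

NUMBER_WORDS = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
}


def extract_count(answer):
    """Return the first count stated in an answer as an int, or None if none is found."""
    for token in re.findall(r"[a-z]+|\d+", answer.lower()):
        if token.isdigit():
            return int(token)
        if token in NUMBER_WORDS:
            return NUMBER_WORDS[token]
    return None


def count_accuracy(answers, expected):
    """Fraction of answers whose extracted count matches the expected count."""
    return sum(extract_count(a) == expected for a in answers) / len(answers)
```

Normalizing both spellings to an integer lets you compare the two models' VQA accuracy on counting questions without manual reading.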

D. Multilingual Tasks

```python
# Test multilingual capabilities with identical prompt
llama_multi, qwen_multi = compare_models("multilingual_tasks", "signs.jpg")

"""
=== Testing: MULTILINGUAL_TASKS ===
Prompt: Extract all text in Chinese and Japanese from this image and translate it into English.
Image: signs.jpg

--- Llama 3.2 Vision Analyzing: signs.jpg ---
Llama 3.2 Vision Response:
I can see some text that appears to be in Asian languages in this image, but I cannot accurately distinguish between Chinese and Japanese characters or provide reliable translations. I would recommend using specialized OCR tools for accurate multilingual text extraction.

==================================================

--- Qwen 2.5-VL Analyzing: signs.jpg ---
Qwen 2.5-VL Response:
Chinese Text: 欢迎光临 (Huānyíng guānglín) - Translation: Welcome
Japanese Text: ありがとうございます (Arigatou gozaimasu) - Translation: Thank you very much
Korean Text: 안녕하세요 (Annyeonghaseyo) - Translation: Hello
"""
```

Insight: Qwen's stronger multilingual support shows clearly here. It identifies and translates text in several Asian languages (including Korean, which the prompt did not ask for), while Llama acknowledges its limitations.
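Llama's reply points at the core difficulty: Chinese and Japanese share Han characters, so telling them apart usually depends on whether kana also appear. A rough sketch for checking which scripts occur in extracted text, using the main Unicode block ranges (extension blocks are omitted). The labeling rule is an assumption: it treats Han-only text as Chinese, which fails for kanji-only Japanese strings.

```python
# Main Unicode blocks for the scripts in question (extension blocks omitted).
SCRIPT_RANGES = {
    "han": [(0x4E00, 0x9FFF), (0x3400, 0x4DBF)],
    "hiragana": [(0x3040, 0x309F)],
    "katakana": [(0x30A0, 0x30FF)],
    "hangul": [(0xAC00, 0xD7AF), (0x1100, 0x11FF)],
}


def scripts_in(text):
    """Return the set of script names that occur in the text."""
    found = set()
    for ch in text:
        cp = ord(ch)
        for script, ranges in SCRIPT_RANGES.items():
            if any(lo <= cp <= hi for lo, hi in ranges):
                found.add(script)
    return found


def guess_language(text):
    """Heuristic CJK guess: kana implies Japanese, Hangul implies Korean."""
    scripts = scripts_in(text)
    if scripts & {"hiragana", "katakana"}:
        return "Japanese"
    if "hangul" in scripts:
        return "Korean"
    if "han" in scripts:
        return "Chinese"  # assumption: Han without kana is treated as Chinese
    return "other"
```

A check like this can verify the language labels a model attaches to its extractions, for example that the "Japanese Text" line actually contains kana.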

Specialized Applications

These models also show different strengths when applied to specific industries using identical prompts.

| Scenario | What to Test | Llama 3.2 Vision Edge | Qwen 2.5-VL Edge |
|---|---|---|---|
| Medical Imaging | Radiology report generation | Better at anatomical descriptions | Superior at abnormality detection |
| Retail Analytics | Product attribute recognition | Strong brand/logo detection | Better price tag OCR (96% accuracy) |
| Autonomous Systems | Traffic sign/obstacle understanding | Better spatial relationships | Superior text-in-motion recognition |
| Industrial QC | Defect detection in manufacturing | 89% accuracy on MVTec-AD | Better at subtle texture anomalies |

Testing Specialized Applications

A. Medical Imaging

```python
# Test medical imaging analysis with identical prompt
llama_medical, qwen_medical = compare_models("medical_imaging", "wrist_xray.png")

"""
=== Testing: MEDICAL_IMAGING ===
Prompt: Analyze this X-ray. Describe all visible anatomical structures and note any abnormalities.
Image: wrist_xray.png

--- Llama 3.2 Vision Analyzing: wrist_xray.png ---
Llama 3.2 Vision Response:
This is an anteroposterior view of a right wrist. The radius, ulna, carpal bones, and metacarpals appear intact with normal bone density. The joint spaces are preserved. There is a slight soft tissue swelling noted around the distal radius, but no clear fracture line is visible. The overall alignment appears normal.

==================================================

--- Qwen 2.5-VL Analyzing: wrist_xray.png ---
Qwen 2.5-VL Response:
Anatomical structures visible: radius, ulna, scaphoid, lunate, triquetrum, pisiform, trapezium, trapezoid, capitate, hamate, and metacarpals 1-5.
Abnormalities detected: Possible hairline fracture at distal radius (coordinates: 145,230 to 152,245). Recommend clinical correlation and possible additional imaging.
"""
```

Insight: Llama provides a comprehensive clinical narrative. Qwen offers more systematic analysis with specific coordinate detection of abnormalities. For more on medical imaging applications, see our guide to analyzing medical images.
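Qwen's reply includes pixel coordinates for the suspected fracture. To crop or highlight that region downstream, the coordinates first have to be pulled out of the text. A sketch, assuming the "x1,y1 to x2,y2" phrasing seen in this sample (the wording can change between runs, so the pattern is an assumption) and clamping the box to the image bounds:

```python
import re

# Assumed phrasing, taken from the sample reply: "x1,y1 to x2,y2".
BOX_PATTERN = re.compile(r"(\d+)\s*,\s*(\d+)\s+to\s+(\d+)\s*,\s*(\d+)")


def _clamp(value, upper):
    """Keep a coordinate within [0, upper]."""
    return max(0, min(value, upper))


def parse_box(text, image_width, image_height):
    """Extract the first box as (left, top, right, bottom), clamped to the image size."""
    match = BOX_PATTERN.search(text)
    if match is None:
        return None
    x1, y1, x2, y2 = (int(v) for v in match.groups())
    # Order the corners so left <= right and top <= bottom.
    left, right = sorted((x1, x2))
    top, bottom = sorted((y1, y2))
    return (_clamp(left, image_width), _clamp(top, image_height),
            _clamp(right, image_width), _clamp(bottom, image_height))
```

The returned tuple uses the (left, upper, right, lower) order that Pillow's `Image.crop` expects, so the suspected region can be cropped for a radiologist to review.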

B. Retail Analytics

```python
# Test retail analytics with identical prompt
llama_retail, qwen_retail = compare_models("retail_analytics", "shelf.jpg")

"""
=== Testing: RETAIL_ANALYTICS ===
Prompt: Extract all brand names and their corresponding product types from this shelf image.
Image: shelf.jpg

--- Llama 3.2 Vision Analyzing: shelf.jpg ---
Llama 3.2 Vision Response:
Visible brands and products:
- Coca-Cola: Soft drinks/sodas
- Pepsi: Soft drinks/sodas
- Frito-Lay: Snack chips
- Oreo: Cookies/biscuits
- Pringles: Potato chips

==================================================

--- Qwen 2.5-VL Analyzing: shelf.jpg ---
Qwen 2.5-VL Response:
Brand-Product pairs identified:
- Coca-Cola: Carbonated soft drinks (12-pack cans, $7.99)
- Pepsi: Carbonated soft drinks (12-pack cans, $7.99)
- Frito-Lay: Potato chips (Family size, $4.29)
- Oreo: Sandwich cookies (Original, $3.49)
- Pringles: Stackable potato chips (Original flavor, $2.99)
"""
```

Insight: Both models identify brands correctly. Qwen additionally extracts pricing information and specific product details, demonstrating superior OCR capabilities.
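Qwen's extra price details are only useful downstream once they are in structured form. A sketch that parses bullet lines shaped like the samples above ("- Brand: product (details, $price)") into records; the line format comes from this single sample and is an assumption, and prices are kept as `Decimal` to avoid floating-point rounding:

```python
import re
from decimal import Decimal

# Assumed line shape: "- Brand: product" with an optional "(details, $price)" suffix.
LINE_PATTERN = re.compile(
    r"^-\s*(?P<brand>[^:]+):\s*(?P<product>[^(]+?)\s*(?:\((?P<details>[^)]*)\))?$"
)
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")


def parse_shelf(reply):
    """Return a list of {brand, product, price} dicts from a bulleted model reply."""
    records = []
    for line in reply.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if not match:
            continue  # skip headings such as "Brand-Product pairs identified:"
        details = match.group("details") or ""
        price = PRICE_PATTERN.search(details)
        records.append({
            "brand": match.group("brand").strip(),
            "product": match.group("product").strip(),
            "price": Decimal(price.group(1)) if price else None,
        })
    return records
```

Running the same parser on both replies shows the difference directly: Llama's records come back with `price` set to `None`, while Qwen's include prices.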

Technical Performance Comparison

| Aspect | Llama 3.2 Vision | Qwen 2.5-VL |
|---|---|---|
| Max Resolution | 1024x1024 (fixed) | Dynamic (up to 1536x1536) |
| Context Window | 128K tokens | 125K tokens |
| Inference Speed | ~12 tokens/sec (A100 GPU) | ~18 tokens/sec (A100 GPU) |
| VRAM Requirements | 24GB (11B) / 80GB (90B) | 16GB (7B) / 48GB (72B) |
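Tokens-per-second figures depend heavily on GPU, quantization, and image size, so it is worth measuring on your own setup. Ollama's chat responses include `eval_count` (generated tokens) and `eval_duration` (generation time in nanoseconds), and the sketch below derives throughput from them. The helper names are hypothetical, and only `measure` contacts a running Ollama server.

```python
def tokens_per_second(eval_count, eval_duration_ns):
    """Generation throughput from Ollama's eval_count and eval_duration (nanoseconds)."""
    if eval_duration_ns <= 0:
        return 0.0
    return eval_count / (eval_duration_ns / 1e9)


def seconds_for(tokens, rate):
    """Estimated generation time for `tokens` at `rate` tokens per second."""
    return tokens / rate


def measure(model, prompt, image_path):
    """Run one request against a local Ollama server and return its throughput."""
    import ollama  # lazy import: the helpers above work without the library

    response = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": prompt, "images": [image_path]}],
    )
    return tokens_per_second(response["eval_count"], response["eval_duration"])
```

At the table's ~12 tokens/sec, a 300-token response takes about 25 seconds of generation; at ~18 tokens/sec, about 17 seconds. Because `eval_duration` excludes prompt and image processing, end-to-end latency will be higher.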

Benchmark Performance

| Benchmark | Llama 3.2 90B | Qwen 2.5-VL 72B | Ideal For |
|---|---|---|---|
| MMMU (Accounting) | 68% | 72% | Financial document analysis |
| MathVista | 61% | 67% | Visual math problems |
| POPE (Hallucination) | 89% | 92% | Factual accuracy |
| MMBench (Chinese) | 62% | 81% | Cross-lingual understanding |

Model Selection: What to Use and When

Choose Llama 3.2 Vision If:

- You need comprehensive contextual understanding and narrative descriptions

- You work primarily with English-dominant content

- You require robust safety and bias mitigation frameworks

- You need tight integration with the Llama ecosystem

Choose Qwen 2.5-VL If:

- You need precise data extraction and structured outputs

- You work with multilingual content (especially Asian languages)

- You require high-resolution image processing (above 1024px)

- You need superior OCR capabilities for text extraction
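These selection criteria can be encoded as a simple routing rule, for example in a pipeline that sends each job to one of the two local models. This is a hypothetical sketch of the rules of thumb above, not a benchmark-derived policy; the `Task` fields and the 1024px threshold are assumptions taken from the lists and tables in this article.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of an incoming image task."""
    language: str = "en"                  # main language of text in the image
    needs_structured_output: bool = False
    needs_ocr: bool = False
    max_image_side: int = 1024            # longest image side in pixels


def choose_model(task):
    """Pick an Ollama model name following the selection criteria above."""
    if task.language != "en":
        return "qwen2.5vl"                # broader multilingual support
    if task.needs_structured_output or task.needs_ocr:
        return "qwen2.5vl"                # precise extraction and OCR
    if task.max_image_side > 1024:
        return "qwen2.5vl"                # dynamic resolution above 1024px
    return "llama3.2-vision"              # narrative, English-dominant analysis
```

Keeping the rule in one function makes it easy to revise once you have measured both models on your own data.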

Conclusion

Both models excel in different areas when given identical prompts:

- Llama 3.2 Vision provides better contextual understanding and narrative descriptions, making it ideal for applications requiring human-readable analysis.

- Qwen 2.5-VL excels at structured data extraction, multilingual tasks, and precise detail detection, making it well suited to automated systems that require structured outputs.

The choice depends on whether you prioritize contextual understanding (Llama) or precise data extraction (Qwen) for your specific use case.

FAQs

Q1: Which VLM performs better in vision tasks like OCR or VQA?

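A: Qwen 2.5-VL scores higher on every benchmark in the table above and extracted more text in the OCR-style tests, and community feedback points the same way. One user wrote, “Qwen VL2 7B is very strong… Llama 3.2 Vision didn’t impress me” (the comment appears to refer to the earlier Qwen2-VL 7B).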

Q2: How do they compare in benchmark vs real-world performance?

A: Qwen 2.5-VL posts strong scores on academic benchmarks, but one evaluation noted that it “performs strongly on the academic benchmarks and comparatively under-performs in the human evaluation”. Llama 3.2 Vision, by contrast, offers excellent chart and document understanding with robust human-aligned performance.

Q3: How about speed and efficiency?

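A: Reported speed depends on the workload and hardware. The table above shows Qwen 2.5-VL generating more tokens per second on an A100, while user reports on complex tasks such as coding find Llama 3.2 roughly 3× faster end to end (~7 s vs. ~23 s for Qwen), with Qwen 2.5 delivering stronger accuracy.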

Q4: Which one supports longer context windows?

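A: Llama 3.2 Vision supports up to 128K tokens. Qwen 2.5-VL is configured for 32,768 tokens by default; the larger 125K figure in the table above reflects its extended maximum, which depends on how the model is served.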

Q5: What licensing & multilingual support do they provide?

A: Qwen 2.5-VL uses Apache 2.0 and supports 29 languages, dynamic resolution, object localization, and video input. Llama 3.2 Vision is released under the Llama 3.2 Community License; it performs well in OCR and VQA and offers a 128K context, but for image+text tasks it officially supports English only.
