Object tracking in autonomous vehicles: How does it work?

Autonomous vehicles (AVs) have received immense attention in recent years due to their potential to improve driving comfort and reduce injuries from vehicle crashes, since human error is cited as the critical reason for about 94% of accidents.

Autonomous driving has moved from the realm of science fiction to a very real possibility over the past twenty years, largely due to rapid developments in sensor technology, Artificial Intelligence (AI), and Machine Learning (ML).

To drive like humans (or even better), the car needs to see and understand its surroundings just as we do. A crucial part of this is detecting and tracking the objects around it, so that it can judge their motion, identify possible obstacles, and ensure a safe journey.

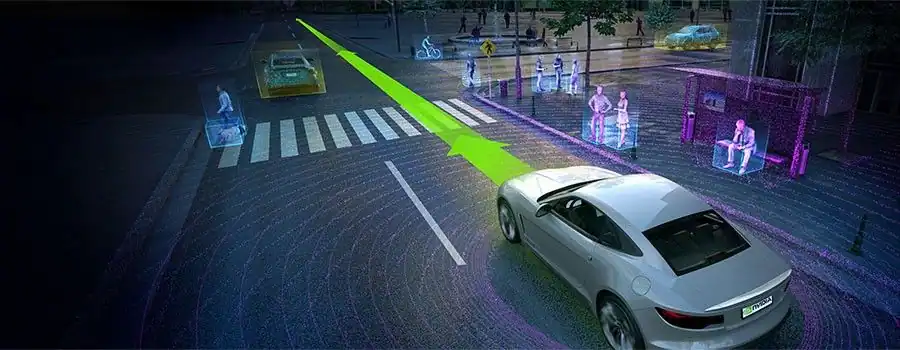

Today’s autonomous vehicles use a combination of cameras and other sensors, such as radar and lidar, to collect relevant information about road conditions and their surroundings. These systems can detect and classify objects such as other cars, pedestrians, and other obstacles, giving the vehicle enough information to choose a safe path and ensure safe driving behavior, as we can also see in the image below.

![]()

Img: Object tracking in Autonomous Vehicles

Introduction

Object tracking is one of the critical components of autonomous driving. Autonomous vehicles rely on the perception of their surroundings to ensure safe and robust driving performance. This perception system uses object detection algorithms to accurately identify and locate objects such as pedestrians, vehicles, and traffic signs in the vehicle's vicinity, and object tracking algorithms to then track and predict the motion of these objects over time.

The goal of object tracking is to follow the position and motion of one or more objects (such as a car or a pedestrian) across consecutive frames. To do this, the state of each object is estimated over time. Typically, the state of an object is represented by its location, velocity, and acceleration at a certain time.
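To make the notion of "state" concrete, here is a minimal sketch assuming a 2D state made of position, velocity, and acceleration, propagated with a constant-acceleration motion model. The `ObjectState` and `predict` names are hypothetical, and real trackers may use other state vectors and motion models.

```python
from dataclasses import dataclass


@dataclass
class ObjectState:
    """Hypothetical 2D object state: position (m), velocity (m/s), acceleration (m/s^2)."""
    x: float
    y: float
    vx: float
    vy: float
    ax: float
    ay: float


def predict(state: ObjectState, dt: float) -> ObjectState:
    """Propagate the state by dt seconds, assuming acceleration stays constant."""
    return ObjectState(
        x=state.x + state.vx * dt + 0.5 * state.ax * dt ** 2,
        y=state.y + state.vy * dt + 0.5 * state.ay * dt ** 2,
        vx=state.vx + state.ax * dt,
        vy=state.vy + state.ay * dt,
        ax=state.ax,
        ay=state.ay,
    )


# A car 20 m ahead, moving at 10 m/s and braking at 2 m/s^2, predicted 0.5 s ahead.
car = ObjectState(x=20.0, y=0.0, vx=10.0, vy=0.0, ax=-2.0, ay=0.0)
print(predict(car, 0.5))  # ObjectState(x=24.75, y=0.0, vx=9.0, vy=0.0, ax=-2.0, ay=0.0)
```

A tracker repeats this prediction every frame and then corrects it with new detections.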

In contrast, object detection detects and locates objects in a single frame: each frame is typically processed independently, and no associations over time are established. You can read more about object detection in our other blog.

Autonomous vehicles have benefited from recent progress in computer vision and deep learning. Deep learning-based object detectors and trackers play a vital role in finding and tracking these objects in real time. We have discussed Deep Learning for Object Detection in more detail in our other blog. (Link to blog)

Let us begin by understanding what object tracking is.

What is object tracking?

Object tracking is a process where the algorithm tracks the movement of an object. In other words, it is the task of estimating or predicting the positions and other relevant information of moving objects in a video.

Object tracking refers to the ability to estimate or predict the position of a target object in each consecutive frame of a video once the initial position of the target object is defined. This means estimating the state of the target object in the scene from previous information. We can see an example in the image below.

![]()

On the other hand, object detection is the process of detecting a target object in an image or a single frame of a video. Object detection only works if the target object is visible in the given input; if the object is occluded, the detector will not be able to find it.

An object tracker, by contrast, is designed to follow the object's trajectory even through occlusions.

To achieve this, objects are generally detected first, and detections of the same object are then associated across time.
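A minimal sketch of that association step, assuming detections arrive as axis-aligned boxes `(x1, y1, x2, y2)`: each existing track is matched to the current-frame detection it overlaps most, measured by intersection over union (IoU). The function names, the greedy matching, and the 0.3 threshold are illustrative assumptions; many trackers solve the same assignment optimally with the Hungarian algorithm instead.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: List[Box], detections: List[Box], min_iou: float = 0.3) -> List[Tuple[int, int]]:
    """Greedily pair (track index, detection index), highest IoU first."""
    pairs = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(detections)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, ti, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if ti in used_t or di in used_d:
            continue  # each track and each detection is used at most once
        used_t.add(ti)
        used_d.add(di)
        matches.append((ti, di))
    return matches


tracks = [(0, 0, 10, 10), (50, 50, 60, 60)]      # boxes from the previous frame
detections = [(52, 51, 62, 61), (1, 1, 11, 11)]  # boxes detected in the current frame
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```

Detections left unmatched would typically start new tracks, and tracks left unmatched are candidates for occlusion handling.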

Generally, object tracking is divided into various categories based on input type or number of objects:

1. Based on Input Type:

- Image Tracking: The process of detecting and locating objects in photos. To achieve this, features for objects are identified and stored, which can later be matched to identify the same object in other photos.

- Video Tracking: The process of detecting and locating objects in videos. This can be applied to any type of video input, including live or recorded footage. To achieve this, the videos are divided into multiple frames and image tracking is applied to each frame.

2. Based on Number of Objects:

- Single Object Tracking: A single object is detected and tracked across multiple frames.

- Multiple Object Tracking: Multiple objects are detected and tracked across multiple frames simultaneously.

Refer to this link to learn more: Overview | Object Tracking

The applications of object tracking in computer vision are extensive and diverse, including surveillance cameras that can monitor traffic flows or people walking down the street. It also plays a very important role in self-driving cars. Let us try to understand why.

Why do we need object tracking in autonomous vehicles?

Object tracking is used in a variety of ways to help recognize and understand objects within footage. This includes both still images and videos, whether live streams or pre-recorded clips from cameras positioned around an environment that needs to be analyzed for activity, such as people walking through it.

Tracking other traffic participants is a very important task for autonomous driving. Consider, for instance, the braking distance of a vehicle, which increases quadratically with its speed. Because of this, possible collisions with other traffic participants must be detected early. This is only possible with good predictions of their future trajectories, which can be achieved using object tracking.
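To see why the quadratic growth matters, here is a small worked example of stopping distance, computed as v·t_r + v²/(2a): the distance covered during the reaction time t_r plus the braking distance at constant deceleration a. The 1 s reaction time and 7 m/s² deceleration are illustrative assumptions, not vehicle data.

```python
from typing import Tuple


def stopping_distance(speed_kmh: float, reaction_time_s: float = 1.0,
                      decel_ms2: float = 7.0) -> Tuple[float, float, float]:
    """Return (reaction distance, braking distance, total) in metres.

    The braking term v^2 / (2a) assumes constant deceleration; both default
    parameter values are assumptions chosen for illustration.
    """
    v = speed_kmh / 3.6  # km/h -> m/s
    reaction = v * reaction_time_s
    braking = v ** 2 / (2 * decel_ms2)
    return reaction, braking, reaction + braking


for kmh in (30, 60, 120):
    r, b, total = stopping_distance(kmh)
    print(f"{kmh:>3} km/h: reaction {r:5.1f} m + braking {b:5.1f} m = {total:5.1f} m")
```

Doubling the speed from 60 to 120 km/h doubles the reaction distance but quadruples the braking distance, which is why hazards have to be predicted further ahead at higher speeds.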

Tracking, combined with the classification of traffic participants, allows the vehicle to adapt its speed accordingly. In addition, tracking other cars can be used for automatic distance control and to anticipate possible driving maneuvers of other traffic participants (such as overtaking) early on.
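As a sketch of how a tracked velocity can feed distance control, the snippet below computes time-to-collision (TTC), the gap divided by the closing speed, together with the time gap to the car ahead. The function names and the 4 s / 2 s thresholds are hypothetical; production systems use more elaborate control logic.

```python
import math


def time_to_collision(gap_m: float, ego_speed_ms: float, lead_speed_ms: float) -> float:
    """Seconds until the ego car reaches the tracked lead car at current speeds.

    lead_speed_ms would come from the tracker's velocity estimate.
    Returns math.inf when the gap is not closing.
    """
    closing_speed = ego_speed_ms - lead_speed_ms
    return gap_m / closing_speed if closing_speed > 0 else math.inf


def should_slow_down(gap_m: float, ego_speed_ms: float, lead_speed_ms: float,
                     min_ttc_s: float = 4.0, min_time_gap_s: float = 2.0) -> bool:
    """Hypothetical rule: slow down if TTC or time gap falls below a threshold."""
    ttc = time_to_collision(gap_m, ego_speed_ms, lead_speed_ms)
    time_gap = gap_m / ego_speed_ms if ego_speed_ms > 0 else math.inf
    return ttc < min_ttc_s or time_gap < min_time_gap_s


# Ego car at 25 m/s, tracked car 30 m ahead at 15 m/s.
print(time_to_collision(30.0, 25.0, 15.0))  # 3.0
print(should_slow_down(30.0, 25.0, 15.0))   # True
```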

Now, let’s try to understand how object tracking is achieved.

How is object tracking done?

Object tracking usually involves the process of object detection first. Here’s an overview of the steps involved:

1. Object detection:

- In the first step, the algorithm detects and classifies the object of interest and creates a bounding box around it in the initial frame of the video.

- The tracker must then estimate or predict the object’s position in the remaining frames while drawing the bounding box, as we can also see in the image below.

![]()

Img (GIF): Object Detection using Computer Vision

2. Appearance Modeling:

- This step deals with modeling the visual appearance of the object. It involves extracting features of each object and assigning a unique identifier (ID) to each one.

- As the target object moves through different scenes, changes in lighting conditions, viewing angle, speed, etc. can alter its appearance, which may lead to incorrect associations and cause the algorithm to lose track of the object.

- Appearance modeling therefore has to be designed so that the modeling algorithms can capture the various changes and distortions introduced as the target object moves.

- Appearance modeling consists of two components:

  - Visual representation: It focuses on constructing robust features and representations that can describe the object.

  - Statistical modeling: It uses statistical learning techniques to build mathematical models that identify the object effectively.

3. Tracking the object:

- This step involves tracking the detected object as it moves through frames while storing the relevant information.

- This can be divided into two steps:

  - Motion estimation: The model predicts the object’s future position. To do this, the object is detected across several frames, its change in position over time is observed, and its direction and velocity are estimated.

  - Target positioning: Motion estimation approximates the region where the object is most likely to be present. Once this region is known, a visual model can be used to lock down the exact location of the target.
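One common way to implement motion estimation and target positioning is a Kalman filter: its predict step estimates where the object should be, and its update step corrects that estimate with the detected position. This is a minimal 1D sketch; the constant-velocity model, the simplified process noise, and all noise values are assumptions, and the class name is hypothetical.

```python
import numpy as np


class ConstantVelocityKF:
    """Minimal 1D Kalman filter with state [position, velocity] (an assumed model)."""

    def __init__(self, x0: float, dt: float = 0.1, process_var: float = 1.0, meas_var: float = 0.5):
        self.x = np.array([x0, 0.0])                # state estimate: position, velocity
        self.P = np.eye(2) * 10.0                   # state covariance (uncertain start)
        self.F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity transition
        self.Q = np.eye(2) * process_var * dt       # simplified process noise
        self.H = np.array([[1.0, 0.0]])             # only position is measured
        self.R = np.array([[meas_var]])             # measurement noise

    def predict(self) -> float:
        """Motion estimation: project the state one time step forward."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return float(self.x[0])

    def update(self, z: float) -> float:
        """Target positioning: correct the prediction with a detected position z."""
        y = np.array([z]) - self.H @ self.x         # innovation (measurement residual)
        S = self.H @ self.P @ self.H.T + self.R     # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)    # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return float(self.x[0])


kf = ConstantVelocityKF(x0=0.0, dt=0.1)
rng = np.random.default_rng(0)
for k in range(1, 51):
    kf.predict()                                       # where should the object be now?
    detection = 2.0 * k * 0.1 + rng.normal(0.0, 0.3)   # noisy detection, object moving at 2 m/s
    kf.update(detection)                               # correct with the detection
print(f"position {kf.x[0]:.2f} m, velocity {kf.x[1]:.2f} m/s")
```

When no detection is available for a frame (for example during an occlusion), a tracker can call only `predict()` and skip `update()`, letting the track coast on its motion model.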

Although object detectors can be used to track objects if they are applied frame by frame, this is computationally expensive and therefore a rather inefficient way of performing object tracking. Instead, object detection can be applied once, and the object tracker can then handle every frame after the first. This is a more computationally efficient and less cumbersome way of performing object tracking.
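A minimal sketch of this scheduling idea, assuming you supply your own `detect` and `track` callables (hypothetical here). The hypothetical `redetect_every` parameter generalizes the approach: `0` detects only on the first frame, as described above, while a positive value re-runs the detector periodically.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def run_tracking(
    frames: Sequence[Frame],
    detect: Callable[[Frame], List[tuple]],
    track: Callable[[Frame, List[tuple]], List[tuple]],
    redetect_every: int = 0,
) -> List[List[tuple]]:
    """Run the expensive detector only on selected frames and the cheaper tracker elsewhere.

    redetect_every=0 means: detect on the first frame only, then track.
    """
    results: List[List[tuple]] = []
    boxes: List[tuple] = []
    for i, frame in enumerate(frames):
        if i == 0 or (redetect_every > 0 and i % redetect_every == 0):
            boxes = detect(frame)        # expensive: full object detection
        else:
            boxes = track(frame, boxes)  # cheap: update the previous boxes
        results.append(boxes)
    return results


# Stub detector and tracker that only count how often they are called.
calls = {"detect": 0, "track": 0}


def fake_detect(frame):
    calls["detect"] += 1
    return [(0, 0, 10, 10)]


def fake_track(frame, boxes):
    calls["track"] += 1
    return [(x1 + 1, y1, x2 + 1, y2) for x1, y1, x2, y2 in boxes]  # pretend the object moved right


run_tracking(range(100), fake_detect, fake_track, redetect_every=25)
print(calls)  # {'detect': 4, 'track': 96}
```

Periodic re-detection trades some extra computation for the ability to pick up new objects and correct tracker drift.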

Object tracking has benefited strongly from the success of deep learning in object detection. Recently, several approaches have also proposed end-to-end learning of multi-object tracking. Convolutional Neural Networks (CNNs) remain the most widely used and reliable networks for object tracking.

To learn more: Object Tracking from scratch with OpenCV and Python; Object Tracking with Opencv and Python

Tracking systems must cope with a variety of challenges such as cluttered backgrounds, the variety and complexity of motion, and occlusions. We’ll discuss a few such challenges in the next section.

Object tracking software is a vital component of autonomous vehicle technology, enabling real-time detection of objects on the road and prediction of their movements. By leveraging advanced algorithms and deep learning models, such software can maintain precise tracking even in complex scenarios like crowded environments. High-quality annotated data is crucial for training these models to recognize diverse objects under varying conditions.

Challenges

Associating instances of the same object over time is particularly challenging because different objects, especially of the same class, can look alike. Beyond this lack of discriminative information, instances of the same object might not look similar enough across time steps to be associated.

Objects are often partially or fully occluded by other objects or by themselves. The interaction of objects, especially in the case of pedestrians, further increases the amount of occlusion and makes it difficult to track each individual object. We can see an example of this in the image below.
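A common way to survive short occlusions is to keep an unmatched track alive for a few frames before deleting it, and to report a track only after it has been matched several times, which also suppresses one-off false positives. The sketch below shows this lifecycle; the names are hypothetical, and the `max_age`/`min_hits` idea mirrors the similarly named parameters of the open-source SORT tracker.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]
_next_id = count(1)  # hypothetical global ID generator


@dataclass
class Track:
    box: Box
    track_id: int = field(default_factory=lambda: next(_next_id))
    hits: int = 1    # frames with a matched detection
    misses: int = 0  # consecutive frames without one

    def mark_matched(self, box: Box) -> None:
        self.box = box
        self.hits += 1
        self.misses = 0

    def mark_missed(self) -> None:
        self.misses += 1  # keeps the last box; a motion model could predict it instead


def update_lifecycle(tracks: List[Track], matches: Dict[int, Box], max_age: int = 5) -> List[Track]:
    """Apply one frame of matches (track_id -> detected box); drop tracks missing too long."""
    for t in tracks:
        if t.track_id in matches:
            t.mark_matched(matches[t.track_id])
        else:
            t.mark_missed()
    return [t for t in tracks if t.misses <= max_age]


def confirmed(tracks: List[Track], min_hits: int = 3) -> List[Track]:
    """Only report tracks seen often enough to be trusted."""
    return [t for t in tracks if t.hits >= min_hits]


# A pedestrian is detected in two frames, then occluded for three.
ped = Track(box=(100, 50, 120, 100))
tracks = [ped]
for frame_matches in [{ped.track_id: (102, 50, 122, 100)}, {ped.track_id: (104, 50, 124, 100)}, {}, {}, {}]:
    tracks = update_lifecycle(tracks, frame_matches)
print([(t.track_id, t.hits, t.misses) for t in tracks])  # [(1, 3, 3)]
```

The track survives the occlusion and keeps its ID, so a later detection of the same pedestrian can be matched back to it.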

![]()

Img: Object Detection in Crowded Scenes

Tracking pedestrians is of particular importance for autonomous driving. However, identifying pedestrians remains difficult, especially because of their complex motion, small size, varied shapes, and false positives from detection systems.

In the case of pedestrians and bicyclists, it is particularly difficult to predict future behavior because they can abruptly change direction. This is why humans tend to drive more carefully around them. Similarly, tracking combined with the classification of traffic participants allows the vehicle to adapt its speed accordingly.

Difficult lighting conditions and reflections in mirrors or windows pose additional challenges.

Background distractions are another common source of error. With a blurry or single-color background, it is easier for an AI system to detect and track objects. Backgrounds that are too busy, too cluttered, or the same color as the object can make it hard to track a small or lightly colored object.

Object tracking algorithms are expected not only to detect and localize objects of interest accurately but also to do so as quickly as possible. Improving tracking speed is especially important for real-time object tracking models. This is one reason deep learning algorithms, such as CNNs, have been applied to the problem.

Another big challenge, particularly for deep learning algorithms, is the scarcity of labeled data. As a result, many such models are trained on synthetic data and can lack accuracy on real-world scenes. One solution is to train the model initially on a large synthetic dataset and later fine-tune it on a small amount of real data.

Summary

Reliable tracking-by-detection can only be achieved by using very accurate object detections.
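One way to see why detection quality matters so much is the widely used CLEAR MOT accuracy metric, MOTA = 1 - (FN + FP + IDSW) / GT, summed over all frames. Missed detections (FN) and false detections (FP) count directly against the tracker, alongside identity switches (IDSW). The counts below are made up for illustration.

```python
def mota(false_negatives: int, false_positives: int, id_switches: int, ground_truth: int) -> float:
    """Multiple Object Tracking Accuracy (CLEAR MOT), with counts summed over all frames."""
    if ground_truth <= 0:
        raise ValueError("ground_truth must be positive")
    return 1.0 - (false_negatives + false_positives + id_switches) / ground_truth


# Same tracker and number of ID switches, two detectors of different quality.
print(f"weaker detector:   {mota(150, 50, 10, 1000):.2f}")
print(f"stronger detector: {mota(50, 20, 10, 1000):.2f}")
```

With identical association behavior, the better detector alone raises MOTA from 0.79 to 0.92 in this hypothetical example.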

As with detection, tracking pedestrians is typically more challenging than tracking cars. The reason is the complex, hard-to-predict motion of pedestrians, in contrast to the rigid motion of cars, which are bound to the road and behave less erratically due to their large mass and dynamic constraints.

In traffic scenes, detectors frequently fail on partially or fully occluded objects. In these cases, the tracking system needs to re-identify the tracked objects later, but this can be difficult due to changes in lighting conditions or similarity to other nearby objects.
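Re-identification is often done by comparing appearance feature vectors (embeddings) of a new detection against those stored for recently lost tracks. This is a minimal sketch using cosine similarity; the toy 4-D vectors, the function names, and the 0.8 threshold are assumptions, and a real system would obtain embeddings from a trained re-identification network.

```python
from typing import Dict, Optional

import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def reidentify(query: np.ndarray, lost_tracks: Dict[int, np.ndarray],
               threshold: float = 0.8) -> Optional[int]:
    """Return the ID of the lost track whose stored appearance best matches, or None."""
    best_id, best_sim = None, threshold
    for track_id, embedding in lost_tracks.items():
        sim = cosine_similarity(query, embedding)
        if sim >= best_sim:
            best_id, best_sim = track_id, sim
    return best_id


# Toy embeddings for two tracks that were lost during an occlusion.
lost = {7: np.array([0.9, 0.1, 0.0, 0.4]), 12: np.array([0.0, 0.8, 0.6, 0.0])}
new_detection = np.array([0.85, 0.15, 0.05, 0.45])
print(reidentify(new_detection, lost))  # 7
```

The threshold matters: set too low, similar-looking objects get swapped; set too high, lighting changes prevent re-identification, which are exactly the difficulties described above.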

Furthermore, most tracking systems comprise complex pipelines, and very few end-to-end multi-target tracking algorithms have been proposed. Bridging this gap from detection to tracking with the goal of a generic and end-to-end trainable model is an important direction for future research.

The main challenge is to strike a balance between computational efficiency and performance.

Despite all the hype around self-driving cars, there is little realistic chance that full self-driving will be available before 2030, and even then most probably only in a small number of top-of-the-range sedans and SUVs.

How Labellerr Supports Object Tracking Software Development

Labellerr provides precise and scalable data annotation solutions specifically designed to enhance object tracking software for autonomous vehicles, helping developers train more accurate and reliable AI models. Contact us today for a free demo and accelerate your project’s success.
