ML Expert's Guide to Building an Object Recognition AI Model

Introduction

The home services industry is facing a major problem with the accuracy and efficiency of object recognition in its operations. Home services include cleaning, maintenance, repair, and installation, which require various tools, equipment, and products.

However, due to the high number of items used in these services, it can be difficult for service providers to identify and track them accurately, leading to errors and delays in the delivery of services.

The impact of inaccurate object recognition in home services can be significant in both time and money.

For example, consider a scenario where a cleaning service provider fails to recognize a specific type of stain remover and uses an inappropriate one, worsening the stain and creating the need for additional cleaning. This increases service time, cost, and customer dissatisfaction.

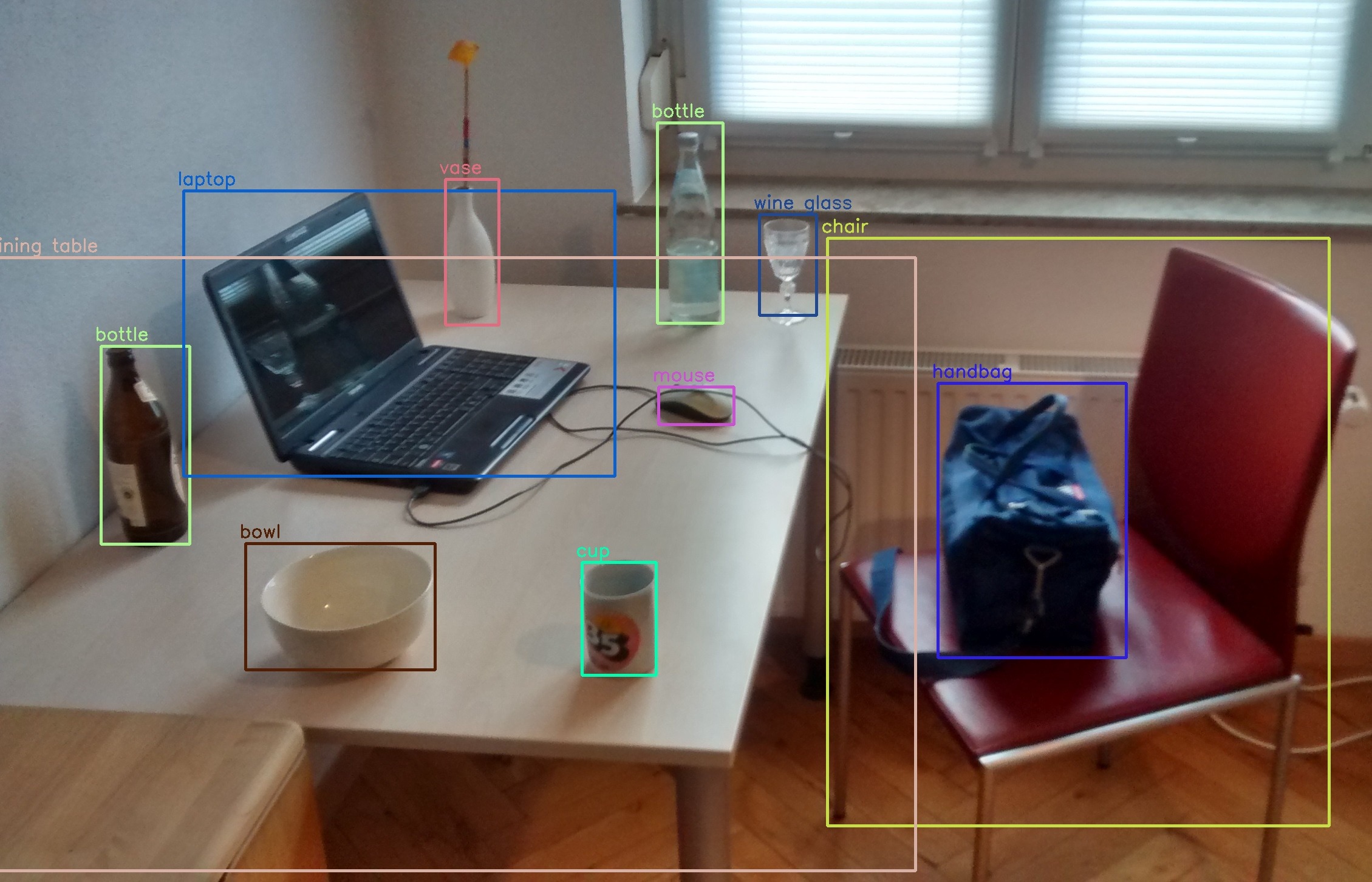

Figure: Object detection utilization for detecting home objects

According to a report by MarketsandMarkets, the global computer vision market size is expected to grow from $10.9 billion in 2019 to $17.4 billion by 2024, at a CAGR of 7.8%. This indicates the growing demand for computer vision technology in various industries, including home services, where it can save time and money.

Computer vision can help solve the problem of object recognition in home services by providing a more accurate and efficient way to identify and track objects. Using machine learning algorithms and deep neural networks, computer vision models can be trained to recognize and classify objects used in home services, such as cleaning products, tools, and equipment.

One company that uses machine learning and computer vision to solve problems in home services is Streem.

Streem is a technology company specializing in remote communication and collaboration solutions, primarily for the on-site services industry. Their platform combines augmented reality (AR) and computer vision technologies to enable remote experts to diagnose and resolve customer issues without being physically present.


Figure: Streem's Webpage

Prerequisites

To follow the CNN-based approach for detecting home objects, you should be familiar with the following:

- Python: The tutorial below is written in Python.

- TensorFlow: TensorFlow is a free, open-source machine learning and artificial intelligence software library. It can be used for various tasks but is most commonly employed for deep neural network training and inference.

- Keras: Keras is a Python interface for artificial neural networks and is open-source software. Keras serves as an interface for the TensorFlow library.

- Kaggle: Kaggle is a platform for data science competitions where users can work on real-world problems, build their skills, and compete with other data scientists.

Apart from the tools listed above, you should be familiar with a few theoretical concepts to follow the tutorial below.

Transfer Learning

Transfer learning is a machine learning technique that adapts a pre-trained model to a new task.

This technique avoids the need to start training from scratch, because it reuses the knowledge a model acquired while solving an earlier problem, typically by training on a large dataset.

The pre-trained model can be a general-purpose model trained on a large dataset like ImageNet or a specific model trained for a similar task. The idea behind transfer learning is that the features learned by the pre-trained model are often relevant to the new task and can be used as a starting point for fine-tuning the model on the new dataset.

Transfer learning has proven highly effective in various applications, including computer vision, natural language processing, and speech recognition.

EfficientNetB4

EfficientNetB4 is a convolutional neural network architecture introduced by Mingxing Tan and Quoc V. Le in their 2019 paper "EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks." It is part of a family of EfficientNets designed to balance model size, computational resources, and accuracy.

EfficientNetB4 has roughly 19 million parameters. The EfficientNet family achieved state-of-the-art accuracy on ImageNet, a large-scale benchmark for image classification, when it was introduced. It uses a compound scaling method that scales network width, depth, and input resolution together in a balanced way, improving accuracy without requiring excessive computational resources.

Figure: EfficientNetB4 architecture
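The compound scaling rule described above can be sketched in a few lines. The base coefficients below are the ones reported in the EfficientNet paper for the B0 baseline (depth 1.2, width 1.1, resolution 1.15); the function name and the choice of `phi` values are illustrative assumptions, and this is not the code Keras uses to build EfficientNetB4.

```python
# Base coefficients reported in the EfficientNet paper (found by a small
# grid search on the B0 baseline). They satisfy alpha * beta^2 * gamma^2 ~= 2.
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width multiplier base
GAMMA = 1.15  # resolution multiplier base


def compound_scale(phi: float) -> dict:
    """Return the depth, width, and resolution multipliers for a given phi.

    FLOPs grow roughly with depth * width^2 * resolution^2, so each unit
    increase of phi roughly doubles the compute budget.
    """
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    flops = depth * width ** 2 * resolution ** 2
    return {"depth": depth, "width": width, "resolution": resolution, "flops": flops}


if __name__ == "__main__":
    # phi values here are arbitrary examples, not the official per-model settings.
    for phi in (0, 1, 2, 3):
        s = compound_scale(phi)
        print(f"phi={phi}: depth x{s['depth']:.2f}, width x{s['width']:.2f}, "
              f"resolution x{s['resolution']:.2f}, FLOPs x{s['flops']:.2f}")
```

The point of the single coefficient `phi` is that all three dimensions grow together, rather than making the network only deeper or only wider.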

GELU Activation Function

GELU (Gaussian Error Linear Unit) is a smooth approximation of the ReLU function. It is defined as the product of the input and the cumulative distribution function (CDF) of the Gaussian distribution with mean 0 and variance 1.

The main advantage of GELU over activation functions such as ReLU is that it is smooth and non-monotonic: small negative inputs produce small negative outputs instead of being clipped to zero, which can help the network learn more complex patterns. Unlike sigmoid, GELU is not confined to a fixed range. It is bounded below (its minimum is roughly -0.17) and unbounded above, so it does not saturate for large positive inputs.
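To make the definition concrete, here is a minimal sketch that evaluates GELU directly from its definition (the input multiplied by the standard normal CDF), using only the Python standard library. The function names are illustrative and are not taken from the tutorial's notebook.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x times the standard normal CDF evaluated at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def relu(x: float) -> float:
    """ReLU, shown for comparison."""
    return max(0.0, x)


if __name__ == "__main__":
    for x in (-3.0, -2.0, -0.75, 0.0, 1.0, 3.0):
        print(f"x={x:+.2f}  relu={relu(x):.4f}  gelu={gelu(x):+.4f}")
```

For large positive inputs GELU tracks ReLU closely. For negative inputs it dips slightly below zero and then returns toward zero, which is where the curve is non-monotonic.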

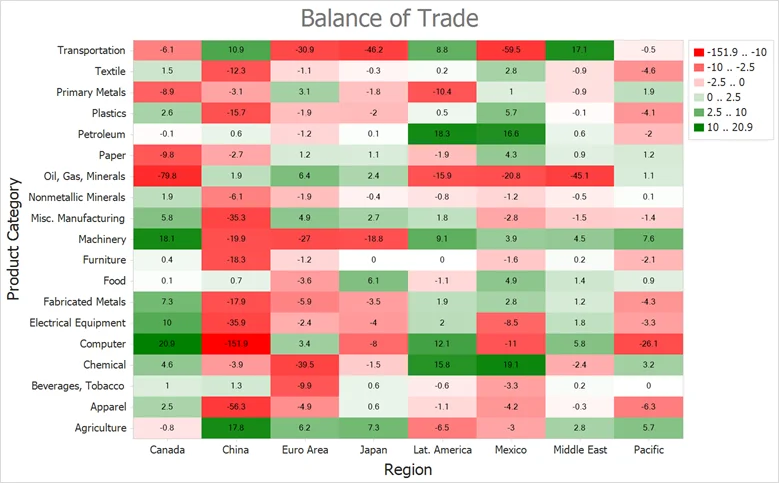

For analysis of our model's performance, we have used HeatMaps.

HeatMaps

A heatmap is a data visualization technique that represents the magnitude of a variable as a color in a two-dimensional (2D) matrix. In a heatmap, each row and column of the matrix represents a different variable, and the cells are colored according to the value of the corresponding variable. The color scale used in the heatmap is chosen to highlight patterns or trends in the data.

Heatmaps are commonly used to visualize correlations between variables, identify clusters or patterns in data, and highlight areas of high or low activity in a dataset. They are widely used in various fields, including biology, finance, and data science.

Figure: Example image of Heatmaps

Methodology

To proceed with our object detection system for improved home services:

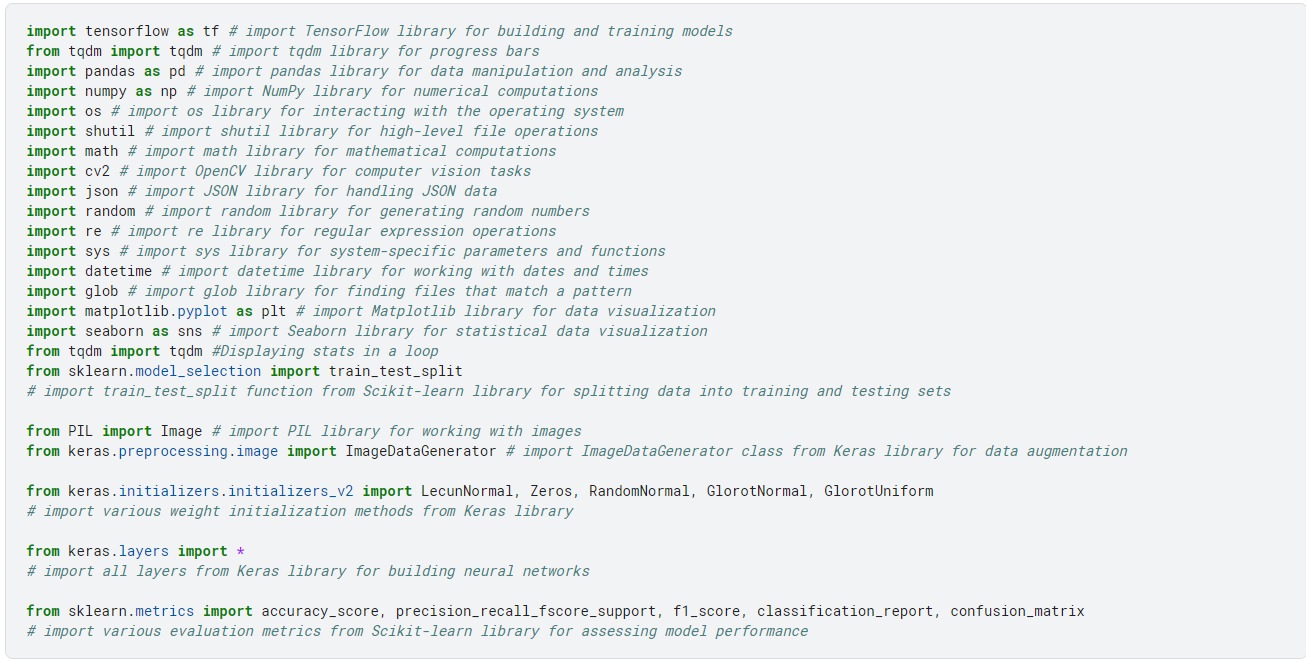

- We begin by importing all the required libraries.

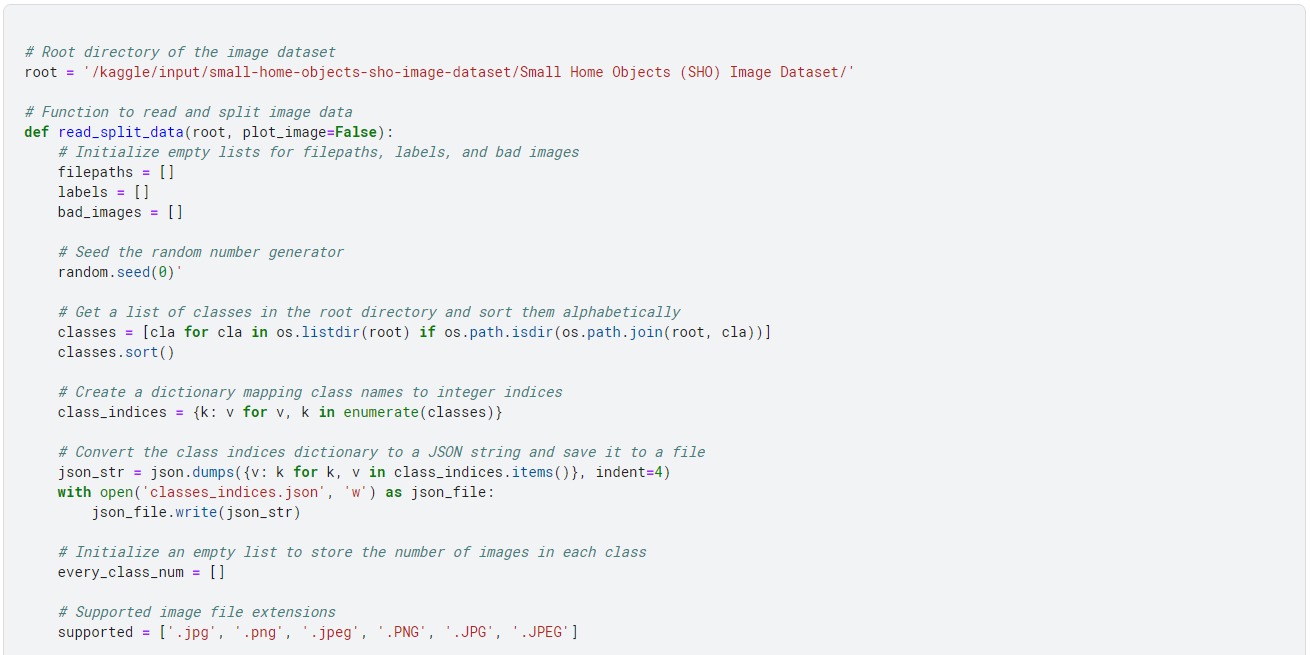

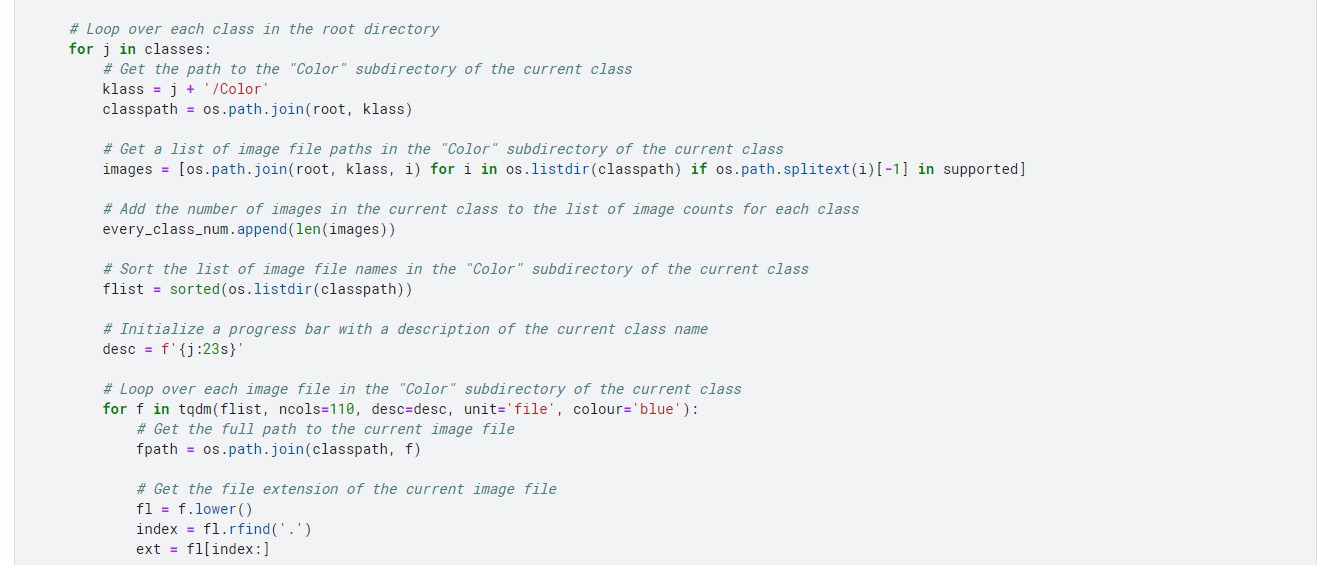

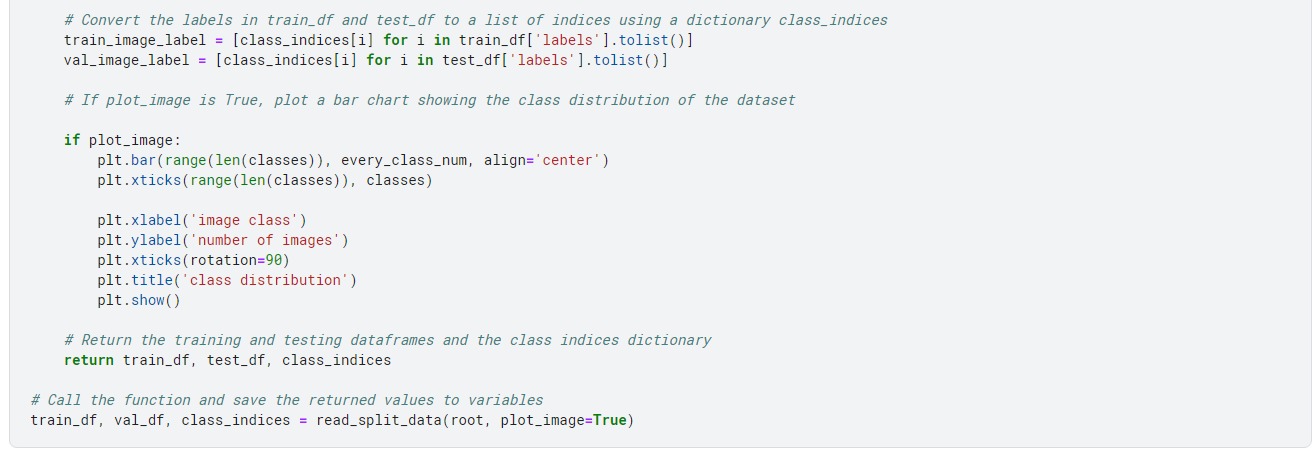

- Next, we read the image data. We define a function that loops over each class folder, collects the image files, and builds a pandas DataFrame containing the file paths and labels.

- We then split the dataset into train and test sets, convert the labels to a list of indices, and create a sample DataFrame.

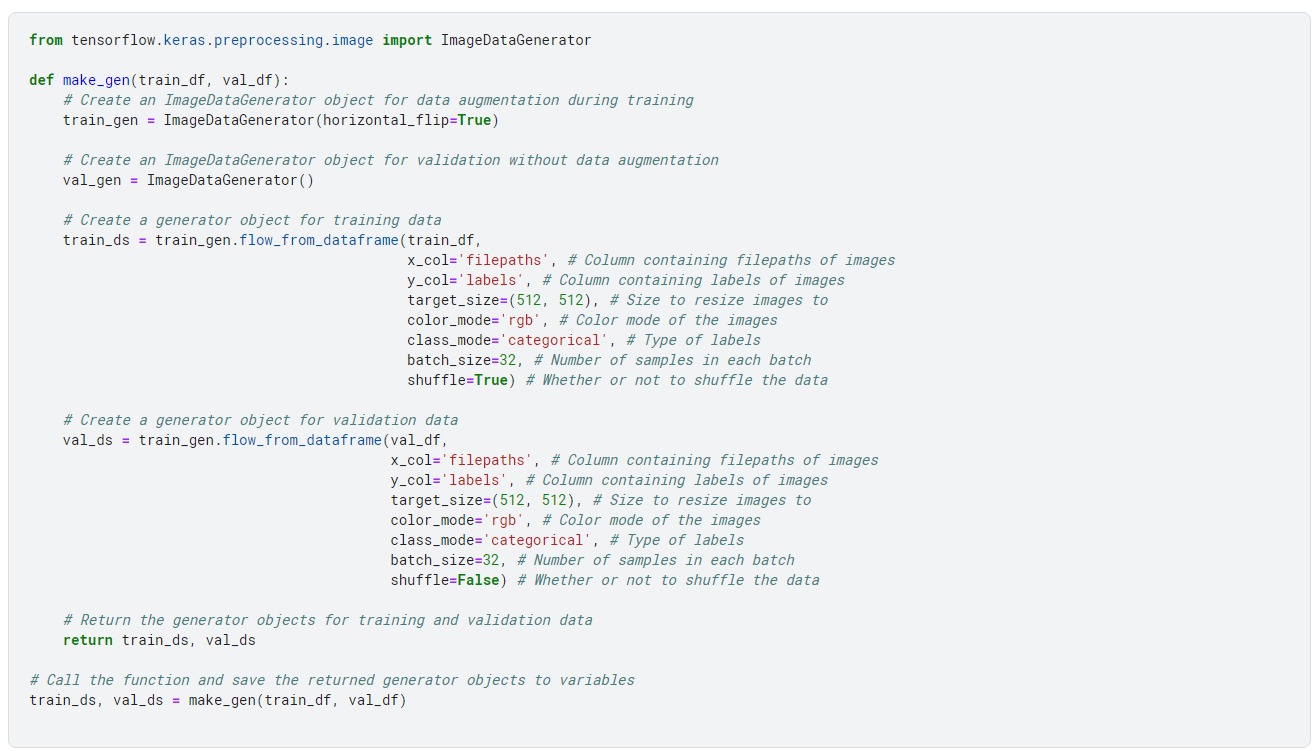

- Next, we create generator objects for training and validation data using the Keras ImageDataGenerator with specified image augmentation techniques and parameters.

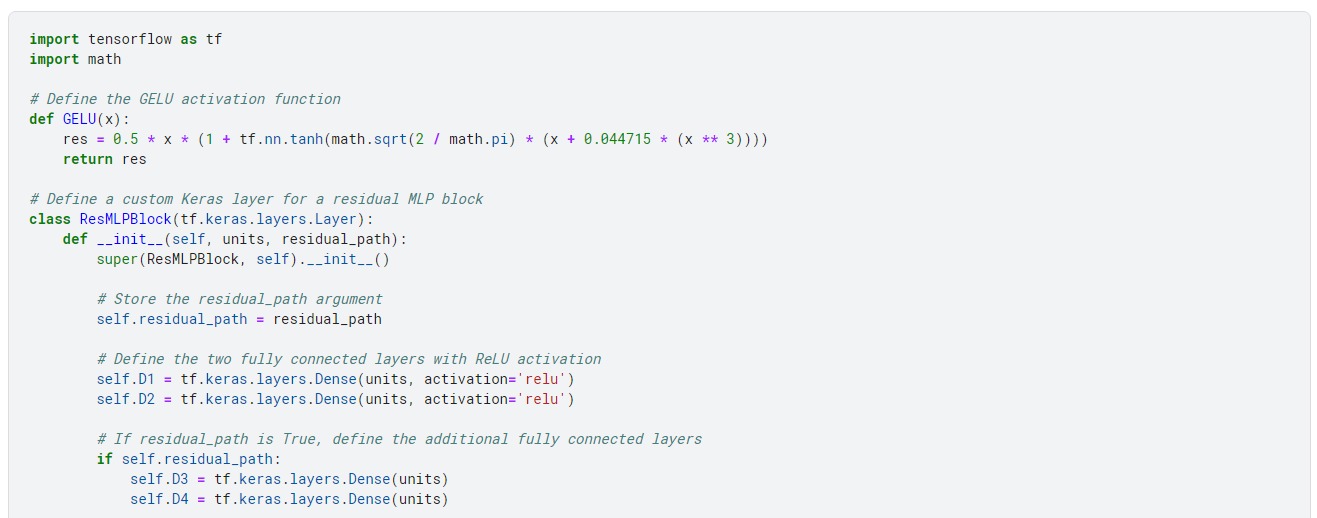

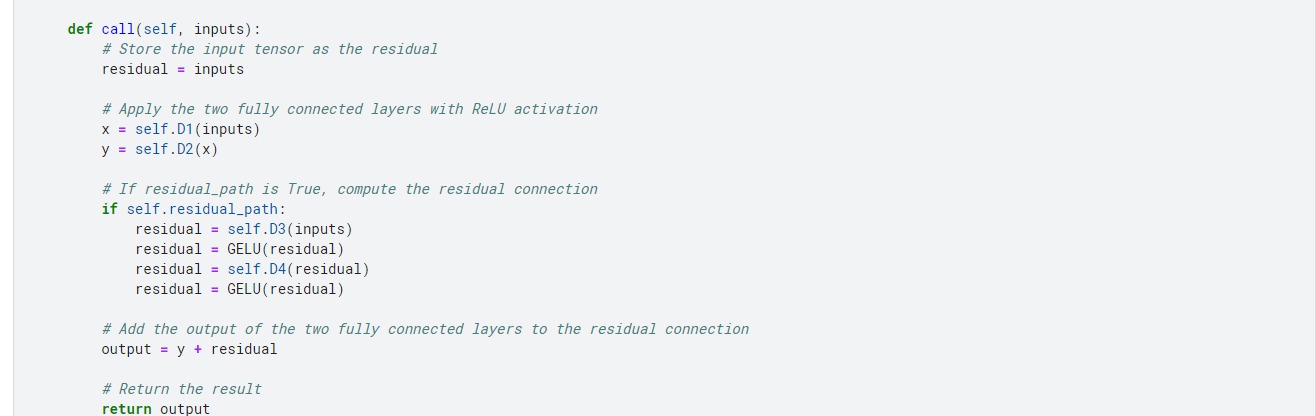

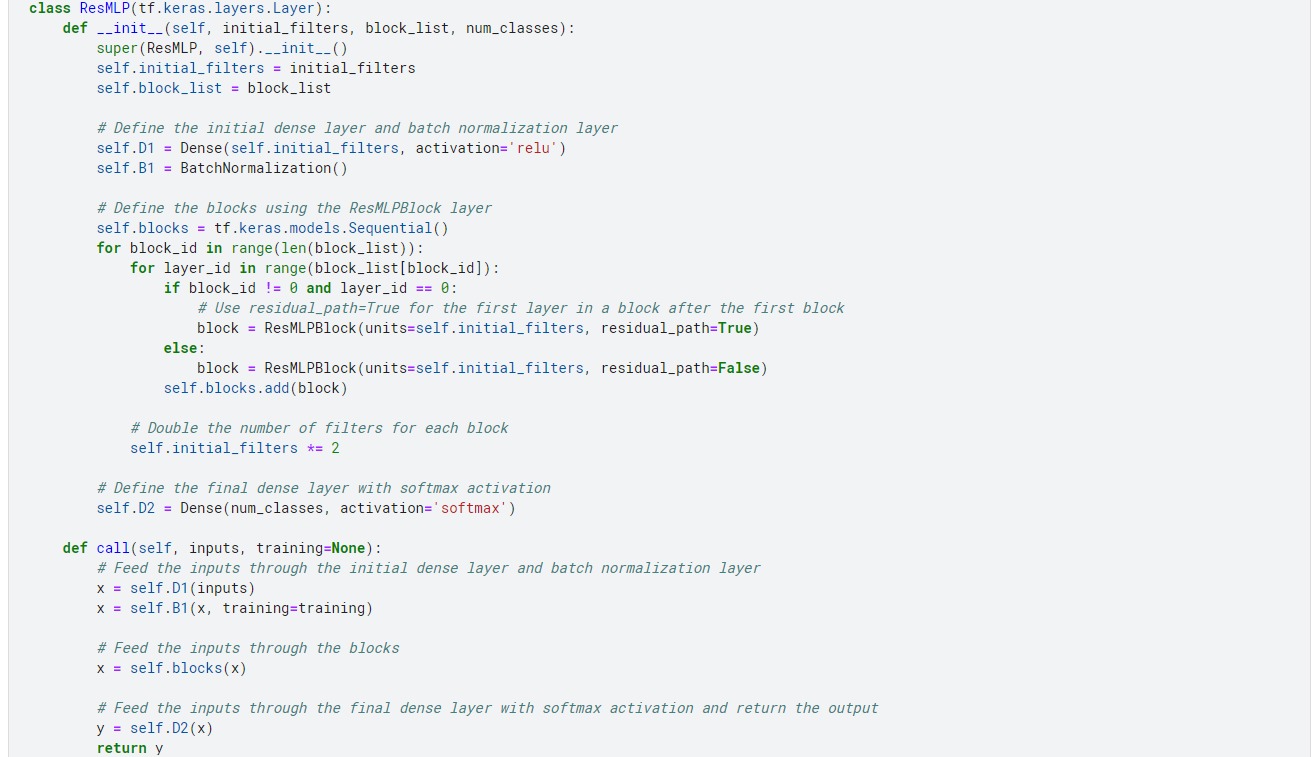

- Next, we create our model for defining a custom ResMLP model in TensorFlow, consisting of a series of ResMLPBlock layers with Gaussian Error Linear Unit activation function and residual connections and a final dense layer with softmax activation.

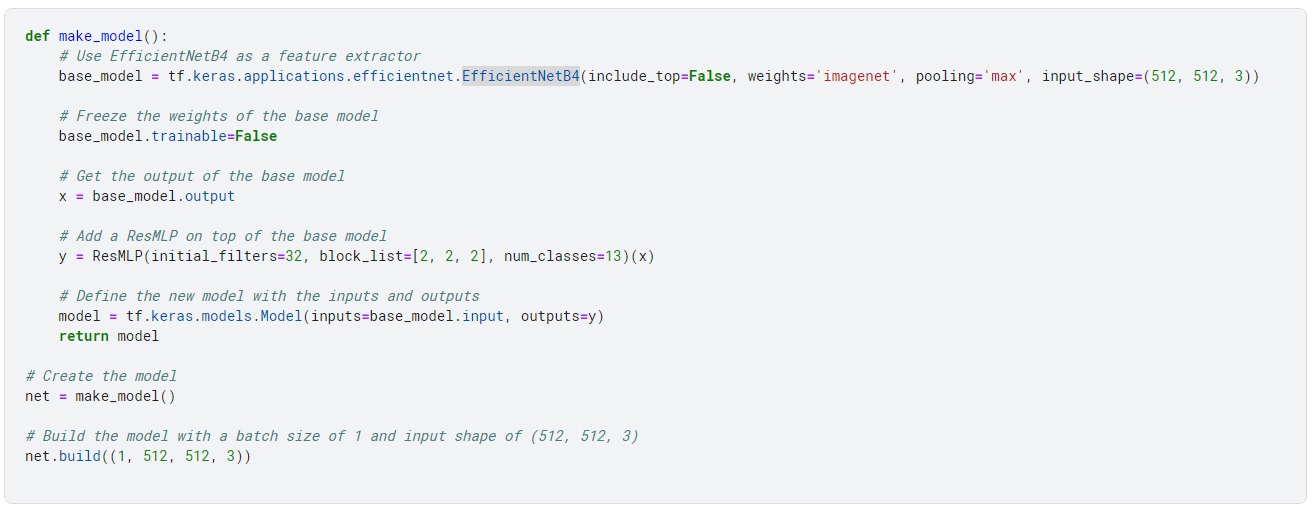

- We import the base model EfficientNetb4 architecture to overlay the residual multilayer perceptron network.

- Finally, we train our model and perform analysis over test images.


Figure: Flowchart for Methodology (Image by author)

Dataset Selection

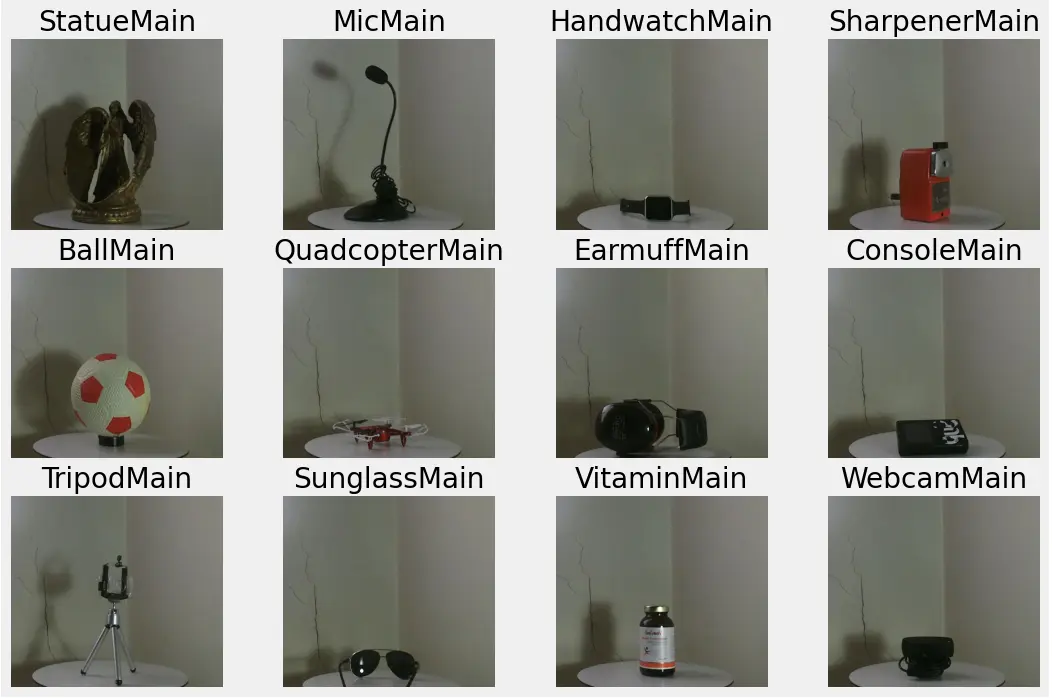

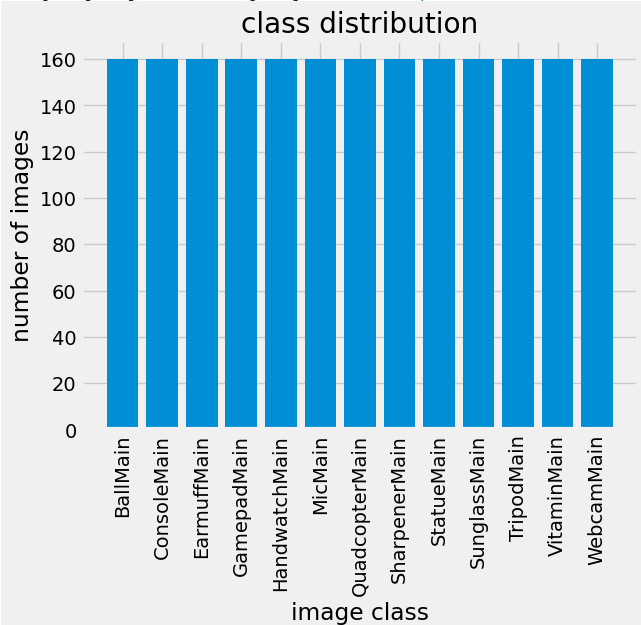

For this project, we have used the Small Home Objects (SHO) Image Dataset available on Kaggle. This dataset contains 4,160 color and depth images across 13 object categories. Each class has 320 samples (160 color and 160 depth). The dataset was captured using the Microsoft Kinect sensor V.2. The categories are: Ball, Console, Earmuff, Gamepad, Hand watch, Microphone, Quadcopter, Sharpener, Statue, Sunglass, Tripod, Vitamin, and Webcam.

Figure: Sample images from Dataset


Further, we analyzed the class distribution. All 13 classes contain an equal number of images, i.e., 320 per class, so the data is uniformly distributed across classes.
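A class distribution check like the one described above can be sketched with the standard library. The helper names are hypothetical, and `labels` is assumed to be a plain list with one class name per image.

```python
from collections import Counter


def class_distribution(labels):
    """Count how many samples belong to each class."""
    return Counter(labels)


def is_uniform(counts):
    """Return True when every class has the same number of samples."""
    return len(set(counts.values())) <= 1


if __name__ == "__main__":
    # Toy labels standing in for the real dataset.
    labels = ["Ball"] * 320 + ["Tripod"] * 320 + ["Webcam"] * 320
    counts = class_distribution(labels)
    for name, count in sorted(counts.items()):
        print(f"{name}: {count}")
    print("uniform:", is_uniform(counts))
```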

Implementation

Environment Setup

Note the following points before starting the tutorial.

- The code below was written in a Kaggle notebook, so you need a Kaggle account. If you do not have one, sign up and create an account first.

- Once you have created your account, visit Small home object detection and create a new notebook.

- Run the below code as given. For better results, you can try hyperparameter tuning, i.e., changing the batch size, number of epochs, etc.

Hands-on with Code

We begin by importing the required libraries.

Next, we prepare our Dataset. For this:

- We iterate over our dataset folder.

- We store the path of each image in a NumPy array, filepaths, and the corresponding label in labels.

- We then perform a train-test split of 80-20 over the Dataset. It means 20% of our Dataset is reserved for testing and 80% for training.
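The preparation steps above can be sketched as follows, using only the standard library. This is not the notebook's original code: the one-subfolder-per-class layout, the file extensions, the helper names, and the decision to split per class (so that the 80-20 ratio holds within every class) are all assumptions made for illustration.

```python
import random
from pathlib import Path

# Assumed image extensions; adjust to match the dataset files.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}


def collect_images(root):
    """Walk `root`, assuming one subfolder per class, and return
    parallel lists of file paths and class labels."""
    filepaths, labels = [], []
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue
        for image_path in sorted(class_dir.iterdir()):
            if image_path.suffix.lower() in IMAGE_EXTENSIONS:
                filepaths.append(str(image_path))
                labels.append(class_dir.name)
    return filepaths, labels


def stratified_split(filepaths, labels, test_fraction=0.2, seed=42):
    """Split (path, label) pairs into train and test lists, class by class."""
    rng = random.Random(seed)
    by_class = {}
    for path, label in zip(filepaths, labels):
        by_class.setdefault(label, []).append(path)

    train, test = [], []
    for label, paths in sorted(by_class.items()):
        paths = list(paths)          # copy so the caller's data is untouched
        rng.shuffle(paths)
        n_test = round(len(paths) * test_fraction)
        test.extend((p, label) for p in paths[:n_test])
        train.extend((p, label) for p in paths[n_test:])
    return train, test
```

With 320 images in each of the 13 classes, a 20% test fraction reserves 64 images per class, giving 832 test images and 3,328 training images. The resulting pairs can be loaded into a pandas DataFrame with columns for file path and label if a data generator requires one.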

Next, we create our data generators, which produce batches of images with real-time data augmentation.
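A minimal sketch of such generators with the Keras ImageDataGenerator is shown below. The augmentation settings, the image size, and the DataFrame column names (`filepaths`, `labels`) are assumptions, since the original values are not shown in the text. Note that ImageDataGenerator is marked as deprecated in recent TensorFlow releases, although it remains available.

```python
import tensorflow as tf

IMAGE_SIZE = (224, 224)  # assumed input size; the tutorial's value is not shown
BATCH_SIZE = 64          # batch size mentioned in the training step


def build_augmenters():
    """Return (train, test) ImageDataGenerator objects.

    No rescaling is applied: Keras EfficientNet models include their own
    input rescaling and expect pixel values in the 0-255 range.
    """
    ImageDataGenerator = tf.keras.preprocessing.image.ImageDataGenerator
    train_datagen = ImageDataGenerator(
        rotation_range=15,       # assumed augmentation settings
        width_shift_range=0.1,
        height_shift_range=0.1,
        zoom_range=0.1,
        horizontal_flip=True,
    )
    test_datagen = ImageDataGenerator()  # no augmentation at evaluation time
    return train_datagen, test_datagen


def make_flow(datagen, dataframe, shuffle):
    """Create a batch iterator from a DataFrame of file paths and labels."""
    return datagen.flow_from_dataframe(
        dataframe,
        x_col="filepaths",       # assumed column names
        y_col="labels",
        target_size=IMAGE_SIZE,
        class_mode="categorical",
        batch_size=BATCH_SIZE,
        shuffle=shuffle,
    )
```

Augmentation is applied only to the training generator so that test images are evaluated unchanged.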

Now, we create the residual layer with GELU activation, which sits on top of a pre-trained base model. Only this part of the model is updated during training, while the base model stays frozen.

Next, we create our model. For this, we first import the EfficientNetB4 model and then attach the residual layer defined above on top of it.

Note that the base model (EfficientNetB4) is frozen and is not trained. Consistent with the previous step, only the residual layers on top of it are updated.
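One way to assemble such a model is sketched below, assuming (as described earlier in this section) that the EfficientNetB4 base is frozen and only the residual head is trained. The `ResMLPBlock` internals, the block width of 256, the number of blocks, the dropout rate, and the input size are all assumptions, because the original layer definition is not reproduced in the text.

```python
import tensorflow as tf
from tensorflow.keras import layers


class ResMLPBlock(layers.Layer):
    """Residual MLP block: LayerNorm -> Dense(GELU) -> Dense -> Dropout, plus skip."""

    def __init__(self, units, dropout_rate=0.2, **kwargs):
        super().__init__(**kwargs)
        self.norm = layers.LayerNormalization()
        self.hidden = layers.Dense(units, activation="gelu")
        self.project = layers.Dense(units)
        self.dropout = layers.Dropout(dropout_rate)

    def call(self, inputs, training=None):
        x = self.norm(inputs)
        x = self.hidden(x)
        x = self.project(x)
        x = self.dropout(x, training=training)
        return inputs + x  # residual connection; inputs must have width `units`


def build_model(num_classes=13, image_size=224, units=256, num_blocks=2,
                weights="imagenet"):
    """EfficientNetB4 feature extractor (frozen) with a residual MLP head."""
    base = tf.keras.applications.EfficientNetB4(
        include_top=False,
        weights=weights,
        input_shape=(image_size, image_size, 3),
        pooling="avg",           # global average pooling gives one vector per image
    )
    base.trainable = False       # freeze the pre-trained weights

    inputs = tf.keras.Input(shape=(image_size, image_size, 3))
    x = base(inputs, training=False)  # keep BatchNorm layers in inference mode
    x = layers.Dense(units)(x)        # project features to the block width
    for _ in range(num_blocks):
        x = ResMLPBlock(units)(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

Calling `build_model()` downloads the ImageNet weights on first use. Passing `weights=None` builds the same architecture with random weights, which is useful for a quick shape check but defeats the purpose of transfer learning.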

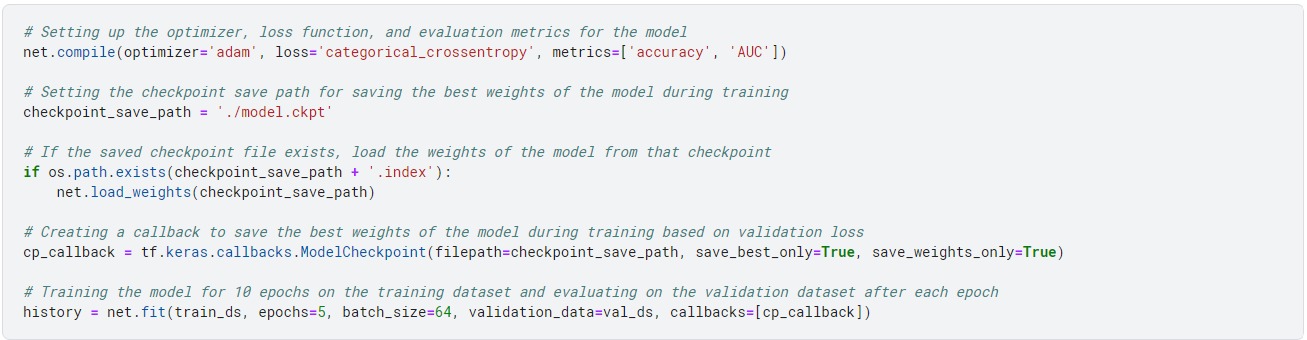

Next, we compile our model and perform the training process.

- We have used the categorical_crossentropy loss function, which generalizes logistic loss to multiple classes, along with the Adam optimizer.

- For training, we have used five epochs with a batch size of 64. These hyperparameters can be tuned accordingly to see the change in model training.
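The compile and training step described above can be sketched as a small helper. The function name and the learning rate are assumptions; the loss, optimizer, and epoch count follow the text. When Keras data generators are used, the batch size of 64 is set on the generator rather than passed to `fit`.

```python
import tensorflow as tf


def compile_and_train(model, train_data, val_data=None, epochs=5,
                      learning_rate=1e-3):
    """Compile with Adam and categorical cross-entropy, then fit.

    `train_data` and `val_data` are expected to yield (images, one-hot labels)
    batches, for example Keras generators or tf.data datasets.
    """
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model.fit(train_data, validation_data=val_data, epochs=epochs)
```

The returned history object stores the per-epoch loss and accuracy values, which can then be used to plot the training curves.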

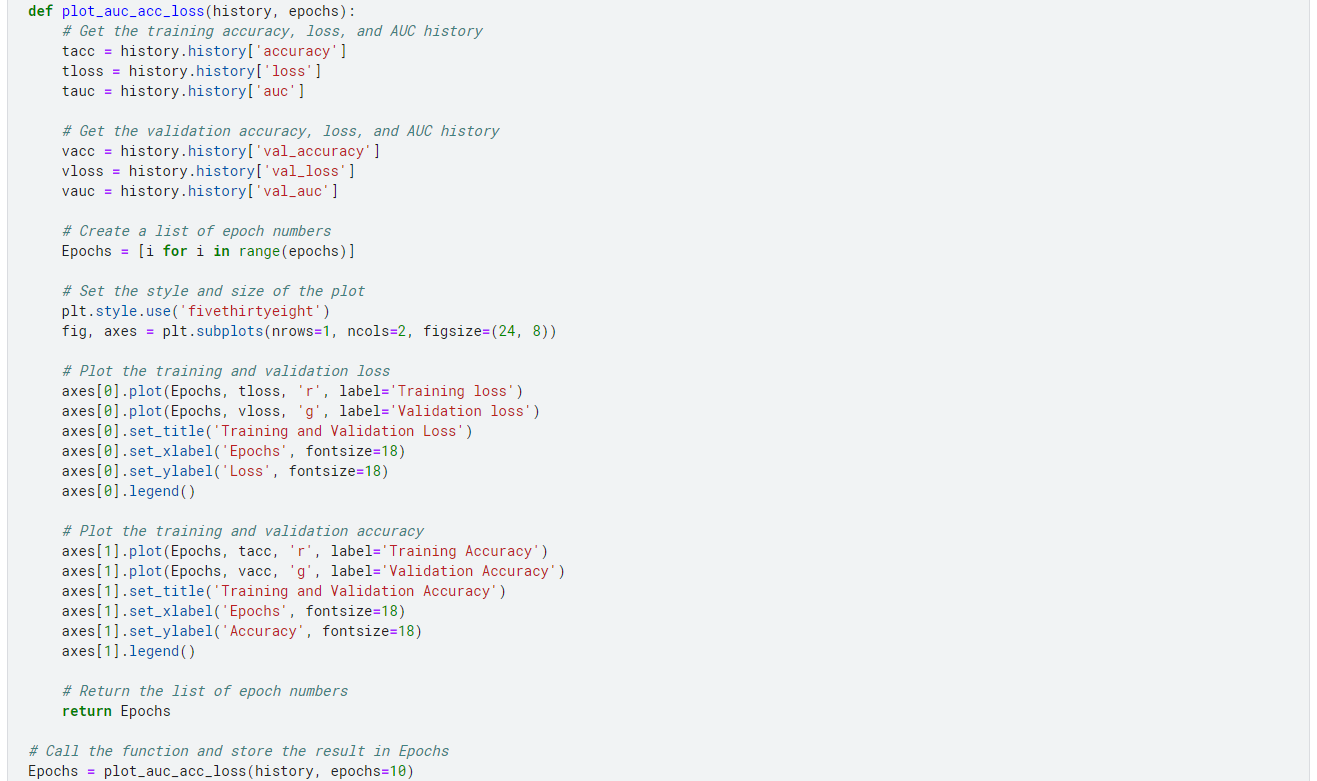

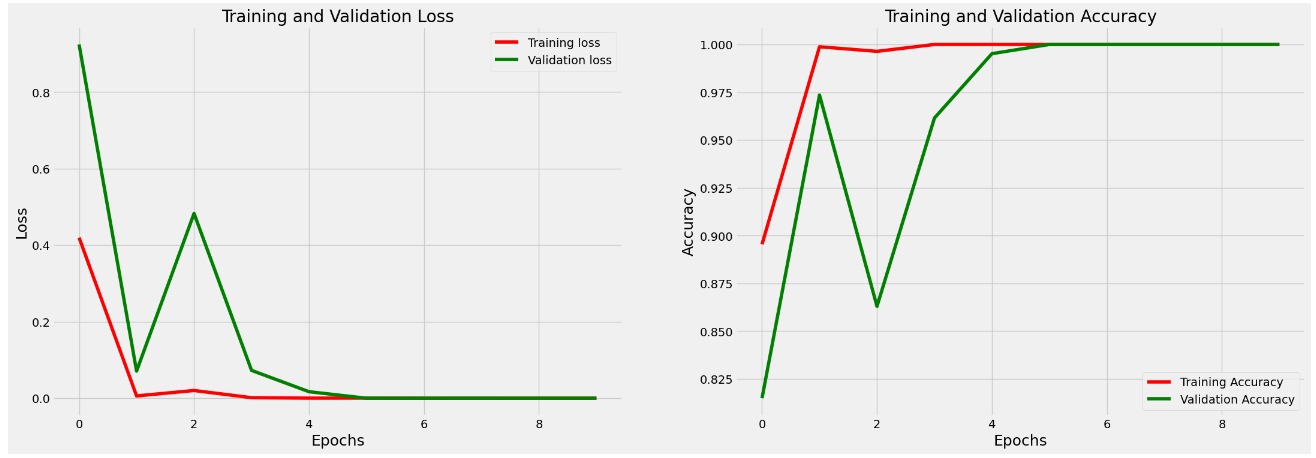

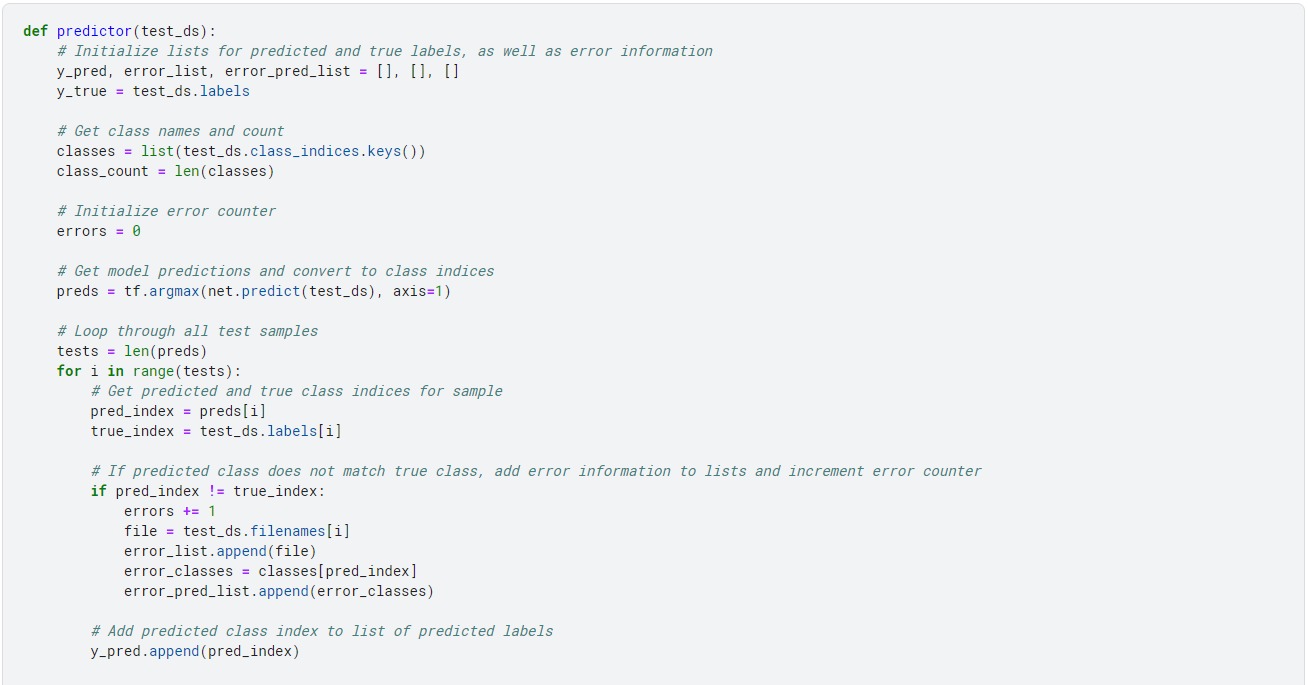

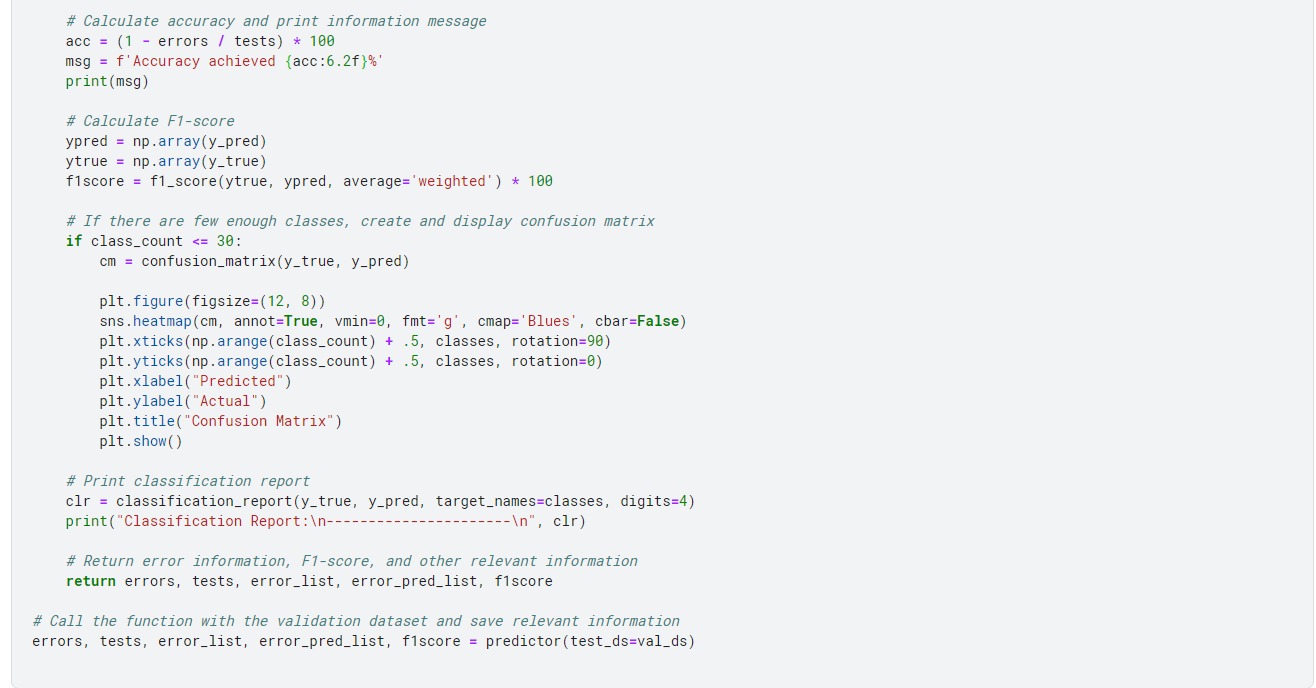

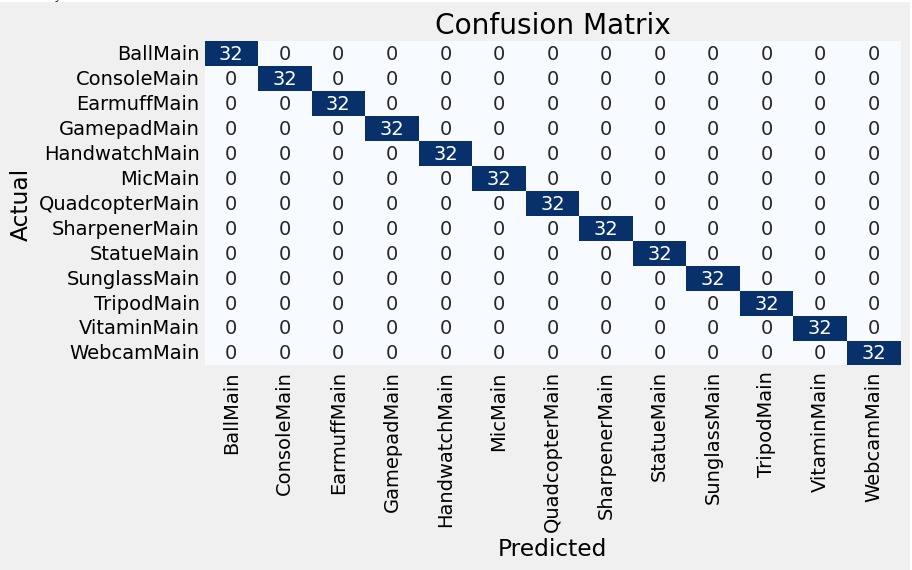

After the training of the model is complete, we perform the analysis of our trained model. For this, we begin by plotting loss and accuracy curves.

Output

According to the plots, our model converges to a loss of 0 and an accuracy of 100%. An accuracy of 100% on the training data often indicates that the model has overfitted.

However, training accuracy alone cannot tell us whether that is the case here, especially on a dataset this small. To check, we also plot a heatmap of actual versus predicted labels over the test images.
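A heatmap of actual versus predicted labels is usually drawn from a confusion matrix. The sketch below computes one with the standard library; the function names and the toy labels are illustrative and do not come from the tutorial's notebook.

```python
def confusion_matrix(actual, predicted, class_names):
    """Rows are actual classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(class_names)}
    matrix = [[0] * len(class_names) for _ in class_names]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix


def accuracy_from_matrix(matrix):
    """Fraction of samples on the diagonal, i.e. predicted correctly."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


if __name__ == "__main__":
    classes = ["Ball", "Tripod", "Webcam"]
    actual = ["Ball", "Ball", "Webcam", "Tripod"]
    predicted = ["Ball", "Webcam", "Webcam", "Tripod"]
    matrix = confusion_matrix(actual, predicted, classes)
    for name, row in zip(classes, matrix):
        print(f"{name:>8}: {row}")
    print("accuracy:", accuracy_from_matrix(matrix))
```

A model that generalizes well produces a matrix whose counts sit almost entirely on the diagonal. The matrix can be passed to a plotting function such as `seaborn.heatmap` to render it as a colored grid.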

Output

Figure: Heat map of actual and predicted output (Image by author)

Conclusion

In this tutorial, we have explored a CNN-based approach for detecting home objects using transfer learning with EfficientNetB4 and the GELU activation function. We have used the Small Home Objects (SHO) Image Dataset from Kaggle for this purpose.

Transfer learning reduces the data and computing resources required to train a model, and the EfficientNet family, with its compound scaling method, achieved state-of-the-art accuracy on ImageNet when it was introduced. The GELU activation function is smooth and non-monotonic, which can help the model learn more complex patterns, and it does not saturate for large positive inputs.

To analyze the performance of our model, we have used heat maps, which help visualize correlations between variables, identify clusters or patterns in data, and highlight areas of high or low activity in a dataset.

Overall, this tutorial has highlighted the importance of object recognition in the home services industry and how computer vision technology can be leveraged to enhance the efficiency and accuracy of home services.

In this blog, we focused mainly on Streem. However, numerous other companies provide remote communication and collaboration solutions using augmented reality (AR) and computer vision technology. These include Help Lightning, XMReality, Librestream, and Vuforia.

Labellerr's computer vision workflow management software helps organizations of all sizes automate data preparation, curation, and quality control at scale. It helps ML teams prepare their AI for production in weeks rather than months.
