Transforming Clinical Trials: The Promise of Computer Vision

Table of Contents

- Introduction

- The Importance of Computer Vision

- Implementation

- Challenges

- How Labellerr Helps

- Conclusion

- Frequently Asked Questions

Introduction

In the healthcare industry, the path from medication discovery to market approval is full of difficulties, hurdles, and uncertainty.

Clinical development, the most crucial phase of this journey, is marked by long delays, steep costs, and high attrition rates.

Recent studies of the road to drug approval report troubling statistics.

Only 33% of products advance to Phase III clinical trials, and bringing a new medication to market requires an investment of $1.5-2.0 billion and can take 10-15 years in total.

Despite the scale of these investments and the careful efforts of researchers, another harsh reality emerges: clinical studies frequently finish prematurely, with 30% of trials discontinued due to a worrying lack of patient involvement.

Given these obstacles, there is an urgent need for creative solutions that can change the current state of clinical trials and research by simplifying procedures, increasing efficiency, and, ultimately, improving patient outcomes.

In light of these concerns, computer vision stands out as a promising technology, one that could reshape how clinical development is carried out.

Let's dive into the potential of computer vision in clinical trials and research, with a look at its capabilities, its limitations, and its consequences.

The Importance of Computer Vision

Computer vision is increasingly used in clinical trials and research across a variety of medical fields.

Its applications span from image analysis and interpretation to data extraction and automation, with substantial promise for increasing efficiency, accuracy, and consistency in medical research and healthcare.

Here are some examples of how computer vision is used in clinical trials and research:

- Medical Imaging Analysis: Computer vision algorithms are applied to analyze medical images such as X-rays, MRIs, CT scans, and histopathology slides. These algorithms can assist in detecting abnormalities, segmenting organs or tissues, and quantifying disease progression or response to treatment.

- Disease Diagnosis and Screening: Computer vision systems can aid in diagnosing diseases and screening patients for early detection. For instance, in dermatology, computer vision algorithms can analyze images of skin lesions to detect melanoma or other skin cancers.

- Drug Discovery and Development: Computer vision techniques help automate the analysis of cellular and molecular imaging data in drug discovery and development processes. This includes identifying potential drug targets, assessing drug efficacy, and monitoring cellular responses to treatment.

- Patient Monitoring and Management: Computer vision systems can be used to monitor patients in clinical trials, track their movements, analyze facial expressions, and detect physiological signals. This information can provide insights into patient behavior, adherence to treatment regimens, and overall health status.

- Surgical Guidance and Navigation: In surgical settings, computer vision technologies are used to assist surgeons during procedures by providing real-time feedback, enhancing precision, and minimizing risks. Augmented reality (AR) and virtual reality (VR) systems, powered by computer vision, offer surgeons detailed anatomical information and navigation guidance.

- Clinical Trials Optimization: Computer vision helps automate various aspects of clinical trials, including patient recruitment, eligibility screening, and data collection. By analyzing medical records, images, and other clinical data, computer vision systems can identify suitable candidates for trials and streamline the trial enrollment process.

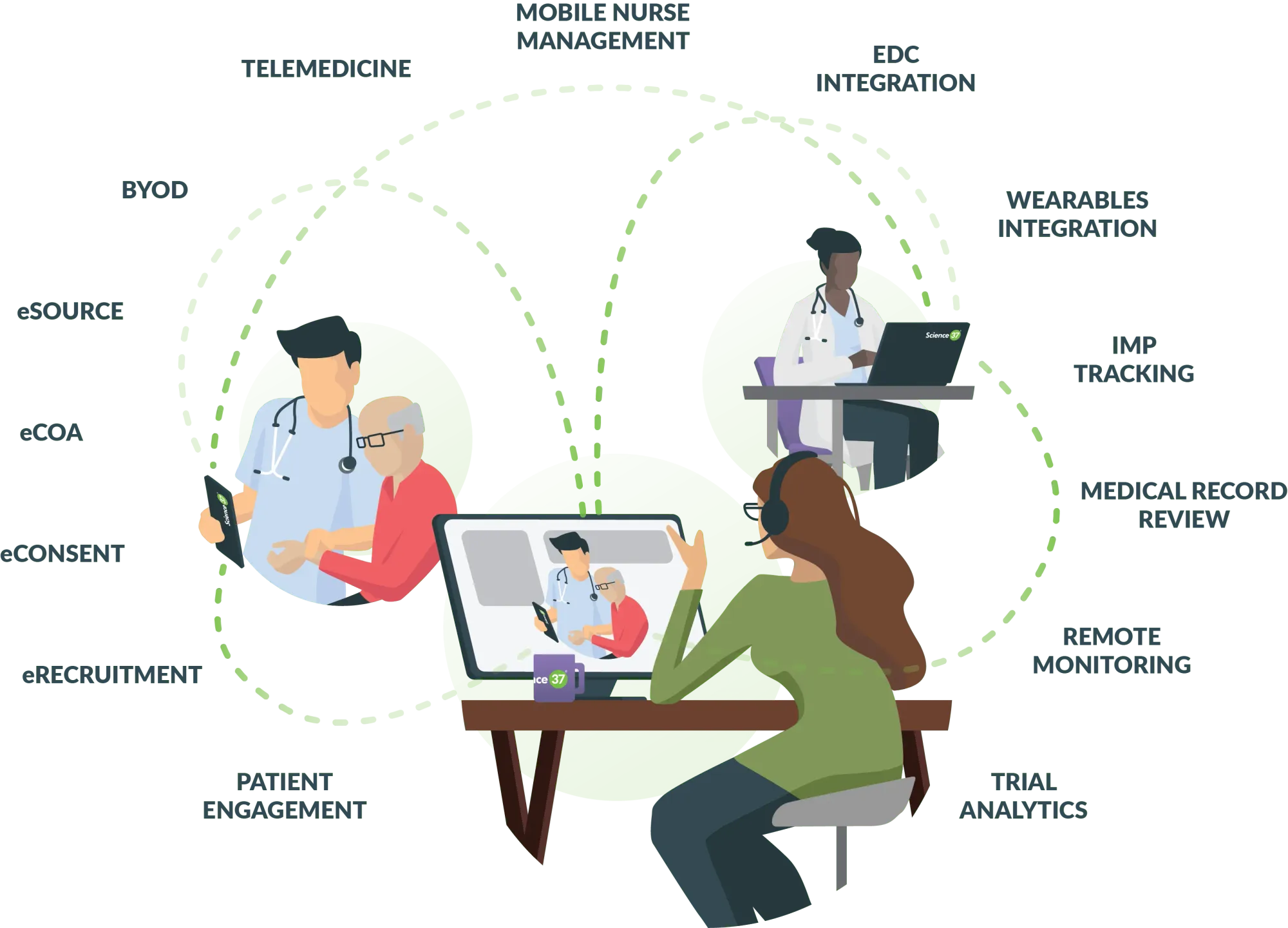

- Remote Patient Monitoring: With the rise of telemedicine and remote healthcare, computer vision enables remote patient monitoring through wearable devices and home-based sensors. These devices can capture vital signs, monitor physical activity, and assess overall wellness, providing healthcare providers with valuable data for personalized care and intervention.

- Quality Control and Assurance: Computer vision algorithms can assist in quality control and assurance processes by detecting errors or anomalies in medical imaging data, ensuring the accuracy and reliability of diagnostic results.

Overall, computer vision shows great promise for revolutionising clinical trials and research; let's look at how it can be implemented.

Implementation

To make this concrete, let's take a case study: monitoring wound healing progress after surgery.

Model Architecture

Data Collection

- Capture standardized high-resolution wound images using consistent lighting and positioning throughout the trial. Consider various wound types and healing stages to ensure model generalizability.

- Collect wound characteristics (size, depth, exudate), patient demographics, treatment details, and other relevant clinical data. Ensure high-quality labeling and adherence to data privacy regulations.

Data Preprocessing

- Apply denoising techniques to address sensor noise, lighting variations, or artifacts.

- Normalize pixel values or apply image standardization methods to facilitate consistent input for the ML model.

- Extract the wound region using color-based, thresholding, or deep learning segmentation methods.

- Carefully label wound boundaries and relevant features (e.g., granulation tissue, necrotic tissue). For complex tasks, rely on expert annotators, for example through an annotation platform such as Labellerr.
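The preprocessing steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names are made up for this post, the mean filter is a stand-in for stronger denoisers (median or bilateral filters), and the redness threshold of 0.2 is an arbitrary value that would need tuning on real wound images.

```python
import numpy as np

def normalize(image_uint8: np.ndarray) -> np.ndarray:
    """Scale 8-bit RGB pixel values to the [0, 1] range."""
    return image_uint8.astype(np.float64) / 255.0

def box_blur(image: np.ndarray, k: int = 3) -> np.ndarray:
    """Denoise with a k x k mean filter (simple stand-in for median/bilateral filtering)."""
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    h, w = image.shape[:2]
    # Sum the k*k shifted copies of the image, then divide to get the local mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w, :]
    return out / (k * k)

def segment_by_redness(image01: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Color-based thresholding: keep pixels whose red channel dominates green and blue.

    The threshold is an assumed value for illustration, not a clinically validated one.
    """
    r, g, b = image01[..., 0], image01[..., 1], image01[..., 2]
    redness = r - (g + b) / 2.0
    return redness > threshold

def preprocess(image_uint8: np.ndarray):
    """Normalize, denoise, and segment; returns the cleaned image and a boolean mask."""
    smoothed = box_blur(normalize(image_uint8))
    mask = segment_by_redness(smoothed)
    return smoothed, mask
```

In practice a trained segmentation network (such as a U-Net) would usually replace the color threshold, but a rule like this can be handy for bootstrapping labels that experts then correct.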

Feature Engineering

- Calculate wound area, perimeter, shape descriptors (circularity, eccentricity), and depth (if applicable) based on segmentation results.

- Extract Haralick features, Gabor filter responses, or deep learning-based textural descriptors to quantify tissue characteristics.

- Utilize color histograms, color moments, or color-invariant descriptors to capture color variations associated with healing.

- Integrate relevant clinical data into feature vectors using appropriate feature representation techniques.
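As a rough sketch of the feature engineering steps above, the snippet below derives area, perimeter, and circularity from a binary wound mask, computes first- and second-order color moments, and appends clinical values to form one feature vector. The function names, the `mm_per_pixel` calibration parameter, and the choice of clinical fields are all assumptions for illustration. The perimeter is counted along pixel edges, so it overestimates the perimeter of curved wounds.

```python
import numpy as np

def wound_geometry(mask: np.ndarray, mm_per_pixel: float = 1.0) -> dict:
    """Area, perimeter, and circularity of a binary wound mask."""
    # Pad with background so wounds touching the image border are handled correctly.
    m = np.pad(mask.astype(bool), 1, mode="constant", constant_values=False)
    area_px = int(m.sum())
    # Perimeter: count pixel edges where a wound pixel meets a background pixel.
    edges = 0
    for axis in (0, 1):
        for shift in (1, -1):
            neighbour = np.roll(m, shift, axis=axis)
            edges += int((m & ~neighbour).sum())
    area = area_px * mm_per_pixel ** 2
    perimeter = edges * mm_per_pixel
    # Circularity 4*pi*A / P^2 is 1 for an ideal circle and smaller for irregular shapes.
    circularity = 4.0 * np.pi * area / perimeter ** 2 if perimeter > 0 else 0.0
    return {"area_mm2": area, "perimeter_mm": perimeter, "circularity": circularity}

def color_moments(image01: np.ndarray, mask: np.ndarray):
    """Per-channel mean and standard deviation of the pixels inside the mask."""
    pixels = image01[mask.astype(bool)]  # shape (N, 3)
    if pixels.shape[0] == 0:
        return np.zeros(3), np.zeros(3)
    return pixels.mean(axis=0), pixels.std(axis=0)

def build_feature_vector(mask, image01, clinical_values, mm_per_pixel=1.0) -> np.ndarray:
    """Concatenate geometric, color, and clinical features into one vector."""
    geo = wound_geometry(mask, mm_per_pixel)
    mean, std = color_moments(image01, mask)
    geometric = [geo["area_mm2"], geo["perimeter_mm"], geo["circularity"]]
    return np.concatenate([geometric, mean, std, np.asarray(clinical_values, dtype=float)])
```

For example, `build_feature_vector(mask, image, [63.0, 7.0])` could carry a hypothetical patient age and days since surgery alongside the image-derived features.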

Model Training

- Consider deep learning architectures (e.g., CNNs, DenseNet, U-Nets) well-suited for image processing tasks.

- Experiment with different architectures and hyperparameters to identify the best fit for your dataset.

- Split data into training, validation, and test sets.

- Employ cross-validation to detect overfitting and obtain a more reliable estimate of how well the model generalizes.

- Choose a loss function (e.g., mean squared error, Dice loss) that aligns with your evaluation metrics.

- Apply L1/L2 regularization or dropout to mitigate overfitting and improve model generalization.

- Monitor training progress, track loss, accuracy, and other metrics, and make adjustments as needed.
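Two of the training steps above lend themselves to a short sketch: splitting the data and defining a Dice loss. The code below is a minimal illustration, not a full training loop. Splitting by patient rather than by image is an assumption added here, on the reasoning that several images of the same wound in both training and test sets would inflate test scores. The function names and the 15% fractions are likewise illustrative.

```python
import numpy as np

def split_by_patient(patient_ids, val_frac=0.15, test_frac=0.15, seed=0) -> dict:
    """Return image indices for train/val/test so that no patient spans two sets."""
    patient_ids = np.asarray(patient_ids)
    rng = np.random.default_rng(seed)
    patients = rng.permutation(np.unique(patient_ids))
    n_test = max(1, int(round(len(patients) * test_frac)))
    n_val = max(1, int(round(len(patients) * val_frac)))
    test_patients = patients[:n_test]
    val_patients = patients[n_test:n_test + n_val]
    in_test = np.isin(patient_ids, test_patients)
    in_val = np.isin(patient_ids, val_patients)
    return {
        "train": np.flatnonzero(~in_test & ~in_val),
        "val": np.flatnonzero(in_val),
        "test": np.flatnonzero(in_test),
    }

def soft_dice_loss(pred_prob: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice loss for segmentation: 1 - 2*sum(p*t) / (sum(p) + sum(t)).

    pred_prob holds per-pixel probabilities in [0, 1]; target holds 0/1 labels.
    eps avoids division by zero when both masks are empty.
    """
    intersection = float(np.sum(pred_prob * target))
    denom = float(np.sum(pred_prob) + np.sum(target))
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)
```

A deep learning framework would supply a differentiable version of the same loss; the NumPy form is only meant to show what is being optimized.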

Model Evaluation

- Assess performance using metrics like mean squared error, Dice coefficient, Jaccard index, or other wound-specific metrics aligned with your goals.

- Visualize model predictions, compare them to ground truth, and involve clinical experts to assess interpretability and agreement with their insights.

- Conduct statistical tests (e.g., paired t-tests) to compare model performance to baselines or other approaches.
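The overlap metrics and the paired comparison mentioned above can be written out directly. The sketch below uses illustrative function names and computes only the paired t statistic; turning it into a p-value needs the t distribution, which a statistics library such as SciPy (`scipy.stats.ttest_rel`) provides.

```python
import numpy as np

def dice_coefficient(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    total = int(pred.sum()) + int(truth.sum())
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * int((pred & truth).sum()) / total

def jaccard_index(pred: np.ndarray, truth: np.ndarray) -> float:
    """Jaccard (IoU) = |A ∩ B| / |A ∪ B| for two binary masks."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    union = int((pred | truth).sum())
    if union == 0:
        return 1.0
    return int((pred & truth).sum()) / union

def paired_t_statistic(scores_a, scores_b) -> float:
    """t statistic for paired per-image scores of two methods (n - 1 degrees of freedom)."""
    diff = np.asarray(scores_a, dtype=float) - np.asarray(scores_b, dtype=float)
    standard_error = diff.std(ddof=1) / np.sqrt(len(diff))
    return float(diff.mean() / standard_error)
```

The two overlap metrics are related by J = D / (2 - D), so they rank models identically; reporting both mainly helps comparison with other published results.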

Model Deployment and Interpretation

- Integrate the ML model into a user-friendly platform for clinical use. Consider cloud-based deployment, on-premise servers, or mobile apps for accessibility.

- Utilize techniques like saliency maps, attribution methods, or LIME to explain model predictions and make them interpretable for healthcare professionals.

- Continuously monitor model performance in real-world use, track data quality, and update the model as needed to maintain accuracy and robustness.
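One simple attribution method is occlusion sensitivity: hide one patch of the image at a time and record how much the model's output drops. The sketch below assumes a hypothetical `predict` callable that maps an image to a single score (for example, a predicted wound area). It is not tied to any particular framework, and the patch size and fill value are arbitrary choices.

```python
import numpy as np
from typing import Callable

def occlusion_map(
    image: np.ndarray,
    predict: Callable[[np.ndarray], float],
    patch: int = 8,
    fill: float = 0.0,
) -> np.ndarray:
    """Heat map of how much the model score drops when each patch is hidden.

    `predict` is a placeholder for whatever model is deployed; larger values in
    the returned map mark regions the prediction depends on more strongly.
    """
    h, w = image.shape[:2]
    baseline = predict(image)
    heat = np.zeros((h, w), dtype=np.float64)
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            occluded = image.copy()
            occluded[y:y + patch, x:x + patch] = fill  # hide one patch
            heat[y:y + patch, x:x + patch] = baseline - predict(occluded)
    return heat
```

Overlaying the resulting map on the original photo gives clinicians a quick check that the model is looking at the wound rather than at dressings, rulers, or background.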

Challenges

Integrating computer vision into clinical trials and research presents unique challenges, despite its immense potential.

- Ensuring the integrity, reliability, and security of medical imaging data is essential as errors or biases in the analysis can have serious consequences for patient treatment.

- The complex legal framework governing medical imaging and data protection complicates the implementation of computer vision technologies in healthcare settings.

To maximize the benefits of computer vision while minimizing hazards, these difficulties must be addressed through cross-disciplinary collaboration, rigorous validation processes, and adherence to ethical principles.

How Labellerr Helps

Imagine you have lots of pictures and videos, and you want the computer to learn what is in each of them. Labellerr makes this easy!

Here's how it works:

(i) Connect your pictures and videos: It can take your pictures and videos from places like AWS, GCP, or Azure and put them in one easy-to-use platform.

(ii) Create and manage projects: It helps you organize your work. You can tell it what to focus on, like wounds in pictures.

(iii) Labeling engine: It has a great labeling engine. It helps you mark and describe things in the pictures, like wounds.

It can do this super fast, saving a lot of time and money.

(iv) Smart quality check: It also checks if the labels (like wounds) are correct.

It does this using its smart brain and the information it learned from your labeled pictures.

(v) Connect to AI engines: Once it finishes, you can use what it learned with other computer programs to make them smarter in recognizing wounds.

You can export the information in different formats like CSV or JSON.

So, Labellerr is a platform that helps you teach computers to recognize what is in your images, such as wounds, easily and quickly!

Conclusion

In conclusion, computer vision has enormous potential for improving healthcare through its use in clinical trials and research.

By combining artificial intelligence and image processing, computer vision allows healthcare practitioners to gain new insights, accelerate discoveries, and improve patient care.

While challenges still exist, the potential benefits far exceed the drawbacks, opening the path for a future in which precision medicine and personalized treatment techniques are the standard.

As we continue to innovate and explore the applications of computer vision in healthcare, the path to better health outcomes for all becomes increasingly attainable.

Frequently Asked Questions

Q1. How will AI change clinical trials?

AI can better match patients to clinical trials, increasing recruitment and trial success rates. It can also support safety signal prediction: predictive algorithms can foresee safety risks, potentially preventing adverse outcomes before they occur.

Q2. What is the future of clinical trials with respect to technology?

Future trials are expected to be more decentralized and virtualized, with digitalized endpoints that allow more realistic, internationally harmonized, and standardized real-world tracking of patient experiences, as well as remote monitoring.

Q3. How is digital technology used in clinical trials?

Digital health technologies (DHTs) have brought substantial advances to clinical trials, allowing real-world data collection outside the traditional clinical setting and more patient-centered approaches. Wearables and other DHTs enable long-term collection of individual patient data at home.
