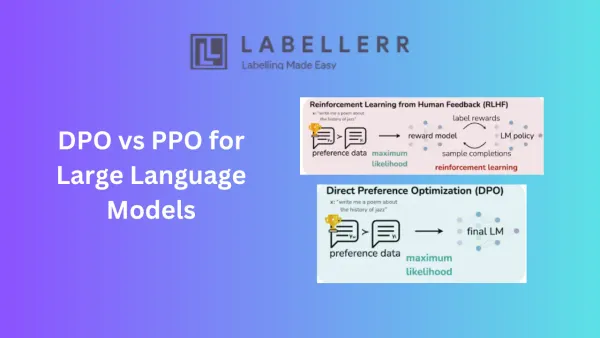

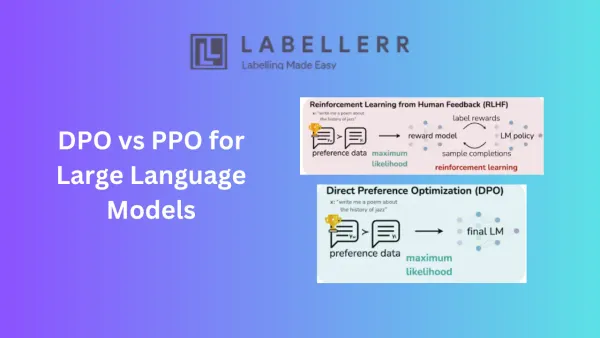

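Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) are two approaches to aligning large language models (LLMs) with human preferences. DPO trains the model directly on pairs of preferred and rejected responses using a classification-style loss, with no separate reward model and no reinforcement learning loop. PPO, as used in reinforcement learning from human feedback (RLHF), first fits a reward model to the preference data and then iteratively updates the policy to maximize that reward, while limiting how far each update can move the policy.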
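To make the contrast concrete, here is a minimal sketch of the per-example objective behind each method, written in plain Python on scalar log-probabilities. The function names, the argument names, and the default values `beta=0.1` and `clip_eps=0.2` are illustrative assumptions rather than part of any particular library. Real training code would apply these to batched tensors, and a PPO-based RLHF setup would typically derive the advantage from a reward model score combined with a KL penalty.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for a single (chosen, rejected) preference pair.

    Inputs are sequence log-probabilities under the trained policy and
    under a frozen reference model. beta is an assumed default.
    """
    # How much more the policy favors the chosen response over the
    # rejected one, relative to the reference model.
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    z = beta * margin
    # -log(sigmoid(z)) computed in a numerically stable softplus form.
    return math.log1p(math.exp(-abs(z))) + max(-z, 0.0)


def ppo_clipped_objective(new_logp, old_logp, advantage, clip_eps=0.2):
    """PPO clipped surrogate objective for a single action (to be maximized).

    clip_eps is an assumed default. The advantage is supplied by the caller.
    """
    # Probability ratio between the updated policy and the policy that
    # generated the sample.
    ratio = math.exp(new_logp - old_logp)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Taking the minimum removes any incentive to push the ratio
    # outside the clipping range.
    return min(ratio * advantage, clipped_ratio * advantage)
```

The DPO function needs only log-probabilities of a labeled preference pair, so it can be trained like a supervised loss. The PPO function needs an advantage estimate for sampled outputs, which is why it sits inside a sampling and reward-scoring loop.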