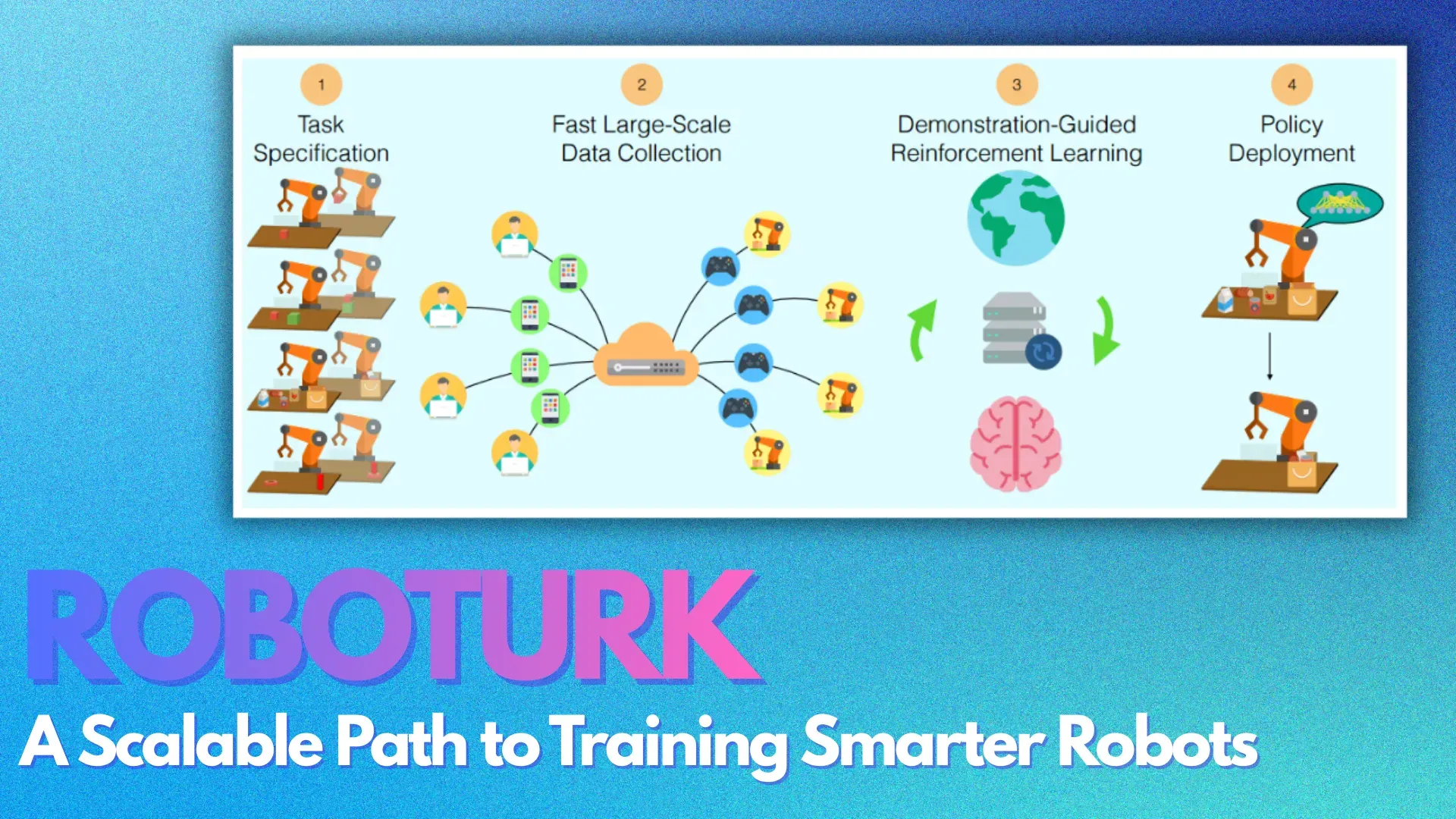

ROBOTURK Explained: A Scalable Path to Training Smarter Robots

ROBOTURK addresses the core bottleneck in robot learning, the scarcity of large-scale, high-quality demonstrations, by collecting them through smartphones and cloud-based simulation. It offers a scalable way to teach robots complex manipulation skills without expensive lab hardware.

Why is it so difficult to program a robot to perform a task that seems simple for a human, like picking up a specific block or placing a nut on a peg?

While we see impressive advances in AI for digital tasks like image recognition, teaching robots to interact with the physical world remains a monumental challenge.

The core of the problem is data: robots need massive amounts of high-quality examples to learn from, and traditional methods of collecting those examples fall short.

Reinforcement Learning (RL), where a robot learns through trial and error, can be incredibly slow and tedious, as it requires complex reward specification and faces the challenge of inefficient exploration in vast state spaces.

A more direct approach, Imitation Learning (IL), uses demonstrations from human experts to guide the robot. However, IL is data-hungry, often requiring hundreds or even thousands of demonstrations to train a reliable policy.

Collecting this data using specialized lab equipment is a slow, expensive process that has limited the scale of robotics research. To break this data collection bottleneck, researchers at Stanford University have developed "ROBOTURK," an innovative platform designed to crowdsource robot training.

By leveraging technology most of us already own, ROBOTURK offers a new, scalable way to teach robots complex physical skills.

[Figure: ROBOTURK infographic]

Problems with Current Robot Training Approaches

Before the introduction of platforms like ROBOTURK, robot training, especially for manipulation tasks, faced several deep-rooted problems.

These challenges made it difficult to scale Imitation Learning (IL) and limited the progress of data-driven robotics research.

The core issue was simple: robots learn best from large-scale, high-quality demonstrations, but collecting such data was slow, expensive, and technically restrictive.

1. Limitations in Existing Learning Methods

Robotics research has traditionally relied on three major learning paradigms: Reinforcement Learning (RL), Self-Supervised Learning (SSL), and Imitation Learning (IL). Each offered value, but each came with constraints that prevented practical, scalable robot training.

Reinforcement Learning (RL): RL promises autonomous skill learning, but in practice it struggles with:

- Reward specification complexity: Defining a precise reward function for real-world manipulation tasks is often difficult.

- Exploration inefficiency: Robots must explore large state spaces, leading to slow and expensive learning cycles.

- Massive interaction requirements: RL requires millions of interactions, which is impractical for real robots.
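To make the first two problems concrete, here is a hypothetical reward for a simple block-lifting task (the task, thresholds, and weights are illustrative assumptions, not taken from ROBOTURK). The sparse version is easy to state but gives zero signal until the robot happens to succeed, which is exactly the exploration problem; the shaped version gives a signal everywhere but only through hand-picked terms and constants that someone has to design and tune.

```python
import math

# Hypothetical block-lifting task (illustrative only): positions are (x, y, z)
# in metres in the world frame, with z pointing up.
TABLE_HEIGHT = 0.80   # assumed height of the table surface (m)
LIFT_MARGIN = 0.04    # assumed height the block must clear to count as "lifted" (m)


def sparse_reward(block_pos):
    """1.0 only once the block is lifted; 0.0 everywhere else."""
    return 1.0 if block_pos[2] > TABLE_HEIGHT + LIFT_MARGIN else 0.0


def shaped_reward(gripper_pos, block_pos, grasped):
    """Hand-designed dense reward: reach toward the block, grasp it, then lift it.

    The 10.0 distance scale and the 0.25 grasp bonus are arbitrary choices,
    which is the reward-specification burden in miniature.
    """
    reach = 1.0 - math.tanh(10.0 * math.dist(gripper_pos, block_pos))
    grasp = 0.25 if grasped else 0.0
    return reach + grasp + sparse_reward(block_pos)
```

A gripper 35 cm from a block resting on the table gets a sparse reward of 0 and a shaped reward close to, but above, 0; moving within a centimetre raises the shaped reward sharply while the sparse reward stays at 0 until the block is actually lifted.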

Self-Supervised Learning (SSL): SSL enables large-scale data collection, but:

- Most collected data is noisy because it relies on random exploration.

- While SSL is powerful for tasks like grasping, the signal-to-noise ratio is too low for structured tasks such as multi-step manipulation.

Imitation Learning (IL): IL is often more sample-efficient because it learns directly from expert demonstrations. However:

- Its power is bottlenecked by the availability of high-quality demonstrations.

- Collecting such demonstrations at scale was extremely difficult.
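IL's sample efficiency comes from learning a direct mapping from states to actions. Its simplest form, behavioral cloning, is ordinary supervised regression on expert (state, action) pairs. The toy sketch below fits a one-dimensional linear policy by least squares; the task and the function name `fit_linear_policy` are hypothetical, and real policies trained on ROBOTURK-style data use far richer inputs and models.

```python
# Toy behavioral cloning (hypothetical 1-D task): the state is the gripper's
# offset from a target, and the expert's action is a velocity that closes it.


def fit_linear_policy(demos):
    """Least-squares fit of action = w * state + b over (state, action) pairs."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    if var == 0:
        raise ValueError("demonstrations must cover more than one state")
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    w = cov / var
    b = mean_a - w * mean_s
    return lambda state: w * state + b


# An "expert" that always moves at half the offset, back toward the target.
demos = [(s, -0.5 * s) for s in (-0.2, -0.1, 0.1, 0.2)]
policy = fit_linear_policy(demos)
```

Four clean demonstrations pin down a two-parameter policy exactly. With high-dimensional observations, noisy human actions, and multi-step tasks, the number of parameters to fit explodes, which is why IL becomes bottlenecked by how many demonstrations can be collected.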

2. Inefficient and Inaccessible Demonstration Collection Methods

A major obstacle to IL was the lack of a scalable way to collect robot demonstrations. The methods available before ROBOTURK each had significant downsides.

Kinesthetic Teaching: This involves physically guiding the robot through a task. Although intuitive:

- It is slow and tiring for the operator.

- Researchers could typically gather only tens of demonstrations, whereas hundreds or thousands are required for robust IL.

Teleoperation via Keyboard, Mouse, or Gaming Controllers: These interfaces are accessible but technically limiting because:

- They only allow control of a subset of robot actions at a time.

- This results in unnatural, axis-aligned trajectories, lower-quality data, and inefficient motions.
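A simplified sketch of why axis-at-a-time control produces worse trajectories: if the interface moves only one axis at a time, the end effector traces a "staircase" whose length is the sum of the per-axis distances, instead of the straight line a free-space 6-DoF controller can follow. This considers translation only and assumes strictly one axis at a time; the function names are illustrative.

```python
import math


def free_space_length(start, goal):
    """Straight-line distance: a 6-DoF interface can move all axes at once."""
    return math.dist(start, goal)


def axis_aligned_length(start, goal):
    """Path length when the interface moves one axis at a time."""
    return sum(abs(g - s) for s, g in zip(start, goal))


def axis_aligned_waypoints(start, goal):
    """Corner points of the staircase path (x first, then y, then z)."""
    points = [tuple(start)]
    current = list(start)
    for axis in range(len(start)):
        if current[axis] != goal[axis]:
            current[axis] = goal[axis]
            points.append(tuple(current))
    return points
```

For a move from (0, 0, 0) to (0.3, 0.4, 0.0) the straight line is 0.5 m while the staircase is 0.7 m, about 40% longer, and it includes a sharp corner that no human would produce when reaching naturally.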

Advanced Interfaces (e.g., VR controllers): Free-space teleoperation systems like VR offer:

- Natural 6-DoF control

- High-quality, smooth demonstrations

However, they come with significant limitations:

- Costly hardware

- Dependence on dedicated compute resources

- Cannot be deployed widely on crowdsourcing platforms like Amazon Mechanical Turk because typical workers do not own VR headsets.

As a result:

- VR systems offered high-quality data but in very limited quantities.

- Keyboard/gamepad systems offered scalable data but lower quality.

How ROBOTURK Solves These Problems

ROBOTURK was designed to directly address the core limitations of current robot training approaches: the lack of scalable, high-quality demonstration data and the barriers that prevented crowdsourcing from being used effectively for robot teleoperation.

[Figure: System overview of ROBOTURK]

The platform introduces a new way to collect expert-like demonstrations at scale using everyday mobile devices instead of specialized hardware. This fundamentally changes what is possible in imitation-based robot learning.

1. A Scalable Crowdsourcing Framework for Robot Demonstrations

The primary contribution of ROBOTURK is that it makes high-quality robot teleoperation accessible to a massive, global pool of users.

Instead of relying on VR headsets or expensive controllers, ROBOTURK turns an ordinary smartphone into a 6-DoF motion controller. Since nearly every potential crowd worker already owns a smartphone, the platform removes the biggest accessibility barrier.

[Figure: ROBOTURK system diagram]

2. High-Quality, Natural Demonstrations Through Free-Space Phone Control

ROBOTURK mirrors the smooth, natural behavior seen in VR teleoperation by using the phone’s pose-tracking capabilities:

- ARKit tracks the phone’s 3D position and orientation

- These signals are translated into real-time robot end-effector control

This allows users to:

- move the robot intuitively in 6-DoF space,

- produce more natural trajectories,

- avoid the axis-aligned, unnatural motions seen with keyboards and game controllers.
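The exact pose-to-command mapping isn't described here, so the following is a minimal sketch assuming a common relative-pose scheme: record the phone pose and the end-effector pose when control starts, then apply the phone's subsequent displacement (optionally scaled) and rotation to the end effector's reference pose. It assumes the phone's tracking frame is already aligned with the robot's base frame; `PhoneTeleop`, `scale`, and the quaternion helpers are hypothetical names, not ROBOTURK's or ARKit's API.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conj(q: Quat) -> Quat:
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


@dataclass
class PhoneTeleop:
    """Hypothetical relative mapping from phone pose to end-effector target."""
    phone_ref_pos: Vec3   # phone pose when control starts
    phone_ref_rot: Quat
    ee_ref_pos: Vec3      # end-effector pose at that same moment
    ee_ref_rot: Quat
    scale: float = 1.0    # assumed phone-to-robot translation scaling

    def target(self, phone_pos: Vec3, phone_rot: Quat) -> tuple[Vec3, Quat]:
        # Translation: add the phone's (scaled) displacement to the reference position.
        delta = [p - r for p, r in zip(phone_pos, self.phone_ref_pos)]
        pos = tuple(e + self.scale * d for e, d in zip(self.ee_ref_pos, delta))
        # Rotation: apply the phone's rotation since the reference (world frame)
        # to the end effector's reference orientation.
        rel = quat_mul(phone_rot, quat_conj(self.phone_ref_rot))
        rot = quat_mul(rel, self.ee_ref_rot)
        return pos, rot
```

Because every axis of the phone's motion maps onto the robot at once, a diagonal hand movement becomes a diagonal end-effector movement, which is what lets the phone interface avoid the axis-aligned motions described above.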

User studies in the paper show that the phone interface performs similarly to VR controllers, meaning workers can generate high-quality trajectories without any specialized hardware.

[Figure: User interface comparison]

3. Cloud-Based Simulation Removes Hardware Burden and Ensures Consistency

A key insight of ROBOTURK is to offload all simulation and computation to the cloud.

This solves several problems at once:

- Users do not need a powerful local computer.

- Everyone receives consistent performance, regardless of device.

- The platform can scale to support many users at the same time.

The cloud backend manages:

- real-time video streaming,

- low-latency communication via WebRTC,

- dedicated teleoperation sessions for each user.

As a result, the system reduces the unreliability and variability that normally arise when letting remote users control robots over the Internet.
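How the cloud backend schedules sessions isn't specified, so here is a minimal sketch under stated assumptions: a fixed pool of simulator instances, one dedicated instance per connected user, and a first-come, first-served waiting queue when the pool is full. `SessionManager` and its methods are hypothetical.

```python
from collections import deque
from typing import Optional


class SessionManager:
    """Hypothetical allocator: each connected user gets a dedicated simulator."""

    def __init__(self, num_simulators: int):
        self.free = deque(range(num_simulators))  # ids of idle simulator instances
        self.sessions: dict[str, int] = {}        # user id -> simulator id
        self.waiting: deque = deque()             # user ids queued for capacity

    def connect(self, user_id: str) -> Optional[int]:
        """Return the user's simulator id, or None if they were queued."""
        if user_id in self.sessions:
            return self.sessions[user_id]
        if self.free:
            sim = self.free.popleft()
            self.sessions[user_id] = sim
            return sim
        if user_id not in self.waiting:
            self.waiting.append(user_id)
        return None

    def disconnect(self, user_id: str) -> None:
        """Release the user's simulator, handing it to the next queued user."""
        if user_id in self.waiting:
            self.waiting.remove(user_id)
            return
        sim = self.sessions.pop(user_id, None)
        if sim is None:
            return
        if self.waiting:
            self.sessions[self.waiting.popleft()] = sim
        else:
            self.free.append(sim)
```

Giving each user an isolated simulator means one worker's slow connection or long episode cannot stall anyone else, and capacity grows by adding instances to the pool rather than by changing anything on the workers' devices.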

[Figure: Cloud-based simulation]

4. Robust Real-Time Interaction Over Global Networks

One of the biggest historical challenges in remote robot teleoperation is maintaining responsiveness under varying network conditions. ROBOTURK addresses this using:

- WebRTC-based low-latency communication for control commands and video streaming

- Intelligent handling of network inconsistencies.
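The text doesn't say how network inconsistencies are handled, so the following is only one common technique, not necessarily ROBOTURK's: tag each control command with an increasing sequence number and have the receiver keep only the newest one. This matters because WebRTC data channels can be opened in unordered, unreliable mode for lower latency, in which case packets may arrive late or out of order. `Command` and `LatestCommandBuffer` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    seq: int        # sender-assigned, strictly increasing sequence number
    target: tuple   # e.g. an end-effector target pose


class LatestCommandBuffer:
    """Keeps only the newest command; late or duplicated packets are ignored."""

    def __init__(self):
        self.last_seq = -1
        self.latest: Optional[Command] = None

    def receive(self, cmd: Command) -> bool:
        """Store cmd if it is newer than anything seen; return whether it was kept."""
        if cmd.seq <= self.last_seq:
            return False  # stale or duplicate: a newer command already arrived
        self.last_seq = cmd.seq
        self.latest = cmd
        return True

    def command_for_tick(self) -> Optional[Command]:
        """Called once per control step; repeats the newest command if none arrived."""
        return self.latest
```

Dropping stale commands rather than replaying them prevents the robot from "catching up" through outdated targets after a network hiccup, at the cost of skipping intermediate poses.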

The authors tested ROBOTURK under:

- low-bandwidth connections,

- high-latency connections,

- and even trans-Pacific distances (California ⇄ China).

Across all tests, users were still able to perform the manipulation tasks successfully with only moderate increases in completion time.

[Figure: System stress test results]

5. Enabling Large, Diverse, High-Value Demonstration Datasets

Because ROBOTURK is accessible, scalable, and high-quality, it enables the collection of datasets that were previously impossible to obtain.

In the pilot deployment:

- 2200+ successful demonstrations were collected

- 137.5 hours of teleoperated trajectories were recorded

- All of this was done in just 22 hours of total system usage
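These three figures imply substantial parallelism. A quick back-of-the-envelope check, assuming the 137.5 recorded hours accumulated across users working at the same time during the 22 hours of system usage:

```python
demos = 2200              # lower bound: the deployment reports "2200+"
recorded_hours = 137.5    # teleoperated trajectory time
wall_clock_hours = 22     # total system usage

# Average number of simultaneous teleoperation sessions.
avg_parallel_sessions = recorded_hours / wall_clock_hours

# Upper bound on average minutes per successful demonstration
# (more demos, or recorded time spent on failed attempts, only lowers it).
max_minutes_per_demo = recorded_hours * 60 / demos
```

This works out to about 6.25 sessions running at once on average and at most 3.75 minutes of recorded time per successful demonstration, which is how many hours of data fit into a single day of system use.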

These datasets directly impact policy learning:

- More demonstrations improved RL performance

- Learning became more consistent across seeds

- Higher demonstration counts led to near-optimal policies

ROBOTURK addresses the fundamental bottleneck of imitation learning: the availability of large, high-quality demonstration datasets.

Conclusion

The ROBOTURK platform demonstrates that by cleverly applying accessible, everyday technology, we can solve one of the biggest bottlenecks in robotics and artificial intelligence.

It bridges the gap between the need for massive datasets and the difficulty of collecting them, showing that high-quality robot training doesn't require an expensive lab.

The research suggests that the key to smarter, more capable robots may not lie in a single brilliant algorithm, but in building systems that allow many people to contribute their physical intelligence at scale.

By democratizing the ability to teach robots, we can accelerate the pace of innovation in the field. The platform's success in simulation lays the groundwork for the next frontier: extending ROBOTURK to support the remote teleoperation of real physical robots, further bridging the gap between digital training and real-world application.

If anyone with a smartphone could teach a robot a new skill, what is the first thing you would teach it?

FAQs

Why is large-scale data essential for training robots with Imitation Learning?

Robots often need hundreds to thousands of high-quality demonstrations to learn precise manipulation tasks. Small datasets lead to poor generalization, making Imitation Learning unreliable without scale.

How does ROBOTURK make robot training more scalable?

ROBOTURK uses smartphones as 6-DoF controllers and cloud-based simulation, enabling anyone to collect expert-like demonstrations without specialized hardware.

Why are traditional teleoperation methods ineffective for large-scale data collection?

Methods like kinesthetic teaching, game controllers, or VR are slow, costly, or limited in motion control, preventing the collection of large, diverse, high-quality trajectories.
