How the Computer Vision-Based ATTOL System Helps Aircraft in Landing and Takeoff

ATTOL (Autonomous Taxi, Takeoff, and Landing) enables planes to navigate runways autonomously, reducing pilot workload and reliance on costly ILS equipment. By leveraging computer vision and machine learning, ATTOL aims to make future air travel safer and more efficient.

For decades, landing and taking off have relied heavily on skilled pilots and costly systems like the Instrument Landing System (ILS).

While these methods have worked, they come with significant challenges: ILS equipment is expensive, not available at all airports, and pilots often face tough conditions during landings, especially in poor visibility.

The burden of managing these complexities can be immense: higher costs for airport equipment, added stress for pilots, and potential safety risks in critical moments.

In the rapidly evolving world of aviation, the quest for safer, more efficient flight operations has driven innovations.

One such groundbreaking development is the ATTOL (Autonomous Taxi, Takeoff, and Landing) system.

Launched in June 2018, ATTOL is one of Airbus's test projects designed to explore how autonomous technology can change the way planes operate.

By using onboard cameras and advanced computer vision, ATTOL can guide an aircraft through takeoff and landing autonomously, reducing reliance on traditional systems and enhancing safety and efficiency.

Curious about how this technology works and what it means for the future of aviation?

Keep reading to discover how ATTOL is set to revolutionize air travel and what it could mean for the skies ahead.

Existing Methods and Their Challenges

Pilots rely mostly on their own eyes and skills, or on ATC (Air Traffic Control), for landing.

ILS (Instrument Landing System) is installed at some airports.

Auto landing is mostly used as an assist. Full Autonomy is not present yet.

ILS can support autonomous operations, but it's quite rare. Essentially, it’s like having the airport control the aircraft’s flight path as if it were flying the plane by wire.

A team of German researchers from TUM (Technische Universität München) performed a fully autonomous landing in 2019 in a single-passenger aircraft carrying only the pilot.

They used visible light and infrared cameras on the nose of the plane to detect the airstrip.

A computer vision processor, trained to recognize and analyze runways, was utilized. The cameras identified the runway from a considerable distance, and the system directed the aircraft during its landing approach.

This system is still in the initial testing phases.

Understanding ILS (Instrument Landing System)

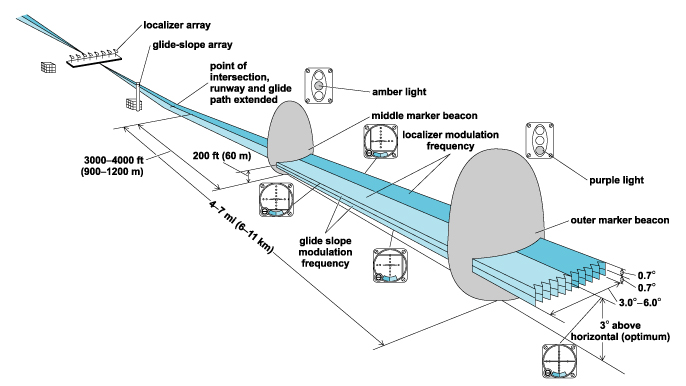

An Instrument Landing System (ILS) is a precision tool that helps pilots safely land an aircraft by using two radio beams.

One beam, called the localizer, guides the plane horizontally to keep it aligned with the runway.

The other, called the glideslope, provides vertical guidance to ensure the plane follows the correct descent path.

In some cases, marker beacons or bright runway lights may also be used to assist with the landing, though many airports now use a Distance Measuring Equipment (DME) system instead.
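To make the two guidance signals concrete, here is a minimal sketch of how localizer and glideslope deviations could be computed as angles from an aircraft's position relative to the runway. The coordinate frame, the function name `ils_deviations`, and the 3-degree nominal glide path (a typical value) are assumptions made for illustration; a real ILS derives these deviations from radio signals, and its antennas are not located at a single point.

```python
import math

def ils_deviations(x_m, y_m, z_m, glide_path_deg=3.0):
    """Return (localizer_dev_deg, glideslope_dev_deg) for an aircraft on approach.

    Assumed frame (illustrative only): origin at the touchdown point,
    x_m = distance back along the extended centerline (positive on approach),
    y_m = lateral offset (positive to the right of the centerline),
    z_m = height above the touchdown point.
    """
    if x_m <= 0:
        raise ValueError("aircraft must be on approach (x_m > 0)")
    # Horizontal angle off the extended centerline.
    localizer_dev = math.degrees(math.atan2(y_m, x_m))
    # Elevation angle seen from the touchdown point, compared to the nominal path.
    elevation = math.degrees(math.atan2(z_m, x_m))
    glideslope_dev = elevation - glide_path_deg
    return localizer_dev, glideslope_dev

# Aircraft 5 km out, 50 m right of the centerline, exactly on a 3-degree path.
height_on_path = 5000.0 * math.tan(math.radians(3.0))
loc_dev, gs_dev = ils_deviations(5000.0, 50.0, height_on_path)
print(f"localizer: {loc_dev:+.3f} deg, glideslope: {gs_dev:+.3f} deg")
```

A positive localizer value here means the aircraft is right of the centerline, and a positive glideslope value means it is above the nominal path.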

Img: ILS and its different components

Pros:

1. Can help in low visibility landings

Cons:

1. Very expensive

2. Installed at only a limited number of airports, and even fewer are equipped with the latest versions.

Why do we need a new method?

We need a new method because the current ILS system is expensive and only available at a few airports.

It's not practical for all locations, and it's outdated in many places. Plus, existing sensors like GNSS are less accurate at low altitudes, making landings more challenging.

Proposed solution (ATTOL):

- The aircraft is equipped with a suite of sensors, actuators, and computers that use computer vision and machine learning to provide autonomy.

- ATTOL will help teach an aircraft to see and navigate the runway just like a pilot.

Img: Airbus’ world’s first autonomous takeoff

Using CNNs (Convolutional Neural Networks):

Why Modern Aviation Needs ATTOL

Case 1

Problem:

Training requires a large amount of data covering all cases: many landings across a variety of runways, weather, and lighting conditions.

Manual annotation is also not possible, because a human cannot label distance, localizer, or glideslope values by looking at an image.

Solution:

Geometric Projection method to automate annotation using aircraft telemetry data.

How:

1. Commercial aircraft continuously determine their own position and attitude, from which the camera's position and orientation in world coordinates are computed.

Then the 3D world coordinates of runways and markings, extracted from satellite imagery, are geometrically projected into the 2D pixel coordinates where they should appear in the image.

Then the runway distance, localizer, and glideslope values can be computed from the position of the aircraft.

2. Photorealistic synthetic data is generated using flight simulators. To mimic real cameras, the simulators are configured to match sensor characteristics such as dynamic range and saturation, and exposure control is tuned via post-processing shaders.

This way, simulators can cover all scenarios and approaches, even those that would be life-threatening in the real world.
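The projection step in item 1 can be sketched with a simple pinhole camera model. This is an illustration under stated assumptions, not the project's actual pipeline: the function name `project_point`, the world and camera axis conventions, the camera intrinsics, and the runway dimensions below are all hypothetical, and lens distortion is ignored. In practice the world-to-camera rotation would be built from the aircraft's attitude telemetry and the camera mounting angles.

```python
def project_point(world_pt, cam_pos, world_to_cam, fx, fy, cx, cy):
    """Project a 3D world point to (u, v) pixel coordinates with a pinhole model.

    world_to_cam is a 3x3 rotation (list of rows) mapping world axes to camera
    axes (x right, y down, z forward). Returns None if the point lies behind
    the camera.
    """
    # Vector from the camera to the point, in world axes.
    d = [w - c for w, c in zip(world_pt, cam_pos)]
    # Rotate into camera axes.
    xc, yc, zc = (sum(r * v for r, v in zip(row, d)) for row in world_to_cam)
    if zc <= 0:
        return None
    # Perspective divide, then apply focal lengths and principal point.
    return (fx * xc / zc + cx, fy * yc / zc + cy)

# Assumed world axes: X along the runway, Y to the right, Z up.
# Hypothetical level camera looking straight down the runway.
WORLD_TO_CAM = [
    [0, 1, 0],   # camera x (right)   = world Y
    [0, 0, -1],  # camera y (down)    = -world Z
    [1, 0, 0],   # camera z (forward) = world X
]
cam_pos = (-3000.0, 0.0, 150.0)  # 3 km before the threshold, 150 m up

# Corners of a hypothetical 3000 m x 45 m runway, as if taken from satellite data.
runway_corners = [
    (0.0, -22.5, 0.0), (0.0, 22.5, 0.0),
    (3000.0, 22.5, 0.0), (3000.0, -22.5, 0.0),
]
pixels = [
    project_point(c, cam_pos, WORLD_TO_CAM, 1000.0, 1000.0, 960.0, 540.0)
    for c in runway_corners
]
print(pixels)
```

The resulting pixel coordinates could then serve as automatically generated labels for the runway outline in the corresponding camera frame.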

Case 2

Problem:

We need detection (classification) for detecting landing strips and regression for estimating distance, localizer and glide slope values.

Solution:

Use multi-task learning to implement both in a single network.

When different tasks rely on similar underlying understanding of data, sharing feature extraction layers can help the network generalize better, since features extracted in shared layers have to support multiple output tasks.

How:

Create a heavily modified version of SSD (Single Shot MultiBox Detector) in which a number of shared layers extract features about runway environments, followed by multiple task-specific heads that output bounding boxes, bounding box classes, distance to runway, localizer deviation, and glideslope deviation.
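One common way to train shared layers with several heads is to minimize a weighted sum of per-task losses. The sketch below shows that idea in plain Python for a single prediction. The source does not state which losses or weights were used, so the choice of binary cross-entropy for detection, smooth L1 for the regression outputs, the weight values, and all names here are assumptions.

```python
import math

def binary_cross_entropy(p, label, eps=1e-7):
    """Classification loss for a predicted runway probability p and a 0/1 label."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

def smooth_l1(pred, target, beta=1.0):
    """Regression loss: quadratic for small errors, linear for large ones."""
    diff = abs(pred - target)
    return 0.5 * diff * diff / beta if diff < beta else diff - 0.5 * beta

def multi_task_loss(pred, target, weights):
    """Weighted sum of the detection loss and the three regression losses."""
    loss = weights["cls"] * binary_cross_entropy(pred["runway_prob"], target["is_runway"])
    # Regression targets only make sense when a runway is actually present.
    if target["is_runway"]:
        for key in ("distance", "localizer", "glideslope"):
            loss += weights[key] * smooth_l1(pred[key], target[key])
    return loss

# Hypothetical head outputs and labels (distance in km, deviations in degrees).
weights = {"cls": 1.0, "distance": 1.0, "localizer": 5.0, "glideslope": 5.0}
target = {"is_runway": 1, "distance": 4.0, "localizer": 0.0, "glideslope": 0.0}
pred = {"runway_prob": 0.9, "distance": 4.2, "localizer": 0.1, "glideslope": -0.05}
print(multi_task_loss(pred, target, weights))
```

Because every term is backpropagated through the same shared layers, the features those layers learn have to be useful for all outputs at once, which is the generalization benefit described above.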

Case 3

Problem:

The current system can only process one frame at a time.

Solution:

Neural network techniques that operate on sequences of frames (such as recurrent networks) may allow us to leverage temporal information across several frames at a time.

This system can detect a runway from several miles away and calculate the distance to it. Localizer and glideslope deviation estimates are more challenging: even an error of 0.1 degree matters a great deal.
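A quick calculation shows why a 0.1 degree error matters. The sketch below converts an angular error into a position offset at a given distance using basic trigonometry; the function name and the sample distances are chosen only for illustration.

```python
import math

def offset_from_angle_error(distance_m, error_deg):
    """Position offset (in meters) caused by an angular error at a given distance."""
    return distance_m * math.tan(math.radians(error_deg))

for distance_m in (500, 2000, 5000):
    offset = offset_from_angle_error(distance_m, 0.1)
    print(f"{distance_m} m out: {offset:.2f} m offset")
```

At 5 km from the runway, a 0.1 degree error corresponds to roughly 8.7 m of lateral or vertical offset, which is a substantial fraction of a typical runway's width.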

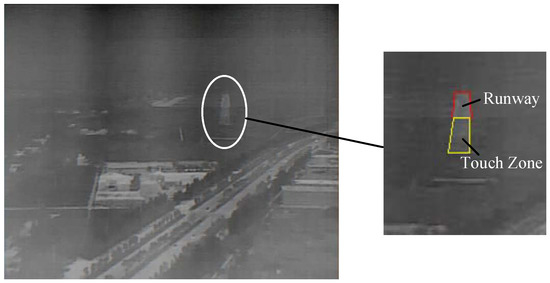

Img: Wayfinder’s Computer Vision Software detecting airstrip

Even though it was trained on synthetic images, the network performed well on real images. Collecting more data can improve the network further and help narrow the gap between synthetic and real data.

Also, injecting side-channel information like airport layouts, runway dimensions or non-standard glideslopes can help improve the performance of the network.

Also, vision-based sensors are a good option for runway track alignment and ground taxi. They can also serve as redundant sensors along with existing GNSS sensors during ground operations.

Case 4

Problem:

Estimating the 3D position of the aircraft with respect to a threshold or touchdown point on a runway.

Proposed Solutions:

1. Using feature detection on runway lines and appropriate scaling using the depth perception of a single camera. (Depth perception is the ability to see in 3D and estimate distance.)

2. Computing depth from the defocus of the camera. (Depth from defocus estimates the 3D surface of a scene from a set of images taken with different camera parameters, such as focal setting or axial position of the image plane.)

3. Using calibrated stereo. (Two or more cameras are calibrated to find their parameters and the rotation and translation between them, and correspondences are then found between the left and right image planes.)
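For the calibrated stereo option, the core relationship is the standard depth-from-disparity formula for a rectified camera pair: depth equals focal length times baseline divided by disparity. The sketch below applies it to one matched feature. The function name, focal length, baseline, and pixel positions are hypothetical values chosen for illustration, and it assumes the images have already been rectified using the calibration results.

```python
def stereo_depth(focal_px, baseline_m, x_left_px, x_right_px):
    """Depth (in meters) of a point matched in a rectified stereo pair.

    focal_px: focal length in pixels, baseline_m: distance between the cameras,
    x_left_px / x_right_px: horizontal pixel position of the same feature
    in the left and right images.
    """
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity

# Hypothetical runway marking seen 4 px apart by cameras mounted 0.5 m apart.
depth = stereo_depth(focal_px=1200.0, baseline_m=0.5, x_left_px=652.0, x_right_px=648.0)
print(f"estimated depth: {depth:.1f} m")

# The same feature with a half-pixel matching error gives a noticeably different depth.
depth_noisy = stereo_depth(1200.0, 0.5, 652.0, 648.5)
print(f"with 0.5 px error: {depth_noisy:.1f} m")
```

Because disparity shrinks as distance grows, small matching errors translate into large depth errors at long range, which is one reason careful calibration matters before test flights.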

Img: Wayfinder Team doing camera calibration before test flight

Indoor setup required to solve this problem: a scaled-down model of a runway. Pictures need to be taken while simulating the landing of an aircraft; these are used to fulfill the objectives and to compare with real data.

Pictures are required from at least 30 known camera positions. The allowed error band for the solution is ±10%.
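A check against the error band could look like the sketch below. The source does not say how the ±10% band is defined, so this assumes it means relative error between an estimated distance and the known ground-truth distance for each camera position; the function names and sample values are hypothetical.

```python
def within_error_band(estimated, actual, band=0.10):
    """True if the estimate is within the given relative error band of the actual value."""
    return abs(estimated - actual) <= band * abs(actual)

def count_passing(pairs, band=0.10):
    """Return (number of estimates within the band, total number of estimates)."""
    results = [within_error_band(est, act, band) for est, act in pairs]
    return sum(results), len(results)

# Hypothetical (estimated, actual) distances in meters for a few camera positions.
samples = [(1.95, 2.00), (3.40, 3.00), (0.52, 0.50), (4.10, 4.00)]
passed, total = count_passing(samples)
print(f"{passed} of {total} estimates are within the band")
```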

Img: Detection of Runway and Touch Zone using Aerial Image

More Information

- In a world where annual air travel volume is expected to double by 2036 and the number of pilots cannot scale quickly enough to meet that demand, adaptable, autonomous systems may provide an alternative solution.

- Advances in computer vision and machine learning, together with better sensors and cameras, are rapidly enabling major progress in the development of autonomous technologies and aircraft.

- The aim is to develop scalable, certifiable autonomy systems to enable self-piloted aircraft applications.

These systems can be used in vehicles ranging from small urban air vehicles such as air taxis to large commercial airplanes, for which teams are already investigating single-pilot operations.

- The ATTOL project was initiated by Airbus in June 2018 in collaboration with Project Wayfinder, with the ambition to revolutionize autonomous flight.

The team is building scalable, certifiable autonomy systems to power self-piloted aircraft applications from small urban air vehicles to large commercial planes.

The main objective was to explore how autonomous technologies, including the use of machine learning algorithms and automated tools for data labeling (annotation), processing, and model generation, could help pilots focus less on aircraft operations and more on strategic decision-making and mission management.

Hundreds of thousands of data points were used to train such algorithms, so the system can understand how to react to each and every event it could encounter.

It can also detect potential obstacles, including birds and drones (a capability also known as sense and avoid).

The team is trying to create a reference architecture that includes hardware and software systems and that, combined with a data-driven development process, enables an aircraft to perceive and react to its environment.

Airbus aims to build scalable, certifiable autonomy systems to power the next generation of aircraft.

- After extensive testing over around two years, Airbus concluded the ATTOL project with fully autonomous flight tests.

Airbus was able to achieve autonomous taxiing, take-off and landing of a commercial aircraft through fully automatic vision-based flight tests using on-board image recognition technology.

In total, over 500 test flights were conducted.

Approximately 450 of those flights were dedicated to gathering raw video data to support and fine-tune the algorithms, while a series of six test flights, each including five take-offs and landings per run, was used to test autonomous flight capabilities.

- The rapid development and demonstration of ATTOL's capabilities was made possible by a cross-divisional, cross-functional global team comprising Airbus engineering and technology teams.

- The test flights were carried out with an A350-1000 aircraft at Toulouse-Blagnac airport. The test crew comprised two pilots, two flight test engineers, and a test flight engineer.

- Airbus achieved autonomy in takeoff first (around January 2020) and then worked on autonomous landing; autonomous taxiing was achieved during the final rounds of testing (around June 2020).

Taxiing came last because it was very difficult for the ATTOL system to differentiate between the runway and the taxiway.

Also, runways are mostly just straight paths, whereas taxiways come with rules that the vision system must be able to follow. Training the system to do that proved difficult.

Img: Airport Runway, Taxiway & Aprons

To achieve the task, an advanced computer vision system was used with monitoring from the pilots.

This was done with the help of onboard cameras, radar, and lidar (light detection and ranging).

The information from these sources was sent to onboard computers, which processed it to steer the aircraft and control thrust. Several cameras were installed in different parts of the plane, including the tail, nose, and landing gear.

Img: Experimental sensor configuration on demonstrator vehicle including cameras, radar, and lidar.

For autonomous systems the technology is divided between software and hardware:

- Software enables the aircraft to perceive the environment around it and decide how best to proceed.

At the core of software are perception methods based on both machine learning algorithms and traditional computer vision.

The best techniques that have been developed for other applications such as image recognition and self-driving cars are used and expanded to the requirements of autonomous flights.

Decision-making software is also developed to safely navigate the world sensed around the aircraft.

- Hardware includes the suite of sensors and powerful computers required to feed and run the software. The hardware must meet rigorous requirements to ensure safe and reliable operation in the air.

- The development of autonomous aircraft will be an iterative process. Starting with an application of the autonomous system in a limited environment, the system will be trained to fully understand that environment, and then expand the environmental envelope over time.

The iterations will include a cycle of massive data collection followed by system testing, subsequent development, and then verification of system safety.

Once each new iteration has been verified, it will be deployed to start the next cycle of data collection and continuous improvement of the system performance. Safety is also a major concern.

Everything being worked on must pass rigorous testing to ensure what is created is not only revolutionary but also certifiable and secure.

- Rather than relying on an Instrument Landing System (ILS), the existing ground equipment technology currently used by in-service passenger aircraft at airports around the world where the technology is present, this automatic take-off was enabled by image recognition technology installed directly on the aircraft.

- Airbus test pilot Captain Yann Beaufils said: “The aircraft performed as expected during these milestone tests.

While completing alignment on the runway, waiting for clearance from air traffic control, we engaged the auto-pilot. We moved the throttle levers to the take-off setting and we monitored the aircraft.

It started to move and accelerate automatically maintaining the runway centre line, at the exact rotation speed as entered in the system.

The nose of the aircraft began to lift up automatically to take the expected take-off pitch value and a few seconds later we were airborne.”

- The technology can help airlines increase efficiency and safety, reduce costs, reduce pilot workload, improve traffic management, address the shortage of pilots, and enhance future operations.

- The technology is still under testing. The main aim is not to remove the pilot or co-pilot from the cockpit but to assist them better during landing, takeoff, and taxiing, and perhaps at some point in the future to enable the aircraft to do all of this by itself, without any additional assistance, if required.

Autonomous technologies will support pilots, enabling them to focus less on aircraft operation and more on strategic decision-making and mission management.

The pilot will be responsible for managing the aircraft and decision making during critical times.

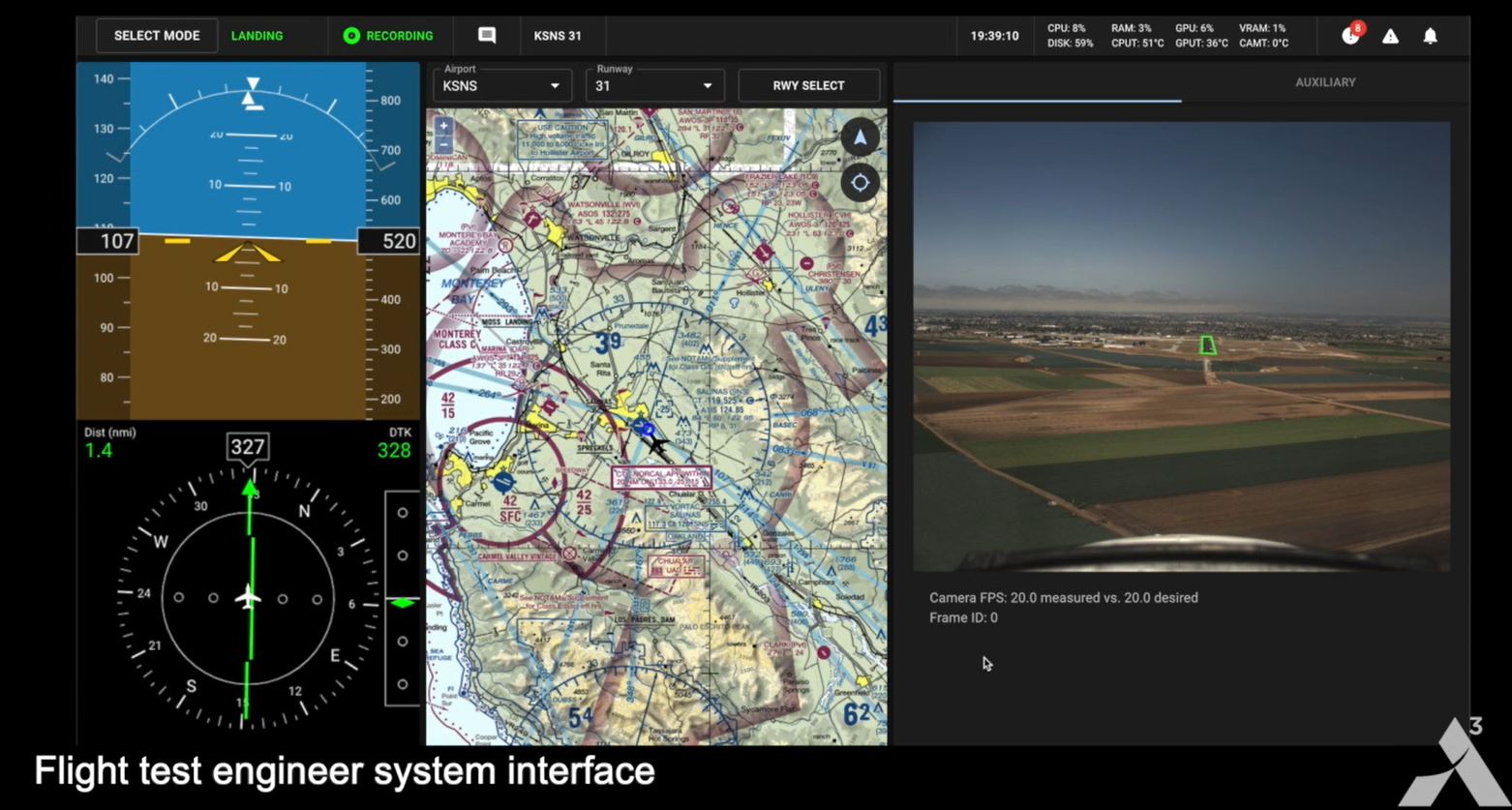

Img: First Data Collection Test Flight by Wayfinder

After the successful testing of ATTOL, the Wayfinder team is now working on data collection from real flights to improve the machine learning software and the data collection pipeline.

The three aims they are working on right now:

- Developing the hardware platform, i.e. a data collection platform to collect camera imagery of runway approaches from an aircraft at various airports.

- Collecting data from all that imagery and feeding it to the machine learning algorithms being developed by the Wayfinder team to create autonomous takeoff and landing capabilities.

- Running the algorithms in real time on the aircraft and demonstrating that they work in real time.

Img: Onboard Computer for Data Collection on Wayfinder test aircraft

The team is continuously working to advance the autonomous aviation revolution by:

- Architecting dedicated Machine Learning models

- Creating realistic simulations

- Developing safety critical software and hardware

- Advancing flights, testing systems with a flight test aircraft

- Scaling data collection capabilities and advancing algorithms

Img: Flight test engineer system interface

Scenarios in which this technology may help:

- If one or both pilots suffer a health emergency, lose consciousness, or are fatally injured mid-flight, this system may help land the aircraft automatically and safely.

- In risky military operations in or near enemy territory, this technology and its future improvements could let an aircraft complete a mission without carrying a pilot or risking human life.

Conclusion

In conclusion, the ATTOL system represents a major leap in aviation technology, offering safer, more efficient flight operations through computer vision and machine learning.

By enabling autonomous takeoff, landing, and taxiing, ATTOL reduces pilot workload, enhances safety, and addresses challenges like pilot shortages. While still in testing, it holds the potential to revolutionize the future of air travel.

FAQ

What is the ATTOL system?

The ATTOL system is a project that uses machine learning and computer vision to enable aircraft to navigate autonomously.

The system uses a combination of sensors, including cameras, radar, and LiDAR, to help the aircraft detect its surroundings and calculate how to navigate.

What are the goals of the ATTOL system?

The ATTOL system aims to improve aircraft safety and operational efficiency.

It also aims to reduce the need for external infrastructure, such as GPS signals or instrument landing systems, to enable automatic landings.

How was the ATTOL system tested?

The ATTOL system was tested on a full-sized Airbus A350-1000 airliner. The aircraft was flown through a series of human-controlled flights to gather video data and fine-tune the control algorithms.

The aircraft then successfully taxied, took off, and landed using the ATTOL system.
