How do camera sensors help machine vision applications?

Camera sensors are light-sensitive detectors that convert incident photons into an electrical signal that can be read by a digital device. Most cameras use 2D array detectors, and choosing the right sensor for a given application is often a trade-off between cost, desired final image resolution, and required readout speed.
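To make the resolution versus readout-speed trade-off concrete, here is a minimal sketch that computes the raw, uncompressed data rate a sensor produces. The resolutions, 8-bit depth, and 60 fps frame rate are hypothetical example values, and the calculation ignores interface protocol overhead.

```python
def raw_data_rate_mbit_s(width_px: int, height_px: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in megabits per second (1 Mbit = 1e6 bits)."""
    bits_per_frame = width_px * height_px * bits_per_pixel
    return bits_per_frame * fps / 1e6


# Hypothetical comparison: same bit depth and frame rate, two resolutions
low_res = raw_data_rate_mbit_s(1920, 1200, 8, 60)
high_res = raw_data_rate_mbit_s(4096, 3000, 8, 60)
print(f"{low_res:.2f} Mbit/s")   # 1105.92 Mbit/s
print(f"{high_res:.2f} Mbit/s")  # 5898.24 Mbit/s
```

Even the lower-resolution case already exceeds the nominal 1 Gbit/s of a Gigabit Ethernet link, which is why a higher resolution usually means accepting a lower frame rate, a faster interface, or both.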

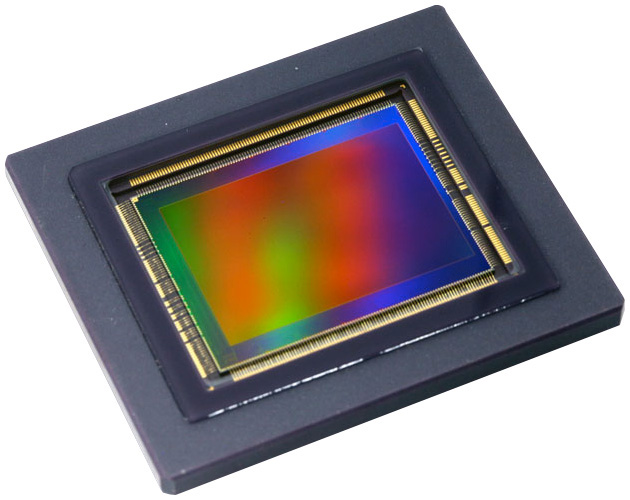

The heart of any camera is the sensor. Modern sensors are solid-state electronic devices containing millions of individual photodetector sites called pixels. While there are many camera manufacturers, the vast majority of sensors are made by only a handful of companies. However, two cameras with the same sensor can perform very differently because of the design of their electronic interface.

In the past, cameras used imaging tubes such as Vidicons and Plumbicons as image sensors. Although these tubes are no longer in use, they left a mark on the nomenclature for sensor size and format that remains to this day. Today, almost all machine vision sensors fall into one of two categories: charge-coupled devices (CCDs) and complementary metal-oxide-semiconductor (CMOS) imaging devices.
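The tube legacy is most visible in "inch" format names such as 2/3" or 1/2", which do not describe any physical dimension of the chip. A minimal sketch of the commonly quoted rule of thumb follows: roughly 16 mm of image diagonal per "optical inch", since a tube's usable image area was about two-thirds of its outer diameter. The 16 mm factor and the 4:3 aspect ratio are assumptions; the rule fits formats of about 1/2" and larger best, smaller formats sit closer to 18 mm per inch, and a datasheet always takes precedence.

```python
import math


def approx_sensor_size_mm(optical_format_inch: float,
                          aspect: tuple[int, int] = (4, 3)) -> tuple[float, float, float]:
    """Rule-of-thumb (width, height, diagonal) in mm for a legacy 'inch' sensor format.

    Assumes ~16 mm of image diagonal per 'optical inch' (about 2/3 of 25.4 mm,
    inherited from imaging tubes). Approximate only; real sensors deviate.
    """
    diagonal = 16.0 * optical_format_inch
    aw, ah = aspect
    hyp = math.hypot(aw, ah)  # diagonal of the aspect-ratio triangle (5 for 4:3)
    return diagonal * aw / hyp, diagonal * ah / hyp, diagonal


for name, fmt in [('1/2"', 1 / 2), ('2/3"', 2 / 3), ('1"', 1.0)]:
    w, h, d = approx_sensor_size_mm(fmt)
    print(f"{name:>4}: ~{w:.1f} x {h:.1f} mm, diagonal ~{d:.1f} mm")
```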

Types of Camera Sensors

CMOS camera sensor

The CMOS sensor has become one of the key technologies enabling many machine vision applications. Many automation tasks require recognizing objects in 3D, not just 2D, which usually means using multiple camera sensors to provide different viewing angles. The low cost of CMOS technology has made such complex, multi-camera machine vision applications economically viable.
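One common way two viewing angles become 3D information is stereo triangulation. The sketch below assumes a rectified, parallel camera pair (an assumption; the article does not specify a method) and applies the standard relation Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity of the same point between the two images. The rig parameters are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified, parallel stereo camera pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: horizontal shift of the same point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means a point at infinity")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 1400 px focal length, 10 cm baseline
for d in (70, 35, 14):
    print(f"disparity {d:>2} px -> depth {depth_from_disparity(1400, 0.10, d):.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for near ones.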

Improvements in the quantum efficiency of CMOS sensors (the fraction of incident photons converted into signal electrons) have also helped: imaging with short exposure times or in harsh low-light environments is no longer an impossible challenge. The quality of the sensor is key to the camera's overall optical performance, so while focus and aperture can help produce sharper images, the chip will often determine the final resolution.
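Why quantum efficiency matters most in low light can be shown with a simple per-pixel noise model: signal electrons equal QE times incident photons, photon shot noise is the square root of the signal, and read noise adds in quadrature. This sketch ignores dark current, and the photon count and 3 e- read noise are hypothetical values.

```python
import math


def snr(photons: float, qe: float, read_noise_e: float) -> float:
    """Signal-to-noise ratio of one pixel, ignoring dark current.

    Signal electrons = QE * incident photons. Noise combines photon shot noise
    (sqrt of the signal, from Poisson statistics) and read noise, added in quadrature.
    """
    signal_e = qe * photons
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e / noise_e


# Hypothetical low-light pixel: 100 photons during a short exposure, 3 e- read noise
for qe in (0.5, 0.8):
    print(f"QE {qe:.0%}: SNR {snr(100, qe, 3.0):.1f}")
```

In this example, raising QE from 50% to 80% lifts the SNR from about 6.5 to about 8.5 without any change in exposure time.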

Because CMOS sensors are very sensitive and can be read out quickly, they can capture a rapid series of images to cope with dynamically changing situations. Analyzing multiple images as a sequence is harder from a data-processing point of view, but in terms of total information content, these image stacks provide a large amount of material for analysis. Short snapshot exposures also help to avoid problems such as motion blur. Machine vision is becoming a major application area for CMOS sensors, although choosing the right sensor for the application remains very important.
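One simple way an image stack adds information is frame averaging: for a static scene with independent random noise, the pixel-wise mean of N frames reduces the noise by roughly a factor of √N. The sketch below simulates this with synthetic Gaussian noise; the scene value, noise level, and frame count are hypothetical, and moving scenes would need alignment first.

```python
import random
import statistics


def average_frames(frames: list[list[list[float]]]) -> list[list[float]]:
    """Pixel-wise mean of a stack of equally sized frames (each a list of rows)."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)] for r in range(rows)]


def noise_single_vs_stack(n_frames: int = 16, size: int = 32, true_value: float = 100.0,
                          sigma: float = 10.0, seed: int = 0) -> tuple[float, float]:
    """Simulate a static, uniform scene with Gaussian noise; return (single-frame std, stacked std)."""
    rng = random.Random(seed)
    frames = [[[true_value + rng.gauss(0.0, sigma) for _ in range(size)] for _ in range(size)]
              for _ in range(n_frames)]
    single = [p for row in frames[0] for p in row]
    stacked = [p for row in average_frames(frames) for p in row]
    return statistics.pstdev(single), statistics.pstdev(stacked)


one, many = noise_single_vs_stack()
print(f"single frame noise: {one:.2f}, 16-frame average: {many:.2f}")  # expect roughly 10 and 2.5
```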

One way to reduce the cost of camera sensors is to use monochrome sensors, which record only light intensity and not color. However, for applications such as fruit ripeness assessment, which often use fruit color as key information in the image interpretation step, a monochrome sensor may not be suitable.
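A small illustration of what a monochrome sensor can lose: a red and a green surface can produce almost the same grey level while having very different hues. The sketch approximates a monochrome response with ITU-R BT.601 luma weights, which is an assumption (a real sensor's spectral response differs), and the two RGB values are hypothetical.

```python
import colorsys


def luma_601(r: int, g: int, b: int) -> float:
    """Approximate grey level using ITU-R BT.601 luma weights (8-bit RGB input)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def hue_degrees(r: int, g: int, b: int) -> float:
    """Hue angle in degrees (0 = red, 120 = green) via the standard-library HSV conversion."""
    h, _, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360


# Hypothetical pixel values for a ripe (red) and an unripe (green) fruit surface
ripe, unripe = (220, 50, 50), (50, 140, 50)
for label, rgb in [("ripe", ripe), ("unripe", unripe)]:
    print(f"{label:>6}: grey {luma_601(*rgb):6.1f}, hue {hue_degrees(*rgb):5.1f} deg")
```

The grey levels differ by only about 2 units, while the hues are about 120 degrees apart, so the ripeness cue survives only in the color data.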

Faster frame rates and sensors that can operate outside the visible region are two key areas of machine vision camera sensor development. Faster frame rates force shorter exposures, which means fewer motion blur issues. Many applications will also benefit from the spectral information available at infrared wavelengths.
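Motion blur is set by how far an object moves during the exposure, measured in pixels; a frame rate f simply caps the exposure at 1/f. This minimal sketch estimates the blur and the longest exposure that keeps it under a chosen limit. The conveyor speed, field of view, and pixel count are hypothetical, and the object is assumed to move parallel to the sensor's pixel rows.

```python
def motion_blur_px(speed_mm_s: float, exposure_s: float, fov_mm: float, pixels_across: int) -> float:
    """Distance an object moves during one exposure, expressed in pixels on the sensor."""
    mm_per_px = fov_mm / pixels_across       # object-space size of one pixel
    return speed_mm_s * exposure_s / mm_per_px


def max_exposure_s(speed_mm_s: float, fov_mm: float, pixels_across: int,
                   max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps motion blur at or below max_blur_px."""
    mm_per_px = fov_mm / pixels_across
    return max_blur_px * mm_per_px / speed_mm_s


# Hypothetical line: 0.5 m/s conveyor, 200 mm field of view imaged across 2000 pixels
print(f"blur at 1 ms exposure: {motion_blur_px(500, 1e-3, 200, 2000):.1f} px")
print(f"exposure for <= 1 px blur: {max_exposure_s(500, 200, 2000) * 1e6:.0f} us")
```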

Charge-coupled device (CCD)

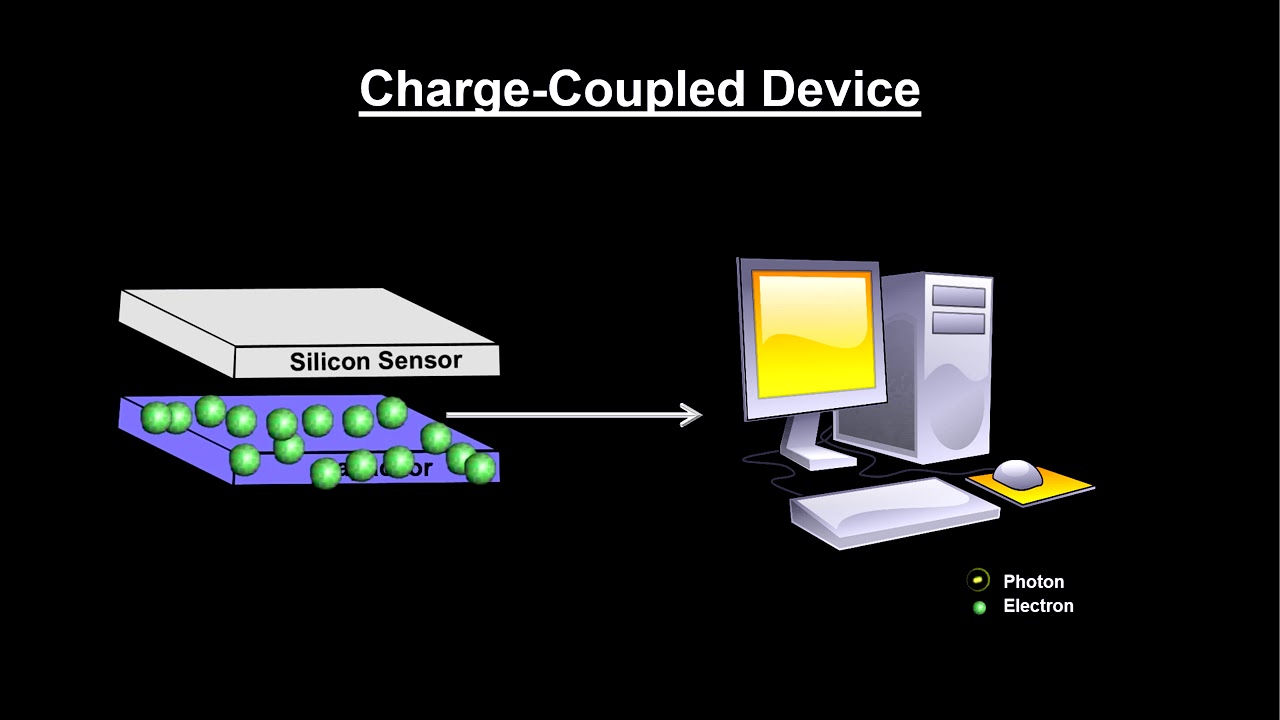

The charge-coupled device (CCD) was invented in 1969 by scientists at Bell Laboratories in New Jersey, USA. For many years, it was the dominant technology for digital imaging, from astrophotography to machine vision inspection. The CCD sensor is a silicon chip that contains an array of photosensitive sites.

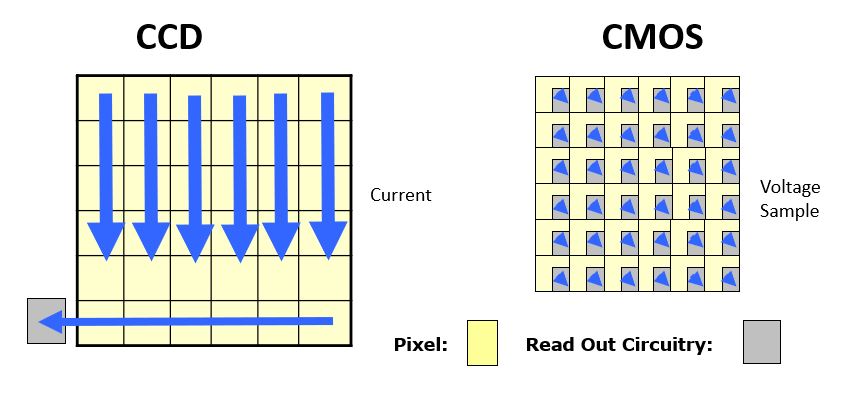

The term "charge-coupled device" refers to the way charge packets are moved across the chip, through shift registers, from the photosensitive sites to the readout, much like a bucket brigade passing buckets down a line. Clock pulses shift the potential wells that hold the charge packets across the chip, and at the output each packet is converted to a voltage by a sense capacitor. The CCD sensor itself is an analogue device; in a digital camera, its output is immediately converted to a digital signal by an analog-to-digital converter (ADC), either on-chip or off-chip. In analogue cameras, the voltage at each site is read out in a specific sequence, with synchronization pulses inserted into the signal so that the image can be reconstructed.
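The bucket-brigade readout can be sketched as a toy model: each parallel clock moves the rows down one step so the bottom row enters the serial register, and each serial clock moves the packet nearest the output onto the sense capacitor. Row order, the output node sitting at the right-hand end of the serial register, and the conversion gain are all assumptions for illustration; real CCDs shift every row simultaneously, which popping the bottom row stands in for.

```python
def ccd_readout(charges_e: list[list[float]], gain_uv_per_e: float = 5.0) -> list[float]:
    """Toy model of CCD 'bucket brigade' readout.

    charges_e: electrons collected per pixel, rows listed top to bottom; the bottom
               row is assumed to sit next to the horizontal (serial) shift register.
    gain_uv_per_e: hypothetical conversion gain of the output node (microvolts per electron).
    Returns output voltages in the order they leave the chip.
    """
    image_area = [list(row) for row in charges_e]  # copy so the caller's data is untouched
    voltages = []
    while image_area:
        # Parallel clock: rows move down one step; the bottom row enters the serial register.
        serial_register = image_area.pop()
        while serial_register:
            # Serial clock: the packet nearest the output node (right-hand end) is read.
            packet_e = serial_register.pop()
            voltages.append(packet_e * gain_uv_per_e)  # charge-to-voltage conversion
    return voltages


frame = [[10, 20, 30],
         [40, 50, 60]]
print(ccd_readout(frame, gain_uv_per_e=1.0))  # [60.0, 50.0, 40.0, 30.0, 20.0, 10.0]
```

Every packet leaves through the same single output node, which makes the readout uniform but inherently serial.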

Charge packets can only be shifted so fast, so this serial transfer is the main cause of the CCD's speed disadvantage. It also gives the CCD its high sensitivity and pixel-to-pixel consistency: because every charge packet goes through the same transfer and charge-to-voltage conversion, the CCD is very uniform across its photosensitive sites. Charge transfer is also linked to "blooming", where charge from a photosensitive site overflows into neighboring sites because of the finite well depth (charge capacity), placing an upper limit on the useful dynamic range of the sensor. In CCD camera images, blooming appears as bright spots that smear into the surrounding pixels.
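A toy model makes the blooming mechanism visible: any site whose charge exceeds the full-well capacity spills the excess to its neighbors, which fill up in turn. This sketch assumes spill happens only along one column, split equally in both directions, with no anti-blooming drain, and discards charge that runs off the column ends; all of these are simplifying assumptions, not a model of any specific sensor.

```python
def bloom_column(signal_e: list[float], full_well_e: float) -> list[float]:
    """Toy blooming model for one CCD column.

    signal_e:    electrons each site would collect if wells were unlimited
    full_well_e: well capacity per site
    Charge above capacity spills half up and half down the column, filling
    neighbouring wells in turn; whatever passes the ends of the column is discarded.
    """
    wells = [min(s, full_well_e) for s in signal_e]
    for i, s in enumerate(signal_e):
        excess = s - full_well_e
        if excess <= 0:
            continue
        for step in (-1, 1):                  # spill toward both ends of the column
            carry = excess / 2
            j = i + step
            while carry > 0 and 0 <= j < len(wells):
                moved = min(full_well_e - wells[j], carry)  # fill whatever room is left
                wells[j] += moved
                carry -= moved
                j += step
    return wells


# A single very bright spot in the middle of a 7-site column (full well = 100 e-)
print(bloom_column([0, 0, 0, 500, 0, 0, 0], 100))
```

The single 500 e- spot ends up as a five-site streak of saturated pixels, which is the smeared bright spot seen in CCD images.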

To make up for the chip area occupied by the shift registers, microlenses are used to increase the fill factor, or effective photosensitive area, by focusing incoming light onto the photosensitive part of each pixel. This improves pixel efficiency but increases angular sensitivity: light rays must hit the sensor close to normal incidence to be collected effectively.
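The angular sensitivity matters most at the sensor corners, where the chief ray from the lens arrives furthest from normal. A simple geometric approximation gives that angle as atan(image height / exit pupil distance). The sensor size and exit pupil distances below are hypothetical; the acceptable angle for a given sensor would come from its datasheet.

```python
import math


def chief_ray_angle_deg(image_height_mm: float, exit_pupil_distance_mm: float) -> float:
    """Approximate angle between the chief ray and the sensor normal at a given image height."""
    return math.degrees(math.atan(image_height_mm / exit_pupil_distance_mm))


# Hypothetical 2/3-inch sensor (11 mm diagonal): the corner sits 5.5 mm from the centre
corner_mm = 11.0 / 2
for epd in (15.0, 50.0, 150.0):
    print(f"exit pupil {epd:>5.0f} mm -> corner angle {chief_ray_angle_deg(corner_mm, epd):4.1f} deg")
```

A lens with a distant exit pupil (approaching image-side telecentric) delivers light closer to normal incidence, which suits microlens-equipped sensors better.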

Understanding Camera Vision Inspection Systems

Camera vision inspection systems use cameras combined with image processing software and algorithms to inspect products or processes automatically. They capture images or videos in real time and analyze them against predefined quality standards to detect defects, deviations, or anomalies with high accuracy. These systems enhance quality control, increase efficiency, and reduce costs by automating inspection tasks that were traditionally done manually.
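One of the simplest ways to "analyze images against predefined quality standards" is golden-template comparison: subtract a known-good reference image and flag pixels that differ by more than a tolerance. This sketch uses tiny hypothetical grey-level frames and hypothetical tolerances; practical systems typically also align the images and compensate for lighting variation before comparing.

```python
def inspect_against_reference(image, reference, pixel_tol=30, max_defect_pixels=5):
    """Golden-template comparison: flag pixels that differ from a known-good reference.

    image, reference:  equally sized grey-level frames (lists of rows, 0-255)
    pixel_tol:         hypothetical per-pixel difference tolerated as normal variation
    max_defect_pixels: hypothetical number of out-of-tolerance pixels allowed before failing
    Returns (passed, list of (row, col) defect locations).
    """
    defects = [
        (r, c)
        for r, (row, ref_row) in enumerate(zip(image, reference))
        for c, (p, q) in enumerate(zip(row, ref_row))
        if abs(p - q) > pixel_tol
    ]
    return len(defects) <= max_defect_pixels, defects


reference = [[200] * 4 for _ in range(4)]
good = [[205, 198, 201, 199] for _ in range(4)]
scratched = [row[:] for row in good]
scratched[1][2] = scratched[2][2] = 40  # a dark scratch across two pixels
print(inspect_against_reference(good, reference))                          # (True, [])
print(inspect_against_reference(scratched, reference, max_defect_pixels=1))  # (False, [(1, 2), (2, 2)])
```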

Key factors in implementing these systems include selecting appropriate camera resolution and speed, ensuring consistent lighting, integrating with existing production lines, and planning for maintenance and support. Proper calibration and testing are essential to maintain accuracy and reliability in dynamic production environments.
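Selecting camera resolution often starts from a back-of-the-envelope calculation: how many pixels must span the field of view so that the smallest feature of interest covers a few pixels. Two pixels per feature is the sampling minimum, and three to four is a commonly used, more robust rule of thumb. The field of view and defect size below are hypothetical, and integer micrometre inputs keep the ceiling division exact.

```python
def pixels_needed(fov_um: int, smallest_feature_um: int, pixels_per_feature: int = 3) -> int:
    """Minimum sensor pixels across one axis of the field of view.

    fov_um:              field of view along this axis, in micrometres
    smallest_feature_um: smallest feature or defect to detect, in micrometres
    pixels_per_feature:  pixels that should span the smallest feature (rule of thumb)
    """
    return -(-fov_um * pixels_per_feature // smallest_feature_um)  # ceiling division


# Hypothetical task: 100 mm wide field of view, smallest defect 0.2 mm
print(pixels_needed(100_000, 200))     # 1500
print(pixels_needed(100_000, 200, 4))  # 2000
```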

Conclusion

The traditional battle between CCD and CMOS sensors is almost over, and CMOS sensors have won. For the key decisions in machine vision system design, we now need to turn to the sensor parameters that have a greater influence on final system performance, and explore which types of vision applications benefit most from each.

Computer vision was a rapidly growing $3.23 billion sector in 2021, with expected growth of 7.7% per year. Computer vision involves collecting visual information, often with camera sensors, and using image recognition so that computers can "interpret" this visual information as useful data.

The success and rapid growth of computer vision are due to its usefulness in many applications, including industrial automation, the creation of autonomous vehicles and robots, and in general "intelligent" systems where devices can respond to external stimuli in their environment.

Enhance Your Camera Vision Inspection System with Labellerr!

Labellerr offers expert data annotation solutions to boost the accuracy and reliability of your camera vision inspection systems. Contact us today for a free demo and elevate your machine vision capabilities.
