Data augmentation: train deep learning models with less data

Construction of reliable models that can extrapolate generalisations from distribution data is one of the primary objectives of deep learning. A huge dataset is one of the key ingredients for creating these models. Large datasets like the image-net, which has numerous images, and the text datasets, which contain more than a billion words, are available for training computer vision and natural language processing (NLP) systems, respectively. This has allowed deep learning to advance recently.

When using deep learning, it's usual to run into issues with the amount of the available data. You'll need extra data, which is when data augmentation comes in, in order for the data in your model to be correctly generalised. Additionally, Data Augmentation is a strategy used to enhance model performance in cases where it is subpar. If you just have a limited dataset, this blog will clearly describe data augmentation and explain how it is done for deep learning.

Let’s first clear our understanding about deep learning and data augmentation

Deep learning: what is it?

A branch of machine learning called deep learning uses artificial neural networks (ANNs), which are modelled after the neurons in the human brain, to create systems and models that are able to learn and perform at the cutting edge in specific tasks.

What is data augmentation?

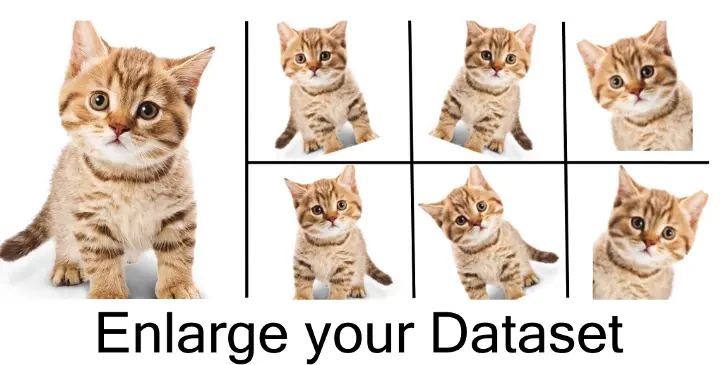

Data augmentation is the technique of creating additional data points from current data in order to artificially increase the amount of data. In order to amplify the data set, this may involve making small adjustments to the data or utilizing machine learning methods to produce new sets of data in the generative model of the original data.

Data augmentation and data augmentation: train deep learning models with limited data are related

Because there are so many variables that need to be modified during training, many deep learning models need big datasets in order to perform well.

Data collecting takes time and money since it requires money, labor, computational power, and time to gather and analyze the information.

It would be quite difficult to gather more data whenever your data set, as you have seen, has little data. Cloning and modifying your current data allowed you to carry out data augmentation, which more than made up for the expense of further data acquisition.

Importance of data augmentation

Here are a few of the factors that have contributed to the rise in popularity of data augmentation approaches in recent years.

- It enhances the effectiveness of machine learning models (more diverse datasets)

Practically every state-of-the-art deep learning application, including object identification, picture classification, image recognition, understanding of natural language semantic segmentation, and others, makes extensive use of data augmentation techniques.

By creating fresh and varied examples for training datasets, augmented data is enhancing the efficiency and outcomes of deep learning models.

2. Lowers the expense of running the data collection operations

Deep learning models may require time-consuming and expensive operations for data collecting and data labeling. By adopting data augmentation techniques to change datasets, businesses can save operating costs.

How data augmentation helps you when you have limited data for deep learning?

With the aid of data augmentation, new images can be tweaked (made slightly better).

It utilizes previously created samples and modifies them in certain ways to increase the amount of training samples while also producing new samples usually applied to image data. These image processing choices are frequently employed as pre-processing methods to ensure that CNN-based image classification models are reliable.

Below are some most commonly used techniques of data augmentation that you can consider:

Most used techniques for data augmentation

Here, we'll go through a few straightforward but effective augmentation methods that are frequently employed. Let's assume anything before we investigate these methods for the sake of simplicity. The underlying premise is that we don't need to take the area outside of the image into account. To ensure that the assumption is accurate, we'll apply the strategies listed below.

Flip method

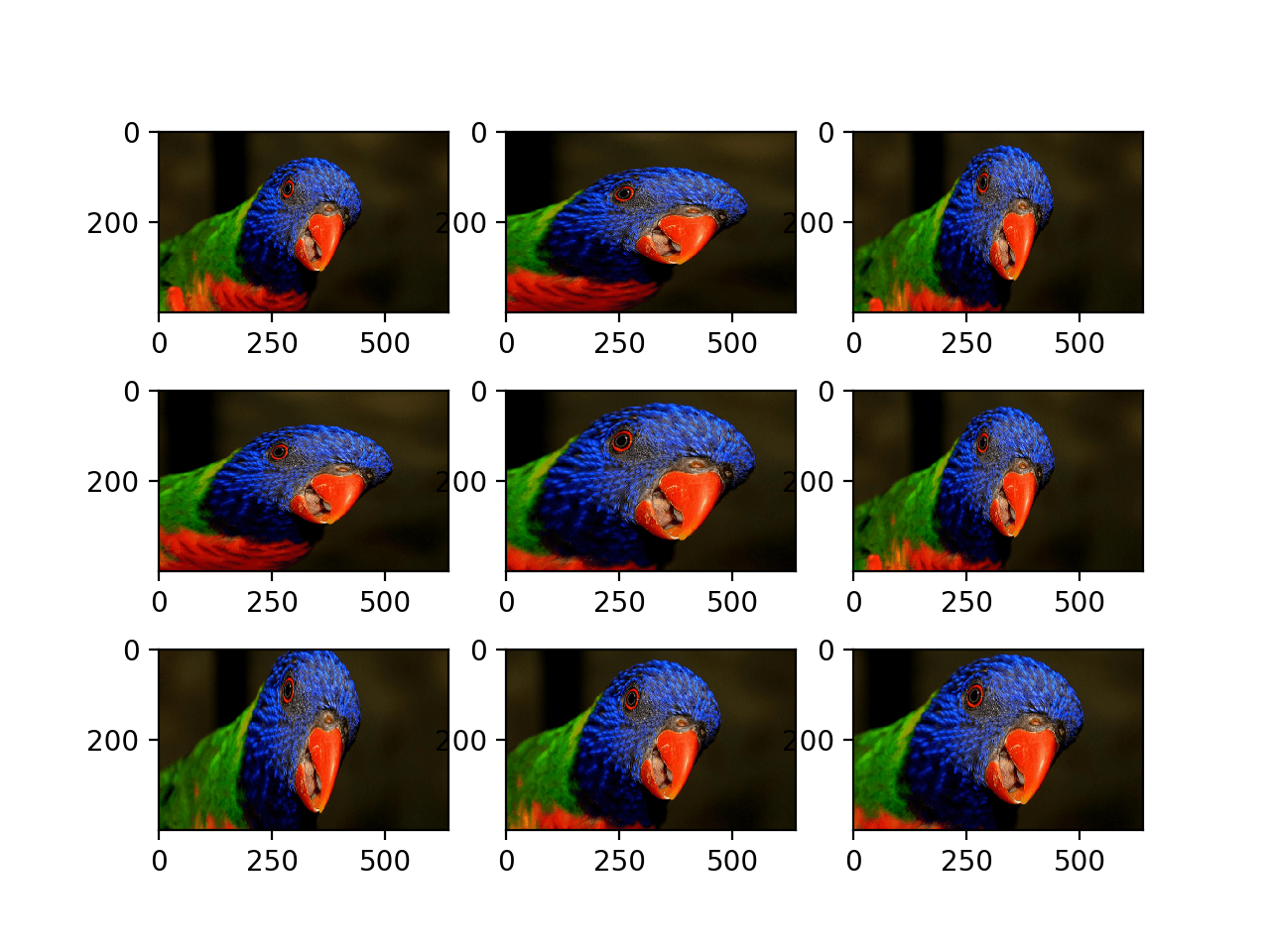

Images can be rotated both horizontally and vertically. Certain frameworks don't support vertical flips. However, executing a horizontal flip first, then a 180-degree rotation of the image, is identical to a vertical flip. Here are some instances of images that have been reversed.

Rotation method

It's important to keep in mind that following rotation, image dimensions might not be maintained. Right-angle rotation will maintain the image size if the image is a square. If it's a rectangle, a 180° rotation would maintain the shape's dimensions.

The size of the final image will alter as the image is rotated at smaller degrees. In the part after this, we'll look at how to approach this problem. The square images in the examples below have been rotated in a perpendicular direction.

Scale Method

You can zoom in or out of the image. The image size after scaling outward will be greater than the initial image size. The majority of image frameworks remove a portion of the new image that is the same size as the old image. In the part after this one, we'll discuss scaling inward, which shrinks the image and compels us to assume certain things about the area beyond the boundary. Examples of scaled-down photos are shown below.

Crop Method

Users simply randomly sample a portion of the original image, as opposed to scaling. This part is then scaled back to its original size. This technique is frequently referred to as random cropping. Here are a few instances of random cropping. If you look carefully, you can see that this approach and scaling are different.

Translation Method

Simply shifting the image in X or Y direction constitutes translation (or both). The image in the example below is translated suitably under the assumption that there is a black background outside its limit. Due to the fact that most things can be found practically everywhere in the image, this kind of augmentation is highly helpful. The convolutional neural network must search everywhere as a result.

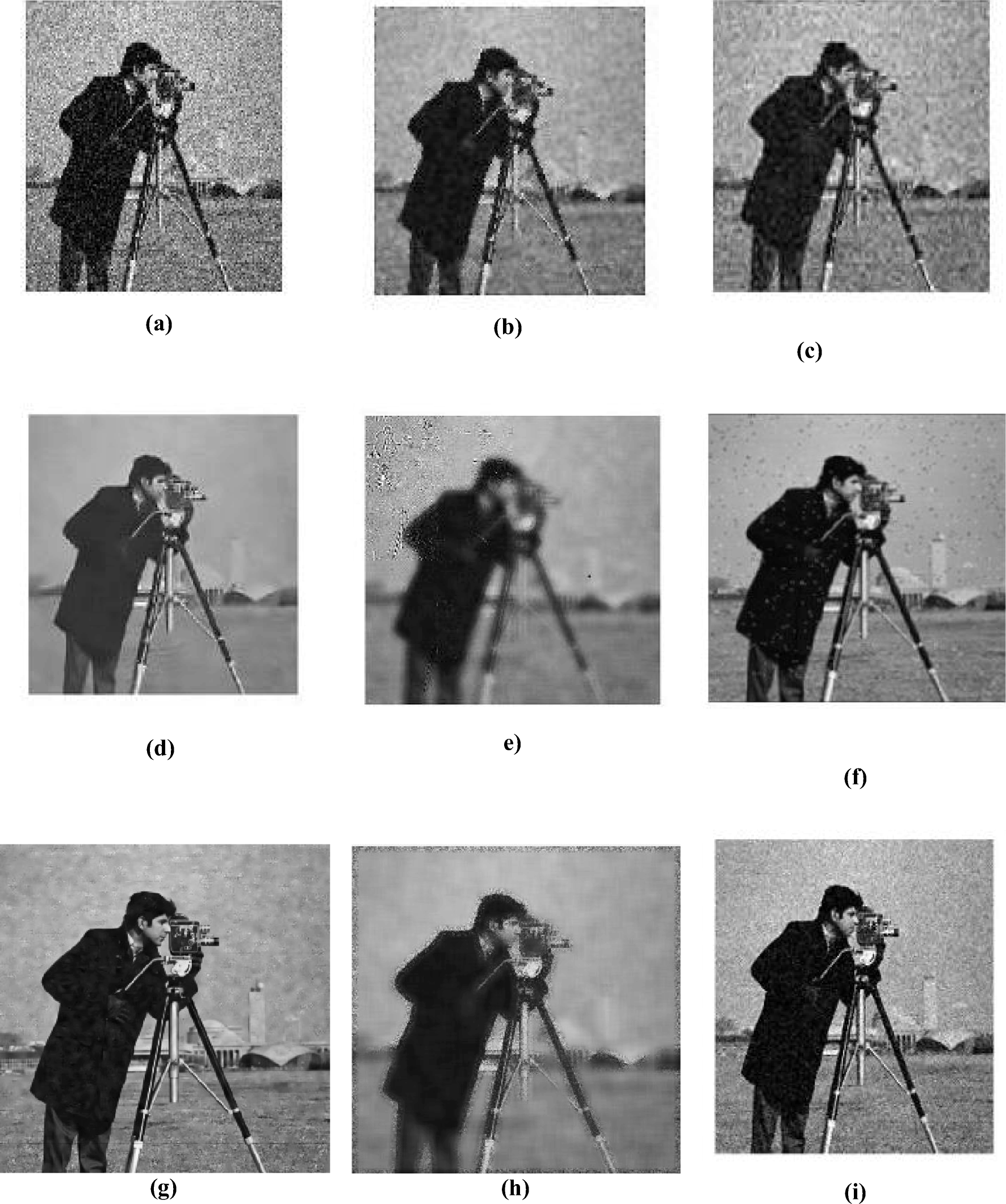

Gaussian Noise Method

When the neural network attempts to learn frequent patterns (high frequency features), which may or may not be relevant, over-fitting typically occurs. The high frequency features are actually distorted by gaussian noise, with zero mean and ultimately has sets of data in all frequencies. As a result, lower frequency components—typically, your intended data—are similarly affected, but the neural network may train itself to ignore that. The ability to learn can be improved by adding just the appropriate amount of noise.

This picture illustrates on how Gaussian noise method helps in bringing quality by distortion

Conclusion

By supplying more prediction errors and more variability in a deep learning model, data augmentation lessens data over fitting.It improves the capacity for generalization

It addresses issues of class disparity. It is one of the most effective methods when you have limited data for your deep learning model. But, it is always recommended to go for high-quality and high-quantity datasets as they are more efficient in delivering the right results.

We are a training data platform that helps in training high-quality data. We have a team of high-skilled annotation experts. Alongside, we are also proficient with large scale custom data collections covering audio, image, testing, sentiment and point of interest. We help you generate data artificially, giving you access to hard-to-find or edge-case

data. If you are looking for a platform that can serve you the best purpose in your data training project, then we are here to help.

Visit our website and learn more about us!

Simplify Your Data Annotation Workflow With Proven Strategies

.png)