Building a Pill Counting System with Labellerr and YOLO

Fine-tuning YOLO for pill counting enables accurate detection and tracking of pills in pharmaceutical setups. Learn how to customize YOLO for your dataset to handle overlapping pills, varied lighting, and real-time counting tasks efficiently.

In the pharmaceutical industry, packaging is not merely about containment; it is a critical final step in the quality control chain, where precision is paramount.

The primary challenge extends far beyond simply getting the right number of pills into a bottle.

While automated mechanical counters have achieved high speeds, they operate with a fundamental limitation: they are blind. They can tally objects passing a sensor, but they cannot assess the quality of those objects.

This creates a significant gap where defects like broken, chipped, or discolored pills, foreign contaminants, or incorrect products can pass through undetected.

Why it is necessary

The stakes for this process are exceptionally high, governed by three critical factors:

- Patient Safety: The foremost priority is ensuring every patient receives the correct medication at the precise dosage. An inaccurate count can lead to underdosing, rendering a treatment ineffective, or overdosing, which can cause toxic effects and severe adverse reactions.

- Regulatory Compliance: Global regulatory bodies mandate strict Good Manufacturing Practice (GMP) standards. Any deviation, including count or quality errors, can result in severe penalties, product recalls, and production shutdowns.

- Operational Integrity: Counting errors disrupt inventory management, leading to costly overstocking or critical shortages. A single product recall can inflict immense financial and reputational damage, eroding trust between the manufacturer, healthcare providers, and patients.

Traditional systems, while fast, cannot address the qualitative aspect of inspection. This is the problem domain where computer vision provides a transformative solution.

Computer Vision: Enabling Biotech to See and Inspect

Computer vision is an application of artificial intelligence that allows systems to interpret and understand visual information from images or videos.

In a pharmaceutical context, it upgrades the packaging line from a simple counting station to an intelligent, real-time quality assurance checkpoint.

This enables the system to not only count but also perform a comprehensive inspection, identifying issues that are invisible to mechanical counters:

- Defect Detection: The model can identify and flag tablets that are broken, cracked, or chipped.

- Quality Verification: It can detect discoloration or surface imperfections that may indicate a problem with the batch.

- Contaminant Identification: The system can spot foreign objects within the product flow.

- Product Verification: By analyzing shape, size, color, and even imprinted markings, the AI can ensure that the correct product is being packaged, preventing dangerous mix-ups.

This technology represents a paradigm shift from reactive quality control where defects are hopefully caught later, to proactive, integrated quality assurance that prevents errors at their source.

How Labellerr helps Biotechnology

The "intelligence" of a computer vision model is not inherent; it is learned from data. An AI model is only as accurate and reliable as the dataset it was trained on.

This is where the critical process of data annotation becomes the foundation of the entire system. To teach an AI to recognize a "broken pill," it must be shown thousands of examples of broken pills, each one meticulously labeled by a human.

This is the core function of a data annotation platform like Labellerr.

Labellerr enable the development of robust pharmaceutical AI by providing:

- Advanced Annotation Tools: A suite of tools for drawing precise bounding boxes, polygons, and segmentation masks around objects in an image, which is essential for teaching the model exactly what to look for.

- Workflow and Quality Management: Features to manage large teams of annotators, enforce consistent labeling guidelines, and run automated quality checks to ensure the accuracy of the final dataset. This includes review cycles and consensus mechanisms to minimize human error.

- AI-Assisted Labeling: Using existing models to suggest initial labels, which human annotators then review and correct. This dramatically speeds up the annotation process without sacrificing quality.

In an industry where errors are unacceptable, the certainty provided by a meticulously annotated dataset is non-negotiable.

How to Create Your Own Solution Using YOLO and Labellerr

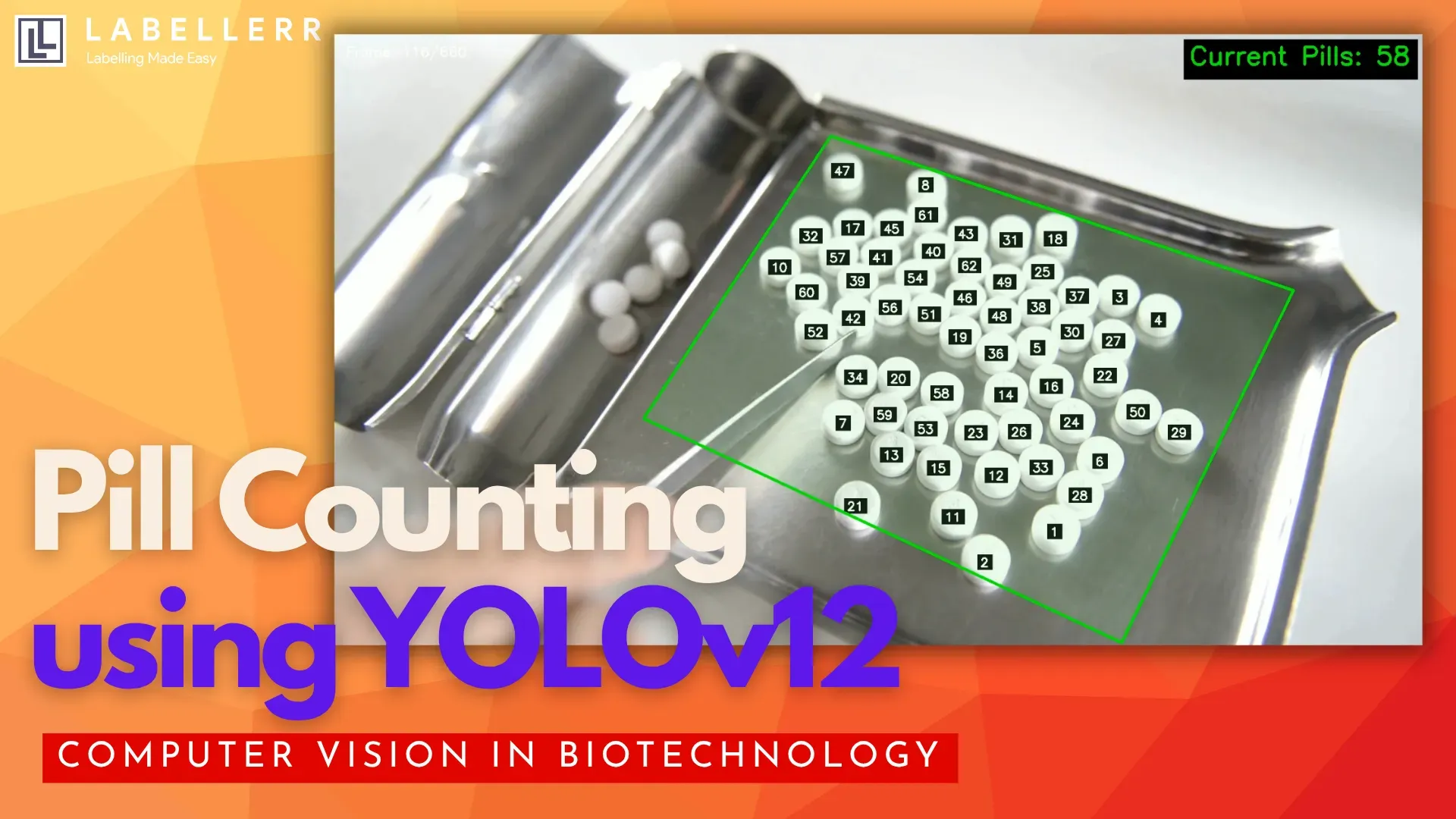

You can build a functional pill counting system using widely available tools. The following steps outline the process, using the popular YOLO (You Only Look Once) object detection model and a data annotation platform like Labellerr.

Step 1: Dataset Creation and Annotation with Labellerr

To build accurate detection models, having real-world, industry-specific data is essential.

Collecting large image datasets manually can be challenging, but with Labellerr, you can generate thousands of annotated images from just a few videos in one seamless process.

Simply create a video annotation project on Labellerr, upload your videos, and define your target class (for example, Pill). Then, annotate the first frame using the SAM Magic Wand to label all visible objects.

Once done, start the SAM2 tracking, it automatically tracks and annotates the same objects across all frames in the video.

This single automated workflow transforms your videos into a large, high-quality image dataset.

When complete, you can export your annotations in JSON format.

Step 2: Model Training with YOLOv12

Once your annotated dataset is ready, the next step is to train YOLOv12 for pill detection and counting.

YOLOv12 provides high accuracy and real-time performance, making it ideal for healthcare automation tasks like pill counting.

Before that, we need clone some utils functions to convert our annotations to YOLO format.

!git clone https://github.com/Labellerr/yolo_finetune_utils.git

Now, convert your annotation json to YOLO format.

from yolo_finetune_utils.video_annotation.yolo_converter import convert_to_yolo_segmentation

ANNOTATION_FILE = "path/to/annotations.json"

VIDEOS_DIRECTORY = "path/to/video/dir"

OUTPUT_DATASET_DIR = "path/to/output/dir"

convert_to_yolo_segmentation(

annotation_path=ANNOTATION_FILE,

videos_dir=VIDEOS_DIRECTORY,

use_split=True,

split_ratio=(0.7, 0.2, 0.1),

output_dir=OUTPUT_DATASET_DIR

)

Parameters:

annotation_path: Path to the JSON file containing video annotationsvideos_dir: Directory containing the source video filesuse_split: Boolean flag to enable dataset splittingsplit_ratio: Tuple defining train/validation/test split (70%/20%/10%)output_dir: Destination directory for the converted YOLO dataset

Now, start our YOLO model training.

First install ultralytics library,

!pip install ultralytics

Now start YOLOv12 model training,

# Load a model

model = YOLO("yolo12n-seg.yaml")

# Train the model

results = model.train(

data=r"yolo_dataset\data.yaml", # Path to your dataset YAML file

epochs=300, # Number of training epochs

imgsz=640, # Image size

batch=-1, # Batch size

device=0, # GPU device (0 for first GPU, 'cpu' for CPU)

workers=4, # Number of dataloader workers

project="yolo12_segmentation-2", # Project folder name

name="train_run", # Experiment name

)

Training Parameters:

data: Path to the data.yaml configuration fileepochs: Number of training iterations (200 for thorough training)imgsz: Input image size (640x640 pixels)batch: Batch size (-1 enables auto-batch sizing based on GPU memory)device: GPU device ID (0 for first GPU, 'cpu' for CPU training)workers: Number of parallel data loading threadsproject: Output directory for training artifactsname: Specific run name for organization

Output: The model will be trained and checkpoints saved to yolo12_segmentation-2/train_run/weights/. Key files include:

best.pt: Best performing model weightslast.pt: Weights from the final epoch- Training curves and validation metrics

Training Tip: The auto-batch feature (batch=-1) automatically determines the optimal batch size based on available GPU memory. Monitor the training output for metrics like box_loss, seg_loss, cls_loss, and mAP scores.

Step 3: Implement Tracking Logic to Ensure Accurate Counts

Now, create a class that tracks and count the no of pills in the given region.

Run this class with your custom trained model on a video to track and count the pills

import cv2

import numpy as np

from ultralytics import YOLO

from collections import defaultdict, deque

class RealTimePillCounter:

def __init__(self, model_path='best.pt', conf_threshold=0.6, iou_threshold=0.5):

"""

Initialize the real-time pill counter with YOLO model

Args:

model_path: Path to the trained YOLO model

conf_threshold: Confidence threshold for detections (default: 0.6)

iou_threshold: IOU threshold for NMS (default: 0.5)

"""

self.model = YOLO(model_path)

self.conf_threshold = conf_threshold

self.iou_threshold = iou_threshold

self.roi = None

self.track_history = defaultdict(lambda: deque(maxlen=30))

def select_roi(self, frame):

"""

Interactive ROI selection on the first frame using fullscreen and point input

Creates a polygon from selected vertices

Args:

frame: First frame of the video

Returns:

roi: Selected region vertices as list of points [(x1,y1), (x2,y2), ...]

"""

clone = frame.copy()

points = []

def mouse_callback(event, x, y, flags, param):

if event == cv2.EVENT_LBUTTONDOWN:

points.append((x, y))

# Create fullscreen window

window_name = 'Select ROI - Fullscreen'

cv2.namedWindow(window_name, cv2.WINDOW_NORMAL)

cv2.setWindowProperty(window_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

cv2.setMouseCallback(window_name, mouse_callback)

print("\n=== ROI Selection Instructions ===")

print("1. Click on the screen to add vertices of the region")

print("2. Add at least 3 points to form a polygon")

print("3. Press SPACE to confirm selection")

print("4. Press 'r' to reset points")

print("5. Press ESC to exit")

print("===================================\n")

while True:

display = clone.copy()

# Draw all clicked points

for i, point in enumerate(points):

cv2.circle(display, point, 8, (0, 255, 255), -1)

cv2.putText(display, f'V{i+1}', (point[0]+15, point[1]-15),

cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 255), 2)

# Draw polygon if we have at least 3 points

if len(points) >= 3:

# Draw semi-transparent polygon

overlay = display.copy()

pts = np.array(points, np.int32)

pts = pts.reshape((-1, 1, 2))

cv2.fillPoly(overlay, [pts], (0, 255, 0))

cv2.addWeighted(overlay, 0.2, display, 0.8, 0, display)

# Draw polygon outline

cv2.polylines(display, [pts], True, (0, 255, 0), 3)

# Draw lines between consecutive points

if len(points) >= 2:

for i in range(len(points) - 1):

cv2.line(display, points[i], points[i+1], (0, 255, 0), 2)

# Draw instructions

instructions = [

f'Vertices: {len(points)} | Click to add vertices',

'SPACE: Confirm | R: Reset | ESC: Exit'

]

y_offset = 40

for i, text in enumerate(instructions):

# Background for text

(text_w, text_h), _ = cv2.getTextSize(text, cv2.FONT_HERSHEY_SIMPLEX, 1, 2)

cv2.rectangle(display, (10, y_offset - 30 + i*50),

(text_w + 30, y_offset + 10 + i*50), (0, 0, 0), -1)

cv2.putText(display, text, (20, y_offset + i*50),

cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)

cv2.imshow(window_name, display)

key = cv2.waitKey(1) & 0xFF

if key == 32: # SPACE key

if len(points) >= 3:

self.roi = points.copy()

print(f"ROI polygon selected with {len(points)} vertices: {self.roi}")

break

else:

print("Please select at least 3 vertices to form a polygon!")

elif key == ord('r') or key == ord('R'): # Reset

points.clear()

print("Vertices reset")

elif key == 27: # ESC key

if len(points) >= 3:

self.roi = points.copy()

print(f"ROI polygon selected with {len(points)} vertices: {self.roi}")

break

else:

print("Exiting without ROI selection")

break

cv2.destroyWindow(window_name)

return self.roi

def point_in_roi(self, point):

"""

Check if a point is inside the ROI polygon

Args:

point: (x, y) coordinates

Returns:

bool: True if point is inside ROI polygon

"""

if self.roi is None:

return True

# Convert ROI to numpy array for polygon check

pts = np.array(self.roi, np.int32)

result = cv2.pointPolygonTest(pts, point, False)

return result >= 0 # Returns >= 0 if inside or on the polygon

def generate_color(self, track_id):

"""

Generate a unique color for each track ID

Args:

track_id: Tracking ID

Returns:

color: BGR color tuple

"""

np.random.seed(int(track_id))

color = tuple(map(int, np.random.randint(0, 255, 3)))

return color

def process_video(self, video_path, output_path='output_pill_count.mp4',

show_labels=True, show_segmentation=True, use_tracking=True):

"""

Process video for CURRENT pill counting (not cumulative)

Args:

video_path: Path to input video

output_path: Path to save output video

show_labels: If True, display track IDs on pills (default: True)

show_segmentation: If True, display segmentation masks and contours (default: True)

use_tracking: If True, use ByteTrack for stable counting (default: True)

"""

cap = cv2.VideoCapture(video_path)

if not cap.isOpened():

raise ValueError(f"Cannot open video: {video_path}")

# Get video properties

fps = int(cap.get(cv2.CAP_PROP_FPS))

width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

# Read first frame for ROI selection

ret, first_frame = cap.read()

if not ret:

raise ValueError("Cannot read first frame")

# Select ROI

print("Select the region of interest...")

self.select_roi(first_frame)

print(f"ROI selected with {len(self.roi)} vertices")

# Reset video to beginning

cap.set(cv2.CAP_PROP_POS_FRAMES, 0)

# Video writer

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

out = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

frame_count = 0

# For statistics

max_pills_seen = 0

frame_counts = []

print("Processing video...")

print(f"Total frames: {total_frames}")

print(f"Confidence threshold: {self.conf_threshold}")

print(f"IOU threshold: {self.iou_threshold}")

print(f"Tracking enabled: {use_tracking}")

print("-" * 50)

while cap.isOpened():

ret, frame = cap.read()

if not ret:

break

frame_count += 1

# CRITICAL: Run YOLO with tracking or detection based on mode

if use_tracking:

# Use tracking for stable IDs

results = self.model.track(

frame,

persist=True,

conf=self.conf_threshold,

iou=self.iou_threshold,

verbose=False

)

else:

# Use detection only (no tracking)

results = self.model(

frame,

conf=self.conf_threshold,

iou=self.iou_threshold,

verbose=False

)

# CURRENT FRAME: Count pills in ROI for THIS frame only

current_pills_in_roi = set() # Track IDs currently in ROI

current_count = 0 # Current detection count

if results[0].boxes is not None and len(results[0].boxes) > 0:

boxes = results[0].boxes.xyxy.cpu().numpy()

# Get track IDs if tracking is enabled

if use_tracking and results[0].boxes.id is not None:

track_ids = results[0].boxes.id.cpu().numpy().astype(int)

else:

# Generate dummy IDs for detection-only mode

track_ids = list(range(len(boxes)))

# Get masks if available

masks = results[0].masks

# Process each detection

for i, (box, track_id) in enumerate(zip(boxes, track_ids)):

x1, y1, x2, y2 = box

center_x = int((x1 + x2) / 2)

center_y = int((y1 + y2) / 2)

# Check if pill center is in ROI

if self.point_in_roi((center_x, center_y)):

current_pills_in_roi.add(track_id)

current_count += 1

# Get color for this track ID

color = self.generate_color(track_id)

# Draw segmentation if enabled

if show_segmentation and masks is not None:

mask = masks.data[i].cpu().numpy()

mask = cv2.resize(mask, (width, height))

mask = (mask > 0.5).astype(np.uint8)

# Create colored mask

colored_mask = np.zeros_like(frame)

colored_mask[mask == 1] = color

# Blend with original frame

frame = cv2.addWeighted(frame, 1, colored_mask, 0.5, 0)

# Draw contour

contours, _ = cv2.findContours(mask, cv2.RETR_EXTERNAL,

cv2.CHAIN_APPROX_SIMPLE)

cv2.drawContours(frame, contours, -1, color, 2)

# Calculate centroid for label placement

if show_labels and len(contours) > 0:

M = cv2.moments(contours[0])

if M["m00"] != 0:

cX = int(M["m10"] / M["m00"])

cY = int(M["m01"] / M["m00"])

else:

cX, cY = center_x, center_y

# Draw track ID

label = f'{track_id}'

(label_w, label_h), _ = cv2.getTextSize(

label, cv2.FONT_HERSHEY_SIMPLEX, 0.7, 2

)

# Background rectangle

cv2.rectangle(frame,

(cX - label_w//2 - 5, cY - label_h//2 - 5),

(cX + label_w//2 + 5, cY + label_h//2 + 5),

(0, 0, 0), -1)

# Text

cv2.putText(frame, label,

(cX - label_w//2, cY + label_h//2),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

elif show_segmentation:

# Fallback: draw circle

cv2.circle(frame, (center_x, center_y), 15, color, -1)

if show_labels:

label = f'{track_id}'

(label_w, label_h), _ = cv2.getTextSize(

label, cv2.FONT_HERSHEY_SIMPLEX, 0.6, 2

)

cv2.putText(frame, label,

(center_x - label_w//2, center_y + label_h//2),

cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 255, 255), 2)

elif show_labels:

# Only labels, no segmentation

label = f'{track_id}'

(label_w, label_h), _ = cv2.getTextSize(

label, cv2.FONT_HERSHEY_SIMPLEX, 0.7, 2

)

# Background rectangle

cv2.rectangle(frame,

(center_x - label_w//2 - 5, center_y - label_h//2 - 5),

(center_x + label_w//2 + 5, center_y + label_h//2 + 5),

(0, 0, 0), -1)

# Text

cv2.putText(frame, label,

(center_x - label_w//2, center_y + label_h//2),

cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

# Draw ROI polygon with light transparent overlay

if self.roi:

pts = np.array(self.roi, np.int32)

pts = pts.reshape((-1, 1, 2))

# Create overlay with light color

overlay = frame.copy()

cv2.fillPoly(overlay, [pts], (144, 238, 144)) # Light green

# Blend overlay with frame (light transparency)

cv2.addWeighted(overlay, 0.15, frame, 0.85, 0, frame)

# Draw polygon border

cv2.polylines(frame, [pts], True, (0, 255, 0), 3)

# DISPLAY CURRENT COUNT (not cumulative)

count_text = f'Current Pills: {current_count}'

# Get text size for background

(text_width, text_height), baseline = cv2.getTextSize(

count_text, cv2.FONT_HERSHEY_SIMPLEX, 1.5, 4

)

# Draw background rectangle in upper right

cv2.rectangle(frame,

(width - text_width - 30, 10),

(width - 10, text_height + baseline + 30),

(0, 0, 0), -1)

# Draw text

cv2.putText(frame, count_text,

(width - text_width - 20, text_height + 20),

cv2.FONT_HERSHEY_SIMPLEX, 1.5, (0, 255, 0), 4)

# Optional: Display frame number

frame_info = f'Frame: {frame_count}/{total_frames}'

cv2.putText(frame, frame_info, (20, 40),

cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 255, 255), 2)

# Update statistics

if current_count > max_pills_seen:

max_pills_seen = current_count

frame_counts.append(current_count)

# Write frame

out.write(frame)

# Display progress

if frame_count % 30 == 0:

print(f"Frame {frame_count}/{total_frames} | Current pills: {current_count} | Max seen: {max_pills_seen}")

# Cleanup

cap.release()

out.release()

cv2.destroyAllWindows()

# Print statistics

print("\n" + "=" * 50)

print("PROCESSING COMPLETE")

print("=" * 50)

print(f"Total frames processed: {frame_count}")

print(f"Maximum pills seen simultaneously: {max_pills_seen}")

print(f"Average pills per frame: {np.mean(frame_counts):.2f}")

print(f"Minimum pills seen: {np.min(frame_counts)}")

print(f"Output saved to: {output_path}")

print("=" * 50)

return max_pills_seen

def count_pills_in_video(video_path, model_path='best.pt', output_path='output_pill_count.mp4',

show_labels=True, show_segmentation=True, use_tracking=True,

conf_threshold=0.6, iou_threshold=0.5):

"""

Main function to count CURRENT pills in a video (not cumulative)

Args:

video_path: Path to input video

model_path: Path to YOLO model (default: 'best.pt')

output_path: Path to save output video (default: 'output_pill_count.mp4')

show_labels: If True, display track IDs on pills (default: True)

show_segmentation: If True, display segmentation masks and contours (default: True)

use_tracking: If True, use ByteTrack for stable counting (default: True)

conf_threshold: Confidence threshold for detections (default: 0.6)

iou_threshold: IOU threshold for NMS (default: 0.5)

Returns:

max_pills: Maximum number of pills seen simultaneously in the video

"""

counter = RealTimePillCounter(model_path, conf_threshold, iou_threshold)

max_pills = counter.process_video(video_path, output_path, show_labels,

show_segmentation, use_tracking)

return max_pills

Recommended Settings:

show_labels=True: Display track IDs for verificationshow_segmentation=False: Cleaner visualizationuse_tracking=True: Stable counting across framesconf_threshold=0.6: Balanced precision/recall

if __name__ == "__main__":

# Example: Process a video with current count

video_path = r"video_dataset\pill sample 2.mp4"

model_path = r"yolo12_segmentation\train_run\weights\best.pt"

output_path = "counted_pills_full.mp4"

# Full visualization with tracking (recommended)

max_pills = count_pills_in_video(

video_path,

model_path,

output_path,

show_labels=True,

show_segmentation=False,

use_tracking=True,

conf_threshold=0.6, # Adjust based on your model

iou_threshold=0.5

)

print(f"\nMaximum pills seen simultaneously: {max_pills}")

Conclusion

As shown in the demo video, the final system is robust and accurate. It can process video in real-time, providing a live count of pills within the designated area. The maximum count provides a reliable final tally for the video segment.

This guide provides a complete pipeline. You can further enhance it by:

- Training on more data with various pill shapes, colors, and backgrounds to improve generalization.

- Integrating the counter class directly into a live camera feed for a truly real-time application.

- Adding a database to log counting sessions with timestamps for quality control and audit trails.

By combining Labellerr's efficient data annotation platform with the power of YOLO instance segmentation, you can build a production-ready pill counting system that saves time, reduces errors, and streamlines pharmaceutical operations.

FAQ

Q1. Why is fine-tuning YOLO important for pill counting tasks?

Fine-tuning helps YOLO adapt to specific pill shapes, colors, and lighting conditions in pharmaceutical environments, improving detection accuracy.

Q2. What type of dataset is required to fine-tune YOLO for pill counting?

You need a labeled dataset containing images of pills under different orientations, lighting, and background conditions, annotated in YOLO format.

Q3. Can YOLO detect overlapping or partially visible pills?

Yes, with proper training data and segmentation models (like YOLOv12-seg), YOLO can effectively detect and count overlapping or partially visible pills.

Resources

Simplify Your Data Annotation Workflow With Proven Strategies

.png)