5 Best Deep Learning Models: When Should You Use Them?

Deep learning has become very popular in scientific computing, and businesses that tackle complex problems frequently use its techniques. All deep learning models use some kind of neural network to carry out specific tasks. These models can reach state-of-the-art accuracy, sometimes even exceeding human performance. This post covers the most common deep learning models in detail.

Let’s first understand deep learning and how it works.

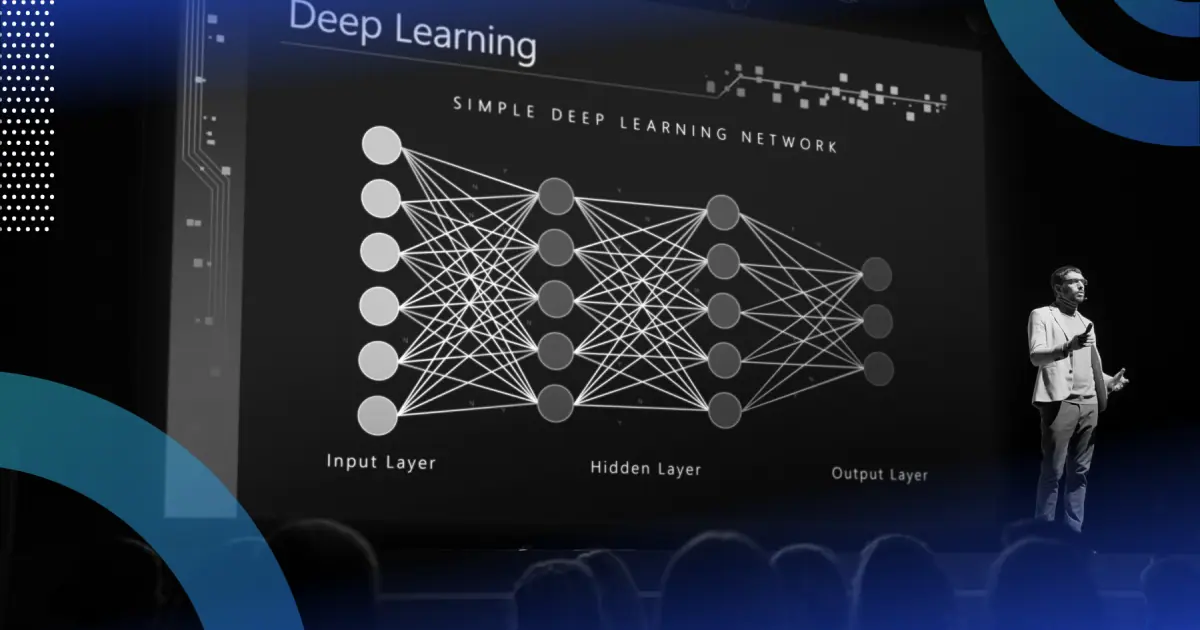

What is deep learning?

Deep learning is a machine learning technique that teaches computers to learn by example, just as people do. It is a key technology behind driverless cars, enabling them to recognise stop signs and to tell a pedestrian from a lamppost.

Recently, deep learning has attracted a lot of interest, and for legitimate reasons. It is producing outcomes that were previously unattainable.

Deep learning is the process through which a computer model learns to carry out classification tasks directly from images, text, or sound. Models are trained using a large set of labelled data and neural network architectures with many layers.

How does deep learning operate?

Deep learning models learn their own representations, and they rely on artificial neural networks (ANNs) that loosely mirror how the brain processes information. During training, the algorithms work out from the input data itself how to extract features, classify objects, and identify useful patterns. This happens at multiple levels, with each layer of the network building on the one before, much like training a machine to learn for itself.

Deep learning models use several algorithms. No single network is considered perfect, but some algorithms are better suited to particular tasks. A solid understanding of the major algorithms helps you choose the right one.

Most common deep learning models

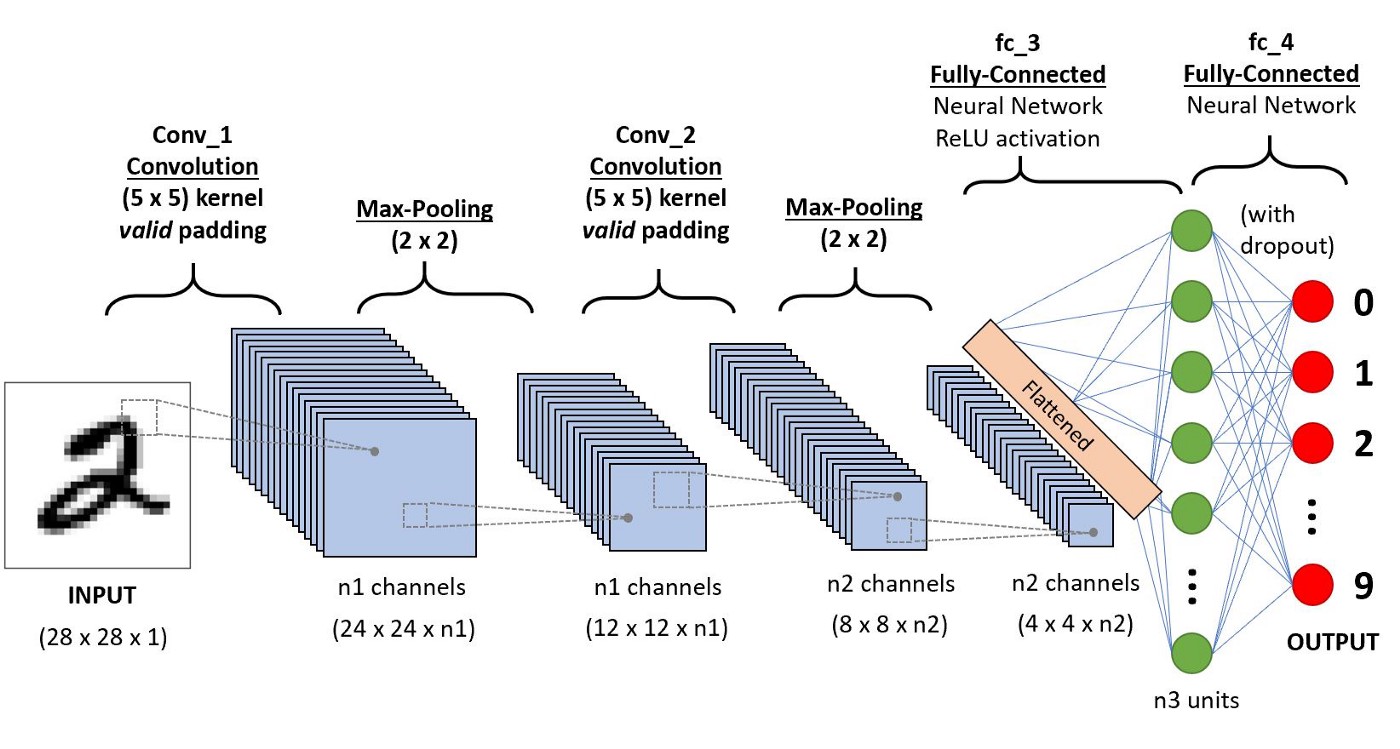

1. Convolutional neural networks (CNNs)

CNNs, also known as ConvNets, consist of multiple layers and are mainly used for image processing and object detection. Yann LeCun developed an early CNN, named LeNet, in the late 1980s. It was used to recognise characters such as ZIP codes and digits.

Use of CNNs

CNNs are used to identify satellite images, process medical images, forecast time series, and detect anomalies.

How Do CNNs Function?

CNNs have multiple layers that process data and extract features from it:

Convolution Layer

A CNN's convolution layer applies several filters to the input to perform the convolution operation.

Rectified Linear Unit (ReLU) Layer

CNNs have a ReLU layer that performs an element-wise operation on the convolution output. The result is a rectified feature map.

Pooling Layer

- The rectified feature map is then passed to the pooling layer. Pooling is a downsampling operation that reduces the dimensions of the feature map.

- The two-dimensional arrays of the pooled feature map are then flattened into a single, long, continuous linear vector.

Fully Connected Layer

The flattened vector from the pooling layer is fed as input to a fully connected layer, which classifies and labels the images.
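The layer sequence described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a production implementation: the image size, the number of filters, the random weights, and helper names such as `cnn_forward` are all assumptions made for the example, and the network is not trained.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide one filter over the image (valid cross-correlation, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Element-wise rectification: negative values become zero."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Downsample by taking the maximum of each size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[:h * size, :w * size]
    return x.reshape(h, size, w, size).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def cnn_forward(image, kernels, fc_weights, fc_bias):
    # Convolution -> ReLU -> pooling, once per filter
    maps = [max_pool(relu(conv2d(image, k))) for k in kernels]
    # Flatten all pooled feature maps into one long vector
    flat = np.concatenate([m.ravel() for m in maps])
    # Fully connected layer produces one score per class
    return softmax(fc_weights @ flat + fc_bias)

rng = np.random.default_rng(0)
image = rng.random((6, 6))                # hypothetical 6x6 grayscale image
kernels = rng.standard_normal((2, 3, 3))  # two 3x3 filters (untrained)
# 6x6 image -> 4x4 after convolution -> 2x2 after pooling; 2 filters give 8 features
fc_weights = rng.standard_normal((3, 8))  # three hypothetical classes
fc_bias = np.zeros(3)

probs = cnn_forward(image, kernels, fc_weights, fc_bias)
print(probs)  # class probabilities that sum to 1
```

In practice a library such as PyTorch or Keras would supply these layers and learn the filter values during training; the loops here only make each step visible.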

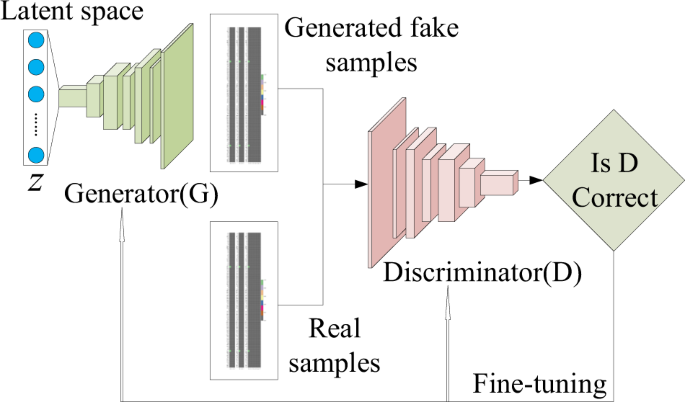

2. Generative adversarial networks (GANs)

GANs are generative deep learning algorithms that produce new data instances resembling the training data. A GAN has two parts: a generator, which learns to produce fake data, and a discriminator, which learns from that fake data to tell it apart from real data.

The use of GANs has grown over time. They can be used to enhance astronomical images and to simulate gravitational lensing for dark-matter research. Video game developers use GANs to upscale low-resolution 2D textures from classic video games to 4K or higher resolutions.

Use of GANs

GANs help generate realistic images and cartoon characters, create photographs of human faces, and render 3D objects.

How do GANs function?

- The discriminator learns to distinguish between the generator's fake data and real sample data.

- During early training, the generator produces obviously fake data, and the discriminator quickly learns to tell that it is fake.

- The GAN sends the results to the generator and the discriminator to update the model.
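To make the feedback step concrete, here is a small sketch of the two loss values a GAN typically computes from the discriminator's outputs. It assumes the standard binary cross-entropy formulation, and the probabilities below are made-up numbers chosen for illustration, not results from a trained model.

```python
import numpy as np

def bce(pred, target):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, 1e-12, 1 - 1e-12)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored as 1 and fake samples as 0
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # The generator wants its fake samples to be scored as real (1)
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs: probability that each sample is real
d_real = np.array([0.9, 0.8])
d_fake_early = np.array([0.1, 0.2])  # early training: fakes are easy to spot
d_fake_later = np.array([0.5, 0.5])  # later: the discriminator is unsure

print(generator_loss(d_fake_early), generator_loss(d_fake_later))
print(discriminator_loss(d_real, d_fake_early), discriminator_loss(d_real, d_fake_later))
```

As the generator improves, its loss falls while the discriminator's loss rises. Training alternates gradient updates on these two losses.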

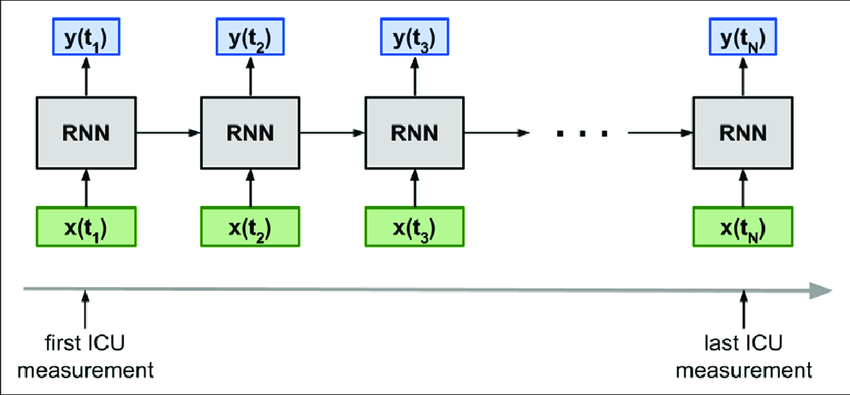

3. Recurrent neural networks (RNNs)

RNNs have connections that form directed cycles, which allow the output from a previous step to be fed as input to the current step.

In an LSTM (long short-term memory) network, a common RNN variant, the output becomes an input to the current step and, thanks to the cell's internal memory, the network can remember prior inputs.

Use of RNNs

Natural language processing, time series analysis, handwriting recognition, and computational linguistics are all common applications for RNNs.

How do RNNs function?

- The output at time t-1 feeds into the input at time t.

- Similarly, the output at time t feeds into the input at time t+1.

- Any length of input can be handled by RNNs.

- The computation takes into consideration historical data, and the size of the model does not grow as the input size does.
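The recurrence in the points above can be sketched as a plain (non-LSTM) RNN cell in NumPy. The weight names `W_x`, `W_h`, and `b`, the layer sizes, and the random untrained weights are assumptions made for the example.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a simple RNN over a sequence and return the hidden state at each step."""
    h = np.zeros(W_h.shape[0])  # initial hidden state
    states = []
    for x_t in inputs:
        # The new state depends on the current input and the previous state
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.array(states)

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W_x = rng.standard_normal((hidden_size, input_size)) * 0.5
W_h = rng.standard_normal((hidden_size, hidden_size)) * 0.5
b = np.zeros(hidden_size)

# The same weights process sequences of different lengths
short_seq = rng.standard_normal((2, input_size))
long_seq = rng.standard_normal((10, input_size))
short_states = rnn_forward(short_seq, W_x, W_h, b)
long_states = rnn_forward(long_seq, W_x, W_h, b)
print(short_states.shape, long_states.shape)
```

Because `W_x`, `W_h`, and `b` are reused at every time step, the number of parameters stays fixed no matter how long the input sequence is.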

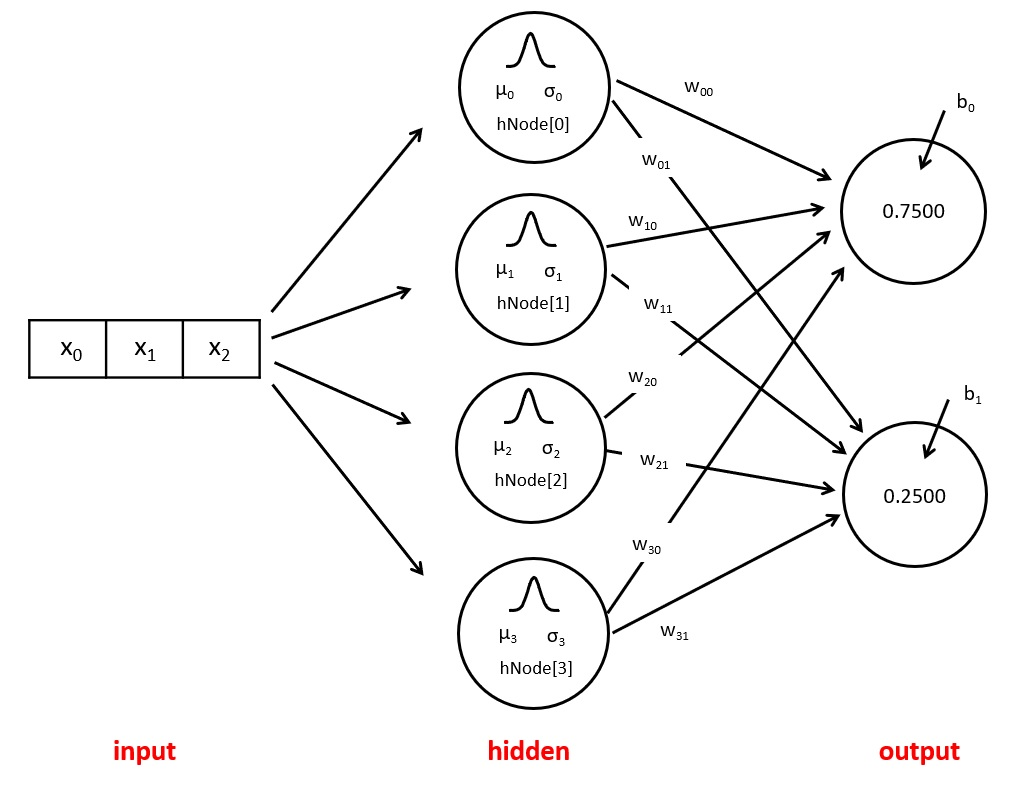

4. Radial basis function networks (RBFNs)

RBFNs are a special type of feedforward neural network that use radial basis functions as activation functions.

Use of RBFNs

They have an input layer, a hidden layer, and an output layer, and are typically used for classification, regression, and time-series prediction.

How Do RBFNs Function?

- RBFNs classify data by measuring how similar an input is to examples from the training set.

- An input vector is fed to the input layer, which is followed by a layer of RBF neurons.

- The output layer has one node per category or class of data and computes a weighted sum of its inputs.

- The neurons in the hidden layer use Gaussian transfer functions, whose outputs decrease as the distance from the neuron's centre increases.

- The network's output is a linear combination of the radial basis functions of the input and the neurons' parameters.
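The points above translate into a short forward pass. This is a sketch under stated assumptions: the centres, the width parameter `gamma`, and the output weights are hand-picked for illustration, whereas a real RBFN would fit them to training data.

```python
import numpy as np

def rbf_hidden(x, centers, gamma):
    """Gaussian activation of each hidden neuron: 1 at its centre, decaying with distance."""
    sq_dist = np.sum((centers - x) ** 2, axis=1)
    return np.exp(-gamma * sq_dist)

def rbfn_forward(x, centers, gamma, weights, bias):
    # Output layer: weighted sum of the hidden activations, one score per class
    return weights @ rbf_hidden(x, centers, gamma) + bias

# Hypothetical setup: two prototype points (centres), one per class
centers = np.array([[0.0, 0.0], [1.0, 1.0]])
weights = np.array([[1.0, 0.0],   # class 0 listens to the first neuron
                    [0.0, 1.0]])  # class 1 listens to the second neuron
bias = np.zeros(2)

x = np.array([0.1, 0.0])  # input close to the first centre
scores = rbfn_forward(x, centers, 2.0, weights, bias)
predicted_class = int(np.argmax(scores))
print(scores, predicted_class)
```

The input lies much closer to the first centre, so the first hidden neuron responds strongly and the network assigns the input to class 0.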

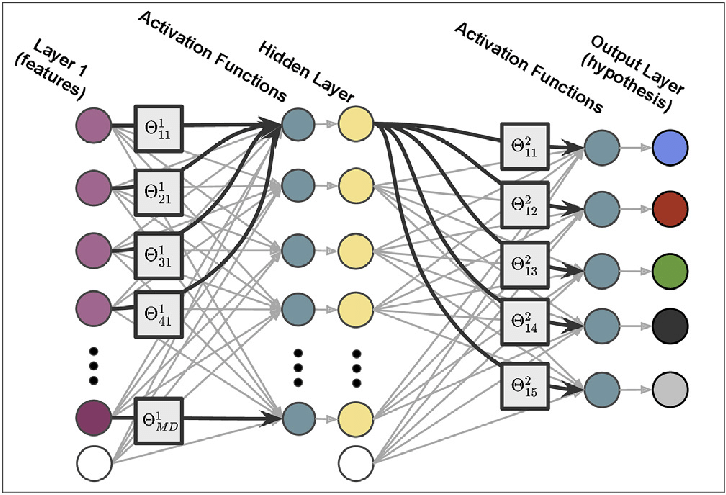

5. Multilayer perceptrons (MLPs)

MLPs are an excellent place to start learning about deep learning.

MLPs are feedforward neural networks that contain multiple layers of perceptrons with activation functions. An MLP is made up of fully connected input, hidden, and output layers.

Use of MLPs

They have the same number of input and output layers but may have several hidden layers. They can be used to build speech recognition, image recognition, and machine translation software.

How Do MLPs Function?

- MLPs feed the data to the network's input layer. The layers of neurons are connected in a graph so that the signal travels in one direction only.

- MLPs compute each layer's input using the weights between the input layer and the hidden layers.

- MLPs use activation functions to determine which nodes fire. ReLU, sigmoid, and tanh are examples of activation functions.

- A training data set is used by MLPs to train the model to recognise correlations and discover dependencies between independent and target variables.
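A forward pass through a small MLP can be sketched as follows. The layer sizes and the random weights are assumptions chosen for illustration; training, which would adjust the weights from a data set, is left out.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mlp_forward(x, layers):
    """Pass the signal through each fully connected layer in one direction."""
    a = x
    for W, b, activation in layers:
        # Weighted sum of the previous layer's outputs, then the activation
        a = activation(W @ a + b)
    return a

rng = np.random.default_rng(0)
# Hypothetical network: 3 inputs -> 5 hidden (ReLU) -> 4 hidden (tanh) -> 2 outputs (sigmoid)
layers = [
    (rng.standard_normal((5, 3)), np.zeros(5), relu),
    (rng.standard_normal((4, 5)), np.zeros(4), np.tanh),
    (rng.standard_normal((2, 4)), np.zeros(2), sigmoid),
]

output = mlp_forward(np.array([0.5, -1.0, 2.0]), layers)
print(output)  # two values between 0 and 1
```

Each tuple holds one layer's weights, bias, and activation function, so adding a hidden layer is just a matter of adding another tuple with matching dimensions.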

To keep yourself updated with such amazing information, keep visiting us!
