BERT Explained: State-of-the-art language model for NLP

Language is the basis of communication, and proper understanding and interpretation of language is crucial in natural language processing (NLP). Let's introduce BERT, a cutting-edge language model that has transformed NLP.

BERT set a new benchmark for language understanding thanks to its transformer-based architecture and its ability to interpret each word in context.

In this blog, we'll look in detail at what makes BERT unique and how it has changed NLP as we know it. So strap in, and let's dive into the world of BERT!

What is BERT?

BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained language representation model for natural language processing (NLP).

It is based on the transformer architecture and was created by researchers at Google AI Language. BERT uses the surrounding text as context to help computers resolve the meaning of ambiguous words.

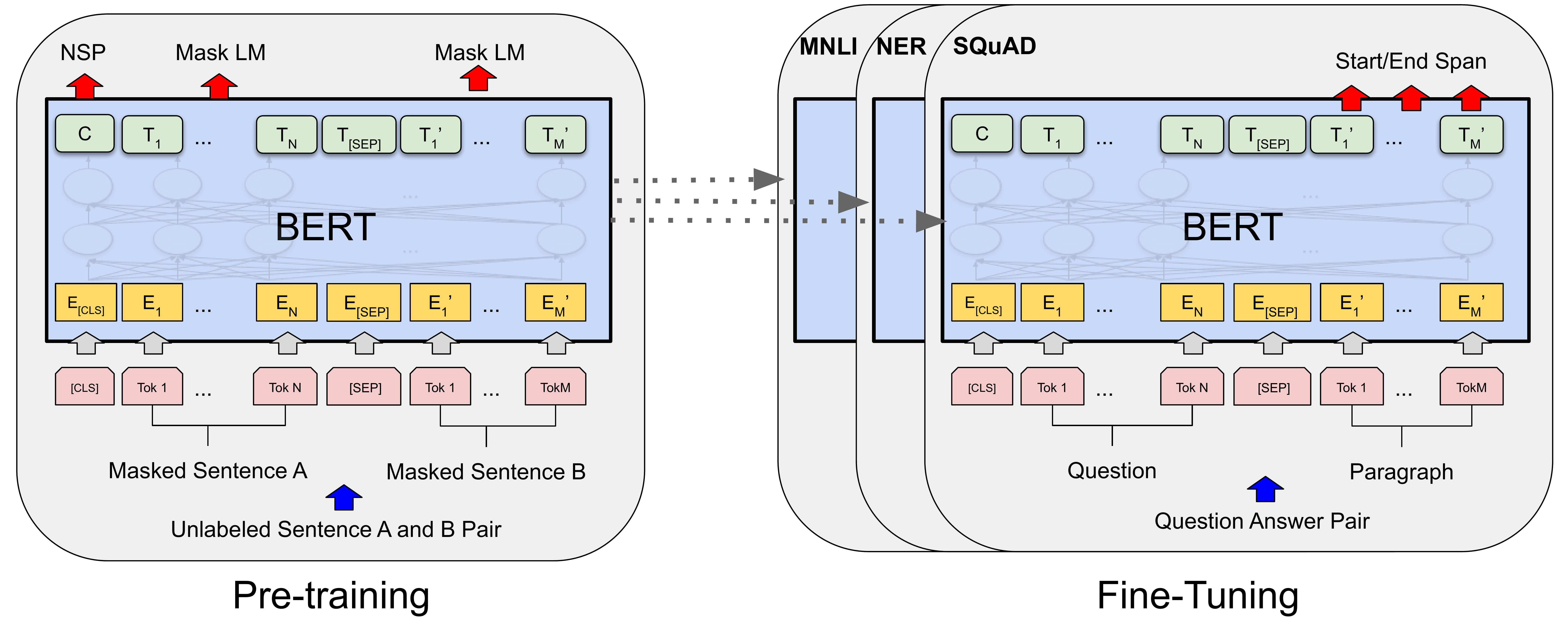

It has achieved state-of-the-art results on various NLP tasks, such as sentiment analysis and question answering. BERT is first pre-trained on large text corpora such as English Wikipedia and BooksCorpus, and can then be fine-tuned on smaller datasets to improve its performance on specific NLP tasks.

How does BERT stand out from other models?

When it was released, BERT outperformed earlier models on a variety of NLP tasks, including sentiment analysis, language inference, and question answering.

BERT is deeply bidirectional and pre-trained on unlabeled text. It conditions on the context to both the left and the right of each word, which sets it apart from earlier models that read text in a single direction.

BERT's design is conceptually simple yet empirically powerful: a single pre-trained encoder uses both the left and the right context of every word, and the same model is reused across tasks.
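To make the bidirectional idea concrete, here is a minimal sketch of how training examples for masked language modeling can be prepared. BERT's pre-training hides a fraction of the input tokens (15% in the paper, of which 80% become `[MASK]`, 10% a random token, and 10% stay unchanged) and asks the model to recover them from the words on both sides. The function name `mask_tokens`, the toy sentence, and the tiny vocabulary are assumptions for illustration only; a real pipeline works on WordPiece token IDs.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] holds the original token where a prediction is required,
    and None everywhere else.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                corrupted.append(tok)                # 10%: keep the original token
        else:
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels

# Toy sentence; mask_prob is raised so that a short example shows some masking.
tokens = "the river bank was flooded after the heavy rain".split()
corrupted, labels = mask_tokens(tokens, vocab=sorted(set(tokens)), mask_prob=0.3, seed=1)
print(corrupted)
print(labels)
```

Because a masked position can sit anywhere in the sentence, the model has to use words on both sides of it to make its prediction.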

BERT set new state-of-the-art results on eleven natural language processing tasks. As reported in the published paper, these include pushing the GLUE score to 80.5% (a 7.7-point absolute improvement), MultiNLI accuracy to 86.7% (a 4.6-point absolute improvement), and the SQuAD v1.1 question answering Test F1 to 93.2 (a 1.5-point absolute improvement), which is about 2.0 points above human performance on that benchmark.
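An "absolute improvement" is a difference in percentage points, which can look small next to a score that is already high. The short sketch below takes the SQuAD v1.1 figures quoted above (Test F1 of 93.2, a 1.5 absolute improvement) and derives the implied previous best, the relative gain, and the share of remaining error that was removed. The helper name `improvement_summary` is ours, not something from the BERT paper.

```python
def improvement_summary(new_score, absolute_gain):
    """Break an absolute gain (in percentage points) into related quantities."""
    previous = round(new_score - absolute_gain, 1)
    relative_gain = absolute_gain / previous              # gain relative to the old score
    error_reduction = absolute_gain / (100.0 - previous)  # share of remaining error removed
    return previous, relative_gain, error_reduction

prev, rel, err = improvement_summary(new_score=93.2, absolute_gain=1.5)
print(f"previous best F1: {prev}")
print(f"relative gain: {rel:.1%}")
print(f"relative error reduction: {err:.1%}")
```

The implied previous best is 91.7, so a 1.5-point gain removes a noticeably larger fraction of the remaining error than the raw number suggests.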

BERT continues to be a very successful and commonly used NLP model, despite the fact that other models, like GPT-3, have also demonstrated remarkable performance on a variety of NLP tasks.

How does BERT's architecture differ from other NLP models?

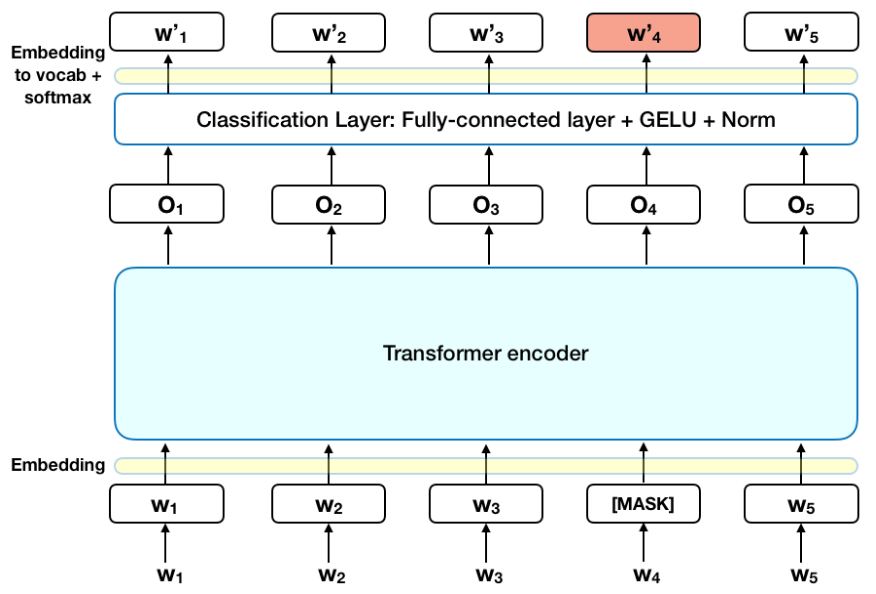

Unlike earlier recurrent NLP models, BERT is built on the transformer architecture. More precisely, BERT is a stack of transformer encoder layers, which lets the model take into account the context on both the left and right sides of each word.

This differs from context-free models such as word2vec or GloVe, which produce a single embedding for each word in the vocabulary, regardless of the sentence in which the word appears.
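The difference is easy to see with a toy example. In the sketch below, `STATIC` is a made-up two-dimensional embedding table, and `contextual_embed` is a deliberately crude stand-in for a contextual encoder (it simply mixes each word vector with the sentence average). It is not how BERT computes representations; it only illustrates that a contextual model can assign "bank" different vectors in different sentences, while a lookup table cannot.

```python
# Toy static embedding table: one fixed vector per vocabulary word (made-up values).
STATIC = {
    "river": [1.0, 0.0],
    "bank":  [0.5, 0.5],
    "money": [0.0, 1.0],
    "the":   [0.1, 0.1],
}

def static_embed(tokens):
    """Context-free lookup: a word always maps to the same vector."""
    return [STATIC[t] for t in tokens]

def contextual_embed(tokens):
    """Crude stand-in for a contextual encoder: blend each word vector with
    the mean vector of the sentence, so the result depends on the neighbors."""
    vecs = static_embed(tokens)
    dim = len(vecs[0])
    mean = [sum(v[d] for v in vecs) / len(vecs) for d in range(dim)]
    return [[0.5 * v[d] + 0.5 * mean[d] for d in range(dim)] for v in vecs]

s1 = ["the", "river", "bank"]
s2 = ["the", "money", "bank"]

# Static lookup: "bank" is identical in both sentences.
print(static_embed(s1)[2] == static_embed(s2)[2])          # True
# Context-dependent: "bank" now differs between the two sentences.
print(contextual_embed(s1)[2] == contextual_embed(s2)[2])  # False
```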

Because BERT is deeply bidirectional and pre-trained on unlabeled text, its representation of each word reflects both the left and the right context. A pre-trained BERT model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.
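As a rough illustration of what "one additional output layer" can mean for a classification task, the sketch below applies a single linear layer and a softmax to the encoder's vector for the special `[CLS]` token. The four-dimensional vector, the weights, and the function name `classify` are hypothetical values chosen for readability; in practice the hidden size is in the hundreds and the weights are learned during fine-tuning.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(cls_vector, weights, bias):
    """Apply one linear layer plus softmax to the [CLS] vector.

    weights has shape (num_labels, hidden_size); bias has shape (num_labels,).
    """
    logits = [
        sum(w * x for w, x in zip(row, cls_vector)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)

# Hypothetical 4-dimensional encoder output and a 2-label head.
cls_vector = [0.2, -0.1, 0.7, 0.4]
weights = [[0.5, 0.0, 1.0, 0.0],    # label 0
           [-0.5, 0.3, -1.0, 0.2]]  # label 1
bias = [0.0, 0.1]

probs = classify(cls_vector, weights, bias)
print(probs)
```

The encoder itself is unchanged; only this small head is new, which is why fine-tuning needs comparatively little labeled data.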

You have probably come across the importance of the transformer architecture in large language models (LLMs). Here is a simple explanation:

The transformer architecture matters for large language models because it handles long-range dependencies in natural language processing (NLP) tasks effectively.

The transformer is a neural network design that processes its input with self-attention, which lets the model weight the relevant parts of the input sequence more heavily than the irrelevant ones.
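The core computation can be sketched in a few lines. The example below implements scaled dot-product attention, softmax(QKᵀ / √d_k)·V, in plain Python for readability. It is a simplified sketch: the three toy vectors are invented, and the same vectors are used directly as queries, keys, and values, whereas a real transformer derives them from learned projections and runs several attention heads in parallel.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors, used as Q, K and V alike for simplicity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, and each row sums to 1.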

As a result, transformer models are good at capturing the context and the relationships between words in natural language text, which helps on many NLP tasks.

The transformer underlies numerous language models, including BERT, GPT-2, and GPT-3, which have achieved state-of-the-art results on a variety of NLP tasks.

What are some other state-of-the-art language models for NLP?

There are several state-of-the-art language models for NLP, and some of the most popular ones are:

ELMo

ELMo (Embeddings from Language Models) is a deep contextualized word representation model based on bidirectional LSTMs that captures the context of words in a sentence. Using ELMo representations has been shown to improve performance on numerous NLP tasks, such as sentiment analysis, named entity recognition, and question answering.

GPT

GPT (Generative Pre-trained Transformer) is a transformer-based language model that is pre-trained on a large corpus of text. GPT models have been shown to produce strong results on a variety of language tasks, such as text completion, summarization, and question answering.
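One practical difference between GPT-style models and BERT is which positions each token is allowed to attend to. GPT-style models are trained left to right, so a token sees only itself and earlier tokens, whereas BERT's encoder lets every token see the whole sequence. The sketch below builds both attention masks for a toy sentence; the function names are ours and the masks are plain nested lists rather than tensors.

```python
def bidirectional_mask(n):
    """Encoder-style mask: every position may attend to every position."""
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    """Decoder-style mask: position i may attend only to positions <= i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

tokens = ["the", "bank", "was", "closed"]
for name, mask in [("bidirectional", bidirectional_mask(len(tokens))),
                   ("causal", causal_mask(len(tokens)))]:
    print(name)
    for tok, row in zip(tokens, mask):
        print(f"  {tok:>6}: {row}")
```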

XLNet

XLNet is a generalized autoregressive pre-training method. It builds on the Transformer-XL architecture, is trained with a permutation-based language modeling objective, and has been pre-trained on a large corpus of text. XLNet has been shown to produce state-of-the-art results on a variety of NLP tasks, including text classification, question answering, and natural language inference.

These models are pre-trained on vast quantities of text and then adapted to specific NLP tasks. They achieve their results with techniques such as attention, dropout, and multi-task learning.

The most recent advances in NLP language models are driven both by enormous increases in processing power and by clever techniques for making models lighter while preserving their performance.

Applications of BERT

BERT's NLP applications include named entity recognition (including biomedical entity recognition), sentiment analysis, next-sentence prediction, paraphrase detection, question answering, reading comprehension, and others.
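Question answering is a good example of how such an application is framed. For extractive QA in the style of SQuAD, a fine-tuned BERT model scores every passage token as a possible answer start and as a possible answer end, and the answer is the span with the highest combined score. The sketch below shows only that final selection step. The scores are invented by hand, the passage is split on whitespace rather than with a real tokenizer, and `best_span` is a hypothetical helper name.

```python
def best_span(start_scores, end_scores, max_len=10):
    """Pick (start, end) maximizing start_scores[start] + end_scores[end],
    subject to start <= end and a maximum span length."""
    best, best_score = (0, 0), float("-inf")
    for i, s in enumerate(start_scores):
        for j in range(i, min(i + max_len, len(end_scores))):
            score = s + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best

passage = "BERT was created by researchers at Google AI Language".split()
# Hypothetical per-token scores, standing in for the output of a fine-tuned model.
start_scores = [0.1, 0.0, 0.0, 0.0, 0.2, 0.1, 2.5, 0.3, 0.1]
end_scores   = [0.0, 0.0, 0.0, 0.0, 0.1, 0.0, 0.4, 0.6, 2.8]

i, j = best_span(start_scores, end_scores)
print(" ".join(passage[i:j + 1]))  # Google AI Language
```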

BERT excels at working out the meaning of text and producing accurate predictions because it takes into account the context on both the left and right sides of each word.

BERT has been used to improve decision-making, automate workflows, and enhance customer service in a variety of industries, including healthcare, banking, and e-commerce. Multilingual BERT checkpoints covering more than 100 languages also make it a useful tool for multilingual NLP applications.

On benchmarks such as GLUE, MultiNLI, and the SQuAD v1.1 question answering test set, BERT reported state-of-the-art scores at the time of its release.

Conclusion

In conclusion, BERT's language understanding capabilities have significantly changed the field of NLP. Its ability to capture linguistic nuance and context raised the bar for language models. With its wide adoption in industry and academia, BERT is well-positioned to keep advancing the field and to pave the way for more capable language models in the future.

For more such interesting content, keep exploring Labellerr!

FAQs

1. What is BERT?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a language model for natural language processing (NLP) created by researchers at Google AI Language.

2. How does BERT work?

BERT is a transformer-based language model that is trained bidirectionally. It is first pre-trained on a large corpus of text, using masked language modeling and next-sentence prediction, and is then fine-tuned for specific NLP tasks. Under the hood it relies on techniques such as self-attention and dropout.

3. What NLP activities can be performed using BERT?

BERT has demonstrated state-of-the-art results on a wide range of NLP tasks, such as question answering and natural language inference. It can also be applied to text classification, named entity recognition, and sentiment analysis.

4. Does BERT provide accurate results?

BERT achieved state-of-the-art results on several NLP tasks when it was released. Fine-tuning the model on a specific task can raise its accuracy on that task further.

5. Is BERT available in a variety of languages?

Yes. Multilingual BERT checkpoints pre-trained on more than 100 languages are available, so BERT can be used for projects that are not in English.
