Zero-Shot Performance of CLIP on an Animal Breed Dataset: Here Are the Findings

The CLIP (Contrastive Language–Image Pre-training) model represents a groundbreaking convergence of natural language understanding and computer vision, allowing it to excel in various tasks involving images and text.

Its foundation is rooted in zero-shot transfer learning, which empowers the model to make accurate predictions on entirely new classes or concepts it has never encountered in its training data.

This innovative approach builds upon earlier explorations in computer vision, where the utilization of natural language as a flexible prediction space facilitated the generalization and transfer of knowledge.

CLIP derives inspiration from pioneering research that harnessed natural language supervision to achieve zero-shot transfer to various computer vision classification datasets. One pivotal element of CLIP's success lies in the scaling of its pre-training task, which taps into the vast reservoir of text-image pairs found on the internet.

Figure: Zero-shot Image Classification

By training the model to predict which textual descriptions are associated with given images, CLIP effectively learns to recognize a broad spectrum of visual concepts and associate them with their corresponding textual representations.
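As a rough illustration of this matching objective, the sketch below computes a symmetric cross-entropy loss over a small image-text similarity matrix in plain Python. The similarity values and the function names (`cross_entropy_row`, `contrastive_loss`) are made up for illustration; actual CLIP training applies the same idea to large batches of learned embeddings.

```python
import math

def cross_entropy_row(logits, target_index):
    """Cross-entropy of one row of logits against the index of the true match."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target_index]

def contrastive_loss(similarity):
    """Symmetric loss for a square matrix where similarity[i][j] scores image i against text j.

    The matching text for image i is assumed to be text i (the diagonal).
    """
    n = len(similarity)
    # Image-to-text direction: each row should select its own column.
    image_to_text = sum(cross_entropy_row(similarity[i], i) for i in range(n)) / n
    # Text-to-image direction: each column should select its own row.
    columns = [[similarity[i][j] for i in range(n)] for j in range(n)]
    text_to_image = sum(cross_entropy_row(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2

# Hypothetical scaled similarities: matched pairs score highest on the diagonal.
well_aligned = [[9.0, 1.0, 0.5], [0.8, 8.5, 1.2], [0.3, 1.1, 9.2]]
# Same scale, but the highest scores sit off the diagonal.
misaligned = [[1.0, 9.0, 0.5], [8.5, 0.8, 1.2], [0.3, 9.2, 1.1]]

loss_good = contrastive_loss(well_aligned)
loss_bad = contrastive_loss(misaligned)
print(f"aligned loss: {loss_good:.4f}, misaligned loss: {loss_bad:.4f}")
```

The loss is small when each image scores highest against its own caption and grows when the pairs are mixed up, which is the signal that pushes matching images and texts together during pre-training.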

This proficiency in zero-shot learning enables CLIP to tackle an extensive range of tasks, from image classification to object detection. Furthermore, CLIP adopts modern deep learning architectures, particularly Transformers, to model the intricate relationships between images and text.

As part of a broader movement revisiting the learning of visual representations through natural language supervision, CLIP stands out as a remarkable achievement, effectively bridging the chasm between natural language and computer vision.

Its ability to associate images with textual descriptions during pre-training empowers it to excel in tasks requiring generalization to unseen classes or concepts, positioning it as a pivotal advancement in the realm of multimodal AI.

In this work, we use an animal breed classification dataset, the Oxford-IIIT Pet dataset, which contains images of cats and dogs across multiple breeds.

About the Dataset

Before analyzing the CLIP model, let's take a look at the dataset used.

Animal Breed Classification

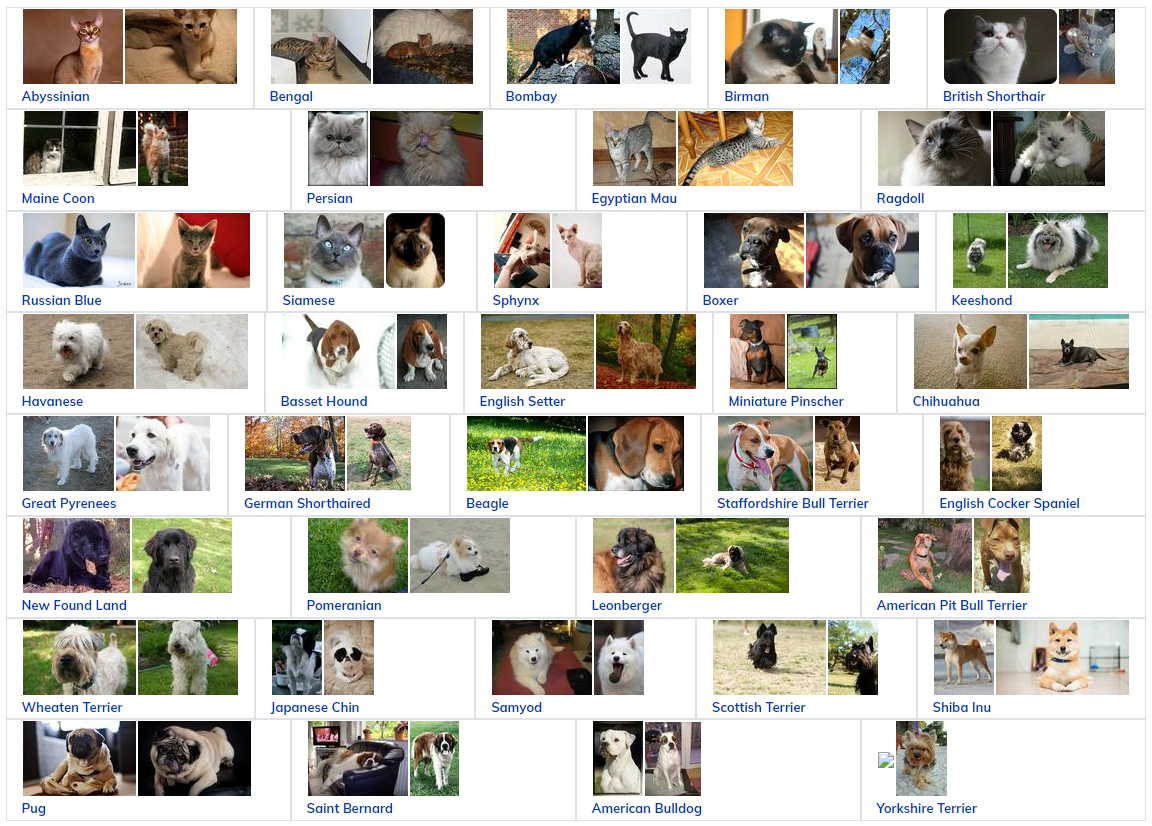

The dataset here consists of 37 pet categories, containing approximately 200 images for each type. These images exhibit significant diversity in terms of scale, pose, and lighting conditions.

Additionally, each image in the dataset comes with detailed annotations, including the pet's breed, the region of interest for the pet's head, and a pixel-level trimap segmentation.

Figure: Animal Breed Classification Dataset

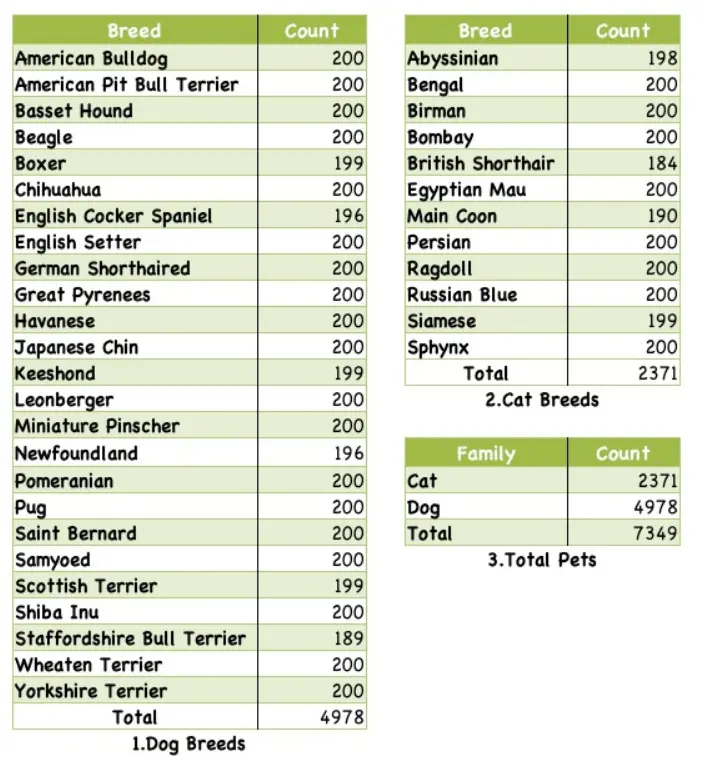

For more detail about the dataset, see the image below.

Figure: Dataset Statistics

Hands-on With Code

Before trying out the code below, which analyzes the zero-shot capability of the CLIP model, you should be familiar with a few prerequisites.

Pre-Requisites

To proceed further, one should be familiar with:

- Python: All the code below is written in Python.

- PyTorch: Built on the Torch library, PyTorch is a machine learning framework used for tasks such as computer vision and natural language processing.

- Google Colaboratory: Colab is a cost-free Jupyter Notebook environment that operates exclusively within the cloud.

Tutorial

We begin by installing the required packages.

# Install PyTorch and torchvision (Colab already ships with both; a recent
# torchvision is needed for the OxfordIIITPet dataset class)
!pip install torch torchvision

# Install additional packages required by CLIP
!pip install ftfy regex tqdm

# Install the CLIP library from the OpenAI GitHub repository
!pip install git+https://github.com/openai/CLIP.git

Next, we load the required libraries.

import os
import clip
import torch
from torchvision.datasets import OxfordIIITPet
import matplotlib.pyplot as plt

Next, we load the model onto the GPU if one is available, or onto the CPU otherwise.

# Load the model
device = "cuda" if torch.cuda.is_available() else "cpu"
model, preprocess = clip.load('ViT-B/32', device)

Next, we download the required dataset.

# Download the dataset
data = OxfordIIITPet(root=os.path.expanduser("~/.cache"), download=True)

Next, we prepare the input data.

# Prepare the inputs, evaluating on the example at index 2500
image, class_id = data[2500]
image_input = preprocess(image).unsqueeze(0).to(device)
text_inputs = torch.cat([clip.tokenize(f"a photo of a {c}") for c in data.classes]).to(device)

Next, we calculate the input features, i.e., we encode the image and the text prompts with the CLIP model.

# Calculate features
with torch.no_grad():
    image_features = model.encode_image(image_input)
    text_features = model.encode_text(text_inputs)

Now we compute the similarity between the image features and the text features and pick the top 5 labels that best match the input image.

# Pick the top 5 most similar labels for the image
image_features /= image_features.norm(dim=-1, keepdim=True)
text_features /= text_features.norm(dim=-1, keepdim=True)
similarity = (100.0 * image_features @ text_features.T).softmax(dim=-1)
values, indices = similarity[0].topk(5)

Finally, we print our results and plot the image for visualization.

# Print the result
print("\nTop predictions:\n")
for value, index in zip(values, indices):
    print(f"{data.classes[index]:>16s}: {100 * value.item():.2f}%")

# Plot the image
plt.imshow(image)
plt.title(f"Image for class: {data.classes[class_id]}")
plt.axis('off')
plt.show()

Output

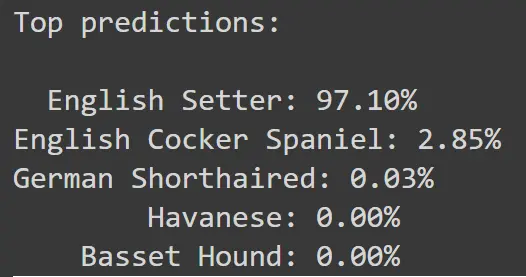

Figure: True Class: English Setter.

Figure: Model output on top-5 labels with matching probabilities
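The probabilities in that output are a softmax over scaled cosine similarities. The pure-Python sketch below mirrors the normalization, the factor of 100, and the top-k selection from the tutorial code on tiny made-up vectors. The three-dimensional features and the helper names (`normalize`, `softmax`, `top_k_labels`) are illustrative assumptions, not real CLIP embeddings or CLIP API calls.

```python
import math

def normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def softmax(logits):
    """Convert logits to probabilities that sum to 1."""
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - max_logit) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_labels(image_feature, text_features, labels, k=5, scale=100.0):
    """Return the k (label, probability) pairs with the highest probability."""
    image_unit = normalize(image_feature)
    logits = []
    for text_feature in text_features:
        text_unit = normalize(text_feature)
        cosine = sum(a * b for a, b in zip(image_unit, text_unit))
        logits.append(scale * cosine)
    probabilities = softmax(logits)
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Made-up features standing in for encoded prompts and one encoded image.
labels = ["English Setter", "Beagle", "Persian"]
text_features = [[0.9, 0.1, 0.2], [0.7, 0.6, 0.1], [0.1, 0.2, 0.9]]
image_feature = [1.0, 0.2, 0.2]

predictions = top_k_labels(image_feature, text_features, labels, k=2)
for label, probability in predictions:
    print(f"{label:>16s}: {100 * probability:.2f}%")
```

Because the cosine similarities are multiplied by 100 before the softmax, even modest differences in similarity turn into sharply peaked probabilities, which is why the top label often receives most of the probability mass.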

So, here we see that the CLIP model is able to classify the dog breed without any prior training on the dataset. We run the same inference over multiple images, and the results are shown below:

Figure: Inference Images on Dataset using CLIP

From the above example, we can see that CLIP has decent zero-shot capability on animal breed classification, as it classifies 5 of the 6 images correctly.
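To turn a handful of spot checks like this into a number, one can count how often the true class appears among the top-ranked labels. The sketch below does this for six hypothetical records chosen to mirror a five-out-of-six result. The breed names in `records` are placeholders rather than the actual images from the figure, and `top_k_accuracy` is a helper name introduced here.

```python
def top_k_accuracy(records, k=1):
    """Fraction of records whose true label is among the first k ranked predictions."""
    hits = sum(1 for true_label, ranked in records if true_label in ranked[:k])
    return hits / len(records)

# Hypothetical (true label, ranked predictions) pairs, best guess first.
records = [
    ("English Setter", ["English Setter", "Beagle", "Pug"]),
    ("Persian", ["Persian", "Ragdoll", "Birman"]),
    ("Pug", ["Pug", "Beagle", "English Setter"]),
    ("Sphynx", ["Sphynx", "Bengal", "Persian"]),
    ("Ragdoll", ["Birman", "Ragdoll", "Persian"]),  # top-1 miss, top-2 hit
    ("Beagle", ["Beagle", "English Setter", "Pug"]),
]

top1 = top_k_accuracy(records, k=1)
top2 = top_k_accuracy(records, k=2)
print(f"top-1 accuracy: {top1:.3f}, top-2 accuracy: {top2:.3f}")
```

Six images are far too few for a reliable estimate; looping the same computation over the whole dataset would give a more trustworthy figure.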

Thus, we can say that the CLIP Model adapts pretty well to the provided dataset. Now, what if we want to improve the performance of the CLIP Model over this dataset?

For this, we will have to fine-tune our CLIP model. We aim to do that in the next part of the article, to keep each article short and focused.

Conclusion

In addressing the zero-shot capabilities of the CLIP Model, it's evident that CLIP represents a groundbreaking advancement in the field of multimodal AI.

Its ability to generalize and excel in tasks involving both images and text, without specific prior training, is a testament to its pioneering zero-shot transfer learning foundation.

The model's proficiency in making accurate predictions on entirely new classes or concepts it hasn't encountered during training is a remarkable feat, bridging the gap between natural language understanding and computer vision.

CLIP's success is underpinned by its innovative approach, drawing inspiration from the intersection of computer vision and natural language supervision. By pre-training the model on a massive corpus of text-image pairs from the internet, CLIP learns to recognize a wide spectrum of visual concepts and their textual representations.

Its adoption of modern deep learning architectures, particularly Transformers, allows it to model the intricate relationships between images and text.

In our analysis, we explored how CLIP adapts to an animal breed dataset, showcasing its zero-shot capability on breed classification.

CLIP's ability to classify images accurately without specific dataset-tailored training positions it as a powerful tool for various applications, from image classification to object detection.

It's clear that CLIP has the potential to drive significant advancements in the realm of multimodal AI, revolutionizing the way we interact with and understand visual and textual data.

In the next part of this blog, we will focus on fine-tuning CLIP to improve its prediction accuracy.

Frequently Asked Questions (FAQ)

1. What is OpenAI's CLIP?

CLIP, which stands for Contrastive Language-Image Pre-training, is a system that acquires visual knowledge through guidance from natural language. In traditional supervised computer vision setups, models are trained or fine-tuned using a predefined set of labels.

However, this approach constrains the model: it has to be retrained whenever it encounters new labels, which limits its adaptability and scalability.
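CLIP avoids this retraining step because its label set is simply a list of text prompts. The sketch below illustrates the idea with a stand-in text encoder: `toy_embed` and `TOY_TEXT_VECTORS` are hypothetical and exist only to keep the example runnable, where a real pipeline would call CLIP's text encoder instead. The prompt template matches the one used in the tutorial code.

```python
def build_prompts(class_names, template="a photo of a {}"):
    """Turn class names into text prompts, one per class."""
    return [template.format(name) for name in class_names]

def zero_shot_classify(image_vector, class_names, embed_text):
    """Pick the class whose prompt embedding has the largest dot product with the image."""
    prompts = build_prompts(class_names)
    scores = [
        sum(a * b for a, b in zip(image_vector, embed_text(prompt)))
        for prompt in prompts
    ]
    best_index = max(range(len(class_names)), key=lambda i: scores[i])
    return class_names[best_index]

# Hypothetical 2-d "text embeddings", keyed by the last word of the prompt.
TOY_TEXT_VECTORS = {"beagle": [1.0, 0.0], "pug": [0.0, 1.0], "sphynx": [-1.0, 0.0]}

def toy_embed(prompt):
    """Stand-in for a text encoder; only handles single-word class names."""
    return TOY_TEXT_VECTORS[prompt.split()[-1]]

image_vector = [-0.9, 0.2]  # made-up image embedding that lies closest to "sphynx"

# Adding a class only means adding a prompt; nothing is retrained.
before = zero_shot_classify(image_vector, ["beagle", "pug"], toy_embed)
after = zero_shot_classify(image_vector, ["beagle", "pug", "sphynx"], toy_embed)
print(before, after)
```

With only two candidate labels the image is forced onto the nearest available one, and once the third prompt is added the better match wins, all without touching any model weights.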

2. Which CLIP model stands out as the top performer?

The H/14 variant has achieved 78.0% zero-shot top-1 accuracy on the ImageNet dataset and 73.4% on zero-shot image retrieval at Recall@5 on MS COCO. As of September 2022, it was the best open-source CLIP model available.

These CLIP models undergo self-supervised training on an extensive dataset consisting of hundreds of millions or even billions of (image, text) pairs.
