Evolution of AI: From Turing's Test to Now

Table of Contents

- What is artificial intelligence (AI)?

- Early Days of AI Research and Pioneering Minds

- How does AI work?

- Why is artificial intelligence important?

- What are the types of artificial intelligence?

- AI's Two Sides: A Boon and a Challenge

- Applications of AI

What is artificial intelligence (AI)?

A subfield of computer science known as artificial intelligence (AI) studies the development of intelligent agents, or self-governing systems having the capacity for reasoning, learning, and action.

AI research has been highly successful in creating efficient methods to address a variety of problems, from diagnosing medical conditions to playing video games. Though definitions of AI vary, a few fundamental ideas appear in most of them:

Reasoning - The capacity to derive inferences and reach decisions using information and logic.

Learning - The capacity to pick up new information and abilities by experience.

Acting autonomously - The capacity to operate in the outside environment without being explicitly programmed for every situation.

Early Days of AI Research and Pioneering Minds

Early days (1940s-1950s)

For the study and design of control and communication systems, the years 1940–1960 were a time of extraordinary intellectual ferment and innovation. The work of Norbert Wiener and others from this era still influences several scientific and technological fields.

Wartime technological advances, combined with a growing interest in the relationships between biological and mechanical systems, gave rise to the revolutionary field of cybernetics.

In 1943, a publication by Warren McCulloch and Walter Pitts presented the idea of an artificial neuron—a mathematical representation of a real neuron.
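The idea can be illustrated with a minimal sketch. This is a simplified threshold unit in the spirit of the McCulloch-Pitts neuron, not a reproduction of the 1943 formulation; the function names, weights, and thresholds below are assumptions chosen for illustration.

```python
def mcculloch_pitts_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mcculloch_pitts_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mcculloch_pitts_neuron([a, b], [1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

With only a threshold change, the same unit computes different logic functions, which is why such units could be seen as building blocks for more complex computation.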

The birth of AI (1950s)

1950 saw the publication of Alan Turing's groundbreaking paper "Computing Machinery and Intelligence," which introduced the Turing Test as a means of assessing a machine's level of intelligence. This started a discussion on what constitutes "intelligent" machine behaviour.

1956 - The Dartmouth workshop, organised by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is credited with establishing artificial intelligence as a legitimate academic discipline. Researchers convened to discuss the possibility of building machines that could simulate human intelligence, laying the foundation for subsequent progress.

1958 - Alex Bernstein creates one of the first computer programmes capable of playing a complete game of chess. It's rudimentary by today's standards, but it's a big step forward for AI.

Rise and fall (1960s-1980s)

For AI, the 1960s and early 1970s were a time of immense promise. Significant advancements were made by researchers in fields like machine learning, computer vision, and natural language processing.

1966 - Joseph Weizenbaum's chatbot ELIZA simulates a psychotherapist and shows how AI might be used to improve human-computer interaction.

The field did, however, also experience some setbacks. For instance, the "AI winter" of the late 1970s and early 1980s was a time of disillusionment brought on by the shortcomings of early computers and the challenges associated with creating efficient AI algorithms.

The rise of deep learning and the AI boom

AI witnessed a comeback in popularity in the 1990s, partly due to advancements in computer hardware and software.

Deep learning algorithms, which are inspired by the structure and function of the brain, have since revolutionised AI. Because they are particularly good at pattern recognition and data-driven learning, these algorithms have transformed computer vision, speech recognition, and natural language processing.

Artificial intelligence (AI) is now used in many aspects of our daily lives, from virtual assistants like Siri and Alexa to facial recognition on smartphones. Deep learning continues to drive progress in domains like autonomous vehicles, medical diagnosis, and scientific research.

AI is still developing quickly, and new uses for it are emerging in fields like robotics, healthcare, and climate science. At the same time, ethical concerns about bias and the possible misuse of AI are becoming more and more prominent.

AI's past is evidence of human curiosity and inventiveness. From the first visions of artificial intelligence to the sophisticated algorithms of today, AI has come a long way. Going forward, it will be essential to make sure AI is created and applied ethically, for the good of both the earth and humankind.

How does AI work?

Artificial intelligence relies on algorithms and models that imitate aspects of human cognitive processes. The following steps are usually involved:

1. Input:

AI starts by gathering data, which can be text, images, speech, or other formats.

Engineers ensure this data is readable by the algorithms.

Context and desired outcomes are clearly defined at this stage.

2. Processing:

AI analyzes the inputted data to extract patterns and make decisions.

It combines pre-programmed rules with learned behaviors to recognize patterns in real-time data.

3. Data Outcomes:

AI predicts outcomes based on its analysis.

This step determines the success or failure of the prediction.

4. Adjustments:

If outcomes are unsatisfactory, AI adjusts its algorithms and rules to learn from mistakes.

Outcomes may be refined to better align with desired results.

5. Assessment:

AI analyzes data, makes inferences and predictions, and provides feedback for future runs.

This assessment phase is crucial for continuous improvement.
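The five steps above can be sketched with a deliberately tiny example: fitting a single weight so that predictions match the data. The dataset, function names, learning rate, and number of rounds are all assumptions made for illustration; real systems use far richer models, but the loop has the same shape.

```python
# Step 1 - Input: hypothetical training pairs (x, y) that follow y = 2x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]


def predict(weight, x):
    """Step 2 - Processing: apply the current model to an input."""
    return weight * x


def mean_squared_error(weight, samples):
    """Step 3 - Outcomes: measure how far predictions are from the desired results."""
    return sum((predict(weight, x) - y) ** 2 for x, y in samples) / len(samples)


def adjust(weight, samples, learning_rate=0.01):
    """Step 4 - Adjustments: nudge the weight in the direction that reduces the error."""
    gradient = sum(2 * (predict(weight, x) - y) * x for x, y in samples) / len(samples)
    return weight - learning_rate * gradient


def train(samples, rounds=200):
    """Repeat the predict/adjust cycle and keep an error history for assessment."""
    weight = 0.0
    history = []
    for _ in range(rounds):
        weight = adjust(weight, samples)
        # Step 5 - Assessment: record the error so progress can be reviewed later.
        history.append(mean_squared_error(weight, samples))
    return weight, history


if __name__ == "__main__":
    learned_weight, errors = train(data)
    print("learned weight:", round(learned_weight, 4))
    print("first error:", errors[0], "last error:", errors[-1])
```

The error history is what makes the assessment step possible: if the error stops shrinking, that is a signal to revisit the data or the model rather than to keep iterating.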

Why is artificial intelligence important?

Artificial intelligence (AI) allows machines to learn from experience, adapt to new inputs, and carry out tasks that would normally require human intelligence. Most AI examples discussed today, such as chess-playing computers and self-driving cars, rely heavily on deep learning and natural language processing. With these technologies, computers can be trained to process vast volumes of data and identify patterns in it in order to perform particular tasks.

Automated Learning and Discovery - By utilising data, AI simplifies repetitious learning and discovery processes and reduces the need for manual intervention in mundane tasks.

Enhanced Product Intelligence - By adding intelligent features, enhancing their capabilities, and giving customers access to more advanced functionality, artificial intelligence (AI) enhances current products.

Adaptive Programming through Progressive Learning - Through algorithms for continuous learning, artificial intelligence (AI) develops, letting data direct programming and enabling systems to respond dynamically to changing circumstances.

In-depth Data Analysis with Neural Networks - Artificial Intelligence (AI) uses neural networks with multiple hidden layers to examine large and complex datasets, revealing deeper insights and patterns that may be difficult to find using conventional methods.

Precision through Deep Neural Networks - Deep neural networks enable AI to operate with extraordinary accuracy, honing its capacity to make accurate judgements and predictions based on intricate patterns seen in the data.

Optimizing Data Utilization - Artificial Intelligence (AI) optimises data through effective extraction of meaningful information, enabling well-informed decision-making, and revealing hidden relationships within the large amount of available data.

What are the types of artificial intelligence?

AI Based on Capabilities

Weak AI or Narrow AI - AI that can intelligently carry out a specific task is known as narrow AI. Narrow AI is the most prevalent type of artificial intelligence currently available.

Narrow AI examples include chess programs, purchase suggestions on e-commerce sites, self-driving automobiles, speech recognition, and image recognition.

General AI - Artificial intelligence (AI) that is capable of doing any intellectual work as well as humans is known as general AI.

There isn't yet a system that falls under general artificial intelligence (AI) that can carry out any task as well as a human.

Researchers around the world are currently working toward machines that possess general artificial intelligence.

Super AI - Super AI refers to a level of system intelligence at which machines outperform humans at any task, including cognitive ones. It is seen as an outgrowth of general AI.

Super artificial intelligence is currently only a hypothetical concept. Building such a system in the real world would be a world-changing undertaking.

AI Based on functionality

Reactive Machines - The most basic form of AI. These AI systems don't store memories or past experiences for future use.

These machines consider only the current situation and respond to it in the best way feasible.

Examples include Google's AlphaGo and IBM's Deep Blue system.

Limited Memory - Machines with limited memory can temporarily store certain data or memories.

These machines can use stored data only for a limited period of time.

Self-driving cars are one of the best examples of limited memory systems.

Theory of Mind - Theory of Mind AI should be able to communicate socially with humans and comprehend human emotions, people, and beliefs.

There are currently no such AI machines.

Self-Awareness - This is the future of AI. Such machines would be extremely intelligent and possess sentience, emotions, and self-awareness.

It is a hypothetical idea.
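The difference between the first two categories, reactive machines and limited memory, can be sketched in a few lines. The braking scenario, class names, distances, and thresholds below are hypothetical and chosen only to make the contrast visible; they do not describe how any real vehicle works.

```python
from collections import deque


class ReactiveBrake:
    """Reactive: decides from the current reading only; nothing is stored."""

    def decide(self, distance_m):
        return "brake" if distance_m < 10 else "cruise"


class LimitedMemoryBrake:
    """Limited memory: keeps only the last few readings to estimate a trend."""

    def __init__(self, window=3):
        # Readings older than the window are discarded automatically.
        self.recent = deque(maxlen=window)

    def decide(self, distance_m):
        self.recent.append(distance_m)
        # Compare the oldest and newest stored readings to see how fast the gap closes.
        closing_fast = len(self.recent) >= 2 and self.recent[0] - self.recent[-1] > 5
        return "brake" if distance_m < 10 or closing_fast else "cruise"


if __name__ == "__main__":
    reactive, limited = ReactiveBrake(), LimitedMemoryBrake()
    for reading in (30, 26, 20):
        print(reading, reactive.decide(reading), limited.decide(reading))
```

Given the same shrinking distances, the reactive version keeps cruising because each reading looks safe on its own, while the limited-memory version notices the gap closing and brakes earlier.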

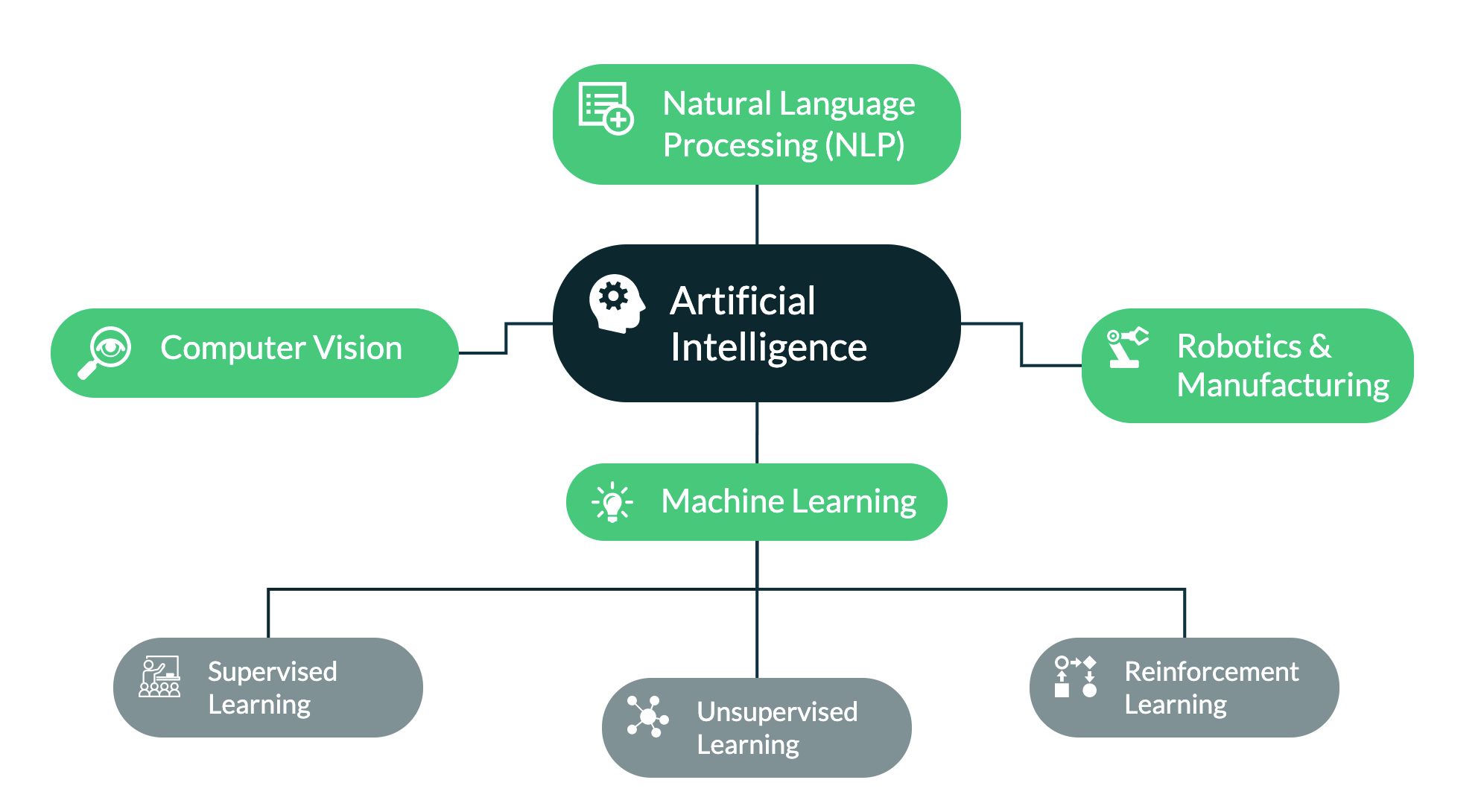

Subsets of AI

Though there are many varieties of AI, the following are some of the most popular ones:

Machine learning: Without being expressly programmed, this kind of AI learns from data using algorithms. Autonomous vehicles and facial recognition software are just two examples of the many applications that machine learning has been utilised to produce.

Natural language processing: A subset of artificial intelligence that studies how people communicate in natural languages with computers. Chatbots and machine translation are two examples of applications that have been developed using natural language processing.

Computer vision - This subset of artificial intelligence focuses on enabling computers to interpret and understand images and video. Applications like medical image analysis and object recognition have been developed using computer vision.

Robotics - This branch of AI is dedicated to robot design and manufacture. Robots are frequently used to carry out jobs that are hard for people to perform consistently.

AI's Two Sides: A Boon and a Challenge

Our world is changing quickly due to artificial intelligence (AI), which presents both enormous opportunities and urgent challenges. There are still concerns about job displacement and ethical ramifications even with its apparent ability to increase efficiency, decrease errors, and even address societal issues like disinformation. To have a more comprehensive understanding of AI's impact, let's examine both sides of the issue.

On the Bright Side

Unleashing Efficiency: Artificial intelligence (AI) improves decision-making, streamlines procedures, and automates repetitive work, which results in notable efficiency benefits across a range of industries. Imagine AI systems anticipating and preventing equipment faults, saving time and resources, or robots tirelessly assembling cars in factories.

Reduced Human Error: In vital domains like healthcare and finance, AI's analytical skills can reduce human error. AI-powered fraud detection systems can protect financial transactions, while medical diagnosis tools can help diagnose diseases earlier and more accurately.

Innovation Unlocked: Artificial Intelligence is a potent instrument for scientific research and technological progress. AI is advancing innovation and reshaping the future through the creation of new materials and sustainable energy solutions.

The Shadow Side

Job Displacement Fears: AI-powered automation gives rise to worries about job displacement, especially in industries built around routine tasks. Although artificial intelligence will create new occupations, the transition for those who are displaced may be difficult and require retraining and adaptability.

Ethical Dilemmas: There are a number of ethical issues to consider, including algorithmic bias, privacy concerns, and the possibility of AI being misused for manipulation or surveillance. To prevent unintended consequences, it is imperative that AI be developed and implemented responsibly.

Explainability and Transparency: AI decision-making processes can be opaque, making it challenging to understand how and why particular results are reached. Transparency can boost confidence and promote ethical AI development.

The enormous promise of AI cannot be overlooked, but the difficulties involved in developing and deploying it must be carefully considered. We can minimise the hazards associated with AI while maximising its potential by encouraging honest communication, giving ethical issues top priority, and funding reskilling programmes. Remember that AI is a tool, and like any tool, the way we choose to use it will determine the impact it has. Let's make sure that, on the thrilling path of technological innovation, AI becomes a force for progress and shared prosperity, leaving no one behind.

Applications of AI

Business Intelligence

AI streamlines data collection and analysis, identifying trends and relationships within structured and unstructured data.

Visualization tools powered by AI make complex data easily digestible, leading to better decision-making, increased productivity, and cost reduction.

Revolutionizing Healthcare

AI assists in disease diagnosis, analyzing patient data to detect patterns and enabling earlier, more accurate diagnosis.

By analyzing vast datasets, AI helps develop new drugs and personalized treatment plans tailored to individual needs.

Transforming Education

AI personalizes learning experiences, identifying areas where students need support and providing targeted instruction.

Engaging tools powered by AI keep students active, fostering better understanding and knowledge retention.

Administrative tasks like grading and scheduling can be automated, freeing up teachers for valuable teaching time.

Enhancing Agriculture

AI models optimize crop yields by analyzing soil conditions, weather patterns, and growth data, resulting in more efficient food production.

Automation of tasks like harvesting and irrigation reduces labor costs while promoting environmental sustainability.

Modernizing Manufacturing

AI automates production processes, minimizing errors and maximizing efficiency.

Optimization of workflows by AI increases productivity and product quality.

Defect detection and improved quality control are further benefits of AI in manufacturing.

AI also has a significant impact well beyond these sectors. It is being used in finance (fraud detection and risk management), retail (personalised shopping experiences), transportation (self-driving cars and traffic management), energy (energy efficiency and demand prediction), and government (enhanced public safety and citizen services).
