How To Choose The Perfect LLM For The Problem Statement Before Fine-Tuning

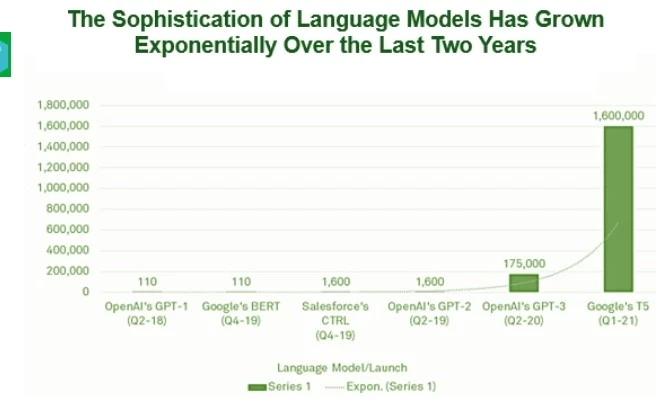

Large Language Models (LLMs) are AI-enabled tools that excel at producing text responses that are human-like when given particular prompts, and they are radically changing how people interact with computers.

One of the best-known LLM-powered products is ChatGPT, which OpenAI launched in November 2022. Large language models are now widely used, and discussions about them on social media and in the press are common.

The goal of this blog is to cut through the noise and offer a succinct assessment of the common scenarios in which different types of LLMs have proven useful.

When adopting AI solutions in an enterprise, it is crucial to choose the appropriate LLM for a given use case. By choosing carefully, firms may gain a significant competitive advantage, empower employees, improve operational efficiency and accuracy, and make better-informed decisions.

Although ChatGPT is well known, there are many alternative LLM-powered options, and the right choice depends on the particular needs of your use case. Alternatives to OpenAI's GPT models, which power ChatGPT, include Google's PaLM, Anthropic's Claude, and Meta's LLaMA. These models may suit your needs better.

Why is it important to consider the use case when selecting an LLM?

The use case, or the main function you require, determines the best large language model (LLM) or AI platform for your company. Several important considerations need to be taken into account.

(i) Defining Objectives: The first step is to describe in detail what you want the LLM to do. Will it be used for sentiment analysis, content creation, text analysis, or translation? Clearly defined goals are essential.

(ii) Task Complexity: Determine how difficult the tasks the LLM must complete are. Do you need simple text parsing, or more complex capabilities such as generating insights and recommendations? Task complexity influences which LLM you should choose.

(iii) Budgetary Constraints: Cost is an important consideration, so plan your spending carefully. Will you use the LLM occasionally, regularly, or at large scale? Make sure the costs of the candidate models fit both your budget and your expected usage.
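To check whether a model fits the budget, a rough monthly cost can be estimated from request volume, tokens per request, and per-token prices. The sketch below is a minimal illustration; the model names and prices are hypothetical placeholders, not any vendor's actual rates, so substitute current figures from each provider's pricing page.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    """Per-million-token prices in USD (hypothetical placeholder values)."""
    name: str
    input_per_million: float
    output_per_million: float


def monthly_cost(pricing: ModelPricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly API spend for a fixed daily request volume."""
    total_requests = requests_per_day * days
    input_cost = total_requests * input_tokens * pricing.input_per_million / 1_000_000
    output_cost = total_requests * output_tokens * pricing.output_per_million / 1_000_000
    return input_cost + output_cost


# Hypothetical models and prices, for illustration only.
candidates = [
    ModelPricing("large-model", input_per_million=30.0, output_per_million=60.0),
    ModelPricing("small-model", input_per_million=0.5, output_per_million=1.5),
]

for model in candidates:
    cost = monthly_cost(model, requests_per_day=1_000, input_tokens=500, output_tokens=200)
    print(f"{model.name}: ${cost:,.2f} per month")
# large-model: $810.00 per month
# small-model: $16.50 per month
```

Even with made-up prices, the gap shows why usage volume matters as much as per-token price: the same workload can differ by more than an order of magnitude between model tiers.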

Practical Applications: Real-World Uses

I. Question Answering

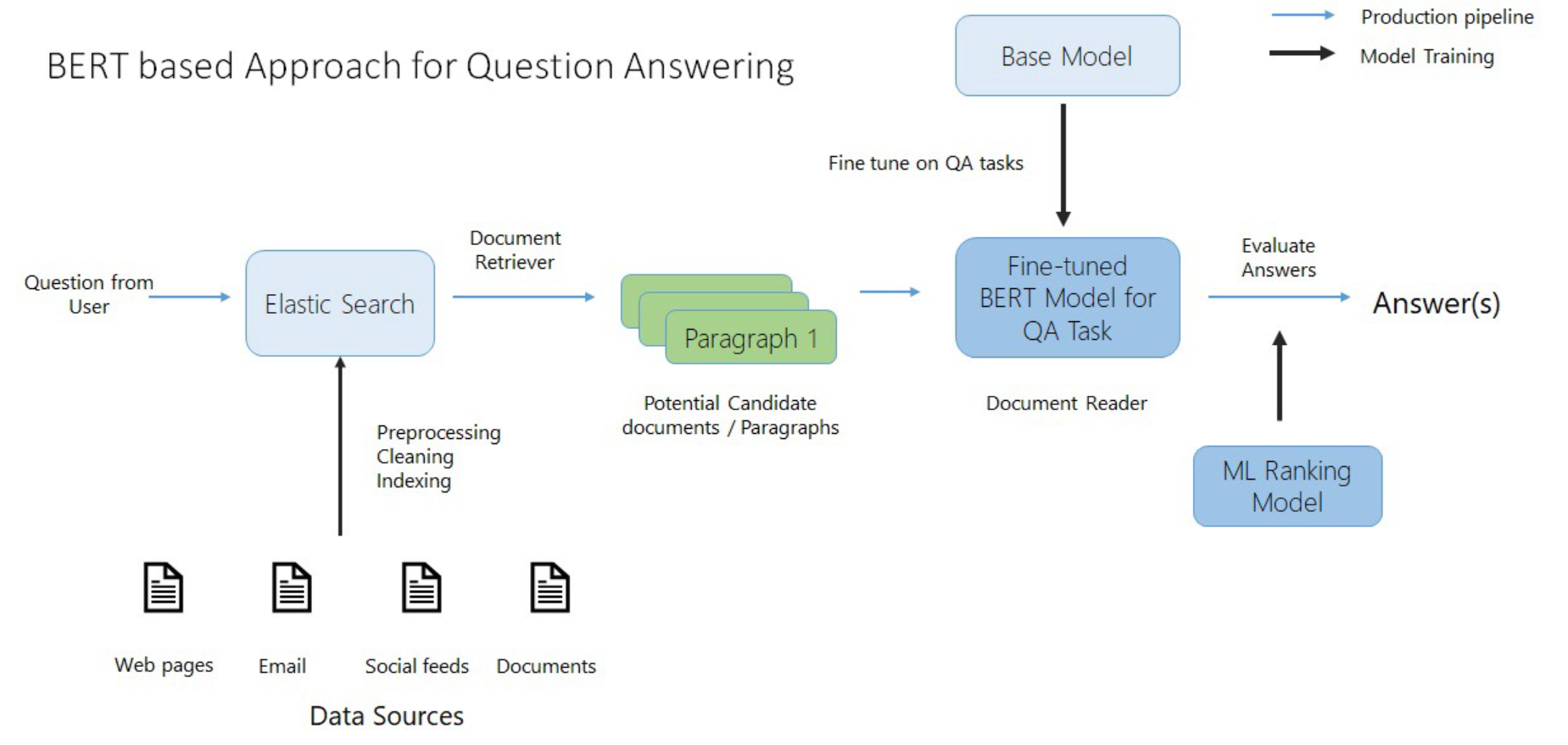

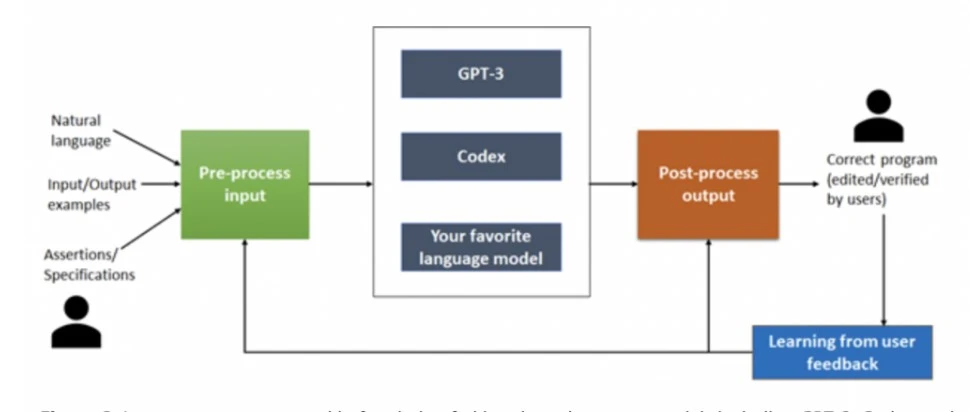

Question answering using LLMs involves a two-step process: understanding the user's query and then summarizing the relevant information into a concise answer. This is achieved by leveraging large language models, like GPT-3.5, which are designed for natural language understanding and generation.

(i) Understanding User Intent: The first step is to use LLMs to comprehend what the user is looking for. This often involves parsing the query, identifying keywords, and understanding context and semantics.

(ii) Summarizing Information: After the relevant information has been identified, an LLM (the same model or a second one) generates a summarized response that best answers the user's query.

Because each step takes text in and produces text out, LLM calls are easy to chain together for more complex tasks.
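The two-step flow can be sketched as two prompt calls chained around a search step. This is a minimal sketch, not any product's implementation: `fake_llm` and `fake_search` are hypothetical stand-ins for a real model API and a real document search, stubbed so the example runs on its own.

```python
from typing import Callable

# Any function that takes a prompt string and returns the model's text reply.
LLM = Callable[[str], str]


def understand_query(llm: LLM, question: str) -> str:
    """Step 1: ask the model to restate the user's intent as search keywords."""
    prompt = (
        "Rewrite the user's question as a short list of search keywords.\n"
        f"Question: {question}\nKeywords:"
    )
    return llm(prompt).strip()


def summarize_answer(llm: LLM, question: str, context: str) -> str:
    """Step 2: ask the model to answer concisely from the retrieved context."""
    prompt = (
        "Answer the question in one or two sentences using only the context.\n"
        f"Context: {context}\nQuestion: {question}\nAnswer:"
    )
    return llm(prompt).strip()


def answer(llm: LLM, question: str, search: Callable[[str], str]) -> str:
    """Chain the steps: intent -> retrieval -> concise answer."""
    keywords = understand_query(llm, question)
    context = search(keywords)
    return summarize_answer(llm, question, context)


# Hypothetical stubs so the example runs without an API key.
def fake_llm(prompt: str) -> str:
    if prompt.endswith("Keywords:"):
        return " refund policy days"
    return " Refunds are accepted within 30 days of purchase."


def fake_search(keywords: str) -> str:
    # A real implementation would query a search index with the keywords.
    return "Our refund policy: items can be returned within 30 days of purchase."


print(answer(fake_llm, "How long do I have to return an item?", fake_search))
```

Passing the model and the search function in as parameters keeps each step swappable, so you can try a different LLM for each stage without changing the chain.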

LLMs that can be used for Question Answering:

(i) GPT-3.5 and GPT-4 (Generative Pre-trained Transformer 3.5 and 4)

GPT is one of the most comprehensive and adaptable families of language models. GPT-3 has 175 billion parameters; OpenAI has not disclosed the parameter counts of GPT-3.5 or GPT-4. At this scale, the models can comprehend and produce text that closely resembles human writing.

Pros:

- High-quality responses

- Ease of use

Cons:

- High computational demands

- Costly API access

- Expensive to employ extensively

(ii) BERT (Bidirectional Encoder Representations from Transformers)

BERT, created by Google, looks at the words to both the left and the right of each word in the input to understand its context in a phrase. It has been pre-trained on a large corpus of text and can be fine-tuned for specific tasks such as question answering, where it achieved state-of-the-art results on several benchmarks when it was released in 2018. For question answering, BERT is extractive: it selects the answer as a span of text from a passage you provide rather than generating new text. Its ability to capture contextual information makes it well suited to tasks where understanding context is essential.

Pros:

- Effective Answers

- Natural Language Understanding

- Versatility

- Open-Source

Cons:

- Complex Fine-Tuning

- Privacy and Data Concerns

- Continuous Maintenance
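Because a BERT-style QA model predicts a start score and an end score for every token of the passage, the answer is the highest-scoring valid span. The sketch below shows only that post-processing step on made-up scores; in practice the scores come from a model fine-tuned for question answering, which is not shown here.

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float],
              max_answer_len: int = 15) -> Tuple[int, int]:
    """Return (start, end) token indices maximizing start score + end score.

    A span is valid only if end >= start and it is at most max_answer_len tokens long.
    """
    best_score = float("-inf")
    best = (0, 0)
    for s, s_score in enumerate(start_logits):
        # Only consider end positions at or after the start, within the length limit.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best


tokens = ["the", "eiffel", "tower", "is", "in", "paris", "france"]
# Made-up scores standing in for a fine-tuned model's output.
start_logits = [0.1, 0.2, 0.0, 0.1, 0.3, 2.5, 0.4]
end_logits = [0.0, 0.1, 0.3, 0.0, 0.1, 2.2, 0.5]

s, e = best_span(start_logits, end_logits)
print(" ".join(tokens[s:e + 1]))  # paris
```

The `end >= start` constraint is why the answer is always a contiguous piece of the passage: the model can point at text, but it cannot write new text.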

(iii) Contriever

Contriever is a dense retrieval model from Facebook AI Research (now Meta AI), trained with contrastive learning to find the passages most relevant to a query. It does not generate answers itself; in a question-answering pipeline it retrieves passages that a reader or generator model then uses. When considering it, weigh factors such as computational cost, data requirements, and how well it fits your specific use case compared with competing models.

Pros:

- Efficient Information Compilation

- Specific Problem Solving

- Low Latency

Cons:

- Scaling Complexity

- Fine-Tuning Expenses

- Inference Costs
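Retrieval with a dense model like Contriever comes down to scoring each passage by the dot product between the query embedding and the passage embedding, then keeping the top results. In this sketch the 3-dimensional vectors are made-up stand-ins for real encoder outputs (Contriever's embeddings are 768-dimensional); only the ranking logic is being illustrated.

```python
from typing import Dict, List, Tuple


def dot(a: List[float], b: List[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def top_k(query_vec: List[float], passages: Dict[str, List[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Rank passages by dot-product relevance to the query and keep the best k."""
    scored = [(pid, dot(query_vec, vec)) for pid, vec in passages.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy embeddings standing in for real encoder outputs.
passages = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.8, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
query = [0.8, 0.3, 0.0]

for pid, score in top_k(query, passages):
    print(pid, round(score, 2))
# refund-policy 0.75
# shipping-times 0.4
```

Passage embeddings can be computed once and stored, so at query time only the query is encoded, which is what keeps this kind of retrieval fast.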

(iv) LLaMA:

Usage for Question Answering: LLaMA is a family of general-purpose foundation models released by Meta in 2023, not a model built specifically for summarization or question answering. With suitable prompting or fine-tuning, however, it can comprehend queries well and extract brief, pertinent information from documents.

How it is Used: When a user asks a question, a retrieval step first searches the documents for the most pertinent passages; LLaMA then analyzes the question together with those passages and produces a condensed answer.

Pros:

- High Comprehension and Accuracy

- Efficient Information Retrieval

- Consistent Response

Cons:

- High Training Costs

- Fine-Tuning Expenses

- Dependency on Data Quality
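In a retrieve-then-answer setup like the one described for LLaMA, the retrieved passages must fit inside the model's context window (2,048 tokens for the original LLaMA models). A minimal sketch of packing passages into a prompt under that budget, assuming whitespace-separated words as a rough proxy for tokens; a real system would count with the model's own tokenizer, and the prompt wording here is hypothetical.

```python
from typing import List


def approx_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def build_prompt(question: str, passages: List[str], budget: int = 2048,
                 reserve_for_answer: int = 256) -> str:
    """Add passages in relevance order until the context budget would be exceeded."""
    header = "Answer the question using the passages below.\n\n"
    footer = f"\nQuestion: {question}\nAnswer:"
    used = approx_tokens(header) + approx_tokens(footer) + reserve_for_answer
    kept = []
    for passage in passages:  # assumed already sorted, most relevant first
        cost = approx_tokens(passage)
        if used + cost > budget:
            break  # stop at the first passage that does not fit, preserving order
        kept.append(passage)
        used += cost
    return header + "\n".join(kept) + footer


question = "When was the product launched?"
passages = ["The product launched in March.", "filler " * 3000, "It sold well."]
prompt = build_prompt(question, passages)
print(prompt)
```

Reserving part of the window for the answer matters because the generated tokens share the same context limit as the prompt.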

II. Summarization

As data volumes continue to skyrocket, especially as computer systems generate more information on their own, good summaries are becoming increasingly important for helping people grasp articles, podcasts, movies, and earnings calls.

Fortunately, LLMs can do this too. Two basic summarization strategies have become popular. The first is abstractive summarization, which creates new text that distills the essence and key ideas of a longer source.

It essentially rephrases and condenses the material in its own words, making it easier to understand and more engaging. The second technique is extractive summarization, which identifies and extracts the most important and relevant sentences from a given text or prompt.

The goal of this strategy is to reuse the existing sentences or phrases that best represent the main ideas of the source. The outcome is a brief summary made up of text taken directly from the original.
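Extractive summarization can be illustrated without any model: score each sentence by how frequent its words are across the whole text, then keep the top sentences in their original order. This classic word-frequency heuristic is a stand-in to show the idea; LLM-based extractive systems replace the scoring step with a learned model.

```python
import re
from collections import Counter
from typing import List

STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on", "was"}


def split_sentences(text: str) -> List[str]:
    """Naive sentence splitter on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> List[str]:
    """Lowercase alphabetic words with common stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n: int = 2) -> List[str]:
    """Pick the n sentences with the highest average content-word frequency."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / max(len(tokens), 1)

    # Rank sentence indices by score, then restore original order for readability.
    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:n]
    return [sentences[i] for i in sorted(top)]


text = ("Revenue grew 20 percent this quarter. Revenue growth came from cloud sales. "
        "The office cafeteria got a new menu. Cloud sales are expected to keep growing.")
print(extractive_summary(text))
# ['Revenue growth came from cloud sales.', 'Cloud sales are expected to keep growing.']
```

Every sentence in the output appears verbatim in the input, which is the defining property of extractive summaries.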

LLMs that can be used for Summarization:

(i) BERT (Bidirectional Encoder Representations from Transformers):

Description: BERT is a transformer-based encoder model that can be fine-tuned for summarization tasks. It understands context by considering the surrounding words in both directions.

Pros:

- Effective at understanding context and relationships within a document.

- Can be fine-tuned for extractive or abstractive summarization.

Cons:

- Prone to producing lengthy summaries, making it less suitable for concise summaries.

- Limited to a fixed maximum input length (512 tokens for the original models), which is a limitation for long documents.

(ii) GPT Series (GPT-2, GPT-3.5, GPT-4):

Description: GPT models are autoregressive transformers. GPT-2 and GPT-3.5, in particular, have been used for summarization by conditioning them to generate concise summaries.

Pros:

- Capable of generating abstractive summaries that are coherent and contextually relevant.

- Adaptable to various languages and domains.

Cons:

- Tend to generate verbose or redundant text in some cases.

- May struggle with very long documents.
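A common workaround for long documents is map-reduce summarization: split the text into chunks that fit the model, summarize each chunk, then summarize the combined chunk summaries. In this sketch, `summarize` is a hypothetical stand-in for a GPT call; the stub `first_sentence` just keeps each chunk's first sentence so the example runs offline.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def map_reduce_summary(text: str, summarize: Callable[[str], str],
                       max_words: int = 500) -> str:
    """Summarize each chunk (map), then summarize the joined partial summaries (reduce)."""
    partials = [summarize(chunk) for chunk in chunk_words(text, max_words)]
    combined = " ".join(partials)
    # Repeat on the partial summaries if they are still too long, but only while
    # they keep shrinking, so the recursion always terminates.
    if len(combined.split()) > max_words and len(combined) < len(text):
        return map_reduce_summary(combined, summarize, max_words)
    return summarize(combined)


def first_sentence(text: str) -> str:
    """Offline stand-in for an LLM call: keep text up to the first period."""
    head, sep, _ = text.partition(".")
    return head.strip() + sep


doc = "Cats sleep a lot. They also purr. Dogs bark loudly. They also fetch."
print(map_reduce_summary(doc, first_sentence, max_words=7))  # Cats sleep a lot.
```

The trade-off is that details spanning two chunks can be lost, so chunk size is usually set as large as the model's context window comfortably allows.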

(iii) BERTSUM:

Description: BERTSUM is a variant of BERT specifically designed for extractive summarization. It treats summarization as a binary classification task to select important sentences.

Pros:

- Excellent at extractive summarization tasks, especially when you want to preserve the original document's structure.

- Provides more control over the length and coherence of summaries.

Cons:

- Not suitable for abstractive summarization where content needs to be paraphrased.
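BERTSUM's extractive approach scores each sentence and keeps the highest-scoring ones; the original paper also applies trigram blocking, skipping a candidate that shares a trigram with an already selected sentence, to reduce redundancy. The sketch below implements only that selection step on made-up scores; producing the scores requires a fine-tuned BERTSUM model, which is not shown.

```python
from typing import List, Set, Tuple


def trigrams(sentence: str) -> Set[Tuple[str, ...]]:
    """Set of lowercase word trigrams in a sentence."""
    words = sentence.lower().split()
    return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}


def select_sentences(sentences: List[str], scores: List[float], k: int = 3) -> List[str]:
    """Pick up to k sentences by score, skipping any that repeat a selected trigram."""
    chosen: List[int] = []
    seen: Set[Tuple[str, ...]] = set()
    for i in sorted(range(len(sentences)), key=lambda j: scores[j], reverse=True):
        grams = trigrams(sentences[i])
        if grams & seen:
            continue  # trigram blocking: too similar to a sentence already chosen
        chosen.append(i)
        seen |= grams
        if len(chosen) == k:
            break
    return [sentences[i] for i in sorted(chosen)]  # keep document order


sentences = [
    "The company reported record profits this year.",
    "The company reported record profits in its annual filing.",
    "Shares rose after the announcement.",
    "Analysts expect further growth.",
]
scores = [0.9, 0.85, 0.6, 0.4]  # made-up classifier scores
print(select_sentences(sentences, scores, k=2))
```

Without blocking, the two near-duplicate "record profits" sentences would both be selected, since they have the two highest scores.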

(iv) LaMDA (Language Model for Dialogue Applications)

LaMDA is a conversational large language model developed by Google. Although it was built for dialogue, it can be used for a variety of tasks, including summarization, by extracting the most important information from a text and presenting it concisely and coherently.

Here are some of the pros of using LaMDA for summarization:

- It can be used to summarize a variety of text formats, including articles, blog posts, and even code.

- It can be used to summarize text in a variety of languages.

- It can be used to summarize text in a variety of styles, including factual, creative, and persuasive.

- Because it is built for dialogue, you can refine a summary through follow-up requests in the same conversation.

Here are some of the cons of using LaMDA for summarization:

- It can sometimes produce summaries that are not factually accurate.

- It can sometimes produce summaries that are not objective.

- It can sometimes produce summaries that are not creative or engaging.

Overall, LaMDA is a powerful tool that can be used for summarization. However, it is important to be aware of its limitations and to use it with caution.

Here are some additional things to consider when using LaMDA for summarization:

(i) The quality of the summary will depend on the quality of the input text.

(ii) It is important to provide LaMDA with clear instructions about what kind of summary you want.

(iii) You may need to edit the summary manually to improve its accuracy, objectivity, and creativity.

Overall, LaMDA is a promising tool for summarization. However, it has mainly been available through Google's own products (it initially powered Bard, which moved to PaLM 2 in 2023), so confirm that you can access it before planning around it.

(v) Bloom

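Bloom (BLOOM) is a 176-billion-parameter, open-access, multilingual large language model released in 2022 by the BigScience research collaboration coordinated by Hugging Face. It is a general-purpose model rather than one designed specifically for summarization. It was trained on a massive dataset covering 46 natural languages and 13 programming languages, and it can be prompted to generate summaries of text documents, code, and other kinds of data.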

Bloom can be used for summarization and related text-generation tasks, including:

- Generating summaries of news articles

- Summarizing research papers

- Creating abstracts for academic papers

- Writing blog posts

- Generating product descriptions

- Creating marketing copy

Bloom has several potential advantages. First, with good prompts it can generate summaries that are accurate and concise. Second, its smaller variants are relatively fast and efficient to run, which matters when speed is important (the full 176-billion-parameter model needs substantial hardware). Third, it can generate summaries in a variety of styles, making it versatile.

However, Bloom also has limitations. First, it can sometimes generate summaries that are not factually accurate. Second, its summaries can be biased, depending on the data it was trained on. Third, it can be difficult to control the length and style of its summaries. Be aware of these limitations before using it.

Here are some additional pros and cons of Bloom summarization:

Pros:

- Concise summaries that are generally accurate

- Smaller variants are relatively fast and efficient

- Versatile and can be used for a variety of tasks

- Can generate summaries in a variety of styles

Cons:

- Can sometimes be inaccurate

- Can be biased

- Can be difficult to control the length and style of the summaries

In short, Bloom is worth evaluating for summarization, especially when you want an open, multilingual model you can run yourself, provided you check its output for accuracy.

Some LLM-based products that can be used for summarization:

1. AssemblyAI: This ground-breaking platform excels at transcribing and summarizing audio and video content. By converting speech into text, AssemblyAI makes multimedia content easier to consume, analyze, and share.

2. Davinci: Davinci is a GPT-3 model from OpenAI that can perform a variety of tasks, including text summarization. By condensing dense text into more manageable formats, it improves content comprehension and overall utility. (OpenAI has since deprecated the original Davinci models in favor of newer GPT models.)

3. Cohere Generate: Cohere Generate is a text-generation product built on Cohere's large language models. It can rephrase content and condense long chapters into a few key ideas, making complicated material easier to access and understand.

4. Megatron-Turing NLG: This 530-billion-parameter model, developed by Microsoft and NVIDIA, performs well on a variety of NLP tasks, including summarization. It streamlines extracting important information from a variety of sources, enabling quicker and more precise knowledge extraction.

5. Viable: Viable is a specialized tool that compiles and summarizes qualitative data, such as customer feedback, from many sources. Its unified view is especially useful for improving productivity and decision-making in complex business operations.

The best LLM model for summarization will depend on the specific task and the resources available. However, all of the models listed above have shown promise for summarization tasks, and they are all worth considering.

Here are some additional factors to consider when choosing an LLM for summarization:

(i) The size of the model: Larger models tend to be more accurate, but they are also more computationally expensive.

(ii) The training data: The model should be trained on a dataset that is relevant to the summarization task.

(iii) The fine-tuning process: The model should be fine-tuned on a dataset that is similar to the data it will be used on.

(iv) The user's needs: The model should be able to meet the specific needs of the user, such as the desired length of the summary or the level of detail.
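Model size translates directly into hardware needs. A back-of-the-envelope estimate of the memory needed just to hold the weights is parameters × bytes per parameter. Activations, the attention cache, and (for fine-tuning) optimizer state add more on top, so treat the result as a lower bound. The sizes below loosely match a small model, the largest original LLaMA (65B), and BLOOM (176B).

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed to store the model weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("7B model", 7e9), ("65B model", 65e9), ("176B model", 176e9)]:
    print(f"{name}: {weight_memory_gb(params):.0f} GB in fp16, "
          f"{weight_memory_gb(params, 'int4'):.1f} GB in 4-bit")
# 7B model: 14 GB in fp16, 3.5 GB in 4-bit
# 65B model: 130 GB in fp16, 32.5 GB in 4-bit
# 176B model: 352 GB in fp16, 88.0 GB in 4-bit
```

This arithmetic is often what decides between a hosted API and self-hosting: a 7B model fits on a single consumer GPU, while a 176B model needs several data-center GPUs even before fine-tuning.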

III. Clustering

Clustering is a valuable technique used in natural language processing (NLP) and information retrieval to group documents or text data based on their content similarity. It involves organizing large datasets into clusters or categories, making it easier to manage, search, and understand the information. Various language models, including Large Language Models (LLMs), play a crucial role in this process.

LLMs can be used for clustering tasks in a variety of ways. For example, they can be used to:

(i) Identify similar documents: LLMs can identify documents with similar content. This is typically done by converting each document into an embedding (a numerical vector produced by the model) and comparing the embeddings.

(ii) Group documents into categories: LLMs can help group documents into categories based on their content, either by clustering their embeddings without labels or, when labeled examples exist, by training a classifier on documents already labeled with categories.

(iii) Discover new topics: LLMs can help discover new topics by identifying groups of documents that discuss similar concepts. This can be done by clustering the documents based on their embeddings, which are numerical representations of the meaning of the text.

LLMs are a powerful tool for clustering tasks, but they also have some limitations. For example, they can be biased, and they can be susceptible to generating harmful or offensive content. It is important to be aware of these limitations when using LLMs for clustering tasks.

Let's elaborate on some of the real-world applications and the LLMs used for clustering:

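(i) LLaMA

LLaMA's training data includes Wikipedia text in 20 languages, so it has some multilingual ability, although its data is predominantly English. This can help with clustering tasks where documents are written in several languages. The original LLaMA paper reports its 13-billion-parameter model outperforming GPT-3 on most benchmarks; even so, a general-purpose generative model is not necessarily the best choice for clustering tasks where high accuracy is required, and dedicated embedding models are often a better fit.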

Pros:

(i) LLaMA has some multilingual ability, so it can be used to cluster documents written in several languages.

(ii) LLaMA is trained on a massive dataset of text, which allows it to learn the nuances of language and identify even subtle similarities between documents.

(iii) LLaMA can be fine-tuned for specific clustering tasks, which can improve its performance.

Cons:

(i) LLaMA is computationally expensive, especially the larger variants, which can make it difficult to use for large datasets.

(ii) LLaMA was released as a research model, so without additional tuning it may not be as accurate as other LLMs for clustering tasks.

(iii) LLaMA can generate harmful or offensive content, which can be a problem for some applications.

Overall, LLaMA is a powerful tool for clustering tasks, but it is important to be aware of its limitations. If you are working with a large dataset or need high accuracy, you may want to consider a different LLM.

(ii) LaMDA

Pros:

(i) LaMDA was fine-tuned with explicit quality and safety objectives to make its responses informative and to avoid harmful or offensive content. This makes it a reasonable choice for clustering tasks where trustworthy, less biased results matter.

(ii) LaMDA is trained on a massive dataset of text and code, which allows it to learn the nuances of language and identify even subtle similarities between documents.

(iii) LaMDA can be fine-tuned for specific clustering tasks, which can improve its performance.

Cons:

(i) LaMDA is still under development, so it may not be as accurate as other LLMs for clustering tasks.

(ii) LaMDA can be computationally expensive to use, especially for large datasets.

(iii) LaMDA is not as powerful as some other LLMs, so it may not be the best choice for clustering tasks where high accuracy is required.

Overall, LaMDA is a powerful tool for clustering tasks, but it is important to be aware of its limitations. If you are working with a large dataset or need high accuracy, you may want to consider using a different LLM.

(iii) GPT

GPT-3: GPT-3 is a large language model developed by OpenAI. It is trained on a massive dataset of text, and it can generate text, translate languages, write different kinds of creative content, and answer questions informatively. Its pros include human-quality text generation, broad knowledge learned from a massive dataset, and the ability to be fine-tuned for specific tasks. Its cons include the potential to generate harmful or offensive content and a need for substantial computational resources.

GPT-4: GPT-4, released by OpenAI in March 2023, is the successor to GPT-3 and GPT-3.5. It is more capable than its predecessors: it produces more reliable text, handles more nuanced instructions, and accepts images as well as text as input. Its cons include higher cost and computational requirements, and, like any LLM, it can still produce harmful or incorrect content, although OpenAI reports that it is less likely to do so than GPT-3.5.

Pros:

(i) GPT models are very powerful, and OpenAI also offers dedicated embedding models (such as text-embedding-ada-002) that produce high-quality text embeddings for clustering.

(ii) GPT is trained on a massive dataset of text, which allows it to learn the nuances of language and identify even subtle similarities between documents.

(iii) GPT can be fine-tuned for specific clustering tasks, which can improve its performance.

Cons:

(i) GPT can be computationally expensive to use, especially for large datasets.

(ii) GPT can be biased, which can lead to inaccurate clustering results.

(iii) GPT can be susceptible to generating harmful or offensive content, which can be a problem for some applications.

Products:

Cohere Embed

Cohere is a company that offers NLP solutions, including Cohere Embed, which generates text embeddings. Text embeddings are numerical representations of text documents that capture their semantic meaning.

Cohere Embed can be used as the foundation for building custom clustering applications. By converting text data into embeddings, you can measure the similarity between documents using distance metrics like cosine similarity.

These embeddings help in clustering documents with similar themes, topics, or content together, making it easier to categorize and navigate large text datasets.

Azure Embeddings Models

Microsoft Azure provides various NLP tools and models, including embeddings models offered through Azure OpenAI Service. These models generate embeddings for text data, allowing users to cluster documents based on their content. They can be integrated into applications and services to enable clustering, such as organizing news articles into categories or grouping customer reviews by sentiment.

OpenAI Embeddings Models

OpenAI offers dedicated embedding models, such as text-embedding-ada-002, alongside its GPT-3.5 and GPT-4 models.

OpenAI's embeddings can be used for clustering purposes by measuring the similarity between text documents. Developers can leverage OpenAI's models to build custom clustering solutions that group documents, social media posts, or any text-based data into meaningful clusters.

These LLMs contribute significantly to the clustering process by providing high-quality text embeddings. Here's how the clustering process generally works with LLMs:

(i) Text Embedding Generation: LLMs are used to convert text documents into dense vector representations (embeddings) that capture the semantic meaning of the text.

(ii) Similarity Measurement: To assess how similar or dissimilar two documents are, their embeddings are compared with pairwise metrics such as cosine similarity or Euclidean distance.

(iii) Clustering Algorithm: A clustering algorithm such as k-means, hierarchical (agglomerative) clustering, or DBSCAN then groups the embeddings so that similar documents land in the same cluster.

(iv) Cluster Analysis: Once documents are clustered, analysts or users can analyze and label these clusters to understand the underlying themes or topics within the data.

(v) Applications: The clustered data can be used in various applications, such as content recommendation, topic modeling, information retrieval, and personalized content delivery.
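The embed, compare, and cluster steps can be sketched end to end. To keep the example self-contained, `embed` is a hypothetical stand-in that turns text into a normalized word-count vector over a tiny fixed vocabulary; in a real pipeline it would be a call to an embedding model such as Cohere Embed or an OpenAI or Azure embedding endpoint. Clustering is a basic k-means loop that assigns documents by cosine similarity, initialized with the first k documents (a simplification; production code usually uses k-means++ or several restarts).

```python
import math
from typing import List

VOCAB = ["refund", "return", "money", "shipping", "delivery", "late"]


def embed(text: str) -> List[float]:
    """Hypothetical stand-in for an embedding model: normalized word counts over VOCAB."""
    words = text.lower().split()
    vec = [float(words.count(term)) for term in VOCAB]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; equals the dot product when both vectors are unit length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a)) or 1.0
    nb = math.sqrt(sum(y * y for y in b)) or 1.0
    return dot / (na * nb)


def kmeans(vectors: List[List[float]], k: int, iterations: int = 10) -> List[int]:
    """Assign each vector to one of k clusters by cosine similarity to centroids."""
    centroids = [list(v) for v in vectors[:k]]  # simple deterministic initialization
    labels = [0] * len(vectors)
    for _ in range(iterations):
        # Assignment step: each vector joins its most similar centroid.
        labels = [max(range(k), key=lambda c: cosine(v, centroids[c])) for v in vectors]
        # Update step: each centroid becomes the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


docs = [
    "refund my money please",
    "my delivery is late",
    "how do I return this for a refund",
    "shipping delivery is late again",
]
labels = kmeans([embed(d) for d in docs], k=2)
print(labels)  # [0, 1, 0, 1]: refund requests vs. delivery complaints
```

Only the `embed` function would change when switching embedding providers; the similarity and clustering steps work on any list of vectors.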

LLMs like Cohere Embed, Azure Embeddings Models, and OpenAI Embeddings Models are essential tools for text clustering applications. They enable the creation of meaningful clusters in large text datasets, facilitating better organization, understanding, and utilization of textual information across a wide range of industries and use cases.

IV. Classification

Although classification and clustering are both methods for organizing data, classification differs significantly in that the groups are known in advance. This approach is frequently used to assess sentiment, determine intent, and detect illegal behavior. The two main approaches to classification are supervised learning and few-shot learning. In the supervised approach, a classifier is trained on embeddings created from labeled data: the embeddings represent the data, and the classifier learns to map each representation to a category.

The few-shot learning strategy, on the other hand, relies on prompt engineering: the prompt gives a language model (LLM) a handful of labeled examples, which teach it how to perform the classification task.
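A few-shot classification prompt lists labeled examples before the new input and asks the model for the label. A minimal sketch of building such a prompt and mapping the model's free-text reply back to an allowed label; the labels, examples, and prompt wording are hypothetical, and the reply is hard-coded rather than coming from a real model call.

```python
from typing import List, Tuple

LABELS = ["positive", "negative", "neutral"]

# Hypothetical labeled examples shown to the model.
EXAMPLES: List[Tuple[str, str]] = [
    ("The checkout was quick and painless.", "positive"),
    ("My order arrived broken.", "negative"),
    ("The package was delivered on Tuesday.", "neutral"),
]


def few_shot_prompt(text: str) -> str:
    """Build a prompt that shows labeled examples, then the text to classify."""
    lines = [f"Classify the sentiment as one of: {', '.join(LABELS)}.", ""]
    for example, label in EXAMPLES:
        lines.append(f"Text: {example}\nSentiment: {label}\n")
    lines.append(f"Text: {text}\nSentiment:")
    return "\n".join(lines)


def parse_label(reply: str) -> str:
    """Map a free-text model reply to an allowed label, defaulting to 'neutral'."""
    word = reply.strip().lower().split()[0] if reply.strip() else ""
    word = word.strip(".,!")
    return word if word in LABELS else "neutral"


prompt = few_shot_prompt("Support never answered my emails.")
print(prompt)
print(parse_label(" Negative."))  # negative
```

Validating the reply against a fixed label set matters because an LLM may answer with extra words, different capitalization, or an unexpected label; defaulting to "neutral" is one design choice, and flagging the item for review is another.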

Large Language Models (LLMs) have revolutionized the field of NLP and are instrumental in a wide range of applications, including classification tasks. Several LLMs, such as GPT-4, GPT-3.5, PaLM 2, Cohere, LLaMA, and Guanaco-65B, have gained prominence in 2023 for their unique capabilities.

GPT-4 stands out for its advanced reasoning abilities and factuality improvements but may be slower and costlier to use. GPT-3.5 offers fast responses but is prone to generating false information. PaLM 2 excels in commonsense reasoning and multilingual support.

Cohere provides high accuracy at a relatively higher price point. LLaMA, released by Meta, has shown remarkable potential and spurred rapid innovation in the open-source community, but the original release is limited to research use. Guanaco-65B is an open model, fine-tuned from LLaMA with the QLoRA method, known for its performance and cost-effectiveness.

The choice of an LLM for classification tasks depends on factors like response time, accuracy, cost, and specific use-case requirements. Now, let's see each LLM's pros and cons below:

1. GPT-4

Pros:

- Exceptional capabilities in complex reasoning and advanced coding.

- Multimodal, accepts both text and images as input.

- Improved factuality and reduced hallucination.

- Very large model; OpenAI has not disclosed the parameter count (unofficial estimates exceed 1 trillion).

- Alignment with human values through Reinforcement Learning from Human Feedback (RLHF).

Cons:

- Slower response times compared to older models.

- Higher inference time may not be suitable for real-time applications.

- Costly for high-volume usage.

- Limited information on internal architecture.

2. PaLM 2 (Bison-001)

Pros:

- Strong in commonsense reasoning, formal logic, mathematics, and coding.

- Reported in Google's PaLM 2 technical report to match or exceed GPT-4 on some reasoning benchmarks.

- Multilingual and understands idioms and nuanced texts.

- Quick response times and offers three responses at once.

Cons:

- Limited availability, with only the Bison model currently accessible.

- May not be as versatile as GPT-4 for general tasks.

- Specific to certain use cases and domains.

3. Cohere

Pros:

- Offers models with a range of sizes, from small (6 billion parameters) to large (52 billion parameters).

- Cohere Command model is known for its accuracy and robustness.

- Developed by former Google employees with expertise in the Transformer architecture.

- Focuses on solving generative AI use cases for enterprises.

- Used by companies like Spotify, Jasper, HyperWrite, etc.

- High accuracy in benchmark tests, as per Stanford HELM.

Cons:

- Pricing is relatively higher than some other LLMs (e.g., $15 per 1 million tokens at the time of writing).

- May not be as cost-effective for projects with extensive token usage.

- Specific models may have limited availability based on their sizes.

- Less known in the broader AI community compared to other models.

4. LLaMA

Pros:

- Available in various sizes, from 7 billion to 65 billion parameters.

- Shows remarkable capability in many use cases.

- Rapid innovation in the open-source community using LLaMA.

- Trained only on publicly available data, with weights released to the research community.

Cons:

- The original LLaMA was released for research purposes only, not for commercial use (Llama 2, released in July 2023, permits commercial use under Meta's license).

- Limited availability and commercial support compared to other models.

- May not have the same level of fine-tuning as commercial models.

- May require additional effort for customization.

Several applications have been developed for classification purposes, some of which include:

1. Azure Embeddings Models and OpenAI Embeddings Models: These models generate text embeddings that can be used to build custom classification systems. By training a classifier on these embeddings, you can create classifiers suited to particular tasks.

2. Cohere Classify: Cohere provides a classification endpoint and SDKs that make it straightforward to add classification to your apps, simplifying tasks such as sentiment analysis and intent detection.
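For the supervised route, one of the simplest classifiers to put on top of embeddings is a nearest-centroid rule: average the embeddings of each label's training examples, then assign a new text to the label whose centroid is most similar. This is a minimal sketch; the 2-D vectors and labels are made-up stand-ins for real embedding-model output.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a)) or 1.0
    nb = math.sqrt(sum(y * y for y in b)) or 1.0
    return dot / (na * nb)


def train_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the training embeddings of each label into one centroid."""
    return {label: [sum(dim) / len(vecs) for dim in zip(*vecs)]
            for label, vecs in examples.items()}


def predict(centroids: Dict[str, List[float]], vec: List[float]) -> str:
    """Return the label whose centroid is most similar to the input embedding."""
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))


# Made-up embeddings for labeled support tickets.
training = {
    "billing": [[0.9, 0.1], [0.8, 0.2]],
    "technical": [[0.1, 0.9], [0.2, 0.7]],
}
centroids = train_centroids(training)
print(predict(centroids, [0.7, 0.3]))  # billing
```

With more labeled data, a logistic regression or similar model trained on the same embeddings usually performs better, but the workflow (embed, train, predict) stays the same.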

In short, classification sorts data into predetermined groups, which makes it useful for many natural language processing tasks and applications. Whether you choose a supervised or a few-shot strategy, the objective is to assign each item to the correct known category based on its embeddings or on a prompt.

Conclusion

In conclusion, the choice of a Large Language Model (LLM) for specific use cases is a critical decision that can significantly impact the success of AI-powered applications in today's rapidly evolving landscape. Understanding the nuances of your objectives, the complexity of tasks, budgetary constraints, and the unique strengths and limitations of available LLMs is essential.

Whether it's for question answering, summarization, clustering, or classification, various LLMs like GPT-4, PaLM 2, Cohere, LLaMA, and Guanaco-65B offer distinct advantages and cater to different needs. The era of AI-enabled natural language processing is marked by unparalleled potential, and harnessing the right LLM for your use case can unlock unprecedented competitive advantages, enhance efficiency, and empower informed decision-making.

Ultimately, the future of AI-driven applications depends on making the informed, context-aware choice of an LLM that aligns seamlessly with your specific objectives and requirements.

Frequently Asked Questions

1. What is a large language model (LLM) use case?

Large language models (LLMs) have gained widespread popularity due to their versatility and ability to improve efficiency and decision-making across various industries. These models find applications in diverse fields, showcasing their potential.

They enhance tasks like natural language understanding, sentiment analysis, and chatbots in customer service, revolutionizing how businesses engage with their customers. LLMs also assist in content generation for marketing, journalism, and creative writing, offering automated solutions to streamline content production.

Moreover, they aid in data analysis and research by quickly extracting insights from vast textual data sources, making them indispensable tools for modern organizations.

2. How do I choose the right LLM for my use case?

Choosing the right LLM for your needs and budget means evaluating several key factors, including cost-effectiveness, response time, and hardware requirements.

Equally important are the quality of the training data, the fine-tuning process, the model's capabilities, and its vocabulary. These elements significantly affect the LLM's performance and how well it fits the requirements of your use case.

3. How does LLM work?

LLMs are transformer neural networks trained on very large text corpora to predict the next token (a word or piece of a word) given the preceding text. Generating a response repeats this prediction one token at a time. Unlike traditional NLP pipelines, they do not run separate stages for named entity recognition, part-of-speech tagging, or syntactic parsing; the model learns these abilities implicitly during training, and they can be drawn out with prompts or fine-tuning.

Because of this, LLMs can extract valuable insights from large volumes of unstructured data, such as social media posts, customer feedback, and reviews.
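Text generation itself comes down to next-token prediction: at each step the model outputs a score (logit) for every token in its vocabulary, softmax turns those scores into probabilities, and a decoding rule picks the next token. The three-word vocabulary and the logits below are made up for illustration; a real model has tens of thousands of tokens.

```python
import math
import random
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["Paris", "London", "pizza"]
logits = [4.0, 2.0, 0.5]  # made-up scores after the prompt "The capital of France is"

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # greedy decoding: always the most likely token
sampled = random.Random(0).choices(vocab, weights=probs, k=1)[0]  # sampling: a weighted random pick
print(greedy, [round(p, 3) for p in probs], sampled)
```

Temperature is the knob many LLM APIs expose for this step: low values make output more deterministic, higher values make it more varied.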

4. What are good examples of domain-specific LLMs?

Good examples are Meta's Galactica and Stanford's PubMedGPT 2.7B (since renamed BioMedLM). These models are tailored to specific domains: Galactica was trained on a large scientific corpus, and PubMedGPT on biomedical literature.

Unlike general-purpose models, they are domain experts, offering in-depth knowledge in a particular area. The effectiveness of such models underscores the importance of the proportion and relevance of the training data, highlighting how specialization can enhance LLMs' performance in specific tasks and industries.
